Channels

108470 items (108470 unread) in 10 channels

News (48730 unread)

Hoax (65 unread)

Software (39066 unread)

Security (1668 unread)

SEO (18941 unread)
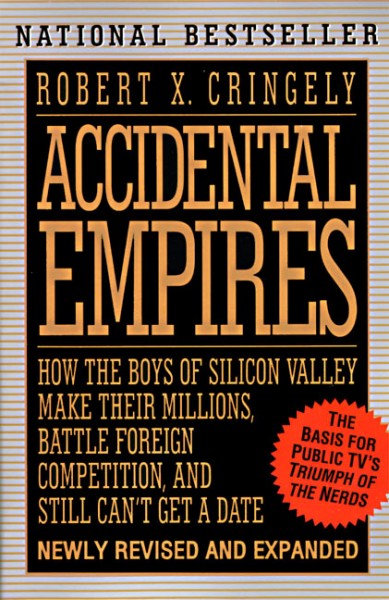

Items by Robert X. Cringely

BetaNews.Com


The future of television

Published: June 10, 2019, 11:09am CEST by Robert X. Cringely

How is a television like a fax machine? They are both obsolete. Remember a time when nobody had a fax machine? Then suddenly everybody had a fax machine. And now nobody again has a fax machine. What would have previously come by fax today is a PDF attachment to an e-mail or text or to one of a number of messaging services. Well the same transformation is happening to traditional television and for generally similar reasons. And just as fax machines seemed to disappear in just a few years, I’ll be surprised if broadcast TV in the U.S. survives another… [Continue Reading] -

Prediction #4 -- Self-driving cars won't happen this year no matter what Elon Musk says

Published: March 19, 2019, 8:39pm CET by Robert X. Cringely

We all know people who seem to not like anything. There are very successful people who sometimes seem to have reached that success entirely through saying "no." I’m not that kind of person. I’m an optimist. I’m even a bit of a risk-taker. But I can’t say that we’re going to see anything beyond more beta tests of self-driving cars in 2019. So my Prediction #4 is that self-driving cars won’t hit the retail market in any fashion this year. We simply aren’t ready and probably won’t be for years to come. The problem with self-driving cars isn’t the technology.… [Continue Reading] -

2019 predictions #2 and #3 -- A Virtual Private Cloud (VPC) shakeout and legal trouble for AWS

Published: March 9, 2019, 9:30am CET by Robert X. Cringely

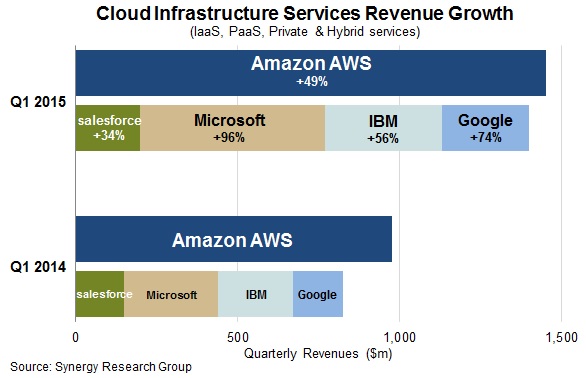

Prediction #2 -- And then there were only 3.5 VPC Cloud players. Cloud computing will continue to grow in 2019 with the key term being not Public Cloud, Private Cloud or Hybrid Cloud -- which are all so 2018 -- but Virtual Private Cloud (VPC). Virtual Private Cloud is an Amazon Web Services (AWS) invention but all the AWS competitors seem to be embracing the idea. What has developed is that the VPC solution based on Open Source using Linux will change the Infrastructure-as-a-Service (IaaS) Cloudscape to VPC-only during 2019. Gartner has certainly embraced it. Here’s a 2018 Magic Quadrant chart… [Continue Reading] -

2019 prediction #1 -- Apple under Tim Cook emulates GE under Jack Welch

Published: February 28, 2019, 3:05pm CET by Robert X. Cringely

People -- well, investors and financial analysts -- seem to worry a lot about Apple. They tend to see Apple as either wonderful or terrible, bound for further greatness or doomed. What Apple actually is is huge -- a super tanker of a company. And, like a super tanker, it’s hard to quickly change Apple’s direction or to make it go appreciably faster or slower. Those who see Apple as doomed, especially, should remember they are worrying about the most profitable enterprise in the modern history of business. Those who see Apple as immortal should remember that’s impossible. The worry… [Continue Reading] -

My predictions for 2019 -- The year when everything changes… forever

Published: February 25, 2019, 3:26pm CET by Robert X. Cringely

Now, finally, to my predictions for 2019. This is, I believe, my 22nd and possibly last year of looking ahead, so I want to do something different and potentially bigger. Our old format works fine but I’ve been pondering this and I really think we’re at a sea-change in technology. It’s not just that new tech is coming but we as consumers of that tech are in major transitions of our own. It has as much to do with demographics as technology. So while I’ll be looking ahead all this week, coming up with the usual 10 predictions, I want… [Continue Reading] -

Looking back at my 2018 predictions, I was somehow 70 percent correct

Published: February 25, 2019, 3:19pm CET by Robert X. Cringely

I can’t put this off any longer, so here are the tech predictions I made a year ago for 2018. We have to see how well or poorly I did before we can move on to my predictions for 2019 and beyond. These old predictions have been edited for length, but not to avoid embarrassment. I try to never avoid embarrassment. One thing I’ve noticed over the years is that my predictions get longer and longer (this column, alone, is 4329 words -- my second longest, ever) as they have drifted from new products to explaining new strategies. This sometimes… [Continue Reading] -

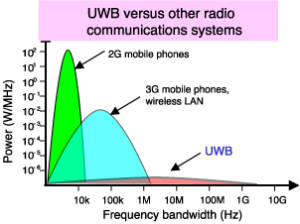

Apple knows 5G is about infrastructure, NOT mobile phones

Published: November 22, 2018, 5:05pm CET by Robert X. Cringely

With Apple shares down more than 20 percent from their all-time highs of only a few weeks ago, writers are piling-on about what’s wrong in Cupertino. But sometimes writers looking for a story don’t fully understand what they are talking about. And that seems to me to be the case with complaints that Apple is too far behind in adopting 5G networking technology in future iPhones. For all the legitimate stories about how Apple should have done this or that, 5G doesn’t belong on the list. And that’s because 5G isn’t really about mobile phones at all. Just to get… [Continue Reading] -

Red Hat takes over IBM

Published: October 29, 2018, 9:31pm CET by Robert X. Cringely

So IBM is buying Red Hat (home of the largest Enterprise Linux distribution) for $34 billion and readers want to know what I think of the deal. Well, if I made a list of acquisitions and things to do to save IBM, buying Red Hat would have been very close to the top of that list. It should have bought Red Hat 10 years ago when the stock market was in the gutter. Jumping the gun a bit, I have to say the bigger question is really which company’s culture will ultimately dominate? I’m hoping it’s Red Hat. The deal… [Continue Reading] -

Remembering Paul Allen

Published: October 18, 2018, 9:21pm CEST by Robert X. Cringely

Microsoft co-founder Paul Allen died on Monday at age 65. His cause of death was non-Hodgkin’s lymphoma, the same disease that nearly killed him back in 1983. Allen, who was every bit as important to the history of the personal computer as Bill Gates, had found an extra 35 years of life back then thanks to a bone marrow transplant. And from the outside looking in, I’d say he made great use of those 35 extra years. Of all the early PC guys, Allen was probably the most reclusive. Following his departure from Microsoft in 1983 I met him only four… [Continue Reading] -

Triggering a Trump meltdown: What was the point of that anonymous op-ed piece, anyway?

Published: September 10, 2018, 7:32pm CEST by Robert X. Cringely

Thirty-nine years ago this past summer, I was working in a dingy cubicle in a K Street office building in Washington, DC when the man with white belt and shoes walked by. I was working as an investigator for the President’s Commission on the Accident at Three Mile Island and the man with white belt and shoes was a security consultant hired by the Commission to deal with a series of news leaks about our work. As a result, this consultant was overseeing the installation of an expensive video surveillance system, showing it off at that moment to the chief… [Continue Reading] -

Kai-Fu Lee's new book says Artificial Intelligence will be Google vs China and will kill half the world's jobs

Published: August 31, 2018, 8:45pm CEST by Robert X. Cringely

Kai-Fu Lee was born in Taiwan but grew up in Tennessee, which is nothing -- nothing -- like Taiwan or China. His PhD is from Carnegie-Mellon and for the first half of his career Lee was "that voice recognition guy" first at Apple, then Microsoft, then Google. Lee took Google to China the first time (a new Google China effort is starting just now). Today Lee is an Artificial Intelligence expert who runs a $1 billion venture fund with offices in Taipei and Beijing and, according to Anina (the pretty girl in the picture with me on my site who… [Continue Reading] -

IT is urbanizing, McDonald's gets it, but Woonsocket doesn’t (yet)

Published: August 9, 2018, 12:53pm CEST by Robert X. Cringely

My favorite UK TV producer once had to sell his house in Wimbledon and move to an apartment in Central London just to get his two adult sons to finally leave home. Now something similar seems to be happening in American IT. Some people are calling it age discrimination. I’m not sure I’d go that far, but the strategy is clear: IT is urbanizing -- moving to city centers where the labor force is perceived as being younger and more agile. The poster child for this tactic is McDonald’s, based for 47 years in Oak Brook, Illinois, but just this… [Continue Reading] -

How to cut the cable yet stay within your bandwidth cap

Published: July 17, 2018, 11:10am CEST by Robert X. Cringely

After 31 years of doing this column pretty much without a break, I’m finally back from a family crisis and moving into a new house, which sadly are not the same things. Why don’t I feel rested? I have a big column coming tomorrow but wanted to take this moment to just cover a few things that I’ve noticed during our move. We have become cable cutters. Before the fire we had satellite TV (Dish) and could have kept it, but I wanted to try finding our video entertainment strictly over the Internet. It’s been an interesting experience so far… [Continue Reading] -

Cloud computing may finally end the productivity paradox

Published: May 21, 2018, 7:09pm CEST by Robert X. Cringely

One of the darkest secrets of Information Technology (IT) is called the Productivity Paradox. Google it and you’ll learn that for at least 40 years and study after study it has been the case that spending money on IT -- any money -- doesn’t increase organizational productivity. We don’t talk about this much as an industry because it’s the negative side of IT. Instead we speak in terms of Return on Investment (ROI), or Total Cost of Ownership (TCO). But there is finally some good news: Cloud computing actually increases productivity and we can prove it. The Productivity Paradox doesn’t claim that IT is useless, by the way,… [Continue Reading] -

GDPR kills the American internet: Long live the internet!

Published: April 22, 2018, 9:55pm CEST by Robert X. Cringely

I began writing the print version of this rag in September, 1987. Ronald Reagan was President, almost nobody carried a mobile phone, Bill Gates was worth $1.25 billion, and there was no Internet in the sense we know it today because Al Gore had yet to "invent" it. My point here is that a lot can change in 30+ years and one such change that is my main topic is that, thanks to the GDPR, the Internet is no longer American. We’ve lost control. It’s permanent and probably for the best. Before readers start attacking, let’s first deal with the… [Continue Reading] -

The space race is over and SpaceX won

Published: April 9, 2018, 12:00pm CEST by Robert X. Cringely

The U.S. Federal Communications Commission (FCC) recently gave SpaceX permission to build Starlink -- Elon Musk's version of satellite-based broadband Internet. The FCC specifically approved launching the first 4,425 of what will eventually total 11,925 satellites in orbit. To keep this license SpaceX has to launch at least 2,213 satellites within six years. The implications of this project are mind-boggling with the most important probably being that it will likely result in SpaceX crushing its space launch competitors, companies like Boeing and Lockheed Martin's United Launch Alliance (ULA) partnership as well as Jeff Bezos' Blue Origin. Starlink is a hugely… [Continue Reading] -

The real problem with self-driving cars

Published: March 27, 2018, 3:36pm CEST by Robert X. Cringely

Whatever happened to baby steps? Last week a 49-year-old Arizona woman was hit and killed by an Uber self-driving car as she tried to walk her bicycle across a road. This first-ever fatal accident involving a self-driving vehicle and a pedestrian has caused both rethinking and finger-pointing in the emerging industry, with Uber temporarily halting tests while it figures out what went wrong and Google’s Waymo division claiming that its self-driving technology would have handled the same incident without injury. Maybe, but I think the more important question is whether these companies are even striving for the correct goal… [Continue Reading] -

Facebook, Cambridge Analytica and our personal data

Published: March 21, 2018, 1:20pm CET by Robert X. Cringely

Facebook shares are taking it on the chin today as the Cambridge Analytica story unfolds and we learn just how insecure our Facebook data has been. The mainstream press has -- as usual -- understood only parts of what’s happening here. It’s actually worse than the press is saying. So I am going to take a hack at it here. Understand this isn’t an area where I am an expert, either, but having spent 40+ years writing about Silicon Valley, I’ve picked up some tidbits along the way that will probably give better perspective than what you’ve been reading elsewhere.… [Continue Reading] -

Stephen Hawking and me

Published: March 14, 2018, 5:26pm CET by Robert X. Cringely

I only met Stephen Hawking twice, both times in the same day. Hawking, who died a few hours ago, was one of the great physicists of any era. He wrote books, was the subject of a major movie about his early life, and of course survived longer than any other amyotrophic lateral sclerosis (ALS) sufferer, passing away at 76 while Lou Gehrig didn't even make it to 40. We’re about to be awash in Hawking tributes, so I want to share with you my short experience of the man and maybe give more depth to his character than we might… [Continue Reading] -

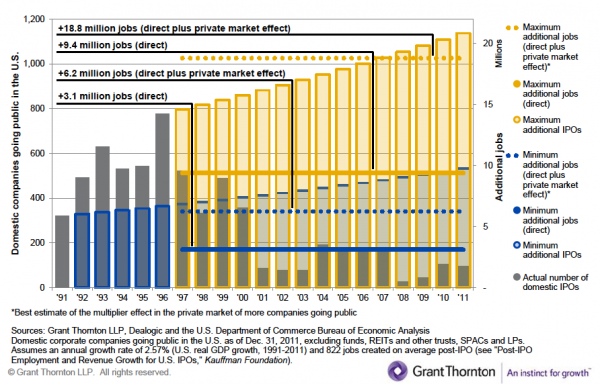

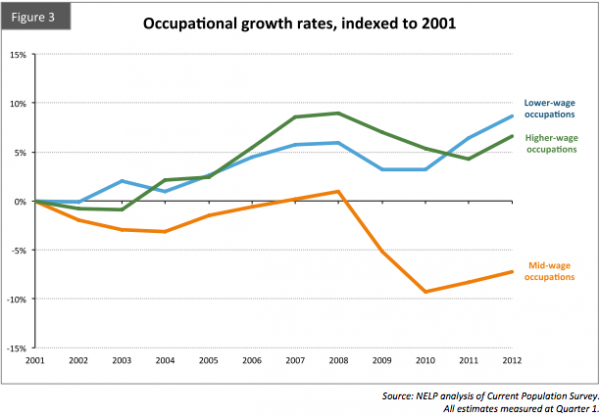

We win, you lose: How shareholder value screwed the middle class

Published: February 27, 2018, 3:04pm CET by Robert X. Cringely

The American Dream changed somehow in the 1970s when real wages for most of us began to stagnate when corrected for inflation and worker age. My best financial year ever was 2000 -- 18 years ago -- when was yours? This wasn’t a matter of productivity, either: workers were more productive every year, we just stopped being rewarded for it. There are many explanations of how this sad fact came to be and I am sure it’s a problem with several causes. But this column concerns one factor that generally isn’t touched-on by labor economists -- Wall Street greed. Lawyers… [Continue Reading] -

Predictions #8-10: Apple, IBM & Zuckerberg

Published: February 26, 2018, 11:02am CET by Robert X. Cringely

It’s time to wrap up all these 2018 predictions, so here are my final three in which Apple finds a new groove, IBM prepares for a leadership change, and Facebook’s Mark Zuckerberg gives up a dream. Apple has long needed a new franchise. It’s been almost eight years since the iPad (Apple’s last new business) was introduced. Thanks to Donald Trump’s tax plan, Cupertino can probably stretch its stock market winning streak for another 2-3 years with cash repatriation, share buy-backs, dividend increases and cost reductions, but the company really needs another new $20+ billion business and it will take… [Continue Reading] -

Prediction #7 -- 2018 will see the first Alexa virus

Published: February 19, 2018, 2:08pm CET by Robert X. Cringely

There’s a new Marvel superhero series on Fox called The Gifted that this week inspired my son Fallon, age 11, to predict the first Alexa virus, coming soon to an Amazon Echo, Echo Dot or Echo Show cloud device near you. Or maybe it will be a Google Home virus. Fallon’s point is that such a contagion is coming and there probably isn’t much any of us -- including both Amazon and Google -- can do to stop it. The Gifted has characters from Marvel’s X-Men universe. They are the usual mutants but the novel twist in this series is that… [Continue Reading] -

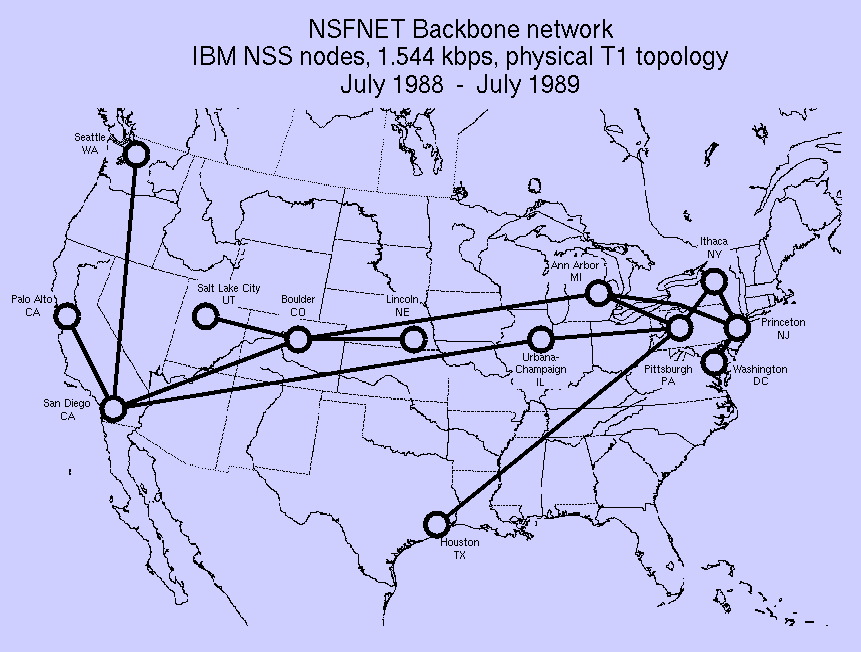

Prediction #6 -- AI comes of age, this time asking the questions, too

Published: February 9, 2018, 10:08am CET by Robert X. Cringely

Paul Saffo says that communication technologies historically take 30 years or more to find their true purpose. Just look at how the Internet today is different than it was back in 1988. I am beginning to think this idea applies also to new computing technologies like artificial intelligence (AI). We’re reading a lot lately about AI and I think 2018 is the year when AI becomes recognized for its much deeper purpose of asking questions, not just finding answers. Some older readers may remember the AI bubble of the mid-1980s. Sand Hill Road venture capitalists invested (and lost) about $1… [Continue Reading] -

Prediction #4 -- Bitcoin stays crazy until traders learn it is not a currency

Published: January 26, 2018, 9:10pm CET by Robert X. Cringely

2017 was a wild ride for cryptocurrencies and for Bitcoin in particular, rising in price at one point above $19,000 only to drop back to a bit over half of that number now. But which number is correct? If only the market can tell for sure -- and these numbers are coming straight from the market, remember -- what the heck does it all mean? It means Bitcoin isn’t a currency at all but traders are pretending that it is. 2018 will see investors finally figure this out. Confusion abounds, so let’s cut through the crap with an analogy. Cryptocurrencies… [Continue Reading] -

Prediction #3 -- 2018 foreign profit repatriation is a $591.8 BILLION taxpayer ripoff

Published: January 20, 2018, 10:33pm CET by Robert X. Cringely

When I started this series of 2018 predictions I said the recently passed U.S. tax law was going to have a profound impact on upcoming events. Having had a chance to look closer at the issue I am even more convinced that this seismic financial event is, as I wrote above, a $591.8 billion taxpayer ripoff. This is not to say there aren’t some possible public benefits from the repatriation, but it’s fairly clear that the public loses more than it will ever gain. In case you don’t follow these things, multinational U.S. companies have, since 2005, squirreled away about… [Continue Reading] -

Why do I do this to myself? Bob's first predictions for 2018

Published: January 16, 2018, 3:03pm CET by Robert X. Cringely

About 20 years ago, when I started publishing a list of annual technology predictions, it just made sense to look back to see how I had done the year before. Alas, I made that decision without looking to see that nobody else in my line of work actually does that. But I was stuck and have found since that by being deliberately vague and putting a fair amount of thought into this stuff I’ve been able to keep my long-term stats at about 70 percent correct. We’ll shortly see if that trend continues, but first I want to discuss how… [Continue Reading] -

Net Neutrality will die, so let's take the profit out of killing it

Published: November 22, 2017, 4:59pm CET by Robert X. Cringely

The U.S. Federal Communications Commission, under the leadership of chairman Ajit Pai, will next week set in motion the end of Net Neutrality in the USA. This is an unfortunate situation that will cause lots of news stories to be written in the days ahead, but I’m pretty sure the fix is in and this change is going to happen. No matter how many protesters march on their local Verizon store, no matter how many impassioned editorials are written, it’s going to happen. The real question is what can be done in response to take the profit out of killing… [Continue Reading] -

Amazon is becoming the new Microsoft

Published: November 21, 2017, 5:15pm CET by Robert X. Cringely

My last column was about the recent tipping point signifying that cloud computing is guaranteed to replace personal computing over the next three years. This column is about the slugfest to determine what company’s public cloud is most likely to prevail. I reckon it is Amazon’s and I’ll go further to claim that Amazon will shortly be the new Microsoft. What I mean by The New Microsoft is that Amazon is starting to act a lot like the old Microsoft of the 1990s. You remember -- the Bad Microsoft. Microsoft in the Bill Gates era was truly full of itself,… [Continue Reading] -

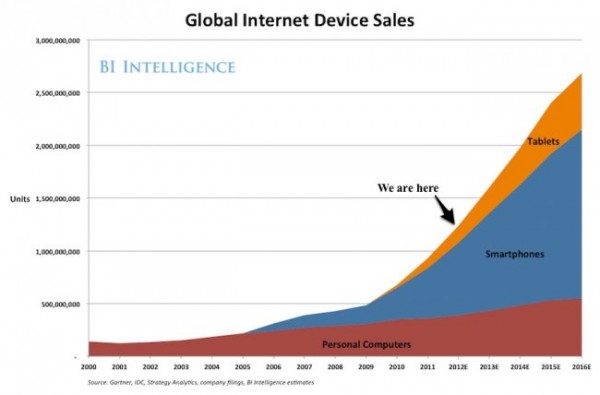

We've reached the cloud computing tipping point

Published: October 31, 2017, 1:20pm CET by Robert X. Cringely

Between technology waves there is always a tipping point. It’s not that moment when the new tech becomes dominant but the moment when that dominance becomes clearly inevitable. For cloud computing I think the tipping point arrived a month ago. That future is now. This is a big deal. My count of technical waves in computing may not agree with yours but I see (1) batch computing giving way to (2) timesharing which gave way to (3) personal computers which gained (4) graphical user interfaces, then became (5) networked Internet computers and (6) mobile computers embodied in smartphones and tablets,… [Continue Reading] -

I have no boils

Published: October 19, 2017, 9:46pm CEST by Robert X. Cringely

This is probably the last picture ever taken of our house in Santa Rosa, California. The time was 11:30PM Sunday and a neighbor had just pounded on our door. Fifty mph winds had been blowing all day but nobody expected fire. Yet the glow you see is from burning houses behind and beside ours. They, too, are gone. We left with what clothes we could grab. I forgot my computer. I’m still blind and awaiting surgery so Mary Alyce drove one car and we left the other to burn. By 8AM we were on the Mendocino coast with crappy Internet… [Continue Reading] -

The Google Lunar X-Prize wasn’t extended, it was ENDED

Published: August 27, 2017, 10:35pm CEST by Robert X. Cringely

Google recently extended its Google Lunar X-Prize deadline to March 31, 2018, apparently giving the five remaining teams a little longer to vie for the $20 million top prize. But there’s a mystery here that suggests two vying reasons for the change -- one sincere and the other cynical. The final answer may turn out to be a combination of both. The Google Lunar X-Prize was announced in 2007, giving teams five years to put their landers on the Moon and drive around, sending back live HD video of the action. Though 30 teams eventually signed-up, none of them made it to the… [Continue Reading] -

A nanotechnology overnight sensation 30 years in the making!

Published: August 14, 2017, 7:27pm CEST by Robert X. Cringely

One of my favorite mad scientists sent me a link recently to a very important IEEE paper from Stanford. Scientists at the Stanford Linear Accelerator Center (SLAC) have managed to observe in real time the growth of nanocrystalline superlattices and report that they can grow impressively in only a few seconds rather than the days or weeks they were formerly thought to take. What this means for you and me is future manufacturing on an atomic scale with whole new types of materials we can’t even imagine today. What’s strange about this is not that these developments are happening but… [Continue Reading] -

Will Trump avoid military action against North Korean ICBMs?

Published: August 2, 2017, 11:41am CEST by Robert X. Cringely

We’re just a blind man and an 11-year-old boy, but Fallon and I have been learning a lot about North Korean ballistic missiles and the news is sobering for a world already in crisis. Not only does North Korea have missiles capable of reaching the U.S. mainland, that has been a well-known fact in intelligence circles (not just at our house) since early 2016. The North Koreans probably have a 10-20 kiloton nuclear device of deliverable size and even if they don’t it’s easy to send a dirty bomb instead. Our capability for monitoring such activity from space… [Continue Reading] -

The robots are coming!

Published: June 6, 2017, 10:54am CEST by Robert X. Cringely

Elon Musk thinks he can increase the speed of his Tesla production line in Fremont, California by 20X. I find this an astonishing concept, but Musk not only owns a car company, he also owns the company that makes the robots used in his car factory. So who am I to say he’s wrong? And if he’s right, well then the implications for everything from manufacturing to the economy to geopolitics to ICBM targeting to your retirement and mine are profound. We may be in trouble or maybe we’re not, but either way it’s going to be an interesting ride. My… [Continue Reading] -

Trump's 2016 Big Data political arms race

Published: May 15, 2017, 2:33pm CEST by Robert X. Cringely

Events happen so quickly in the wacky whirlwind world of Donald Trump that it’s hard to react in anything close to real time, but there was an interesting story in the Guardian last weekend that I think deserves some technical context. The Great British Brexit Robbery: How our Democracy was Hijacked is a breathless but well sourced story about how a U.S. billionaire harnessed Big Data to split up the European Union and steal a U.S. Presidential election. It’s an interesting read, but the point I want to make here is that the tale was entirely predictable and if one… [Continue Reading] -

The cloud computing tidal wave

Published: May 8, 2017, 10:37am CEST by Robert X. Cringely

The title above is a play on the famous Bill Gates memo, The Internet Tidal Wave, written in May, 1995. Gates, on one of his reading weeks, realized that the Internet was the future of IT and Microsoft, through Gates’s own miscalculation, was then barely part of that future. So he wrote the memo, turned the company around, built Internet Explorer, and changed the course of business history. That’s how people tend to read the memo, as a snapshot of technical brilliance and ambition. But the inspiration for the Gates memo was another document, The Final Days of Autodesk, written… [Continue Reading] -

Can Amazon's Echo Dot make a good SIDS alarm?

Published: April 27, 2017, 10:37am CEST by Robert X. Cringely

It was 15 years ago this week that my son Chase Cringely died of Sudden Infant Death Syndrome (SIDS) at age 74 days. I wrote about it at the time and there was a great outpouring of support from readers. Back then, before the advent of social media, parents didn’t get a chance to grieve in print the way Mary Alyce and I did. We shed a light on SIDS and, for a couple years, even led to some progress in combating the condition, which still kills about 4,000 American babies each year. When you lose a child, especially one… [Continue Reading] -

Remembering Bob Taylor

Published: April 19, 2017, 11:50am CEST by Robert X. Cringely

Bob Taylor, who far more than Al Gore had a claim to being the Father of the Internet, died from complications of Parkinson’s Disease last Thursday at 85. Though I knew him for 30 years, I can’t say I knew Bob well but we always got along and I think he liked me. Certainly I respected him for being that rarity -- a non-technical person who could inspire and lead technical teams. He was in a way a kinder, gentler Steve Jobs. Bob’s career seemed to have three phases -- DARPA, XEROX, and DEC -- and three technical eras --… [Continue Reading] -

How to get rich trading Bitcoin

Published: April 4, 2017, 6:49pm CEST by Robert X. Cringely

As an observer of the Bitcoin market as long as this original cryptocurrency has existed, it never made much sense to me from an investment perspective. Bitcoin prices were too volatile and the volatility seemed too random. Volatility can be a good thing for traders, mind you, but only if you think you have an idea why the price goes up and down the way it does. Otherwise it is just a good way to lose all your money. But a couple of recent events have changed my view of Bitcoin. I now think I can explain its volatility and… [Continue Reading] -

Wikileaks finds a business model

Published: March 24, 2017, 9:20am CET by Robert X. Cringely

Within minutes of the electrons drying on my last column about the Wikileaks CIA document drop called Vault 7, Julian Assange came out with the novel idea that he and Wikileaks would assist big Internet companies with their technical responses to the obvious threats posed by all these government and third-party security hacks. After all, Wikileaks had so far published only documentation for the hacks, not the source code. There was still time! How noble of Assange and Wikileaks! OR, Wikileaks has found a new business model. When organized crime offers assistance against a threat they effectively control it’s called… [Continue Reading] -

The CIA, WikiLeaks and Spy vs Spy

Published: March 14, 2017, 12:13pm CET by Robert X. Cringely

As pretty much anyone already knows, WikiLeaks has dropped a trove of about 8700 secret documents that purport to cover a range of CIA plans and technologies for snooping over the Internet -- everything from cracking encrypted communication products to turning Samsung smart TVs into listening devices against their owners. Two questions immediately arise: 1) are these documents legit (they appear to be), and; 2) WTF does it mean for people like us, who aren’t spies, public officials, or soldiers of fortune? This latter answer requires a longer explanation but suffice it to say this news is generally not good… [Continue Reading] -

No fracking way! Fukushima is worse than ever

Published: February 17, 2017, 7:01pm CET by Robert X. Cringely

Remember the Fukushima Daiichi nuclear accident following the 2011 earthquake and tsunami in Japan? I wrote about it at the time, here, here, here, here, and here, explaining that the accident was far worse than the public was being told and that it would take many decades -- if ever -- for the site to recover. Well it’s six years later and, if anything, the Fukushima situation is even worse. Far from being over, the nuclear meltdown is continuing, the public health nightmare increasing. Why aren’t we reading about this everywhere? Trump is so much more interesting, I guess. "The… [Continue Reading] -

Trump's anti-H-1B order won't be what it seems

Published: February 7, 2017, 12:32pm CET by Robert X. Cringely

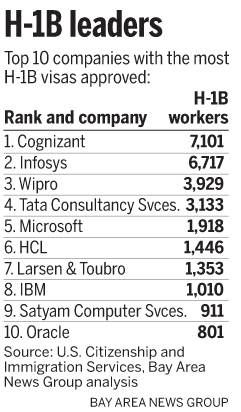

Immigration policy and trade protectionism play large roles in the new Administration of President Donald Trump. With the goal of Making America Great Again the new President wants to more tightly control the flow of goods and labor into the USA. Over the last week this has taken the form of an Executive Order limiting travel from seven specific Muslim countries. That order wasn’t well done, wasn’t well explained, has caused lots of angst here and abroad and is at this moment suspended pending litigation. That order is supposedly about limiting terrorism. It will be shortly followed, we’re told, by… [Continue Reading] -

Bob's big picture technology predictions for 2017

Published: January 11, 2017, 12:26pm CET by Robert X. Cringely

I couldn’t put it off any longer so here are my technology predictions for 2017. I’ve been reading over my predictions from past years and see a fundamental change in structure over that time, going from an emphasis on products to an emphasis on companies. This goes along, I’d say, with the greater business orientation of this column. That makes sense with a maturing market and mature industries and also with the fact that a fair number of readers are here mainly as investors, something that didn’t used to be so much the case. Of course we begin with a… [Continue Reading] -

News we aren't supposed to know

Published: December 15, 2016, 3:54pm CET by Robert X. Cringely

I’m writing this post on Wednesday evening here in California. Normally I wouldn’t point that out but in this case I want to put a kind of timestamp on my writing because at this moment we’re at the end of the second day of a concerted attack by the UAE Electronic Army on various DNS providers in North America. If you follow this stuff and bother to check, say, Google News right now for "UAE Electronic Army", your search will probably generate some Facebook entries but no news at all because -- two days into it -- this attack has gone unnoticed… [Continue Reading] -

What's real about Fake News

Published: December 9, 2016, 2:55pm CET by Robert X. Cringely

I’m here nominally to address the problem of what’s being called Fake News. At its core this is as labeled -- news that is fake; news that isn’t news; deceptive content intended not to inform or convince but to manipulate and make trouble. It’s a huge problem, we’re told, that will require new algorithms and tons of cloud to fix. But I’m not so sure. You see the key to keeping fake news out is to put real news in. The recent Fake News tempest has got me thinking about what I do and don’t do right here in this… [Continue Reading] -

Welcome to the Post-Decision Age

Published: November 29, 2016, 11:41am CET by Robert X. Cringely

There are more things to talk about than Donald Trump, though I doubt that Donnie agrees with me. But we have to get on with our lives which, at least in my case, means getting on with my reading. Where does all the crap I write here come from but reading, talking to people, and waiting in line at Starbucks? Nowhere else! And if you want to be like me you may choose to read a new book by Michael Lewis, The Undoing Project: A Friendship That Changed Our Minds. Of course the book is very good and it’s very… [Continue Reading] -

Saving the Internet of Things (IoT)

Published: November 21, 2016, 11:30am CET by Robert X. Cringely

This is my promised column on data security and the Internet of Things (IoT). The recent Dyn DDoS attack showed the IoT is going to be a huge problem as networked devices like webcams are turned into zombie hordes. Fortunately I think I may have a solution to the problem. Really. I’m an idiot today, but back in the early 1990s I ran a startup that built one of the Internet’s earliest Content Distribution Networks (CDN), only we didn’t call it that because the term had not yet been invented. Unlike the CDNs of today, ours wasn’t about video, it… [Continue Reading] -

President Trump: The hangover

Published: November 9, 2016, 1:37pm CET by Robert X. Cringely

Wow, what an election! I’m tempted to say the FBI gave it to Trump but the results are too strong for that to be the sole reason for his victory. There’s a real movement behind this result and it isn’t in any sense a triumph of Republicanism. In fact I think it may be hard for the Republican Party as we knew it to even survive. Time will tell. Until such time, the world will go a little crazy. Stocks will slide, women will swoon, babies and men will cry. But eventually we’ll pick ourselves up and get back to… [Continue Reading] -

What the heck is happening at Apple?

Published: November 1, 2016, 6:22pm CET by Robert X. Cringely

"What the heck is happening at Apple?" people ask me. "Has the company lost its mojo?" "Why no new product categories?" "Why didn’t Apple, instead of AT&T, buy Time Warner?" And "Why are the new MacBook Pros so darned expensive?" After first getting out of the way the fact that Apple is still the richest public company in the history of public companies, let’s take these questions in reverse order beginning with the MacBook Pros. In addition to their nifty OLED finger bar above the keyboard, these new Macs seem to have gained an average of $200 over the preceding… [Continue Reading] -

Social media? Bob needs a social mediaTOR

Published: October 26, 2016, 11:21am CEST by Robert X. Cringely

Okay, I’m back, still without cataract surgery but I have the fonts cranked-up on this notebook and my one working eye is still, well, working so I am, too. My next column will be about last week’s Internet DNS failure but right now I want to write about all these folks who have been asking to connect with me on Facebook, LinkedIn, and other social media. I’ll bet you have the same problems that I do. Once you have enough connections (I have 2785 Facebook "friends" and 2552 "connections" on LinkedIn) you become a target for people trying to build… [Continue Reading] -

Fifteen years after 9-11, threats have evolved too

Published: September 12, 2016, 6:54pm CEST by Robert X. Cringely

Fifteen years after 9-11 it’s interesting to reflect on how much our lives have -- and haven’t -- changed as a result of that attack. One very obvious change for all of us since 9-11 is how much more connected we are to the world and to each other than we were back then. Politico has a great post quoting many of the people flying on Air Force One that day with President George W. Bush as his administration reacted to the unfolding events. Reading the story one thing that struck me was the lack of immediate information about the attacks… [Continue Reading] -

What Carrie Underwood's success teaches us about IBM's Watson failure

Published: September 7, 2016, 4:33pm CEST by Robert X. Cringely

I have a TV producer friend I worked with years ago who at some point landed as one of the many producers of American Idol when that singing show was a monster hit dominating U.S. television. She later told me an interesting story about Carrie Underwood, the country-western singer who won American Idol Season 4. That story can stand as a lesson applicable to far more than just TV talent shows. It’s especially useful for the purposes of this column for explaining IBM’s Watson technology and associated products. You see the producers of American Idol Season 4 knew before the… [Continue Reading] -

John Ellenby dies at 75

Published: August 28, 2016, 11:00am CEST by Robert X. Cringely

I wouldn’t normally be writing a column early on a Saturday but I just read that John Ellenby died and I think that’s really worth mentioning because Ellenby changed all our lives and especially mine. If you don’t recognize his name, John Ellenby was a British computer engineer who came to Xerox PARC in the 1970s to manufacture the Xerox Alto, the first graphical workstation. He left Xerox in the late 1970s to found Grid Systems, makers of the Compass -- the first full-service laptop computer. In the 1990s he founded Agilis, which made arguably the first handheld mobile phone… [Continue Reading] -

The self-driving car is old enough to drink and drive

Published: August 25, 2016, 4:35pm CEST by Robert X. Cringely

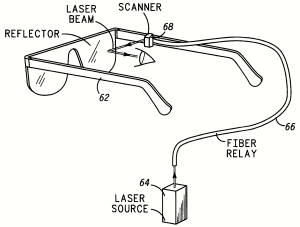

Twenty-one years ago, when we were shooting Triumph of the Nerds, the director, Paul Sen, introduced me to his cousin who was working at the time on a big Department of Transportation research program to build self-driving cars. Twenty-one years ago! Yet what goes around comes around and today there is nothing fresher than autonomous cars, artificial intelligence. You know, old stuff. As you can see from this picture, driverless cars were tested by RCA and General Motors decades earlier, back in the 1950s. What changed from 1995 until today in my view comes down to three major things: 1)… [Continue Reading] -

Moon Express gets FAA 'approval' for Moon mission

Published: August 11, 2016, 5:18pm CEST by Robert X. Cringely

Last week Moon Express, a contender for the Google Lunar X-Prize (GLXP), announced that the company had received interagency approval from the White House, Federal Aviation Administration (FAA), Department of State and other U.S. government agencies "for a maiden flight of its robotic spacecraft onto the Moon’s surface to make the first private landing on the Moon". This heady announcement got a lot of press including this story I am linking to because it was in the New York Times, the USA’s so-called paper of record. If the Times writes "gets approval to put robotic lander on the Moon" it must… [Continue Reading] -

Outsourced IT probably hurt Delta Airlines when its power went out

Published: August 9, 2016, 8:55am CEST by Robert X. Cringely

Delta Airlines last night suffered a major power outage at its data center in Atlanta that led to a systemwide shutdown of its computer network, stranding airliners and canceling flights all over the world. You already know that. What you may not know, however, is the likely role in the crisis of IT outsourcing and offshoring. Whatever the cause of the Delta Airlines power outage, data center recovery pretty much follows the same routine I used 30 years ago when I had a PDP-8 minicomputer living in my basement and heating my house. First you crawl around and find the… [Continue Reading] -

Famous American blogger strikes back against China

Published: July 29, 2016, 8:52pm CEST by Robert X. Cringely

A few weeks ago I published a column here about online journalism. You may remember it from the picture of Jerry Seinfeld which I am using again here. While I have many readers in China, my work isn’t normally distributed there so I was surprised when a reader told me that column had been translated almost in its entirety and republished on a Chinese web site. How should I feel about this? I might be flattered or I might be angry. Certainly the translation was not authorized by me and I received no payment for it. It goes far beyond… [Continue Reading] -

Is anyone at Yahoo paying attention? Probably not

Published: July 29, 2016, 7:19pm CEST by Robert X. Cringely

So Verizon is buying the heart of old Yahoo! I include the exclamation point because it was always there in the Yahoo! we knew back when the Internet was young. $4.83 billion in cash is a lot of cash, but for Verizon it’s a way of buying into the future while buying what to many of us seems to be the past. So let’s get the business part out of the way: Verizon can see Yahoo! as a bargain because Yahoo! has nearly always been more profitable on a gross margin basis than Verizon, a phone company. Even Yahoo! in… [Continue Reading] -

Why SoftBank is paying $32 billion for ARM Holdings

Published: July 19, 2016, 1:17pm CEST by Robert X. Cringely

SoftBank is buying ARM Holdings for $32 billion. Why would a company not presently in the semiconductor business spend 32 times sales to enter a new industry? By traditional measures it makes little sense. But for SoftBank it makes perfect sense, because here’s a company that has spent more than 30 years making high-risk bets on entering new businesses by apparently over-paying for assets. It’s the way they’ve always done it and it has nearly always worked. In this case SoftBank is paying a 43 percent premium over the recent ARM share price because that’s how much money it took… [Continue Reading] -

A PayPal mystery

Published: July 18, 2016, 8:07pm CEST by Robert X. Cringely

A loyal reader of this column has come to me with a problem that I, in turn, am submitting to all of you. He sells downloadable software over the Internet but lately some customers have been ordering, paying, downloading, yet not requesting the required unlocking key to use their software. Money is piling-up in the reader’s PayPal account and he is starting to worry this is some kind of scam. But if it is, it’s a scam that’s new to me. The first such order was placed on June 4th and there have been 20 such customers so far, though some… [Continue Reading] -

Thinking about Big Data -- Part two

Published: July 7, 2016, 8:23pm CEST by Robert X. Cringely

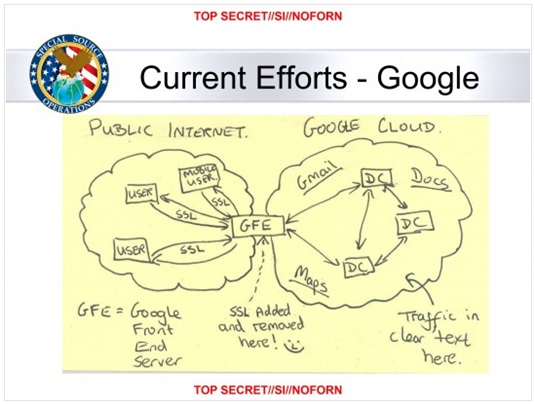

In Part one of this series of columns we learned about data and how computers can be used for finding meaning in large data sets. We even saw a hint of what we might call Big Data at Amazon.com in the mid-1990s, as that company stretched technology to observe and record in real time everything its tens of thousands of simultaneous users were doing. Pretty impressive, but not really Big Data, more like Bigish Data. The real Big Data of that era was already being gathered by outfits like the U.S. National Security Agency (NSA) and the UK Government Communications… [Continue Reading] -

Thinking about Big Data -- Part one

Published: July 6, 2016, 11:27am CEST by Robert X. Cringely

Big Data is Big News, a Big Deal, and Big Business, but what is it, really? What does Big Data even mean? To those in the thick of it, Big Data is obvious and I’m stupid for even asking the question. But those in the thick of Big Data find most people stupid, don’t you? So just for a moment I’ll speak to those readers who are, like me, not in the thick of Big Data. What does it mean? That’s what I am going to explore this week in what I am guessing will be three long columns. My PBS… [Continue Reading] -

What's the deal with online journalism?

Published: June 29, 2016, 10:05am CEST by Robert X. Cringely

Not very long ago I started answering questions on Quora, the question-and-answer site. My answers are mainly about aviation because that’s my great hobby and one of the few things besides high tech that I really know a lot about. But there was a question last week about Internet news coverage that I felt deserved better answers than it was getting. So I contributed an answer that has been read, so far, only 388 times. I don’t like making a real effort that is so sparsely read. So here, with a little mild editing, is my answer to "What are the… [Continue Reading] -

LinkedIn gets lucky

Published: June 20, 2016, 9:01am CEST by Robert X. Cringely

Several readers have asked for my take on Microsoft’s purchase last week of LinkedIn for $26.2 billion -- a figure some think is too high and others think is a steal. I think there is generally more here than meets the eye. Microsoft definitely needed more presence in social media if it wants to be seen as a legit competitor to Google and Facebook. Yammer wasn’t big enough. LinkedIn fits Redmond’s business orientation and was big enough to show that Satya Nadella isn’t afraid to open up the BIG CHECKBOOK. A simple financial analysis of the deal shows LinkedIn was… [Continue Reading] -

The mainframe is dead... Long live the mainframe!

Published: June 16, 2016, 11:55am CEST by Robert X. Cringely

Rumors are flying within IBM this week that the z Systems (mainframe) division is up for sale with the most likely buyer being Hitachi. It’s all a big secret, of course, because IBM management doesn’t tell IBM workers anything, but the idea is certainly consistent with Big Blue’s determination to cut costs and raise cash for more share buybacks. And the murmurs are simply too loud to be meaningless. Think of this news in terms of a statement made last week by an IBM senior executive: "In a world of Cloud Computing, it does not matter what equipment or whose… [Continue Reading] -

What does Bill Gates know about raising chickens?

Published: June 13, 2016, 2:23pm CEST by Robert X. Cringely

Bill Gates is a blogger, did you know that? His blog is called Gates Notes and generally covers areas of interest not only to Bill but also to the Bill and Melinda Gates Foundation, which means there’s more coverage of malaria than Microsoft. His latest post that a reader pointed out to me today is about raising chickens, which Bill says he’d do if he was a poor woman in Africa. I’ll wait while you follow the link to read the post, just don’t forget to come back. And while you are there be sure to watch the video… For… [Continue Reading] -

The problem with analytics

Published: May 25, 2016, 9:07am CEST by Robert X. Cringely

There is a difference between knowledge and understanding. Knowledge typically comes down to knowing facts while understanding is the application of knowledge to the mastery of systems. You can know a lot while understanding very little. Just as an example, IBM’s Watson artificial intelligence system that defeated the TV Jeopardy champs a few years ago knew all there was to know about Jeopardy questions but didn’t really understand anything. Ask Watson to apply to removing your appendix its knowledge of hundreds of medical questions and you’d be disappointed and probably dead. That’s the problem with most analytics, which is why… [Continue Reading] -

Apple and Didi is about foreign cash and the future of motoring

Published: May 16, 2016, 8:57am CEST by Robert X. Cringely

Apple this week invested $1 billion in Xiaoju Kuaizhi Inc. -- known as Didi -- by far the dominant car-hailing service in China with 300 million customers. While Apple has long admitted being interested in car technology and has deals to put Apple technology into many car lines, this particular investment seems to have been a surprise to most everyone. Analysts and pundits are seeing the investment as a way for Apple to get automotive metadata or even to please the Chinese government. I think it’s more than that. I think it is a potential answer to Apple’s huge problem of… [Continue Reading] -

Searching for a nanotech self-organizing principle

Published: May 1, 2016, 9:05am CEST by Robert X. Cringely

One of the frustrations of nanotechnology is that we generally can’t make nano materials in large quantities or at low cost, much less both. For the last five years a friend of mine has been telling me this story, explaining that there’s a secret manufacturing method and that he’s seen it. I’m beginning to think the guy is right. We may finally be on the threshold of the real nanotech revolution. Say you want to build a space elevator, which is probably the easiest way to hoist payloads into orbit. Easy yet also impossible, because no material can be manufactured… [Continue Reading] -

Our $27,500 drone. Do you have one, too?

Published: April 16, 2016, 9:06am CEST by Robert X. Cringely

This is the kind of thing you find on the bedroom floor of a 14-year-old boy. It’s a gift from last Christmas, still sitting in its box, not yet flown for a reason that often comes down to some variation of "but the batteries need to be charged". I’d forgotten about it totally, which means the little drone missed the FAA’s January 20th registration deadline. Technically, I could be subject to a fine of up to $27,500. If the unregistered drone is used to commit a crime the fine could rise to $250,000 plus three years in prison. Do… [Continue Reading] -

Is IBM guilty of age discrimination? -- Part two

Published: April 6, 2016, 3:03pm CEST by Robert X. Cringely

This is the promised second part of my attempt to decide if IBM’s recent large U.S. layoff involves age discrimination in violation of federal laws. More than a week into this process I still can’t say for sure whether Big Blue is guilty or not, primarily due to the company’s secrecy. But that very secrecy should give us all pause because IBM certainly appears to be flouting or in outright violation of several federal reporting requirements.

I will now explain this in numbing detail.

SEE ALSO: Is IBM guilty of age discrimination? -- Part one

Regular readers will remember that last week I suggested laid-off IBMers go to their managers or HR and ask for statistical information they are allowed to gather under two federal laws -- the Age Discrimination in Employment Act of 1967 and the Older Workers Benefit Protection Act of 1990. These links are to the most recent versions of both laws and are well worth reading. I’m trying to include as much supporting material as possible in this column both as a resource for those affected workers and to help anyone who wants to challenge my conclusions. And for exactly that reason I may as well also give you the entire 34-page separation document given last month to thousands of IBMers. It, too, makes for interesting reading.

For companies that aren’t IBM, reporting compliance with these laws is generally handled following something very much like these guidelines from Troutman Sanders, a big law firm from Atlanta. What Troutman Sanders (and the underlying laws) say to do, and what IBM seems not to have done, comes down to three things: informing the affected workers over age 40 of the very information I suggested last week that IBMers request (number of workers affected, their ages, titles, and geographic distribution), encouraging these older workers to consult an attorney, and telling them in writing that they have seven days after signing the separation agreement to change their minds. I couldn’t find any of this in the 34-page document linked in the paragraph above.

Here’s what happened when readers went to their managers or HR asking for the required information. They were either told that IBM no longer gives out that information as part of laying off workers or they were told nothing at all. HR tends, according to my readers from IBM, not to even respond.

It looks like they are breaking the law, doesn’t it? Apparently that’s not the way IBM sees it. And they’d argue that’s not the way the courts see it, either. IBM is able to do this because of GILMER v. INTERSTATE/JOHNSON LANE CORP. This 1991 federal case held that age discrimination claims can be handled through compulsory arbitration if both parties have so agreed. Compulsory arbitration of claims is part of the IBM separation package. This has so far allowed Big Blue to avoid most of the reporting requirements I’ve mentioned because arbitration is viewed as a comparable but parallel process with its own rules. And under those rules IBM has in the past said it will (if it must) divulge some of the required information, but only to the arbitrator.

I’m far from the first to notice this change, by the way. It is also covered here.

So nobody outside IBM top management really knows how big this layoff is. And nobody can say whether or not age discrimination has been involved. But as I wrote last week all the IBMers who have reported to me so far about their layoff situation are over 55, which seems fishy.

IBM has one of the largest legal departments of any U.S. company, they have another army of private lawyers available on command, they’ve carefully limited access to any useful information about the layoff and will no doubt fight to the finish to keep that secret, so who is going to spend the time and money to prove IBM is breaking the law? Nobody. As it stands they will get away with, well, something -- a something that I suspect is blatant age discrimination.

The issue here in my view is less the precedent set by Gilmer, above, than the simple fact that IBM hasn’t been called on its behavior. They have so far gotten away with it. They are flouting the law by claiming arbitration is a completely satisfactory alternative to a public court. Except it isn’t because arbitration isn’t public. It denies the public’s right to know about illegal behavior and denies the IBM workforce knowledge necessary to their being treated fairly under the law. Arbitration decisions also don’t set legal precedents so in every case IBM starts with the same un-level playing field.

So of course I have a plan.

IBM’s decision to use this particular technique for dealing with potential age discrimination claims isn’t without peril for the company. They are using binding arbitration less as a settlement technique than as a way to avoid disclosing information. But by doing so they necessarily bind themselves to keeping age discrimination outside the blanket release employees are required to sign PLUS they have to work within the EEOC system. It’s in that system where opportunity lies.

Two things about IBM’s legal position in this particular area: 1) They arrogantly believe they are hidden from view, which probably means their age discrimination has been blatant. Why go to all this trouble and not take it all the way? And 2) They are probably fixated on avoiding employee lawsuits and think that by forestalling those they will have neutralized both affected employees and the EEOC. But that’s not really the case.

If you want to file a lawsuit under EEOC rules the first thing you do is charge your employer. This is an administrative procedure: you file a charge. The charge sets in motion an EEOC investigation, puts the employer on notice that something is coming, and should normally result in the employee being given permission by the EEOC to file a lawsuit. You can’t file a federal age discrimination lawsuit without EEOC permission. IBM is making its employees accept binding arbitration in lieu of lawsuits, so this makes them think they are exempt from this part, BUT THERE IS NOTHING HERE THAT WOULD KEEP AN AFFECTED EMPLOYEE FROM CHARGING IBM. Employees aren’t bound against doing it because age discrimination is deliberately outside the terms of the blanket release.

I recommend that every RA’d IBMer over the age of 40 charge the company with age discrimination at the EEOC. You can learn how to do it here. The grounds are simple: IBM’s secrecy makes charging the company the only way to find out anything. "Their secrecy makes me suspect age discrimination" is enough to justify a successful charge.

What are they going to do, fire you?

IBM will argue that charges aren’t warranted because they normally lead to lawsuits and since lawsuits are precluded here by arbitration there is no point in charging. Except that’s not true. For one thing, charging only gives employees the option to sue. Charging is also the best way for an individual to get the EEOC motivated because every charge creates a paper trail and by law must be answered. You could write a letter to the EEOC and it might go nowhere but if you charge IBM it has to go somewhere. It’s not only not prohibited by the IBM separation agreement, it is specifically allowed by the agreement (page 29). And even if the EEOC ultimately says you can’t sue, a high volume of age discrimination charges will get their attention and create political pressure to investigate.

One advantage of charging IBM is that it can be done anonymously. And charges can be filed for third parties, so if you think someone else is a victim of age discrimination you can charge IBM on their behalf. This suggests that 100 percent participation is possible.

What will happen if in the next month IBM gets hit with 10,000 age discrimination charges? IBMers are angry.

Given IBM’s glorious past it can be hard to understand how the company could have stooped so low. But this has been coming for a long time. They’ve been bending the rules for over a decade. Remember I started covering this story in 2007. IBM seems to feel entitled. The rules don’t apply to them. THEY make the rules.

Alas, breaking rules and giving people terrible severance packages is probably seen by IBM’s top management as a business necessity. The company’s business forecast for the next several quarters is that bad. What IBM has failed to understand is that it was cheating and bending rules that got them into this situation in the first place.

-

Is IBM guilty of age discrimination? -- Part one

Published: March 29, 2016, 10:43am CEST by Robert X. Cringely

Is IBM guilty of age discrimination in its recent huge layoff of US workers? Frankly I don’t know. But I know how to find out, and this is part one of that process. Part two will follow on Friday.

Here’s what I need you to do. If you are a US IBMer age 40 or older who is part of the current Resource Action you have the right under Section 201, Subsection H of the Older Workers Benefit Protection Act of 1990 (OWBPA) to request information from IBM on which employees were involved in the RA and their ages and which employees were not selected and their ages.

Quick like a bunny, ask your manager to give you this information, which they are required by law to do.

Then, of course, please share this information anonymously with me. Once we have a sense of the scope and age distribution of this layoff I will publish part two.

-

Equity crowdfunding finally arrives May 15: Curb your enthusiasm

Published: March 29, 2016, 12:00am CEST by Robert X. Cringely

Back in the spring of 2012 Congress passed the Jumpstart Our Business Startups Act (the JOBS Act) to make it easier for small companies to raise capital. The act recognized that nearly all job creation in the US economy comes from new businesses and attempted to accelerate startups by creating whole new ways to fund them.

The act required the United States Securities and Exchange Commission (SEC) by the end of 2012 to come up with regulations to enable the centerpiece of the act, equity crowdfunding, which would allow any legal US resident to become a venture capitalist. But the regulations weren’t finished by the end of 2012. They weren’t finished by the end of 2013, either, or 2014. The regulations were finally finished on October 30, 2015 -- 1033 days late.
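The "1033 days late" figure is easy to check. Here is a minimal sketch, assuming "by the end of 2012" means a deadline of December 31, 2012 (the variable names are purely illustrative):

```python
from datetime import date

# Assumption: "by the end of 2012" is read as a December 31, 2012 deadline.
deadline = date(2012, 12, 31)

# Date the column gives for the finished regulations.
finalized = date(2015, 10, 30)

# Subtracting two dates yields a timedelta; .days is the whole-day gap.
days_late = (finalized - deadline).days
print(days_late)
```

Under that reading of the deadline, the result matches the number quoted above.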

And the crowdfunding industry they enabled looks very different from the one intended by Congress. For most Americans and even most American startups, equity crowdfunding is not likely to mean very much and I think that is a shame. And ironically, whatever success US startups have in equity crowdfunding is more likely to happen overseas than in our own country.

Just for the record, what we are talking about here is equity crowdfunding -- buying startup shares -- not the sort of crowdfunding practiced by outfits like Kickstarter and IndieGoGo where customers primarily pay in advance for upcoming startup products.

The vision of Congress as written in the JOBS Act was simple -- there had to be an easier and cheaper way for startups to raise money and the American middle class deserved a way to participate in this new capital market. Prior to the act only "qualified investors" -- individuals with a net worth of $1 million or more or making at least $200,000 per year -- were allowed to invest in startups, so angel investing was strictly a rich man’s game. The JOBS Act was meant to change that, creating new crowdfunding agencies to parallel venture capital firms, broker-dealers, and investment banks and allowing regular people to invest through these new agencies.

But equity crowdfunding under Title III of the Act -- crowdfunding for regular investors -- was controversial from the start, which may help explain why it has taken so long to happen. Once the act was signed into law in April 2012 the issue of potential fraud took center stage. Equity crowdfunding with its necessarily relaxed reporting and investor qualification requirements looked to some people like a scam in the making. Having barely survived the financial crisis of 2008 precipitated by huge financial institutions, were we ready to do it all over again, but this time at the mercy of Internet-based scam artists? That was the fear.

The real story is, as always, more complex. Equity crowdfunding promised to take business away from the SEC’s longtime constituents -- investment banks and broker-dealers. The idea was that an entirely new class of financial operatives would come into the market, potentially taking business away from the folks who were already there. And since the SEC placed power to organize and administer equity crowdfunding in the Financial Industry Regulatory Authority (FINRA) -- a private self-regulatory agency owned by the stockbrokers and investment bankers it regulates, the very people whose income was threatened by a literal interpretation of the JOBS Act -- no wonder things went pretty much to Hell.

There are problems with Title III equity crowdfunding for all parties involved -- investors, entrepreneurs, and would-be crowdfunding portals. What was supposed to be a simple funding process now has 686 pages of rules. Those rules say that unqualified investors with an annual income or net worth of less than $100,000 can invest, across all crowdfunding issuers during a 12-month period, the greater of $2,000 or five percent of their annual income, while individuals with an annual income or net worth of $100,000 or more can invest 10 percent of annual income, not to exceed $100,000 per year. And these investments have to be in individual startups (no crowdfunding mutual funds).
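To make the investor limits concrete, here is a rough sketch of the arithmetic as this column describes it. It is not the regulation itself: the function name is hypothetical, amounts are whole dollars, and it assumes the lower tier applies when either income or net worth is under $100,000, which is one reading of the wording above. The actual 686 pages carry more detail than this.

```python
def title_iii_annual_limit(annual_income: int, net_worth: int) -> int:
    """Return a 12-month Title III investment cap in whole dollars.

    Follows the simplified wording of the column, not the full rule text.
    """
    threshold = 100_000

    # Assumption: the lower tier applies if EITHER figure is under $100,000.
    if annual_income < threshold or net_worth < threshold:
        # Greater of $2,000 or five percent of annual income.
        return max(2_000, annual_income * 5 // 100)

    # Otherwise: ten percent of annual income, capped at $100,000 per year.
    return min(annual_income * 10 // 100, 100_000)


if __name__ == "__main__":
    for income, worth in [(30_000, 10_000), (80_000, 200_000),
                          (150_000, 500_000), (2_000_000, 5_000_000)]:
        print(income, worth, title_iii_annual_limit(income, worth))
```

On this reading, someone earning $30,000 hits the $2,000 floor, while someone earning $2 million is stopped at the $100,000 ceiling, so the limits bind at both ends of the income scale.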

For entrepreneurs the current maximum to be raised is $1 million and the raise has to be finished within 12 months. There are also more financial qualification expenses than for raising money from traditional VCs. Crowdfunding portals end up having more liability than they’d probably like for the veracity of the companies they fund, possibly making it not worth doing for raises below $1 million.

Equity crowdfunding starts on May 15 so we won’t really know how it plays until after then, but the prospects for Title III aren’t good. Angel funding (Title II) has no such investment or total raise limits and there’s also a Title IV mini-IPO that allows traditional broker-dealers to raise up to $50 million. Plus there is the simple fact that VCs don’t like crowded cap tables and if you expect your startup to need more money from them then Title III might work against you.

In the long run I suspect that the SEC and FINRA caution will drive equity crowd funding offshore. The US isn’t the only country doing this and some of the others are both ahead in the game and more innovative, especially the UK and Israel. So if I want to raise a crowdfunding mutual fund to invest in a basket of American tech startups, which makes all the sense in the world for a guy like me to do, I’d do it in London or Tel Aviv, not in the USA even though most of my investors might still be Americans.

Back in 1998 I spoke to the National Association of State and Provincial Lotteries, explaining to them the potential of the Internet as a gambling platform. The lottery officials were astounded to learn that they couldn’t stop the Internet at their state lines. I recommended they embrace the new technology and become gambling predators. Of course they didn’t and a robust international Internet gambling industry was the result. By moving so slowly and erring too much on the side of protection I fear the SEC and FINRA will guarantee a similar result for equity crowdfunding.

Photo credit: beeboys / Shutterstock

-

Ginni the Eagle: IBM’s corporate 'transformation'

Published: March 23, 2016, 12:42pm CET by Robert X. Cringely

I promised a follow-up to my post from last week about IBM’s massive layoffs and here it is. My goal is first to give a few more details of the layoff primarily gleaned from many copies of their separation documents sent to me by laid-off IBMers, but mainly I’m here to explain the literal impossibility of Big Blue’s self-described "transformation" that’s currently in process. My point is not that transformations can’t happen, but that IBM didn’t transform the parts it should and now it’s probably too late.

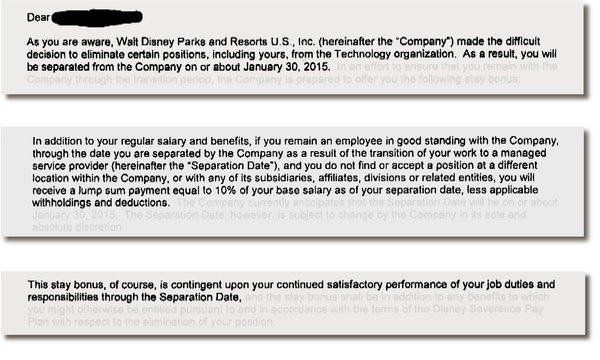

First let’s take a look at the separation docs. Whether you give a damn about IBM or not, if you work for a big company this is worth reading because it may well become an archetype for getting rid of employees. What follows is my summary based on having the actual docs reviewed by several lawyers.

IBM employees waive the right to sue the company. The company retains the right indefinitely to sue the employee. IBM employees waive the right to any additional settlement. Even if IBM is found at fault, in violation of EEOC rules, etc., employees will not get any more money. The agreement is written in a way that dictates how matters like this will be determined in arbitration.

There is no mention of unemployment claims. Eligibility for unemployment compensation is determined and managed by each state. Each state has rules on who is qualified, the terms and conditions, etc. Some companies in some states have been known to report terminations in a way that disqualifies workers, thus saving the company money on unemployment insurance premiums. Some states have appeal processes. In others you may have to appeal the response with your former employer, which is of course the same bunch who just denied you (good luck with that).

IBM is being very opaque here about its process. Maybe it is hoping former IBMers won’t even think to apply for unemployment benefits. But if IBM takes a hardline position, the arbitration process and legal measures required would probably discourage many former employees from even trying. The question left unanswered, then, is how many of these folks will be able to receive their full 99 weeks of benefits.

The only way for employees to get more money or a better settlement is for their state or the federal government to sue IBM. In a settlement with a government, IBM could be made to pay its RA’d employees more.

There’s a final point that is being handled in different ways depending on the IBM manager doing the firing. It appears managers are being strongly urged to have their laid-off employees take their accrued vacation time prior to their separation date. Some managers are saying this is mandatory and some are not. From a legal standpoint it’s a bit vague, too.

Are they legally allowed to MAKE employees use their vacation time before separation? According to the lawyers I consulted, that depends on each person’s situation. If they have no work to do, then they may be required to use their vacation. If they are busy with work, then IBM can’t make them eat it. The distinction is important because IBM has been so busy in the past denying employees their vacation time that what’s accrued is in many cases more time than the puny 30-day severance.

To put this vacation pay issue in context, say you are a recently RA’d IBMer given three months' notice, a month’s severance pay, and you have four weeks of accrued vacation time. If you are forced to take that vacation during the three months before your separation date, well that’s four weeks less total pay. If the current layoff is around 20,000 people as I imagine, that could be 20,000 months, 1,667 man-years and close to $200 million in savings for IBM based on average employee compensation.
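The arithmetic behind that estimate can be sketched in a few lines of Python. The function name is hypothetical, and the $120,000 average annual compensation is an assumption, not a figure from IBM or from this column; it is simply about what the totals above imply.

```python
def vacation_savings(employees, vacation_weeks, avg_annual_comp, weeks_per_month=4):
    """Estimate pay avoided when accrued vacation is burned before separation.

    Treats four weeks as one month, as the estimate above does.
    """
    months = employees * vacation_weeks / weeks_per_month
    person_years = months / 12
    savings = person_years * avg_annual_comp
    return months, person_years, savings


if __name__ == "__main__":
    # Assumed inputs: 20,000 people, four weeks each, $120,000 average pay.
    months, person_years, savings = vacation_savings(20_000, 4, 120_000)
    print(f"{months:,.0f} months, {person_years:,.0f} man-years, ${savings:,.0f}")
```

Change the headcount or the average compensation and the savings move in direct proportion, so the estimate is only as good as my guess at the size of the layoff.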

I wonder who got a bonus for thinking up that one?

What happens if laid-off IBMers don’t sign? They retain the right to sue, but lose their jobs without any of the separation benefits.

Another question that arises from my contact with IBMers who have been laid off is whether there is age discrimination in effect here. Of the laid-off (not retiring -- this is key) IBMers who have contacted me so far, 80 percent are over 60 and 90 percent are over 55. Now that could say more about my readers than about IBM’s employees, but if the demographics of the current IBM layoff differ greatly from the company’s overall labor profile, that could suggest age discrimination.

I’m thinking of doing an online survey to help find out. Is that something you, as readers, think I should do?

Now what’s the impact of all this on the company? There’s anger of course and that extends to almost every office of every business unit since the layoffs are so broad and deep. Employees are so angry and demoralized that some are supposedly doing a sloppy job. I have no way of knowing whether this is true, but do you want one of those zombie employees (ones being fired in 90 days) writing code for your mission-critical IBM applications?

But wait, there’s more! At least the workers being laid off have some closure. Some of the ones not picked this time are even more demoralized and angry because they have to stay and will probably become part of some subsequent firing that will offer zero weeks' severance, not four weeks. One reader’s manager actually told them that they were fortunate to be picked this time for exactly that reason.

All this turmoil hasn’t gone down without an effect on IBM managers, either, many of whom see their own heads on some future chopping block. I have been told there are many managers trying to justify their existence by bombarding their remaining employees with email newsletters and emails with links to "read more on my blog".

Readers report being swamped with so many of these it’s hurting productivity. Not to mention they are being asked to violate the company security policy by clicking on the email link -- an offense that could lead to termination. Remember this is all happening in the name of IBM’s "transformation".

What about that transformation, how is that going and -- for that matter -- what does it even mean?

Having read all the IBM press releases about the current transformation, listened to all the IBM earnings conference calls about it, and talked about it to hundreds of IBMers, it appears to me that this is not a corporate transformation at all, but a product transformation.

Every announcement is about a shift in what IBM is going to be selling. Whole divisions are being sold, product lines condensed and renamed, but it’s all in the name of sales. That is not corporate transformation. Maybe the belief is that IBM as a corporation doesn’t need to be transformed, that it’s a well-oiled money machine that just needs a better product mix to regain its mojo. Alas, that is not the case.

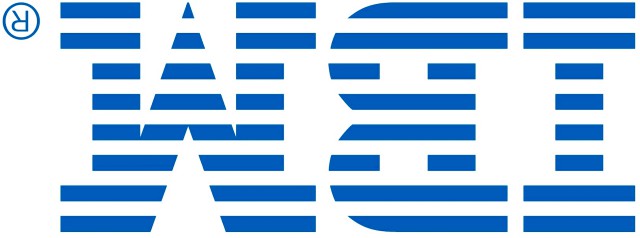

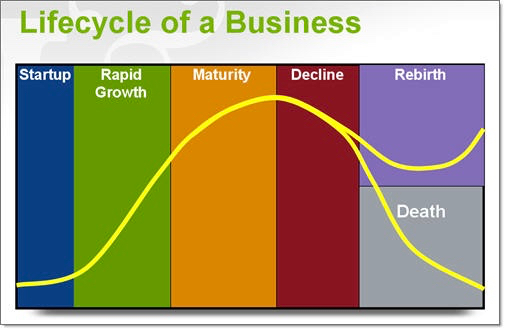

Last week I presented, but then didn’t refer to an illustration of the generally-accepted corporate life cycle. Here it is again:

And here’s a slightly different illustration covering the same process:

And here’s a slightly different illustration covering the same process:

It’s the rebirth section I’d like you to think about because this is what Ginni Rometty’s IBM is trying to do. They want to create that ski jump from new technologies and use it to take the company to new heights. Ginni the Eagle. Our challenge here is to decide whether that’s possible.

If we accept that this second chart is the ideal course for a mature business, when is the best stage for building the ski jump and where does IBM fit today on that curve? It’s not at all obvious that the best place to jump from is the start of decline as presented.

Many business pundits suggest that the place to start is early in the maturity phase (Prime in the earlier chart), before the company has peaked. IBM certainly missed that one, so let’s accept that early decline (between Stability and Aristocracy) is okay. But is IBM in early decline or late decline? Is it in Aristocracy, Recrimination or even Bureaucracy? I’d argue the last.

IBM today has 13 layers of management, four layers of which were added by Ginni Rometty’s predecessor, Sam Palmisano. I don’t want to be too hardcore, but 13 layers is too many for any successful company. That alone tags IBM as being in late bureaucracy, rather like the Ottoman Empire around 1911. So by this measure IBM is probably too far along in its dotage to avoid dying or being acquired.

It’s important to note that despite a very large number of executive retirements (a different/better pension plan?) none of the IBM transformation news has so far involved simplifying the corporate structure. No eliminating whole management levels or, for that matter, reducing management at all.

This is not to say IBM can’t learn from its mistakes; it’s just that it doesn’t always learn as much as it should, and sometimes it even learns the wrong lessons. We’ve seen some of this before. In the late ’80s and early ’90s, when John Akers was CEO, IBM’s business was changing, sales were dropping, and the leadership at the time was slow to cut costs.

Instead they increased prices, which further hurt sales and accelerated the loss of business. It was a death spiral that eventually led to desperate times, Akers’ demise, and the arrival of IBM’s first outsider CEO, Lou Gerstner from American Express. The lesson IBM learned from that was to put through massive cost cuts AHEAD of the business decline. Which of course today is again accelerating their loss of business.

In both the early 1990s and today IBM has shown it really doesn’t understand the value of its people. Before Gerstner people = billable hours = lots of revenue. Little or no effort was expended to improve efficiency, productivity, to automate, etc. The more labor-intensive it was to do something, the better.

This changed somewhat with Sam Palmisano, who refined the calculation to people = cost = something expendable that can be cut. Most companies with efficient and effective processes can withstand serious cuts and continue to operate well. IBM’s processes are not efficient and the staff cuts are debilitating.

In both cases IBM needed to transform its business -- an area where IBM’s skills are quite poor. It takes IBM a ridiculous amount of time to make a decision and act on it. While most of today’s CAMSS is a sound plan for future products and services, IBM is at least five to 10 years late bringing them to market. While most of the world has learned to develop new products and services and bring them to market faster (Internet time), IBM still moves at its historic glacial pace.

This slow pace of management is not just because there are so many layers, but also because there is so much secrecy. IBM does not let most employees -- even managers -- manage or even see their own budget. IBM does not let them see the real business plan, either. Most business decisions are made at the senior level where the decision makers can’t help but be out of touch with both the market and their employees. How can anyone get anything done when senior executives must approve everything?

So if you can’t make quick decisions about much of anything, how do you transform your product lines? Well at IBM you either acquire products (that’s an investment, remember, rather than an expense and therefore not chargeable against earnings) or you take your old products and simply rename them.

IBM is a SALES company run by salespeople. It takes brains and effort to improve a product or create a new one. However, if you can take an old product, rename it, and sell it as something new, then no brains or effort are needed.

IBM products change names every few years. A few years ago several product lines became Pure. One of these Pure products was a family of Intel servers with some useful extra stuff. When it sold the X-Series business what happened to the Pure products? Nobody appears to know. Pure was just a word and no one was really managing the brand. It was up to each business unit to figure out what to do with its Pure products. Could IBM sell Pure servers? Did it even still make Pure servers? I still don’t know.

Remember On-Demand and Smarter-Planet? Marketing brain farts. New products do not magically appear with each new campaign. It is mostly rebranding of existing products and services.

IBM is now renaming its middleware software stack, for example. Everything has Connect in it now, but it’s lipstick on a pig. They are about to do a major sales push on this stuff to unwitting customers:

- API Connect -- really the V5 version of the hastily built, feature-lacking, bug-laden API Management product, except with Strongloop stuff crammed in for the build and deploy aspects -- bolted-on stuff that isn’t really integrated.