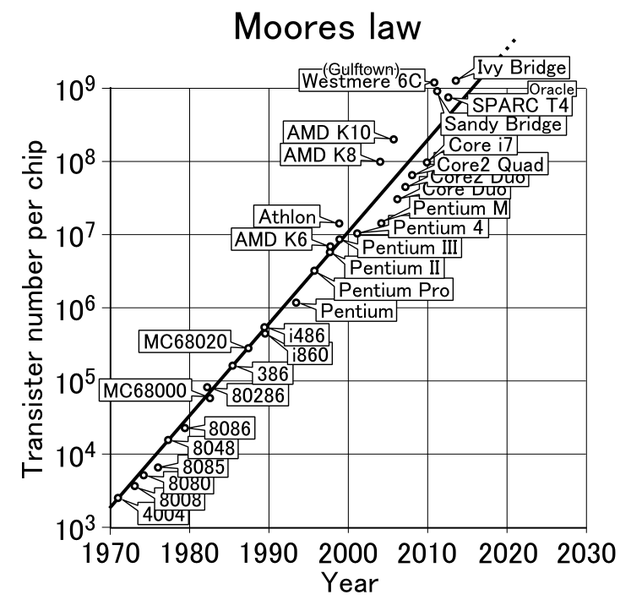

No law is more powerful or important in Silicon Valley than Moore’s Law: the simple idea that transistor density keeps increasing, which means computing power goes up just as costs and energy consumption go down. It’s a clever idea we rightly attribute to Gordon Moore. The power lies in the Law’s predictability. There’s no other trillion-dollar business where you can look down the road and have a pretty clear idea of what you’ll get. Moore’s Law lets us take chances on the future and generally get away with them. But what happens when you break Moore’s Law? That’s what I have been thinking about lately. That’s when destinies change.

Moore’s Law may have been broken many times. I’m sure readers will tell us. But I know of only two: once when it was quite deliberate and in the open, and another time when it was more like breaking and entering.

The first time was at the Computer Science Lab at Xerox PARC. I guess it was Bob Taylor’s idea, but Bob Metcalfe explains it so well. The idea back in the early 1970s was to invent the future by living in the future. "We built computers and a network and printers the way we thought they’d be in 10 years," Metcalfe told me long ago. "It was far enough out to be almost impossible yet just close enough to be barely affordable for a huge corporation. And by living in the future we figured out what worked and what didn’t."

Moore’s Law is usually expressed as silicon doubling in computational power every 18 months, but I recently did a little arithmetic and realized that’s pretty close to a 100X performance improvement every decade. One hundred is a much more approachable number than two, and a decade is more meaningful than 18 months if you are out to create the future. Cringely’s Nth Law, then, says that Gordon Moore didn’t think big enough, because 100X is something you can not only count on but strive for. One hundred is a number worth taking a chance on.
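Here is that arithmetic, assuming a clean 18-month doubling period (real chips never track the curve this smoothly). A decade is 120 months, or about 6.67 doublings:

$$
2^{120/18} = 2^{20/3} = 2^{6}\cdot 2^{2/3} \approx 64 \times 1.587 \approx 102
$$

So "about 100X per decade" is the same rule, just restated in rounder units.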

The second time we broke Moore’s Law was in the mid-to-late 1990s, but that time we pretended to be law-abiding. More properly, I guess, we pretended that the world was more advanced than it really was, and the results, good and bad, were astounding.

I’m talking about the dot-com era, a glorious yet tragic part of our technological history that we pretend didn’t even happen. We certainly don’t talk about it much, and I’ve wondered why. It’s not just that the dot-com meltdown of 2001 was such a bummer, I think, but that it was overshadowed by the events of 9/11. We already had a technology recession going when those airliners hit, but we quickly transferred the blame in our minds to terrorists when the recession suddenly got much worse.

So for those who have forgotten it or didn’t live it, here’s my theory of the euphoria and zaniness of the Internet as an industry in the late 1990s, during what came to be called the dot-com bubble. It was clear to everyone from Bill Gates down that the Internet was the future of personal computing and possibly the future of business. So venture capitalists invested billions of dollars in Internet startup companies with little regard to how those companies would actually make money.

The Internet was seen as a huge land grab where it was important to make companies as big as they could be, as fast as they could be, to grab and hold market share whether the companies were profitable or not. For the first time, companies were going public without having made a dime of profit in their entire histories. But that was seen as okay: profits would eventually come.

The result of all this irrational exuberance was a renaissance of ideas, most of which couldn’t possibly work at the time. While we tend to think of Silicon Valley as being built on Moore’s Law making computers continually cheaper and more powerful, the dot-com bubble era only pretended to be built on Moore’s Law. It was built mainly on hype.

For many of those 1990s Internet schemes to succeed, the cost of server computing had to fall lower than Moore’s Law could make possible at the time. This was because the default business model of most dot-com startups was to make their money from advertising, and there was a strict limit on how much advertisers were willing to pay.

For a while it didn’t matter, because venture capitalists and then Wall Street investors were willing to make up the difference, but it eventually became obvious that an AltaVista, with its huge data centers, couldn’t make a profit from Internet search alone.

The dot-com meltdown of 2001 happened because the startups ran out of investors to fund their Super Bowl commercials. When the last dollar of the last yokel had been spent on the last Herman Miller office chair, the VCs had, for the most part, already sold their holdings and were gone. Thousands of companies folded, some of them overnight. And the ones that did survive -- including Amazon and Google and a few others -- did so because they’d figured out how to actually make money on the Internet.

Yet the 1990s built the platform for today’s Internet successes. More fiber-optic cable was pulled than we will ever require, and that was a blessing. Web 2.0, then mobile, then cloud computing each learned to operate within its means. And all the while the real Moore’s Law was churning away, and here we are, 12 years later, enjoying 200 times the performance we knew in 2001. Everything we wanted to do then we can do easily today for a fraction of the cost.
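The same back-of-the-envelope rule, again assuming a steady 18-month doubling period, squares with that figure. Twelve years is 144 months, or exactly eight doublings:

$$
2^{144/18} = 2^{8} = 256
$$

By the rule's own prediction, then, 200 times is if anything a conservative round number.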

We had been trying to live 10 years in the future and just didn’t know it.