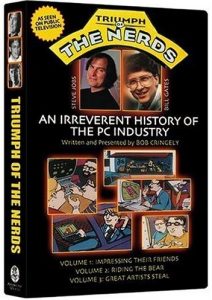

Tenth in a series. Robert X. Cringely's brilliant tome about the rise of the personal computing industry continues, looking at programming languages and operating systems.

Published in 1991, Accidental Empires is an excellent lens for viewing not just the past but future computing.

CHAPTER FOUR

AMATEUR HOUR

You have to wonder what it was we were doing before we had all these computers in our lives. Same stuff, pretty much. Down at the auto parts store, the counterman had to get a ladder and climb way the heck up to reach some top shelf, where he’d feel around in a little box and find out that the muffler clamps were all gone. Today he uses a computer, which tells him that there are three muffler clamps sitting in that same little box on the top shelf. But he still has to get the ladder and climb up to get them, and, worse still, sometimes the computer lies, and there are no muffler clamps at all, spoiling the digital perfection of the auto parts world as we have come to know it.

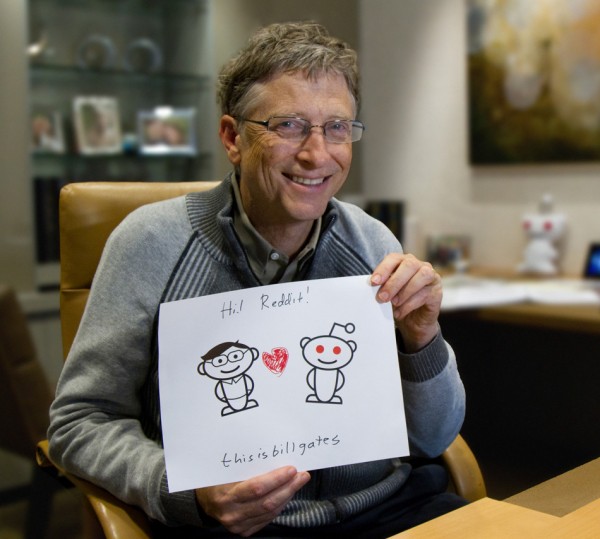

What we’re often looking for when we add the extra overhead of building a computer into our businesses and our lives is certainty. We want something to believe in, something that will take from our shoulders the burden of knowing when to reorder muffler clamps. In the twelfth century, before there even were muffler clamps, such certainty came in the form of a belief in God, made tangible through the building of cathedrals -- places where God could be accessed. For lots of us today, the belief is more in the sanctity of those digital zeros and ones, and our cathedral is the personal computer. In a way, we’re replacing God with Bill Gates.

Uh-oh.

The problem, of course, is with those zeros and ones. Yes or no, right or wrong, is what those digital bits seem to signify, looking so clean and unconnected that we forget for a moment about that time in the eighth grade when Miss Schwerko humiliated us all with a true-false test. The truth is that for all the apparent precision of computers, and despite the fact that our mothers and Tom Peters would still like to believe that perfection is attainable in this life, computer and software companies are still remarkably imprecise places, and their products reflect it. And why shouldn’t they, since we’re still at the fumbling stage, where good and bad developments seem to happen at random.

Look at Intel, for example. Up to this point in the story, Intel comes off pretty much as high-tech heaven on earth. As the semiconductor company that most directly begat the personal computer business, Intel invented the microprocessor and memory technologies used in PCs and acted as an example of how a high-tech company should be organized and managed. But that doesn’t mean that Bob Noyce’s crew didn’t screw up occasionally.

There was a time in the early 1980s when Intel suffered terrible quality problems. It was building microprocessors and other parts by the millions and by the millions these parts tested bad. The problem was caused by dust, the major enemy of computer chip makers. When your business relies on printing metallic traces that are only a millionth of an inch wide, having a dust mote ten times that size come rolling across a silicon wafer means that some traces won’t be printed correctly and some parts won’t work at all. A few bad parts are to be expected, since there are dozens, sometimes hundreds, printed on a single wafer, which is later cut into individual components. But Intel was suddenly getting as many bad parts as good, and that was bad for business.

Semiconductor companies fight dust by building their components in expensive clean rooms, where technicians wear surgical masks, paper booties, rubber gloves, and special suits and where the air is specially filtered. Intel had plenty of clean rooms, but it still had a big dust problem, so the engineers cleverly decided that the wafers were probably dusty before they ever arrived at Intel. The wafers were made in the East by Monsanto. Suddenly it was Monsanto’s dust problem.

Monsanto engineers spent months and millions trying to eliminate every last speck of dust from their silicon wafer production facility in South Carolina. They made what they thought was terrific progress, too, though it didn’t show in Intel’s production yields, which were still terrible. The funny thing was that Monsanto’s other customers weren’t complaining. IBM, for example, wasn’t complaining, and IBM was a very picky customer, always asking for wafers that were extra big or extra small or triangular instead of round. IBM was having no dust problems.

If Monsanto was clean and Intel was clean, the only remaining possibility was that the wafers somehow got dusty on their trip between the two companies, so the Monsanto engineers hired a private investigator to tail the next shipment of wafers to Intel. Their private eye uncovered an Intel shipping clerk who was opening incoming boxes of super-clean silicon wafers and then counting out the wafers by hand into piles on a super-unclean desktop, just to make sure that Bob Noyce was getting every silicon wafer he was paying for.

The point of this story goes far beyond the undeification of Intel to a fundamental characteristic of most high-tech businesses. There is a business axiom that management gurus spout and that bigshot industrialists repeat to themselves as a mantra if they want to sleep well at night. The axiom says that when a business grows past $1 billion in annual sales, it becomes too large for any one individual to have a significant impact. Alas, this is not true when it’s a $1 billion high-tech business, where too often the critical path goes right through the head of one particular programmer or engineer or even through the head of a well-meaning clerk down in the shipping department. Remember that Intel was already a $1 billion company when it was brought to its knees by desk dust.

The reason that there are so many points at which a chip, a computer, or a program is dependent on just one person is that the companies lack depth. Like any other new industry, this is one staffed mainly by pioneers, who are, by definition, a small minority. People in critical positions in these organizations don’t usually have backup, so when they make a mistake, the whole company makes a mistake.

My estimate, in fact, is that there are only about twenty-five real people in the entire personal computer industry -- this shipping clerk at Intel and around twenty-four others. Sure, Apple Computer has 10,000 workers, or says it does, and IBM claims nearly 400,000 workers worldwide, but has to be lying. Those workers must be temps or maybe androids because I keep running into the same two dozen people at every company I visit. Maybe it’s a tax dodge. Finish this book and you’ll see; the companies keep changing, but the names are always the same.

Intel begat the microprocessor and the dynamic random access memory chip, which made possible MITS, the first of many personal computer companies with a stupid name. And MITS, in turn, made possible Microsoft, because computer hardware must exist, or at least be claimed to exist, before programmers can even envision software for it. Just as cave dwellers didn’t squat with their flint tools chipping out parking brake assemblies for 1967 Buicks, so programmers don’t write software that has no computer upon which to run. Hardware nearly always leads software, enabling new development, which is why Bill Gates’s conversion from minicomputers to microcomputers did not come (could not come) until 1974, when he was a sophomore at Harvard University and the appearance of the MITS Altair 8800 computer made personal computer software finally possible.

Like the Buddha, Gates’s enlightenment came in a flash. Walking across Harvard Yard while Paul Allen waved in his face the January 1975 issue of Popular Electronics announcing the Altair 8800 microcomputer from MITS, they both saw instantly that there would really be a personal computer industry and that the industry would need programming languages. Although there were no microcomputer software companies yet, 19-year-old Bill’s first concern was that they were already too late. “We realized that the revolution might happen without us”, Gates said. “After we saw that article, there was no question of where our life would focus”.

“Our life!” What the heck does Gates mean here -- that he and Paul Allen were joined at the frontal lobe, sharing a single life, a single set of experiences? In those days, the answer was “yes”. Drawn together by the idea of starting a pioneering software company and each convinced that he couldn’t succeed alone, they committed to sharing a single life -- a life unlike that of most other PC pioneers because it was devoted as much to doing business as to doing technology.

Gates was a businessman from the start; otherwise, why would he have been worried about being passed by? There was plenty of room for high-level computer languages to be developed for the fledgling platforms, but there was only room for one first high-level language. Anyone could participate in a movement, but only those with the right timing could control it. Gates knew that the first language -- the one resold by MITS, maker of the Altair -- would become the standard for the whole industry. Those who seek to establish such de facto standards in any industry do so for business reasons.

“This is a very personal business, but success comes from appealing to groups”, Gates says. “Money is made by setting de facto standards”.

The Altair was not much of a consumer product. It came typically as an unassembled $350 kit, clearly targeting only the electronic hobbyist market. There was no software for the machine, so, while it may have existed, it sure didn’t compute. There wasn’t even a keyboard. The only way of programming the computer at first was through entering strings of hexadecimal code by flicking a row of switches on the front panel. There was no display other than some blinking lights. The Altair was limited in its appeal to those who could solder (which eliminated most good programmers) and to those who could program in machine language (which eliminated most good solderers).

BASIC was generally recognized as the easiest programming language to learn in 1975. It automatically converted simple English-like commands to machine language, effectively removing the programming limitation and at least doubling the number of prospective Altair customers.

Since they didn’t have an Altair 8800 computer (nobody did yet), Gates and Allen wrote a program that made a PDP-10 minicomputer at the Harvard Computation Center simulate the Altair’s Intel 8080 microprocessor. In six weeks, they wrote a version of the BASIC programming language that would run on the phantom Altair synthesized in the minicomputer. They hoped it would run on a real Altair equipped with at least 4096 bytes of random access memory. The first time they tried to run the language on a real microcomputer was when Paul Allen demonstrated the product to MITS founder Ed Roberts at the company’s headquarters in Albuquerque. To their surprise and relief, it worked.

MITS BASIC, as it came to be called, gave substance to the microcomputer. Big computers ran BASIC. Real programs had been written in the language and were performing business, educational, and scientific functions in the real world. While the Altair was a computer of limited power, the fact that Allen and Gates were able to make a high-level language like BASIC run on the platform meant that potential users could imagine running these same sorts of applications now on a desktop rather than on a mainframe.

MITS BASIC was dramatic in its memory efficiency and made the bold move of adding commands that allowed programmers to control the computer memory directly. MITS BASIC wasn’t perfect. The authors of the original BASIC, John Kemeny and Thomas Kurtz, both of Dartmouth College, were concerned that Gates and Allen’s version deviated from the language they had designed and placed into the public domain a decade before. Kemeny and Kurtz might have been unimpressed, but the hobbyist world was euphoric.

I’ve got to point out here that for many years Kemeny was president of Dartmouth, a school that didn’t accept me when I was applying to colleges. Later, toward the end of the Age of Jimmy Carter, I found myself working for Kemeny, who was then head of the presidential commission investigating the Three Mile Island nuclear accident. One day I told him how Dartmouth had rejected me, and he said, “College admissions are never perfect, though in your case I’m sure we did the right thing”. After that I felt a certain affection for Bill Gates.

Gates dropped out of Harvard, Allen left his programming job at Honeywell, and both moved to New Mexico to be close to their customer, in the best Tom Peters style. Hobbyists don’t move across country to maintain business relationships, but businessmen do. They camped out in the Sundowner Motel on Route 66 in a neighborhood noted for all-night coffee shops, hookers, and drug dealers.

Gates and Allen did not limit their interest to MITS. They wrote versions of BASIC for other microcomputers as they came to market, leveraging their core technology. The two eventually had a falling out with Ed Roberts of MITS, who claimed that he owned MITS BASIC and its derivatives; they fought and won, something that hackers rarely bothered to do. Capitalists to the bone, they railed against software piracy before it even had a name, writing whining letters to early PC publications.

Gates and Allen started Microsoft with a stated mission of putting “a computer on every desk and in every home, running Microsoft software”. Although it seemed ludicrous at the time, they meant it.

While Allen and Gates deliberately went about creating an industry and then controlling it, they were important exceptions to the general trend of PC entrepreneurism. Most of their eventual competitors were people who managed to be in just the right place at the right time and more or less fell into business. These people were mainly enthusiasts who at first developed computer languages and operating systems for their own use. It was worth the effort if only one person -- the developer himself -- used their product. Often they couldn’t even imagine why anyone else would be interested.

Gary Kildall, for example, invented the first microcomputer operating system because he was tired of driving to work. In the early 1970s, Kildall taught computer science at the Naval Postgraduate School in Monterey, California, where his specialty was compiler design. Compilers are software tools that take entire programs written in a high-level language like FORTRAN or Pascal and translate them into assembly language, which can be read directly by the computer. High-level languages are easier to learn than Assembler, so compilers allowed programs to be completed faster and with more features, although the final code was usually longer than if the program had been written directly in the internal language of the microprocessor. Compilers translate, or compile, large sections of code into Assembler at one time, as opposed to interpreters, which translate commands one at a time.

By 1974, Intel had added the 8008 and 8080 to its family of microprocessors and had hired Gary Kildall as a consultant to write software to emulate the 8080 on a DEC time-sharing system, much as Gates and Allen would shortly do at Harvard. Since there were no microcomputers yet, Intel realized that the best way for companies to develop software for microprocessor-based devices was by using such an emulator on a larger system.

Kildall’s job was to write the emulator, called Interp/80, followed by a high-level language called PL/M, which was planned as a microcomputer equivalent of the XPL language developed for mainframe computers at Stanford University. Nothing so mundane (and useful by mere mortals) as BASIC for Gary Kildall, who had a Ph.D. in compiler design.

What bothered Kildall was not the difficulty of writing the software but the tedium of driving the fifty miles from his home in Pacific Grove across the Santa Cruz mountains to use the Intel minicomputer in Silicon Valley. He could have used a remote teletype terminal at home, but the terminal was incredibly slow for inputting thousands of lines of data over a phone line; driving was faster.

Or he could develop software directly on the 8080 processor, bypassing the time-sharing system completely. Not only could he avoid the long drive, but developing directly on the microprocessor would also bypass any errors in the minicomputer 8080 emulator. The only problem was that the 8080 microcomputer Gary Kildall wanted to take home didn’t exist.

What did exist was the Intellec-8, an Intel product that could be used (sort of) to program an 8080 processor. The Intellec-8 had a microprocessor, some memory, and a port for attaching a Teletype 33 terminal. There was no software and no method for storing data and programs outside of main memory.

The primary difference between the Intellec-8 and a microcomputer was external data storage and the software to control it. IBM had invented a new device, called a floppy disk, to replace punched cards for its minicomputers. The disks themselves could be removed from the drive mechanism, were eight inches in diameter, and held the equivalent of thousands of pages of data. Priced at around $500, the floppy disk drive was perfect for Kildall’s external storage device. Kildall, who didn’t have $500, convinced Shugart Associates, a floppy disk drive maker, to give him a worn-out floppy drive used in its 10,000-hour torture test. While his friend John Torode invented a controller to link the Intellec-8 and the floppy disk drive, Kildall used the 8080 emulator on the Intel time-sharing system to develop his operating system, called CP/M, or Control Program/Monitor.

If a computer acquires a personality, it does so from its operating system. Users interact with the operating system, which interacts with the computer. The operating system controls the flow of data between a computer and its long-term storage system. It also controls access to system memory and keeps those bits of data that are thrashing around the microprocessor from thrashing into each other. Operating systems usually store data in files, which have individual names and characteristics and can be called up as a program or the user requires them.

Gary Kildall developed CP/M on a DEC PDP-10 minicomputer running the TOPS-10 operating system. Not surprisingly, most CP/M commands and file naming conventions look and operate like their TOPS-10 counterparts. It wasn’t pretty, but it did the job.

By the time he’d finished writing the operating system, Intel didn’t want CP/M and had even lost interest in Kildall’s PL/M language. The only customers for CP/M in 1975 were a maker of intelligent terminals and Lawrence Livermore Labs, which used CP/M to monitor programs on its Octopus network.

In 1976, Kildall was approached by Imsai, the second personal computer company with a stupid name. Imsai manufactured an early 8080-based microcomputer that competed with the Altair. In typical early microcomputer company fashion, Imsai had sold floppy disk drives to many of its customers, promising to send along an operating system eventually. With each of them now holding at least $1,000 worth of hardware that was only gathering dust, the customers wanted their operating system, and CP/M was the only operating system for Intel-based computers that was actually available.

By the time Imsai came along, Kildall and Torode had adapted CP/M to four different floppy disk controllers. There were probably 100 little companies talking about doing 8080-based computers, and neither man wanted to invest the endless hours of tedious coding required to adapt CP/M to each of these new platforms. So they split the parts of CP/M that interfaced with each new controller into a separate computer code module, called the Basic Input/Output System, or BIOS. With all the hardware-dependent parts of CP/M concentrated in the BIOS, it became a relatively easy job to adapt the operating system to many different Intel-based microcomputers by modifying just the BIOS.
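The split described above can be sketched in modern terms. What follows is a minimal illustration in Python, not CP/M's actual code: the names `Bios`, `ControllerABios`, `ControllerBBios`, and `load_file` are hypothetical, and the two "controllers" are simple in-memory stand-ins. The point is only that hardware-specific code sits behind one small interface while everything above it stays unchanged.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class Bios(ABC):
    """Hypothetical hardware-dependent layer: one subclass per disk controller."""

    @abstractmethod
    def read_sector(self, track: int, sector: int) -> bytes:
        """Return the raw bytes stored at the given track and sector."""


class ControllerABios(Bios):
    """Pretend controller that keeps sectors in a dict keyed by (track, sector)."""

    def __init__(self, disk: dict[tuple[int, int], bytes]) -> None:
        self._disk = disk

    def read_sector(self, track: int, sector: int) -> bytes:
        return self._disk[(track, sector)]


class ControllerBBios(Bios):
    """Pretend controller that keeps sectors in one flat list."""

    SECTORS_PER_TRACK = 4  # assumed geometry, for illustration only

    def __init__(self, sectors: list[bytes]) -> None:
        self._sectors = sectors

    def read_sector(self, track: int, sector: int) -> bytes:
        return self._sectors[track * self.SECTORS_PER_TRACK + sector]


def load_file(bios: Bios, locations: list[tuple[int, int]]) -> bytes:
    """Hardware-independent code: it only ever talks to the Bios interface."""
    return b"".join(bios.read_sector(t, s) for t, s in locations)


# The same "operating system" routine runs unchanged on both controllers.
disk_a = {(0, 0): b"HELLO ", (0, 1): b"WORLD"}
disk_b = [b"HELLO ", b"WORLD", b"", b""]
locations = [(0, 0), (0, 1)]

result_a = load_file(ControllerABios(disk_a), locations)
result_b = load_file(ControllerBBios(disk_b), locations)
print(result_a, result_b)
```

Supporting a third controller in this sketch means writing one more `Bios` subclass; `load_file` is never touched, which is the economy Kildall and Torode were after.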

With his CP/M and invention of the BIOS, Gary Kildall defined the microcomputer. Peek into any personal computer today, and you’ll find a general-purpose operating system adapted to specific hardware through the use of a BIOS, which is now a specialized type of memory chip.

In the six years after Imsai offered the first CP/M computer, more than 500,000 CP/M computers were sold by dozens of makers. Programmers began to write CP/M applications, relying on the operating system’s features to control the keyboard, screen, and data storage. This base of applications turned CP/M into a de facto standard among microcomputer operating systems, guaranteeing its long-term success. Kildall started a company called Intergalactic Digital Research (later, just Digital Research) to sell the software in volume to computer makers and direct to users for $70 per copy. He made millions of dollars, essentially without trying.

Before he knew it, Gary Kildall had plenty of money, fast cars, a couple of airplanes, and a business that made increasing demands on his time. His success, while not unwelcome, was unexpected, which also meant that it was unplanned for. Success brings with it a whole new set of problems, as Gary Kildall discovered. You can plan for failure, but how do you plan for success?

Every entrepreneur has an objective, which, once achieved, leads to a crisis. In Gary Kildall’s case, the objective -- just to write CP/M, not even to sell it -- was very low, so the crisis came quickly. He was a code god, a programmer who literally saw lines of code fully formed in his mind and then committed them effortlessly to the keyboard in much the same way that Mozart wrote music. He was one with the machine; what did he need with seventy employees?

“Gary didn’t give a shit about the business. He was more interested in getting laid”, said Gordon Eubanks, a former student of Kildall who led development of computer languages at Digital Research. “So much went so well for so long that he couldn’t imagine it would change. When it did -- when change was forced upon him -- Gary didn’t know how to handle it.”

“Gary and Dorothy [Kildall’s wife and a Digital Research vice-president] had arrogance and cockiness but no passion for products. No one wanted to make the products great. Dan Bricklin [another PC software pioneer -- read on] sent a document saying what should be fixed in CP/M, but it was ignored. Then I urged Gary to do a BASIC language to bundle with CP/M, but when we finally got him to do a language, he insisted on PL/I -- a virtually unmarketable language”.

Digital Research was slow in developing a language business to go with its operating systems. It was also slow in updating its core operating system and extending it into the new world of 16-bit microprocessors that came along after 1980. The company in those days was run like a little kingdom, ruled by Gary and Dorothy Kildall.

“In one board meeting”, recalled a former Digital Research executive, “we were talking about whether to grant stock options to a woman employee. Dorothy said, ‘No, she doesn’t deserve options -- she’s not professional enough; her kids visit her at work after 5:00 p.m.’ Two minutes later, Christy Kildall, their daughter, burst into the boardroom and dragged Gary off with her to the stable to ride horses, ending the meeting. Oh yeah, Dorothy knew about professionalism”.

Let’s say for a minute that Eubanks was correct, and Gary Kildall didn’t give a shit about the business. Who said that he had to? CP/M was his invention; Digital Research was his company. The fact that it succeeded beyond anyone’s expectations did not make those earlier expectations invalid. Gary Kildall’s ambition was limited, something that is not supposed to be a factor in American business. If you hope for a thousand and get a million, you are still expected to want more, but he didn’t.

It’s easy for authors of business books to get rankled by characters like Gary Kildall who don’t take good care of the empires they have built. But in fact, there are no absolute rules of behavior for companies like Digital Research. The business world is, like computers, created entirely by people. God didn’t come down and say there will be a corporation and it will have a board of directors. We made that up. Gary Kildall made up Digital Research.

Eubanks, who came to Digital Research after a naval career spent aboard submarines, hated Kildall’s apparent lack of discipline, not understanding that it was just a different kind of discipline. Kildall was into programming, not business.

“Programming is very much a religious experience for a lot of people”, Kildall explained. “If you talk about programming to a group of programmers who use the same language, they can become almost evangelistic about the language. They form a tight-knit community, hold to certain beliefs, and follow certain rules in their programming. It’s like a church with a programming language for a bible”.

Gary Kildall’s bible said that writing a BASIC compiler to go with CP/M might be a shrewd business move, but it would be a step backward technically. Kildall wanted to break new ground, and a BASIC had already been done by Microsoft.

“The unstated rule around Digital Research was that Microsoft did languages, while we did operating systems”, Eubanks explained. “It was never stated emphatically, but I always thought that Gary assumed he had an agreement with Bill Gates about this separation and that as long as we didn’t compete with Microsoft, they wouldn’t compete with us”.

Sure.

The Altair 8800 may have been the first microcomputer, but it was not a commercial success. The problem was that assembly took from forty to an infinite number of hours, depending on the hobbyist’s mechanical ability. When the kit was done, the microcomputer either worked or didn’t. If it worked, the owner had a programmable computer with a BASIC interpreter, ready to run any software he felt like writing.

The first microcomputer that was a major commercial success was the Apple II. It succeeded because it was the first microcomputer that looked like a consumer electronic product. You could buy the Apple from a dealer who would fix it if it broke and would give you at least a little help in learning to operate the beast. The Apple II had a floppy disk drive for data storage, did not require a separate Teletype or video terminal, and offered color graphics in addition to text. Most important, you could buy software written by others that would run on the Apple and with which a novice could do real work.

The Apple II still defines what a low-end computer is like. Twenty-third century archaeologists excavating some ancient ComputerLand stockroom will see no significant functional difference between an Apple II of 1978 and an IBM PS/2 of 1992. Both have processor, memory, storage, and video graphics. Sure, the PS/2 has a faster processor, more memory and storage, and higher-resolution graphics, but that only matters to us today. By the twenty-third century, both machines will seem equally primitive.

The Apple II was guided by three spirits. Steve Wozniak invented the earlier Apple I to show it off to his friends in the Homebrew Computer Club. Steve Jobs was Wozniak’s younger sidekick who came up with the idea of building computers for sale and generally nagged Woz and others until the Apple II was working to his satisfaction. Mike Markkula was the semiretired Intel veteran (and one of Noyce’s boys) who brought the money and status required for the other two to be taken at all seriously.

Wozniak made the Apple II a simple machine that used clever hardware tricks to get good performance at a smallish price (at least to produce -- the retail price of a fully outfitted Apple II was around $3,000). He found a way to allow the microprocessor and the video display to share the same memory. His floppy disk controller, developed during a two-week period in December 1977, used less than a quarter the number of integrated circuits required by other controllers at the time. The Apple’s floppy disk controller made it clearly superior to machines appearing about the same time from Commodore and Radio Shack. More so than probably any other microcomputer, the Apple II was the invention of a single person; even Apple’s original BASIC interpreter, which was always available in read-only memory, had been written by Woz.

Woz made the Apple II a color machine to prove that he could do it and so he could use the computer to play a color version of Breakout, a video game that he and Jobs had designed for Atari. Markkula, whose main contributions at Intel had been in finance, pushed development of the floppy disk drive so the computer could be used to run accounting programs and store resulting financial data for small business owners. Each man saw the Apple II as a new way of fulfilling an established need -- to replace a video game for Woz and a mainframe for Markkula. This followed the trend that new media tend to imitate old media.

Radio began as vaudeville over the air, while early television was radio with pictures. For most users (though not for Woz) the microcomputer was a small mainframe, which explained why Apple’s first application for the machine was an accounting package and the first application supplied by a third-party developer was a database -- both perfect products for a mainframe substitute. But the Apple II wasn’t a very good mainframe replacement. The fact is that new inventions often have to find uses of their own in order to find commercial success, and this was true for the Apple II, which became successful strictly as a spreadsheet machine, a function that none of its inventors visualized.

At $3,000 for a fully configured system, the Apple II did not have a big future as a home machine. Old-timers like to reminisce about the early days of Apple when the company’s computers were affordable, but the truth is that they never were.

The Apple II found its eventual home in business, answering the prayers of all those middle managers who had not been able to gain access to the company’s mainframe or who were tired of waiting the six weeks it took for the computer department to prepare a report, dragging the answers to simple business questions from corporate data. Instead, they quickly learned to use a spreadsheet program called VisiCalc, which was available at first only on the Apple II.

VisiCalc was a compelling application -- an application so important that it alone justified the computer purchase. Such an application was the last element required to turn the microcomputer from a hobbyist’s toy into a business machine. No matter how powerful and brilliantly designed, no computer can be successful without a compelling application. To the people who bought them, mainframes were really inventory machines or accounting machines, and minicomputers were office automation machines. The Apple II was a VisiCalc machine.

VisiCalc was a whole new thing, an application that had not appeared before on some other platform. There were no minicomputer or mainframe spreadsheet programs that could be downsized to run on a microcomputer. The microcomputer and the spreadsheet came along at the same time. They were made for each other.

VisiCalc came about because its inventor, Dan Bricklin, went to business school. And Bricklin went to business school because he thought that his career as a programmer was about to end; it was becoming so easy to write programs that Bricklin was convinced there would eventually be no need for programmers at all, and he would be out of a job. So in the fall of 1977, 26 years old and worried about being washed up, he entered the Harvard Business School looking toward a new career.

At Harvard, Bricklin had an advantage over other students. He could whip up BASIC programs on the Harvard time-sharing system that would perform financial calculations. The problem with Bricklin’s programs was that they had to be written and rewritten for each new problem. He began to look for a more general way of doing these calculations in a format that would be flexible.

What Bricklin really wanted was not a microcomputer program at all but a specialized piece of hardware -- a kind of very advanced calculator with a heads-up display similar to the weapons system controls on an F-14 fighter. Like Luke Skywalker jumping into the turret of the Millennium Falcon, Bricklin saw himself blasting out financials, locking onto profit and loss numbers that would appear suspended in space before him. It was to be a business tool cum video game, a Saturday Night Special for M.B.A.s, only the hardware technology didn’t exist in those days to make it happen.

Back in the semireal world of the Harvard Business School, Bricklin’s production professor described large blackboards that were used in some companies for production planning. These blackboards, often so long that they spanned several rooms, were segmented in a matrix of rows and columns. The production planners would fill each space with chalk scribbles relating to the time, materials, manpower, and money needed to manufacture a product. Each cell on the blackboard was located in both a column and a row, so each had a two-dimensional address. Some cells were related to others, so if the number of workers listed in cell C-3 was increased, it meant that the amount of total wages in cell D-5 had to be increased proportionally, as did the total number of items produced, listed in cell F-7. Changing the value in one cell required the recalculation of values in all other linked cells, which took a lot of erasing and a lot of recalculating and left the planners constantly worried that they had overlooked recalculating a linked value, making their overall conclusions incorrect.

Given that Bricklin’s Luke Skywalker approach was out of the question, the blackboard metaphor made a good structure for Bricklin’s financial calculator, with a video screen replacing the physical blackboard. Once data and formulas were introduced by the user into each cell, changing one variable would automatically cause all the other cells to be recalculated and changed too. No linked cells could be forgotten. The video screen would show a window on a spreadsheet that was actually held in computer memory. The virtual spreadsheet inside the box could be almost any size, putting on a desk what had once taken whole rooms filled with blackboards. Once the spreadsheet was set up, answering a what-if question like “How much more money will we make if we raise the price of each widget by a dime?” would take only seconds.
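The recalculation idea can be sketched in a few lines of Python. This is only an illustration of the concept, not VisiCalc's actual implementation: the `Sheet` class, the cell `B1`, and the wage and output figures are all invented for the example, while cells C3, D5, and F7 echo the blackboard described above.

```python
class Sheet:
    """Toy spreadsheet: each cell holds a typed-in number or a formula over other cells."""

    def __init__(self):
        self.constants = {}  # cell name -> number entered by the user
        self.formulas = {}   # cell name -> (function, names of the cells it reads)
        self.values = {}     # cell name -> value currently shown on screen

    def set_value(self, name, number):
        self.formulas.pop(name, None)
        self.constants[name] = number
        self.recalculate()

    def set_formula(self, name, func, inputs):
        self.constants.pop(name, None)
        self.formulas[name] = (func, inputs)
        self.recalculate()

    def recalculate(self):
        """Recompute every formula cell, so no linked cell can be forgotten."""
        self.values = dict(self.constants)
        pending = dict(self.formulas)
        while pending:
            # A formula is ready once all the cells it reads have values.
            ready = [n for n, (_, inputs) in pending.items()
                     if all(i in self.values for i in inputs)]
            if not ready:
                raise ValueError("circular or missing reference: "
                                 + ", ".join(sorted(pending)))
            for n in ready:
                func, inputs = pending.pop(n)
                self.values[n] = func(*(self.values[i] for i in inputs))


sheet = Sheet()
sheet.set_value("C3", 10)      # number of workers
sheet.set_value("B1", 400.0)   # hypothetical wage per worker
sheet.set_formula("D5", lambda workers, wage: workers * wage, ["C3", "B1"])  # total wages
sheet.set_formula("F7", lambda workers: workers * 25, ["C3"])  # items produced (assumed 25 each)

# The what-if question: what happens with twelve workers instead of ten?
sheet.set_value("C3", 12)
print(sheet.values["D5"], sheet.values["F7"])  # 4800.0 300
```

Changing the single value in C3 updates both dependent cells without the user touching them, which is the chore the production planners had to do by hand with chalk and an eraser.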

His production professor loved the idea, as did Bricklin’s accounting professor. Bricklin’s finance professor, who had others to do his computing for him, said there were already financial analysis programs running on mainframes, so the world did not need Dan Bricklin’s little program. Only the world did need Dan Bricklin’s little program, which still didn’t have a name.

It’s not surprising that VisiCalc grew out of a business school experience because it was the business schools that were producing most of the future VisiCalc users. They were the thousands of M.B.A.s who were coming into the workplace trained in analytical business techniques and, even more important, in typing. They had the skills and the motivation but usually not the access to their company computer. They were the first generation of businesspeople who could do it all by themselves, given the proper tools.

Bricklin cobbled up a demonstration version of his idea over a weekend. It was written in BASIC, was slow, and had only enough rows and columns to fill a single screen, but it demonstrated many of the basic functions of the spreadsheet. For one thing, it just sat there. This is the genius of the spreadsheet; it’s event driven. Unless the user changes a cell, nothing happens. This may not seem like much, but being event driven makes a spreadsheet totally responsive to the user; it puts the user in charge in a way that most other programs did not. VisiCalc was a spreadsheet language, and what the users were doing was rudimentary programming, without the anxiety of knowing that’s what it was.
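To make "event driven" concrete, here is a minimal Python sketch, assuming a hypothetical `EventDrivenSheet` class and cell names that have nothing to do with Bricklin's BASIC demonstration. The program does no work at all until the user edits a cell; the edit is the event, and everything else follows from it.

```python
class EventDrivenSheet:
    """Toy sheet that only does work in response to a user edit."""

    def __init__(self):
        self.cells = {}
        self.listeners = []      # callbacks to run after every edit
        self.events_handled = 0  # how many edits have triggered work

    def on_change(self, callback):
        self.listeners.append(callback)

    def edit(self, name, value):
        """The only entry point: a user edit is the event that triggers work."""
        self.cells[name] = value
        self.events_handled += 1
        for callback in self.listeners:
            callback(self.cells)


def update_total(cells):
    # Stand-in for a formula cell: TOTAL is always A1 + A2.
    cells["TOTAL"] = cells.get("A1", 0) + cells.get("A2", 0)


sheet = EventDrivenSheet()
sheet.on_change(update_total)
# Up to this point the sheet has "just sat there": no events, no totals.

sheet.edit("A1", 5)
sheet.edit("A2", 7)
print(sheet.cells["TOTAL"], sheet.events_handled)  # 12 2
```

The user never calls `update_total` directly, which is the sense in which typing values into cells amounts to programming without knowing it.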

By the time Bricklin had his demonstration program running, it was early 1978 and the mass market for microcomputers, such as it was, was being vied for by the Apple II, Commodore PET, and the Radio Shack TRS-80. Since he had no experience with micros, and so no preference for any particular machine, Bricklin and Bob Frankston, his old friend from MIT and new partner, developed VisiCalc for the Apple II, strictly because that was the computer their would-be publisher loaned them in the fall of 1978. No technical merit was involved in the decision.

Dan Fylstra was the publisher. He had graduated from Harvard Business School a year or two before and was trying to make a living selling microcomputer chess programs from his home. Fylstra’s Personal Software was the archetypal microcomputer application software company. Bill Gates at Microsoft and Gary Kildall at Digital Research were specializing in operating systems and languages, products that were lumped together under the label of systems software, and were mainly sold to hardware manufacturers rather than directly to users. But Fylstra was selling applications direct to retailers and end users, often one program at a time. With no clear example to follow, he had to make most of the mistakes himself, and did.

Since there was no obvious success story to emulate, no retail software company that had already stumbled across the rules for making money, Fylstra dusted off his Harvard case study technique and looked for similar industries whose rules could be adapted to the microcomputer software biz. About the closest example he could find was book publishing, where the author accepts responsibility for designing and implementing the product, and the publisher is responsible for manufacturing, distribution, marketing, and sales. Transferred to the microcomputer arena, this meant that Software Arts, the company Bricklin and Frankston formed, would develop VisiCalc and its subsequent versions, while Personal Software, Fylstra’s company, would copy the floppy disks, print the manuals, place ads in computer publications, and distribute the product to retailers and the public. Software Arts would receive a royalty of 37.5 percent on copies of VisiCalc sold at retail and 50 percent for copies sold wholesale. “The numbers seemed fair at the time,” Fylstra said.

Bricklin was still in school, so he and Frankston divided their efforts in a way that would become a standard for microcomputer programming projects. Bricklin designed the program, while Frankston wrote the actual code. Bricklin would say, “This is the way the program is supposed to look, these are the features, and this is the way it should function”, but the actual design of the internal program was left up to Bob Frankston, who had been writing software since 1963 and was clearly up to the task. Frankston added a few features on his own, including one called “lookup”, which could extract values from a table, so he could use VisiCalc to do his taxes.

Bob Frankston is a gentle man and a brilliant programmer who lives in a world that is just slightly out of sync with the world in which you and I live. (Okay, so it’s out of sync with the world in which you live.) When I met him, Frankston was chief scientist at Lotus Development, the people who gave us the Lotus 1-2-3 spreadsheet. In a personal computer hardware or software company, being named chief scientist means that the boss doesn’t know what to do with you. Chief scientists don’t generally have to do anything; they’re just smart people whom the company doesn’t want to lose to a competitor. So they get a title and an office and are obliged to represent the glorious past at all company functions. At Apple Computer, they call them Apple Fellows, because you can’t have more than one chief scientist.

Bob Frankston, a modified nerd (he combined the requisite flannel shirt with a full beard), seemed not to notice that his role of chief scientist was a sham, because to him it wasn’t; it was the perfect opportunity to look inward and think deep thoughts without regard to their marketability.

“Why are you doing this as a book?” Frankston asked me over breakfast one morning in Newton, Massachusetts. By “this”, he meant the book you have in your hands right now, the major literary work of my career and, I hope, the basis of an important American fortune. “Why not do it as a hypertext file that people could just browse through on their computers?”

I will not be browsed through. The essence of writing books is the author’s right to tell the story in his own words and in the order he chooses. Hypertext, which allows an instant accounting of how many times the words Dynamic Random-Access Memory or fuck appear, completely eliminates what I perceive as my value-added, turns this exercise into something like the Yellow Pages, and totally eliminates the prospect that it will help fund my retirement.

“Oh”, said Frankston, with eyebrows raised. “Okay”.

Meanwhile, back in 1979, Bricklin and Frankston developed the first version of VisiCalc on an Apple II emulator running on a minicomputer, just as Microsoft BASIC and CP/M had been written. Money was tight, so Frankston worked at night, when computer time was cheaper and when the time-sharing system responded faster because there were fewer users.

They thought that the whole job would take a month, but it took close to a year to finish. During this time, Fylstra was showing prerelease versions of the product to the first few software retailers and to computer companies like Apple and Atari. Atari was interested but did not yet have a computer to sell. Apple’s reaction to the product was lukewarm.

VisiCalc hit the market in October 1979, selling for $100. The first 100 copies went to Marv Goldschmitt’s computer store in Bedford, Massachusetts, where Dan Bricklin appeared regularly to give demonstrations to bewildered customers. Sales were slow. Nothing like this product had existed before, so it would be a mistake to blame the early microcomputer users for not realizing they were seeing the future when they stared at their first VisiCalc screen.

Nearly every software developer in those days believed that small businesspeople would be the main users of any financial products they’d develop. Markkula’s beloved accounting system, for example, would be used by small retailers and manufacturers who could not afford access to a time-sharing system and preferred not to farm the job out to an accounting service. Bricklin’s spreadsheet would be used by these same small businesspeople to prepare budgets and forecast business trends. Automation was supposed to come to the small business community through the microcomputer just as it had come to the large and medium businesses through mainframes and minicomputers. But it didn’t work that way.

The problem with the small business market was that small businesses weren’t, for the most part, very businesslike. Most small businesspeople didn’t know what they were doing. Accounting was clearly beyond them.

At the time, sales to hobbyists and would-be computer game players were topping out, and small businesses weren’t buying. Apple and most of its competitors were in real trouble. The personal computer revolution looked as if it might last only five years. But then VisiCalc sales began to kick in.

Among the many customers who watched VisiCalc demos at Marv Goldschmitt’s computer store were a few businesspeople -- rare members of both the set of computer enthusiasts and the economic establishment. Many of these people had bought Apple IIs, hoping to do real work until they attempted to come to terms with the computer’s forty-column display and lack of lowercase letters. In VisiCalc, they found an application that did not care about lowercase letters, and since the program used a view through the screen on a larger, virtual spreadsheet, the forty-column limit was less of one. For $100, they took a chance, carried the program home, then eventually took both the program and the computer it ran on with them to work. The true market for the Apple II turned out to be big business, and it was through the efforts of enthusiast employees, not Apple marketers, that the Apple II invaded industry.

“The beautiful thing about the spreadsheet was that customers in big business were really smart and understood the benefits right away”, said Trip Hawkins, who was in charge of small business strategy at Apple. “I visited Westinghouse in Pittsburgh. The company had decided that Apple II technology wasn’t suitable, but 1,000 Apple IIs had somehow arrived in the corporate headquarters, bought with petty cash funds and popularized by the office intelligentsia”.

Hawkins was among the first to realize that the spreadsheet was a new form of computer life and that VisiCalc -- the only spreadsheet on the market and available at first only on the Apple II -- would be Apple’s tool for entering, maybe dominating, the microcomputer market for medium and large corporations. VisiCalc was a strategic asset and one that had to be tied up fast before Bricklin and Frankston moved it onto other platforms like the Radio Shack TRS-80.

“When I brought the first copies of VisiCalc into Apple, it was clear to me that this was an important application, vital to the success of the Apple II”, Hawkins said. “We didn’t want it to appear on the Radio Shack or on the IBM machine we knew was coming, so I took Dan Fylstra to lunch and talked about a buyout. The price we settled on would have been $1 million worth of Apple stock, which would have been worth much more later. But when I took the deal to Markkula for approval, he said, ‘No, it’s too expensive’”.

A million dollars was an important value point in the early microcomputer software business. Every programmer who bothered to think about money at all looked toward the time when he would sell out for a cool million. Apple could have used ownership of the program to dominate business microcomputing for years. The deal would have been good, too, for Dan Fylstra, who so recently had been selling chess programs out of his apartment. Except that Dan Fylstra didn’t own VisiCalc -- Dan Bricklin and Bob Frankston did. The deal came and went without the boys in Massachusetts even being told.

Reprinted with permission

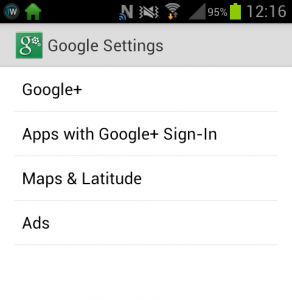

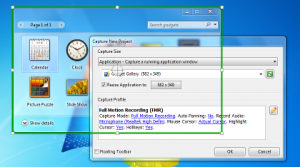

If you have an Android phone, check your apps -- you’ll likely a have a new one lurking there. The green Google Settings app, added today, gives users quick access to various settings for services such as Google+, Apps with Google+ Sign-in, Maps & Latitude, Location, Search, and Ads. The options you see will depend on your device and what’s enabled.

If you have an Android phone, check your apps -- you’ll likely a have a new one lurking there. The green Google Settings app, added today, gives users quick access to various settings for services such as Google+, Apps with Google+ Sign-in, Maps & Latitude, Location, Search, and Ads. The options you see will depend on your device and what’s enabled. Maps & Latitude lets you manage location reporting and sharing, and control which friends can see your current position. You can also enable location history, and turn automatic check-ins on or off.

Maps & Latitude lets you manage location reporting and sharing, and control which friends can see your current position. You can also enable location history, and turn automatic check-ins on or off.

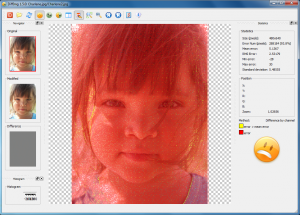

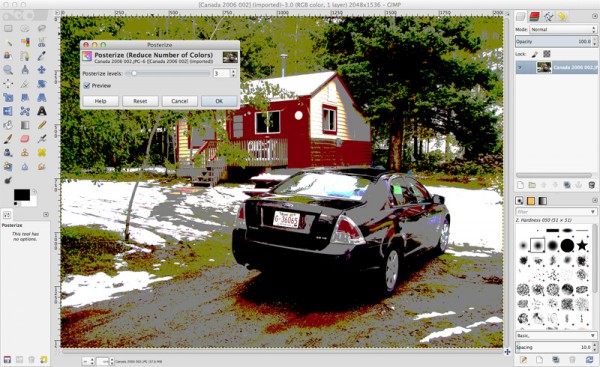

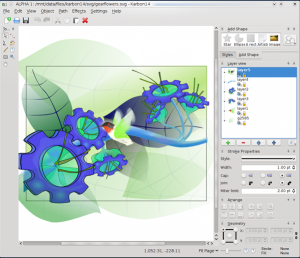

Adobe’s Photoshop Touch app for tablets is a great photo editing tool and now it’s available for handsets running iOS and Android, so you can polish up your snaps before sharing them online, or do something even more creative.

Adobe’s Photoshop Touch app for tablets is a great photo editing tool and now it’s available for handsets running iOS and Android, so you can polish up your snaps before sharing them online, or do something even more creative. continue there, before firing up Photoshop on your PC or Mac and finishing things off. Of course you’ll need to own separate copies of Photoshop for the various different devices.

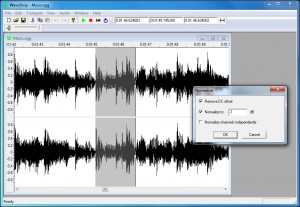

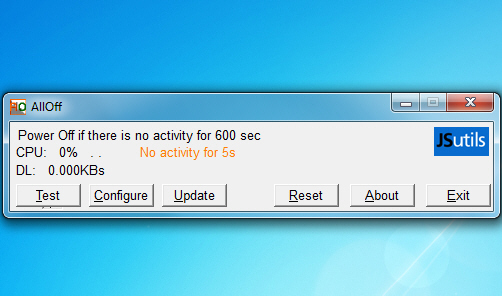

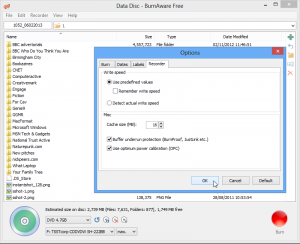

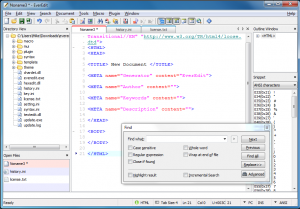

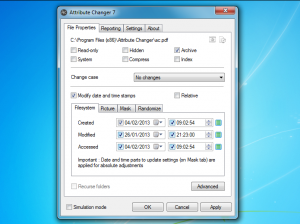

continue there, before firing up Photoshop on your PC or Mac and finishing things off. Of course you’ll need to own separate copies of Photoshop for the various different devices. If you’d like to edit an audio file then there’s plenty of free tools around to help, however most of them are prone to altering your files in unexpected ways. To test this yourself, just open any file, save it with a different name, and compare that file with the original. Even though you’ve not performed any operations on the second file at all, you’ll still often find there are differences, and inevitably that’s going to mean some compromise in sound quality.

If you’d like to edit an audio file then there’s plenty of free tools around to help, however most of them are prone to altering your files in unexpected ways. To test this yourself, just open any file, save it with a different name, and compare that file with the original. Even though you’ve not performed any operations on the second file at all, you’ll still often find there are differences, and inevitably that’s going to mean some compromise in sound quality. The program has some effects you can apply, too. You’re able to amplify audio, for instance, fade it in or out, or scan your file for clipped audio.

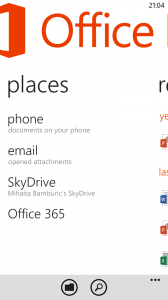

The program has some effects you can apply, too. You’re able to amplify audio, for instance, fade it in or out, or scan your file for clipped audio. My colleague Mihaita Bamburic posted his first impressions on the preview version of

My colleague Mihaita Bamburic posted his first impressions on the preview version of

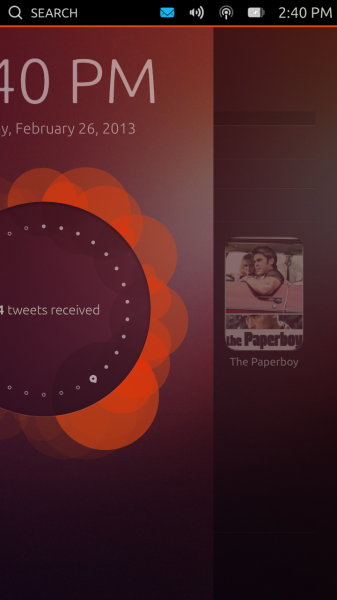

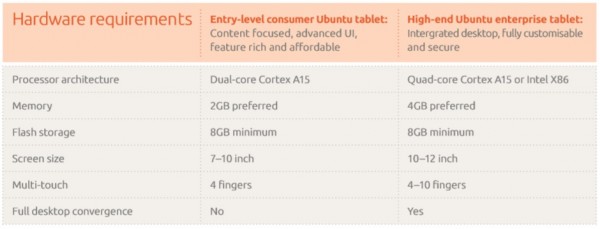

The concept of Canonical taking a stab at the mobile market eludes me. Unless we want to split hairs, which I know will happen, Android already is the Linux ambassador across the globe, so why would the world need

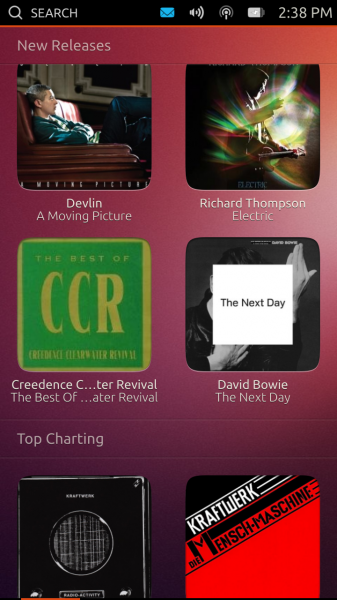

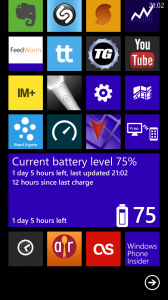

The concept of Canonical taking a stab at the mobile market eludes me. Unless we want to split hairs, which I know will happen, Android already is the Linux ambassador across the globe, so why would the world need  The Homescreens

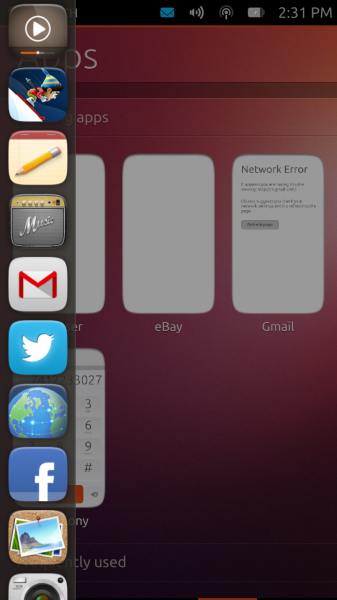

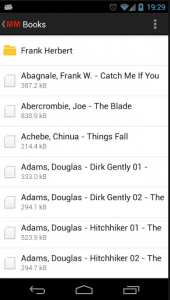

The Homescreens Apps displays the Running Apps, Frequently used, Installed and Available for download categories. There are some interesting items listed, both as installed and available, including Amazon, eBay, Evernote, Pinterest, SoundCloud, Twitter, Wikipedia and YouTube. Obviously listed does not really imply available as well, so the situation might change over time before Ubuntu Touch is released.

Apps displays the Running Apps, Frequently used, Installed and Available for download categories. There are some interesting items listed, both as installed and available, including Amazon, eBay, Evernote, Pinterest, SoundCloud, Twitter, Wikipedia and YouTube. Obviously listed does not really imply available as well, so the situation might change over time before Ubuntu Touch is released. Then there is the status bar, which is a great concept but difficult to work with nicely on the go. I use my smartphone most of the time when I'm out of the house and then I find it difficult to nail the right swipe to see the battery or volume panel (accessible via status bar). Don't get me wrong, it's nice to have different panels for each icon in the status bar but not so great in real-life. I see this as a problem even when stationary for elderly people and people with big thumbs.

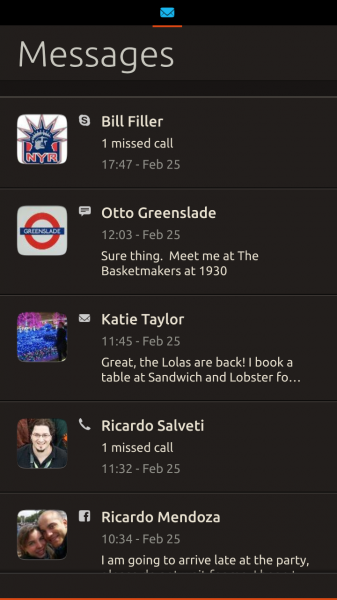

Then there is the status bar, which is a great concept but difficult to work with nicely on the go. I use my smartphone most of the time when I'm out of the house and then I find it difficult to nail the right swipe to see the battery or volume panel (accessible via status bar). Don't get me wrong, it's nice to have different panels for each icon in the status bar but not so great in real-life. I see this as a problem even when stationary for elderly people and people with big thumbs. Astonsoft Ltd has released

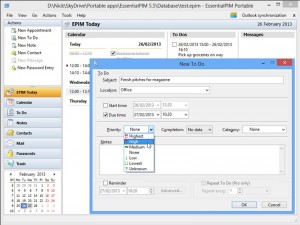

Astonsoft Ltd has released  EssentialPIM for Android users also gain new features with the 1.8.2 release. These include a new Calendar module, which now syncs with EssentialPIM’s own Calendar module in Android as opposed to the native Calendar app. At the present time, Agenda and Day views are available, with more to follow.

EssentialPIM for Android users also gain new features with the 1.8.2 release. These include a new Calendar module, which now syncs with EssentialPIM’s own Calendar module in Android as opposed to the native Calendar app. At the present time, Agenda and Day views are available, with more to follow. Today HP announced

Today HP announced

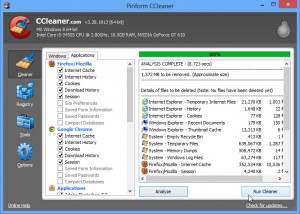

Piriform Software has released

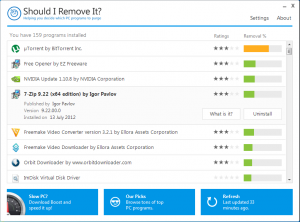

Piriform Software has released  Piriform has also announced that the next release of CCleaner will be version 4.0, which will add system monitoring tools and file-finding capabilities alongside a minor interface refresh and will also debut a brand new program icon. Piriform also claims to be finalizing details of other "exciting" releases. It appears these may be brand new tools to add to its stable, which currently include

Piriform has also announced that the next release of CCleaner will be version 4.0, which will add system monitoring tools and file-finding capabilities alongside a minor interface refresh and will also debut a brand new program icon. Piriform also claims to be finalizing details of other "exciting" releases. It appears these may be brand new tools to add to its stable, which currently include  In-app purchases are a lucrative revenue stream for both Apple and the developers who embrace it. It provides a way to try a game and then unlock the full thing, or gain access to additional features. In Temple Run 2, for example, you can use real money to buy coins and gems to use on unlocking new characters and abilities.

In-app purchases are a lucrative revenue stream for both Apple and the developers who embrace it. It provides a way to try a game and then unlock the full thing, or gain access to additional features. In Temple Run 2, for example, you can use real money to buy coins and gems to use on unlocking new characters and abilities. Although it's Tuesday, it's not "Patch Tuesday", which means we shouldn't expect any updates from Microsoft, but

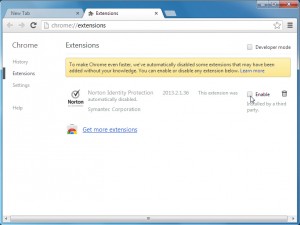

Although it's Tuesday, it's not "Patch Tuesday", which means we shouldn't expect any updates from Microsoft, but  If your browser has been taken over by an aggressive addon then you can try the standard routes to remove it (the "Manage Addons" dialog in IE, for instance). These can be confusing for beginners, though, and may not always work, so avast! has developed a custom

If your browser has been taken over by an aggressive addon then you can try the standard routes to remove it (the "Manage Addons" dialog in IE, for instance). These can be confusing for beginners, though, and may not always work, so avast! has developed a custom  It’s quite possible you might have nothing listed on the Summary page, of course, and in that case you can just click one of the left-hand tabs to view the addons for a particular browser. As we’ve mentioned, this can be done from within the browser anyway, but the Cleanup tool does have a small advantage: by default it excludes add-ons with a "good" rating, so cutting the list down to size and helping you focus on any potential threats.

It’s quite possible you might have nothing listed on the Summary page, of course, and in that case you can just click one of the left-hand tabs to view the addons for a particular browser. As we’ve mentioned, this can be done from within the browser anyway, but the Cleanup tool does have a small advantage: by default it excludes add-ons with a "good" rating, so cutting the list down to size and helping you focus on any potential threats. Accidentally deleting a partition seems like a major disaster when it first happens. Not only have all of its files disappeared, but you can’t even see that drive any more.

Accidentally deleting a partition seems like a major disaster when it first happens. Not only have all of its files disappeared, but you can’t even see that drive any more.

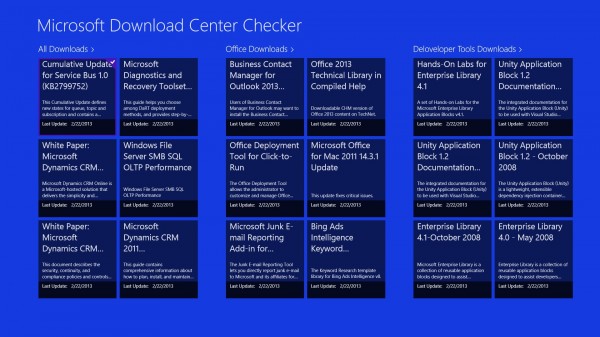

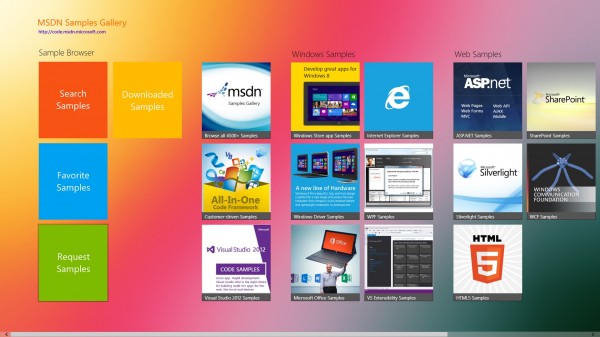

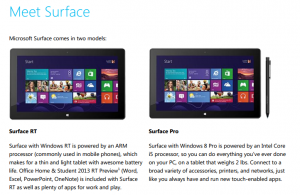

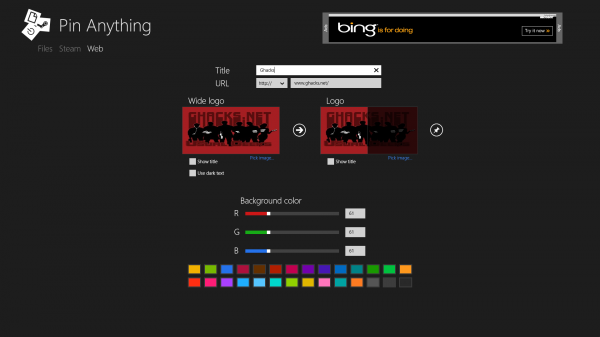

A week ago, Microsoft formally launched

A week ago, Microsoft formally launched

IT departments are picky -- I know from spending time in one during a previous life. However, Box, which still seems to be less-well known than rivals like Dropbox, is surprisingly more popular among large corporations. In fact, the cloud service boasts customers like computer giant HP. The company has also innovated a lot lately, with such offers as

IT departments are picky -- I know from spending time in one during a previous life. However, Box, which still seems to be less-well known than rivals like Dropbox, is surprisingly more popular among large corporations. In fact, the cloud service boasts customers like computer giant HP. The company has also innovated a lot lately, with such offers as

Today the festivities at Mobile World Congress in Barcelona, Spain kicked off. Nokia announced the new

Today the festivities at Mobile World Congress in Barcelona, Spain kicked off. Nokia announced the new

Nokia's augmented reality, map and navigation apps for Windows Phone just went through a name change, and now bear the

Nokia's augmented reality, map and navigation apps for Windows Phone just went through a name change, and now bear the  HERE Maps is similar to the built-in Windows Phone 8 Maps application. It uses the Nokia maps and features online and offline services and turn-by-turn navigation, the latter in over 90 countries according to the Finnish manufacturer. Where the two offerings, from Nokia and Microsoft, mostly differ is in nearby locations (Nokia calls them "places") such as hotels, restaurants, pubs (or bars depending on which side of pond you live) and shopping centers to name a few. Where Maps would show no results, HERE Maps provides a generous POI list (Point of Interest) in the close proximity to my location.

HERE Maps is similar to the built-in Windows Phone 8 Maps application. It uses the Nokia maps and features online and offline services and turn-by-turn navigation, the latter in over 90 countries according to the Finnish manufacturer. Where the two offerings, from Nokia and Microsoft, mostly differ is in nearby locations (Nokia calls them "places") such as hotels, restaurants, pubs (or bars depending on which side of pond you live) and shopping centers to name a few. Where Maps would show no results, HERE Maps provides a generous POI list (Point of Interest) in the close proximity to my location. On Monday, South Korean electronics manufacturer Samsung unveiled a new "end-to-end secure solution" aimed at boosting the company's

On Monday, South Korean electronics manufacturer Samsung unveiled a new "end-to-end secure solution" aimed at boosting the company's  If the latest Java security scares have persuaded you to ditch the technology forever, then removing it from your PC is normally straightforward. Java’s regular uninstaller should do the job in just a few seconds.

If the latest Java security scares have persuaded you to ditch the technology forever, then removing it from your PC is normally straightforward. Java’s regular uninstaller should do the job in just a few seconds. For all this, JavaRA didn’t always quite work as we’d like.

For all this, JavaRA didn’t always quite work as we’d like. Call me crazy, but I love Mondays. Why? Because there is a new AOKP build coming just in time to kick off my week. The team behind the popular custom distribution Android Open Kang Project did not disappoint this time around either. Jelly Bean MR1 Build 4 made its way onto our

Call me crazy, but I love Mondays. Why? Because there is a new AOKP build coming just in time to kick off my week. The team behind the popular custom distribution Android Open Kang Project did not disappoint this time around either. Jelly Bean MR1 Build 4 made its way onto our  Samsung might have received a

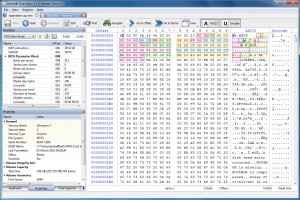

Samsung might have received a  If you’re confident enough with hard drives to have tried editing them before at the sector level, then you’ll know that most disk editing software is, well, less than helpful. Open a drive and you’ll generally be presented with a basic hex view of your data, then left on your own to figure out what it all means. And okay, it’s true, sector editors are only for the most knowledgeable of PC users, but even experts could benefit from a little help, occasionally.

If you’re confident enough with hard drives to have tried editing them before at the sector level, then you’ll know that most disk editing software is, well, less than helpful. Open a drive and you’ll generally be presented with a basic hex view of your data, then left on your own to figure out what it all means. And okay, it’s true, sector editors are only for the most knowledgeable of PC users, but even experts could benefit from a little help, occasionally. If you’re looking for particular data but aren’t sure where it is (the contents of a lost file, for instance), then a Find option will help you locate them. You can search for a specific ANSI, hex or Unicode sequence, and there are even options to use regular expressions or wildcards.

If you’re looking for particular data but aren’t sure where it is (the contents of a lost file, for instance), then a Find option will help you locate them. You can search for a specific ANSI, hex or Unicode sequence, and there are even options to use regular expressions or wildcards. The Lumia 520 boasts a 4-inch display, at a resolution of 800 by 480, and is powered by a 1GHz dual-core Snapdragon processor. It comes with 512MB of RAM and 8GB of internal storage. A Micro SD card slot will allow owners to boost this by a further 64GB, plus users get the standard 7GB of free online SkyDrive storage. The 520 also sports a 5-megapixel rear camera capable of recording 720p HD video. The device comes in the usual range of bright colors - yellow, cyan, red, white and black.

The Lumia 520 boasts a 4-inch display, at a resolution of 800 by 480, and is powered by a 1GHz dual-core Snapdragon processor. It comes with 512MB of RAM and 8GB of internal storage. A Micro SD card slot will allow owners to boost this by a further 64GB, plus users get the standard 7GB of free online SkyDrive storage. The 520 also sports a 5-megapixel rear camera capable of recording 720p HD video. The device comes in the usual range of bright colors - yellow, cyan, red, white and black. The mid-range Lumia 720 has a 4.3-inch display at 800 by 480 with a ClearBlack filter offering better outdoors viewing. It has a dual-core 1GHz Snapdragon CPU, 512MB of RAM, 8GB of internal storage and a microSD card slot. The biggest difference between this model and the 520 is in the photography department. The rear 6.7-megapixel camera has a f1.9 Carl Zeiss lens designed to let in more light, while the front-facing camera has a 1.3-megapixel HD wide angle lens which will allow self-shooters to pack more friends into the shot.

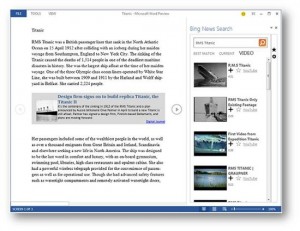

The mid-range Lumia 720 has a 4.3-inch display at 800 by 480 with a ClearBlack filter offering better outdoors viewing. It has a dual-core 1GHz Snapdragon CPU, 512MB of RAM, 8GB of internal storage and a microSD card slot. The biggest difference between this model and the 520 is in the photography department. The rear 6.7-megapixel camera has a f1.9 Carl Zeiss lens designed to let in more light, while the front-facing camera has a 1.3-megapixel HD wide angle lens which will allow self-shooters to pack more friends into the shot.

When you’re permanently connected to the internet via one device or another, then checking something on Wikipedia is very easy: just browse to the site, enter the topic and you’ll be reading more within seconds.

Microsoft's cloud service, Windows Azure, along with Team Foundation Service,

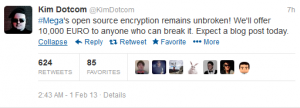

How's this for a helluva endorsement for Windows security over OS X? Today, Microsoft acknowledged falling prey to "similar security intrusion" as Apple and Facebook. They got nabbed by a Java exploit affecting Apple's OS.

What's the end of February without some scare tactics? Gartner warns that one-quarter of distributed denial of service attacks this year will be against applications. Really? That low? I'm surprised the number isn't higher. After all, as enterprises shore up the network perimeter, HTTP remains open wide enough to drive a freight train through and for that long duration.

Starting out as a rookie among veterans, in a matter of months Windows RT has transformed into an exciting and intriguing alternative to established tablet operating systems. The trigger for the frankly unexpected makeover is the jailbreak which allows enthusiasts to

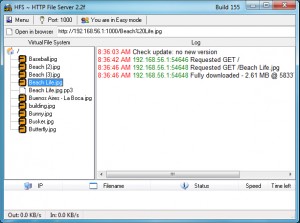

When you need to share files with others, setting up a web server probably won’t be the first idea that comes to mind. It just seems like too bulky a solution, too complex, and so you’d probably opt for something more conventional: setting up a network, using a file sharing service, whatever it might be.

And when you’re ready to consider what else you might need from a file sharing tool, there are plenty of options on offer. So you can password-protect particular files and folders, for instance. You might allow users to upload, as well as download files. There are various speed limits and controls to help ensure the program doesn’t tie up all your bandwidth. And there’s dynamic DNS support, a configurable HTML template, a custom scripting language, and lots of configuration settings to help get everything working properly.

There's a great saying that applies to new products -- get it while it's hot. Or shall I say, give it while it's hot. LG, sadly, is not familiar with either expression as the South Korean manufacturer has only now finally released the

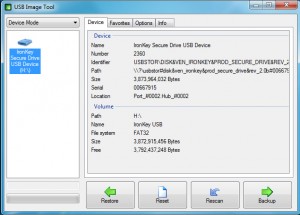

Alexander Beug has released

A new option to define the program’s buffer size may help improve performance, which could be important if you’ll now be using USB Image Tool to back up large USB drives.
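
To show why a buffer-size setting can matter when imaging a large drive, here is a rough Python sketch of a chunked copy with a configurable buffer. It is not USB Image Tool's implementation; the function name `copy_image`, the 1 MB default and the file paths in the usage note are assumptions for illustration only.

```python
def copy_image(source_path, image_path, buffer_size=1024 * 1024):
    """Copy source_path to image_path in chunks of buffer_size bytes.

    Returns the total number of bytes written. A larger buffer means
    fewer read/write calls, which may help throughput on large drives.
    """
    if buffer_size <= 0:
        raise ValueError("buffer_size must be positive")

    total = 0
    with open(source_path, "rb") as src, open(image_path, "wb") as dst:
        while True:
            chunk = src.read(buffer_size)
            if not chunk:  # empty read means end of input
                break
            dst.write(chunk)
            total += len(chunk)
    return total
```

Calling `copy_image("drive.bin", "backup.img", buffer_size=4 * 1024 * 1024)` would copy the hypothetical file `drive.bin` in 4 MB chunks; timing a few different sizes is the simplest way to see whether the setting makes any difference on a given machine.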

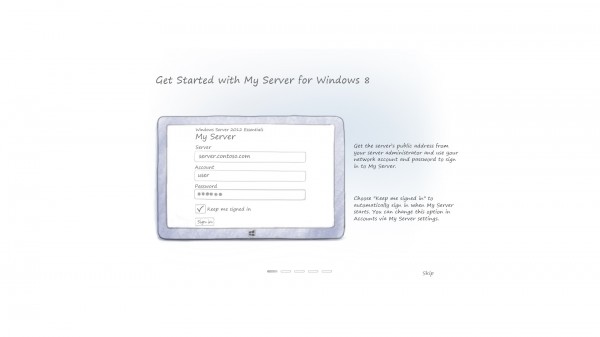

Let us get a bit geeky. This was not my original intention, but it is how things turned out in the end. First, I believe I misspoke twice in

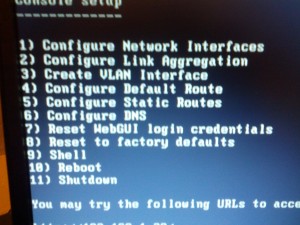

However, the 32-bit server architecture did not support 2012, meaning I moved on to Home Server -- that required 512 MB of RAM, and the server, woefully older than I had thought, only had 256 -- an easy upgrade, but expenses and wife-acceptance-factor for this project were mounting up.

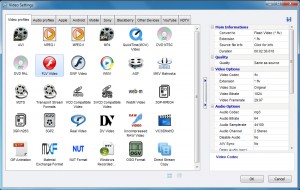

There are also some plugins that may interest you. Those can be found on the FreeNAS website. In fact, you can even hook up a network printer to the box, but it gets a bit complicated. Once volumes are configured and working then you are ready to start backing up and sharing files.

If you’re looking for a free video converter then there are now plenty of great free programs around, which is plainly very good news for the end user.

A "Tools" menu provides some useful processing options, allowing you to trim videos, join them, rip and burn video DVDs, and more.

The BBC’s iPlayer app is available for both iOS and Android, but owners of Apple devices definitely get the better deal with additional features, such as the ability to download shows to their iPhones or iPads for offline viewing.

Google has announced the release of

Elsewhere, Chrome 25 adds support for speech recognition via the Web Speech API, which means you could be talking to websites very soon. Once you’ve installed the new build then you can get a feel for how this could work at Google’s

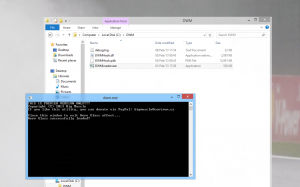

If Windows is proving particularly unreliable on your PC then that could mean a key operating system component has been deleted, or replaced. Fortunately, Windows File Protection (WFP) monitors your key system files, and if any are removed then it can automatically restore the original. And you can also use the System File Checker (sfc.exe /scannow) to manually check for and resolve any problems.

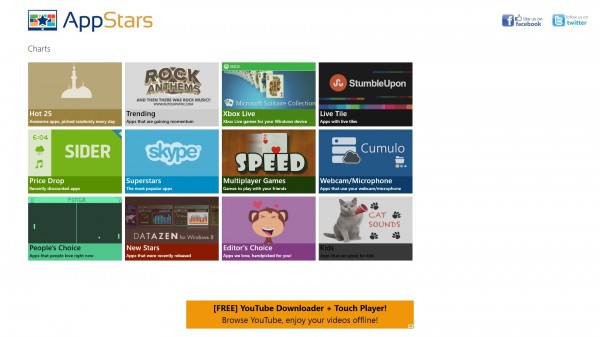

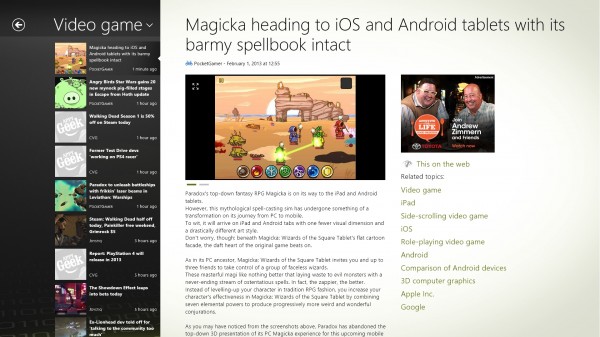

After unveiling the

A couple of days ago I described the Windows Store as being like a

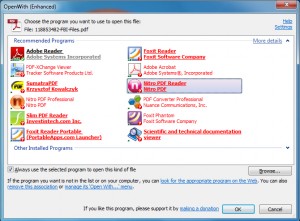

If you’re wondering how to open a particular file on your PC, then right-clicking it and selecting Open With may provide some options -- but only if you’ve already installed an application which can handle that particular file type.

There are plenty of programs supported here, at least for some file types. Try OpenWith Enhanced on a PDF file, say, and you’ll be told about Foxit Reader, SumatraPDF and Nitro PDF Reader, as well as commercial options like Nitro PDF Professional and Adobe Acrobat.

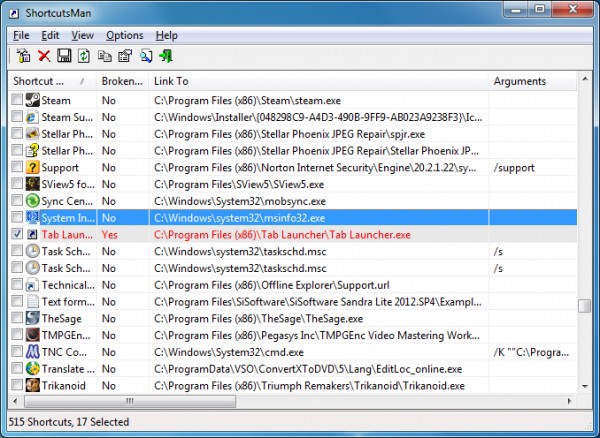

Qualcomm's

There are many ways to break a shortcut. Moving an important file might do it; manually deleting a program is another possibility; and of course too many uninstallers will leave application shortcuts behind. And because there’s no visible sign that a shortcut is broken it’ll just stay there, cluttering your system, until eventually you click it and discover the problem.

I do not generally use our desktop computer. I prefer my laptop, but my wife likes that desktop and uses it daily. She also keeps her precious files on it, and I have the folder set to back up to CrashPlan automatically, as well as to sync with the home server. However, she also uses a small four gigabyte USB drive for files -- I assumed ones that she just wishes to move around with her. I was wrong.

Open-source, cross-platform EPUB creator

Yesterday Apple rolled out

Select developers already have access to Google’s futuristic glasses, but now the search giant has launched a competition giving ordinary American citizens the chance to buy a pair before they’re launched, and become a "Glass Explorer" (as Google terms those "bold, creative individuals who want to help shape the technology").

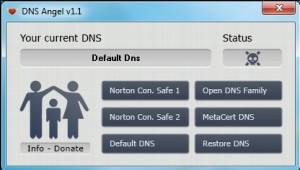

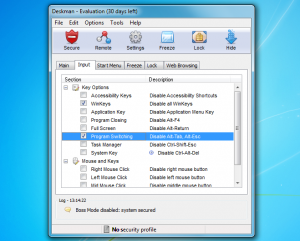

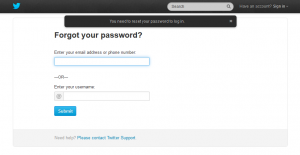

Parental controls software is normally bulky, complex, and the kind of application which can take some considerable time to configure. There may be lots of files to install, resident components which must always be running in the background, user profiles to create, content filters to customize, and the list goes on.

But if you do have any problems then clicking "Restore DNS" will restore your original DNS settings, while choosing "Default DNS" tells Windows to obtain your settings automatically (they might be assigned by your router, say).

I have to be completely honest -- I am not a fan of the default Android keyboard. For people like me who write in languages other than English on a day-to-day basis, it misses the mark entirely, and does not adapt to my writing style either. Ever since I bought my Galaxy Nexus only one Android keyboard has lived up to my expectations -- SwiftKey. And now there's a new version, and it's even better than ever.

Apple has released

Apple also promises that the update improves iTunes’ responsiveness when syncing playlists containing a large number of songs. The only documented fix applied is one that resolves issues whereby some users’ libraries weren’t showing their purchases from the iTunes store.

Spanish security company Panda Security Ltd has released