Channels

108470 items (108470 unread) in 10 channels

News

(48730 unread)

Hoax

(65 unread)

Software

(39066 unread)

Security

(1668 unread)

SEO

(18941 unread)

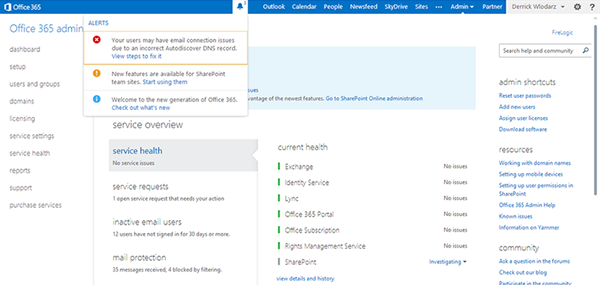

Items by Derrick Wlodarz

BetaNews.Com

SMB Wi-Fi done right: 7 best practices you likely aren't following

Published: January 9, 2019, 10:55am CET by Derrick Wlodarz

If there is one thing that doesn't shock me anymore, it's how prevalent incorrectly deployed Wi-Fi is across the small to midsize business (SMB) landscape. The sober reality is that Wi-Fi has an extremely low barrier to entry thanks to a bevy of options on the market. But a well-tuned setup that accounts for proper coverage levels, speeds, and client counts in a measured manner is almost as much an art as it is a science. That's why, more often than not, clients are calling us with an SOS to help save them from…

7 big mistakes to avoid when shopping for an SMB broadband ISP

Published: April 23, 2018, 1:12pm CEST by Derrick Wlodarz

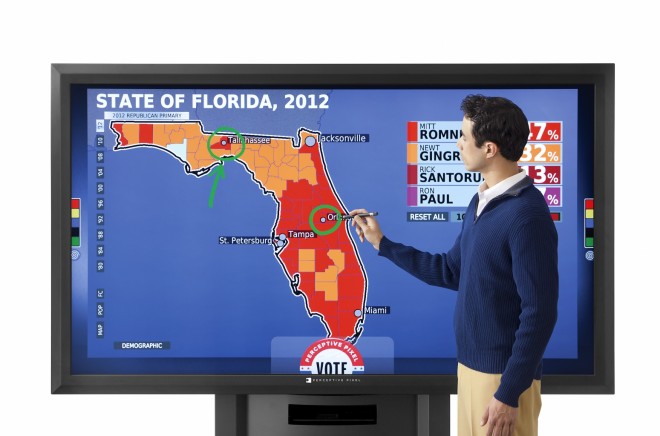

Let me clear the air right off the bat: most SMB owners are making more than one major mistake in their hunt for an Internet Service Provider (ISP). The big players in the ISP space love preying on the SMB market because it's so easy to oversell bandwidth, obfuscate the facts, and generally turn the process into a "smoke and mirrors" game that serves their advantage. I'll be the first to admit that broadband shopping is a tricky affair. Service areas for ISPs and their various offerings are as arbitrarily drawn as gerrymandered political districts in the US. Their sales reps…

Crony Capitalism: Zuckerberg and the never-ending stench of Facebook

Published: April 2, 2018, 11:17am CEST by Derrick Wlodarz

Taken at face value as an isolated incident, Facebook's most recent data leak allegations may seem like a plausible case of corporate malfeasance. But that gives Zuckerberg way too much credit, as someone who has been offered every olive branch possible by his global community of users, and yet has consistently, and awkwardly, dropped the ball each time. Are we dealing with a CEO who can't keep the wheels straight? Or is there more going on behind the veil than anyone wishes to admit? I've been pondering this question more and more recently. It…

Ditch the external: How I upgraded my Xbox One X HDD to a 2TB SSHD

Published: January 4, 2018, 9:29am CET by Derrick Wlodarz

Ever since game consoles first started shipping with internal hard drives, I've been fascinated by hardware once reserved for PCs rearing its head inside living room systems. The PlayStation 2 toyed with the idea of an expansion bay that could take an HDD, even though it was nearly useless for 99+ percent of games. But it was the original Xbox that finally shipped with a built-in HDD, making the concept a mainstay from then onward. This holiday I took the chance to treat myself to an Xbox One X to replace my original edition Xbox One. I've loved the Xbox…

The case against Net Neutrality: An IT pro's perspective

Published: December 14, 2017, 1:20pm CET by Derrick Wlodarz

As the FCC vote to determine the fate of Net Neutrality regulations looms, I've been taking a harder look at where I stand on the issue. Personally, I've got vested interests as a consumer who relies on many net-connected services in my daily life. And professionally, I own an IT business that lives and dies by the availability of countless net-centric ecosystems. But from every angle I examine the issue, I keep coming back to a common conclusion: Net Neutrality just isn't needed. Before you slam your keyboard and roll me in as some blind Trump…

Zuckerberg's #BLM rant and the dangers of corporate Thought Police

Published: April 10, 2016, 9:48am CEST by Derrick Wlodarz

Corporate leaders, especially those considered thought leaders, have a firm right and an assumed expectation to voice their opinions on public policy. As such, I've got no inherent issue with Facebook's founder and chief, Mark Zuckerberg, proclaiming his positions publicly, even if I happen to flatly disagree with them.

Mark and other leaders of their respective industries have something most others do not: the power of the pulpit. It gives their opinions a weight and reach that many could only dream of. Yet this advantage comes with a social responsibility not to abuse or otherwise misuse the privilege.

There are two subtle, yet very troubling, problems that stem from Zuckerberg's outright (and now quite public) shaming of coworkers who disagree with his political viewpoint on Black Lives Matter. And thanks to Gizmodo, where news of this story first broke, the tainted prism through which Zuckerberg's position is being framed says it all:

Mark Zuckerberg Asks Racist Facebook Employees to Stop Crossing Out Black Lives Matter Slogans

The first area of contention I have, which most of the media has either avoided or forgotten to address, is the notion that Zuckerberg's coworkers who don't see eye to eye with him on Black Lives Matter are, as Gizmodo insinuated, racists.

Not only is this characterization blatantly false and self-serving, but it's also a position on which a majority of Americans disagree with Zuckerberg. As public polling data (referenced below) shows, Zuckerberg's narrow views on BLM are in the minority, with most Americans, and most importantly even African Americans, not having a favorable view of the #BLM cause and preferring the All Lives Matter tagline instead.

The bigger issue at play is that this may be one of the more important recent examples of corporate figures crossing the line from acceptable political advocacy into outright, unwanted thought policing of their staff.

As the face of the largest social network in history, Zuckerberg risks taking his personal beliefs beyond corporate policy positions and instilling a culture of zero dissent among the ranks of his workforce. This blurring of the line between protected free speech and, say, a potential future Facebook Charter of Acceptable Free Speech could spell trouble not only for Facebook employees but also set a terrible precedent for workers in other, non-IT industries.

It sounds crazy, Orwellian, and perhaps far-fetched, but realize that this barrier is about to be crossed by one of the largest countries in the world: China. It comes in the form of a little-covered initiative known as the Social Credit System (SCS), which will become mandatory for all citizens of the state by 2020.

Some parts of the internet took aim at a chilling photo Mark released from his appearance at Mobile World Congress, where he surprised hundreds of guests while they were immersed in Gear VR headsets. A possible allusion to a modern, Orwellian future dictated by the whims of our IT industry leaders? That's up for interpretation, but some say such a reality may not be far-fetched.

Taking its cues from the American credit ratings system, this far-reaching program will not only take into account financial standing, loan history, purchasing history, and related aspects of personal life, but it goes one scary step further.

Relationships, gossip, social media presence, and political dissent will factor into this "rating" that each person gets. Just as the American credit rating system allows for the indirect control of unwanted behavior in financial decisions, China is hoping to keep its citizens' political thoughts and government opinions in check to a T. Yes, thought policing in its most acute and tyrannical form.

This is also the same country which still actively blocks Facebook access to nearly all citizens within its borders. It's not hard to believe that Mark Zuckerberg would love to expand his social empire into this untapped market.

And if that meant playing by China's rules, would he oblige? Would the SCS get unfettered Big Data taps into the never-ending hooks Facebook enjoys in its users' lives?

It's a question worth asking. Given Mark's recent propensity for directing intra-company censure at political issues he deems unworthy, where would the Facebook founder put his foot down?

Data Suggests Zuckerberg's #BLM Stance Doesn't Match America's

It can't be denied that Facebook's employees, just like the rest of society, represent America at large. Yet judging by the media coverage of Zuckerberg's blanket condemnation of those who disagree with the tagline Black Lives Matter, the assumption being made is that Mark either holds the majority viewpoint or that Facebook's staff exists in a vacuum. Neither assumption could be further from the truth.

That's why publicly available data, which has been widely distributed online, is critical to establishing that these workers weren't "fringe" activists in any way. Judging by the polling data released, a wide majority of Americans don't see eye to eye with the name or the motives of Black Lives Matter.

Numbers released by Rasmussen last August captured the mood of America at large when it comes to #BLM. An overwhelming 64 percent of black respondents said that, contrary to Zuckerberg's beliefs, All Lives Matter, not Black Lives Matter, was the phrase that more closely matched their views. The same held true for 76 percent of other, non-black minority voters, as well as 81 percent of whites.

Even further, 45 percent of all those interviewed believe that the justice system is fair and equal to black and Hispanic Americans. And perhaps most shocking, a resounding 70 percent of voters believe that crime levels in low-income, inner-city communities are a far bigger issue than police discrimination against minorities.

It's not surprising that inner-city crime ranks so high among the concerns of average Americans. Inner-city death tolls are shoveled onto local news segments daily, with such frequency that most people are numb to their dire meaning. And most acutely, much of this crime epidemic takes the form of "Black on Black" crime, as DeWayne Wickham of USA Today has written.

Wickham says:

While blacks are just 12.6 percent of the nation's population, they're roughly half of people murdered in this country each year. The vast majority of these killings are at the hands of other blacks.

Wickham goes on to question the #BLM movement's broader response, including its refusal to discuss black-on-black crime:

Why such a parsing of contempt? Maybe the people who've taken to the streets to protest [Trayvon] Martin's killing don't care as much about the loss of other black lives because those killings don't register on the racial conflict meter. Or maybe they've been numbed by the persistence of black-on-black carnage.

As such, the credibility of the #BLM movement continues to dissipate in the eyes of Americans. A follow-up Rasmussen study of 1,000 likely voters, conducted in mid-November 2015, tracks the public's dismay with the group's approach and message.

According to the numbers published, by a 2-to-1 margin, voters don't think #BLM supports reforms to ensure all Americans are treated fairly by law enforcement. A further 22 percent of those surveyed just "aren't sure" whether the group truly supports its stated goals.

And on the question of whether blacks and other minorities receive equal treatment from authorities, the share of those who believe this is true fell only one percentage point from the August 2015 study, to 44 percent.

A full 30 percent of African American voters said that #BLM doesn't support reforms, with another 19 percent not being sure. A sizable 55 percent of whites said the same about #BLM.

While the racial makeup of Facebook's employee base has been mentioned numerous times in the media recently, it has little bearing on whether there was just cause for Zuckerberg to so publicly throw his staffers' political viewpoints into the spotlight and frame them as malicious or, metaphorically, racist.

As the numbers above show, the overwhelming majority of the American public, including black Americans, considers itself better represented by All Lives Matter. Why are Zuckerberg's personal will and viewpoint more important than those of his coworkers and, more importantly, of America at large? It's a question I just can't find a reasonable answer for.

Let's make this very clear up front: while Zuckerberg framed his wrath around the idea that coworkers were espousing racist feelings by replacing #BLM markings with the more inclusive #ALM alternative, that mischaracterization shouldn't obscure the larger issue. Mark has a personal, vested interest in pushing his own beliefs onto Facebook workers. This would never have become such a story if he had allowed public discourse to occur on its own, just as it does outside the protected walls of Facebook's offices.

While scouring the web to make sense of Zuckerberg's interpretation that Black Lives Matter inherently, actually, really means All Lives Matter, I found numerous online discussions trying to justify how BLM places black deaths on a pedestal while supposedly being careful to stay inclusive, as its supporters duplicitously claim.

This roundabout, purposefully misguided framing of what most of America finds to be a divisive name is what naturally gives rational thinkers like myself cause for alarm. Why would these people, including Zuckerberg judging by his widely publicized reaction, not prefer the term that Americans at large say represents them best: All Lives Matter?

Some say actions speak louder than words, and BLM has repeatedly been guilty of deeds that run counter to its stated goals of equality. Take, for example, the Nashville BLM chapter's attempt to take a page out of the segregation era by hosting a color-only meeting of its members at the Nashville Public Library. Mind you, this happens to be a taxpayer-supported venue and, as such, open to the entire public, regardless of color.

The Nashville BLM chapter leader, Josh Crutchfield, didn't deny that their branch has this rule. "Only black people as well as non-black people of color are allowed to attend the gatherings. That means white people are excluded from attending".

The chapter, naturally, blamed the Library's decision to keep the meeting open to all on white supremacy in the local government. If that reasoning makes any sense to the average person who may support BLM's objectives, I'd love to hear how. That a taxpayer-funded establishment couldn't restrict entrance by color in the year 2016 is quite a disappointment for Nashville BLM. I'm perhaps more surprised that the media hasn't picked up on this story to a greater degree.

In a similar display of rash white shaming and public berating of innocent bystanders, BLM protesters held an impromptu rally inside the Dartmouth College library, with hooting and chanting in tow.

Protesters were reportedly shouting “F– you, you filthy white f–-” “f– you and your comfort” and “f– you, you racist s–". The college newspaper went into detail about the incident and the kinds of abuse that innocent students, who had taken no opposing position, had to endure.

Is this the movement and approach that Zuckerberg so proudly decided to stand behind from his pulpit? While the rest of America judges BLM as a whole, actions included, Mark is seemingly blinded by the idealism he perhaps wishes to establish as Facebook's public policy stance.

To cap off the discussion of BLM's shortcomings, I found the words of 1960s civil rights activist Barbara Reynolds to provide real clarity on the issues at play. She wrote a lengthy op-ed on how BLM has the right cause but absolutely the wrong approach:

At protests today, it is difficult to distinguish legitimate activists from the mob actors who burn and loot. The demonstrations are peppered with hate speech, profanity, and guys with sagging pants that show their underwear. Even if the BLM activists aren’t the ones participating in the boorish language and dress, neither are they condemning it.

If BLM wants to be taken seriously as the bearer of the message it is trying to convey, it needs to ditch the white shaming, hate rhetoric, and brazen acts of violence. Most importantly, it needs to distance itself from the distasteful actions taken by sizable portions of its base. Sitting idly by without denouncing bad behavior committed in the name of a movement tars the movement at large. Perhaps only then would Zuckerberg have a leg to stand on when it comes to upholding the right and just side of history.

To its likely dismay, BLM also has to contend with the fact that Ben Carson, the only African American who was in this year's presidential race, agrees that All Lives Matter more accurately reflects his own sentiment. "Of course all lives matter -- and all lives includes black lives," Mr. Carson said. "And we have to stop submitting to those who want to divide us into all these special interest groups and start thinking about what works for everybody".

Thought Policing Goes Digital in China: A Model for Zuckerberg?

If you're interested in getting a feel for the direction of an organization, or a country for that matter, just look at the stated motives of those in charge. Seen through this prism, China's goals for the Social Credit System overlap noticeably with Facebook's own mission statement.

Per the Facebook Investor Relations website, Zuckerberg's corporate intentions for the company are quite simple:

Founded in 2004, Facebook’s mission is to give people the power to share and make the world more open and connected. People use Facebook to stay connected with friends and family, to discover what’s going on in the world, and to share and express what matters to them.

It's interesting, then, to read the stated intentions behind China's proposed SCS, as put forth by the State Council (per New Yorker):

Its intended goals are "establishing the idea of a sincerity culture, and carrying forward sincerity and traditional virtues," and its primary objectives are to raise "the honest mentality and credit levels of the entire society" as well as "the over-all competitiveness of the country," and "stimulating the development of society and the progress of civilization".

The words chosen for each directive's goals are markedly different, but their larger motives are much the same: making the world more connected and better as a whole.

Yet for the cautious outsider, it's not difficult to connect some logical future dots between Facebook and a state-imposed SCS like the one China is rolling out in 2020. Per the New Yorker piece on how one Chinese company is molding its own variant of an SCS, "Tencent’s credit system goes further, tapping into users’ social networks and mining data about their contacts, for example, in order to determine their social-credit score".

It's no secret that China employs one of the grandest firewalls in the world, blocking its millions of users from "sensitive" topics deemed unhealthy and politically off-limits by the State. Facebook's website sits on this massive blacklist... so far. A little negotiating between Mark and the Chinese authorities may change matters quickly.

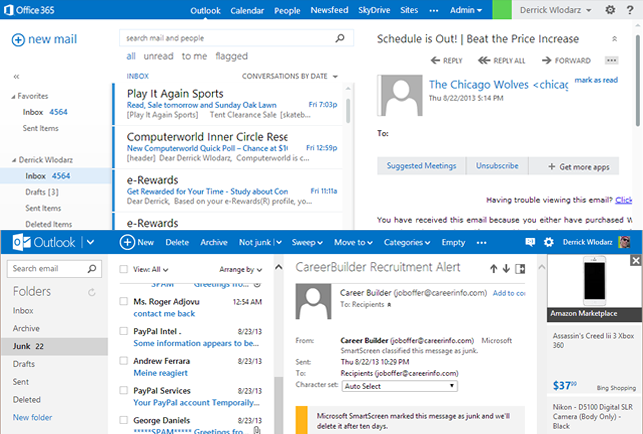

The SCS trials are already live in China, with users having access to mobile apps (one shown above) that gamify the rating process. The various tenets that make up one's score (financial dealings, judicial rulings, mutual contacts, political dissent, and more) are all built into the algorithms being used. It's no secret that Facebook APIs feeding this data-mining machine would be digital gold to the ruling Communist Party in suppressing freedom of thought. (Image Source: ComputerWorld)

Is it far-fetched to believe China would grant Zuckerberg passage onto the whitelist if only he agreed to let the eventual SCS tap into any data point Mark's datacenters can store and organize?

Let's not forget the lengths to which Mark will go, dressed up as goodwill, just to gain a few Facebook users. His company was most recently embarrassed when its Internet Free Basics phone service was barred from India and Egypt because of its hypocritical stance on net neutrality. Zuckerberg's vision of connectivity is firmly seated in an experience that puts Facebook front and center, which Internet Free Basics made no effort to hide.

Mark's critics on Internet Free Basics were fired up enough to send Zuckerberg an open letter backed by 65 organizations across 35 countries. What connects the SCS situation to this latest corporate blunder is one very critical point: Mark's company will go to great lengths to ensure global penetration.

And having Facebook blocked in China is likely a very troubling, omnipresent problem for the company. Any kind of legal access for Facebook on the mainland would, in some way, require its willingness to cooperate with the SCS. If the two are ever to become bedfellows, it's hard to see this ending in any other outcome.

The more important question here is whether Zuckerberg would have the moral resolve to keep Facebook out of China if SCS access were demanded in exchange. It's no secret that Mark's obligation to enhance Facebook's bottom line trumps much of his policy direction.

His company is at the forefront of the H-1B visa debate, arguing that more skilled foreign labor is needed to fill talent shortages here in America, something that I, as someone who employs people in the IT industry, believe is a big fat falsehood. As others have pointed out, the numbers behind the argument don't add up, and Facebook is simply disguising a desire for cheap foreign labor.

How dangerous could Facebook's cooperation with the SCS in China be? For average Americans, at least in the short term, honestly not very. The dictatorship in China will tweak and hone the SCS, sucking Big Data out of the likes of Facebook for years to further repress those of its own people who choose to express a free political will. That's always been the big unspoken agreement in Chinese society: you can have a grand Western-style capitalist economy, but don't dare import any of those democratic free speech principles.

But the larger moral dilemma at hand is how Zuckerberg could use the experiment in China to encroach on Facebook users, or staff, closer to home. The title of this article alludes to the potential rise of corporate Thought Police. Such a reality doesn't seem so far-fetched when the technology to enable it is almost there, and bigwigs like Zuckerberg are showing more willingness to push top-down political agendas.

Zuckerberg isn't shy about the kind of society he wants to foster. Giving us Facebook was the beginning, but what does the next step look like? I believe controlling and censoring the medium may be a logical next step. (Image Source: Yahoo News)

And I'm by no means the only one sounding the alarm about this possible reality. In the wake of Mark's BLM outburst, scathing piece after scathing piece came out against the notion of Thought Police encroachment by Mark or other social media behemoths.

Such a reality would likely begin with unpublicized cooperation with the Chinese state in implementing API hooks between Facebook and its SCS. Facebook would then naturally gain technical insight into how China culls and acts on the data gathered and, more importantly, a precedent for such a civil liberties dystopia.

While there is no automated approach to cleansing Facebook's corporate office whiteboards, lessons learned from a potential SCS implementation with China could very well help Facebook turn such technology against its own staff's Facebook accounts. Eradicating staff discourse that goes against Mark's approved positions would allow for testing broader US-based social media censure, and it could be twisted in such a way as to promote adherence to Facebook's corporate mission statement.

If trials went well, could we expect a rollout to all Facebook users? Again, if the logic established above holds, such a rollout wouldn't be far behind.

It's a dangerous dance to imagine; an ironic reality where the supposed leading platform in internet free speech would become the forefront bastion of political censorship and cleansed open discussion.

Here's hoping such a reality never comes to fruition. But if Mark's now public BLM outburst and subsequent staff shaming is any indication, beware a frightening future of social media that becomes curated by topical whitelists dictated by corporate Thought Police.

Dissenting opinions need not apply.

Headline Image Source: Cult of Mac

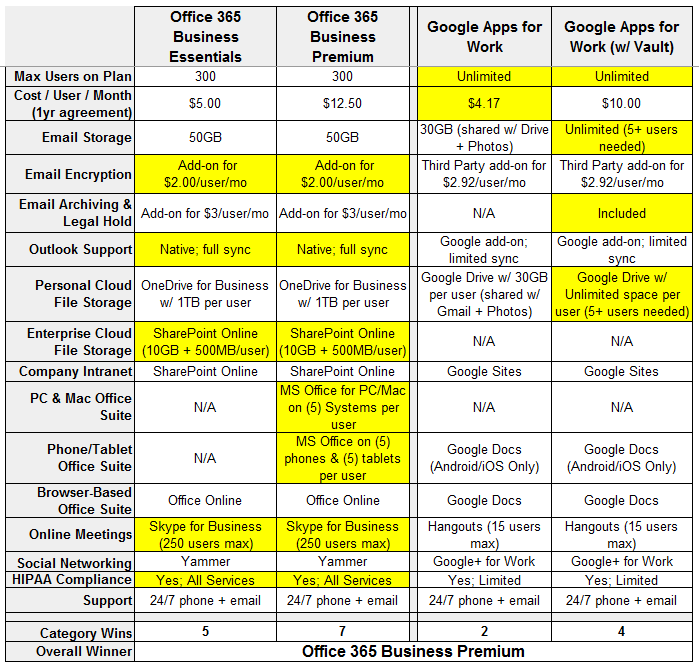

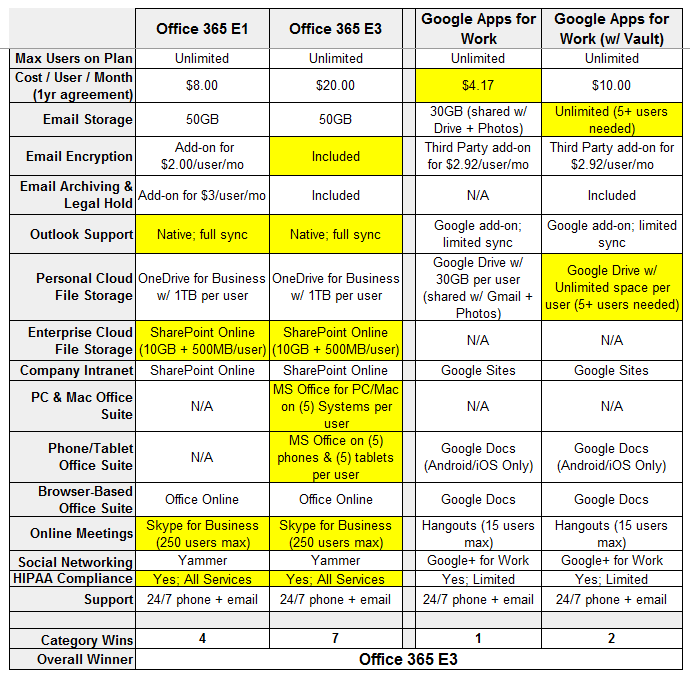

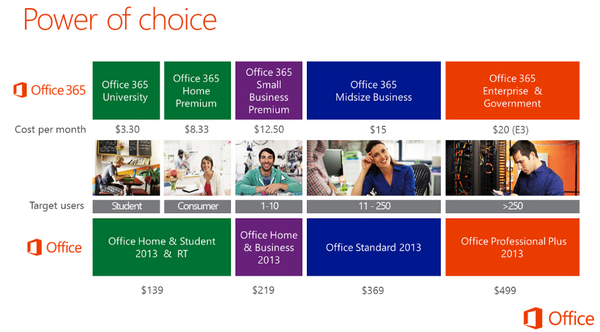

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with more than eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on hot technologies such as Office 365, Google Apps, and cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.

Why Apple's shameless fight with the FBI is all about ego, not just cause

Published: February 22, 2016, 11:57am CET by Derrick Wlodarz

After spending the last few days soaking up as much as possible on the Apple-FBI San Bernardino iPhone spat, the evidence -- in my eyes -- has become crystal clear. Apple's planted itself on the wrong side of history here for numerous reasons, and is using nothing less than a finely scripted legalese tango in defending its ulterior motives.

As a part-time, somewhat auxiliary member of the tech media at large, I'm a bit embarrassed at how poorly this story has been covered by my own colleagues. Many of those who should undeniably have a more nuanced, intricate understanding of the technical tenets being argued here have spent the last week pollinating the internet with talking-point, knee-jerk reactions.

Inadvertently, this groupthink is carrying Apple's misguided arguments to a populace that otherwise relies on the tech media's prowess in unearthing the truth in such matters. This is one case where technical altruism is obscuring the real story at play: Apple's design flaws, in other words inadvertent security bugs, found in the older iPhone 5c.

For those who haven't kept up with this story, there's a great primer on where the Apple vs FBI situation stems from and its core facets: ZDNet's Zack Whittaker has an FAQ post that digs into the major topics at hand in an easy-to-understand manner. No need to retread already covered ground in this post.

Apple's Framed Narrative in Twisted Prism of Encryption

The tech media is writing story upon story that mentions supposed backdoors the FBI is requesting, such as "master keys" that could potentially unlock any encrypted iOS device. While there are far too many media stories I could link to that spread such misinformation, I'll point to posts like this one on Venturebeat and even coverage on the usually judicious podcast This Week in Enterprise Tech (episode 177 is where the Apple/FBI case was dissected at length).

While this self-absolving narrative makes its rounds, let's not forget where it all began: with Apple itself, in its now famous open letter published on Apple's website and signed by none other than Tim Cook himself. And for that, shame on him.

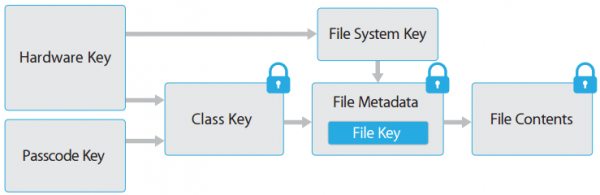

Many in the media have mistaken this for a case about phone encryption, due to Apple's framing of the discussion in that light. In reality, the FBI is merely asking Apple to help create a special iOS firmware for a single iPhone 5c that would disable the forced wipe after 10 failed entries and alter the timeout delay between entries. Apple's attempt to sway the narrative leads me to believe it is more concerned about corporate image than public safety. (Image Source: Mercury News)
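The caption's key point is that the forced wipe and the delays between entries, not the encryption itself, are what keep a short passcode from simply being guessed. The rough Python sketch below estimates how long trying every numeric passcode would take once those protections are gone. It assumes roughly 80 milliseconds per attempt, the per-guess key-derivation cost Apple's iOS security documentation has cited; actual timing varies by device and by how guesses are submitted, and the helper `worst_case_seconds` is purely illustrative.

```python
# Back-of-the-envelope estimate of brute-forcing a numeric passcode once
# the auto-wipe and inter-attempt delays are disabled.
# ASSUMPTION: ~80 ms per guess, the key-derivation cost Apple's iOS
# security documentation cites; real figures vary by device and method.
SECONDS_PER_ATTEMPT = 0.08


def worst_case_seconds(digits: int, seconds_per_attempt: float = SECONDS_PER_ATTEMPT) -> float:
    """Time to try every one of the 10**digits numeric passcodes (hypothetical helper)."""
    return (10 ** digits) * seconds_per_attempt


if __name__ == "__main__":
    for digits in (4, 6):
        total = worst_case_seconds(digits)
        print(f"{digits}-digit passcode: {total / 60:.1f} minutes ({total / 3600:.1f} hours)")
```

Under these assumptions, every 4-digit passcode could be tried in about 13 minutes and every 6-digit one in about 22 hours, which illustrates why the request centers on the wipe and delay rather than on the encryption itself.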

I know very well that as the leader of a massive publicly traded company, Cook has a duty first and foremost to his most critical stakeholders: Apple shareholders. But the finer point Apple forgets in its shameless fight with the FBI is that the sacred tenets of American democracy and capitalism are what allowed his firm to grow to such unprecedented levels. A free society has to strike a careful balance where security and privacy meet.

The FBI is not asking for any kind of encryption "master key" here, let's be very clear. Such a request would overreach the separation that is required to keep the data of the masses secure. And it would be a request that I, as an IT professional and, more importantly, a member of this society, would firmly oppose.

But this is not what has been asked of Apple, and not what's at stake for the company. Apple's stand is driven by a PR objective: protecting its ego and its image in the thing it preaches so dearly, security.

FBI's Request Indirectly Forces Apple to Admit iPhone 5c Insecurity

If you're curious how I came to such a conclusion, feel free to read the same well-written, lengthy exposé on this situation that convinced me, with clear technical validation and reasoning rather than purely emotional, knee-jerk reaction. The post is on the blog of Trail of Bits, a company with noted deep expertise in security research.

Much of my stance on the subject is also reflected in Mark Wilson's post from a few days ago right here on BetaNews. Even Trevor Pott of The Register penned a rather wordy but pointedly accurate piece that confirms the theory the Trail of Bits blog post puts forth.

"What appears to be involved is a design flaw. Something about the iPhone 5C in question is broken," says Pott in his Register article. That's right, a design flaw which happens to be the complete lacking of the "secure enclave" which is detailed at length in the Trail of Bits blog piece.

If this were an A7-powered or newer iPhone, the FBI's chances of getting in without asking for the dreaded Pandora's box "master key" (which doesn't exist) would be next to zero. But Apple never included this security facet on its earlier phones, and herein lies the very nuanced tenet of what the FBI truly wants to be able to leverage.

The FBI doesn't want, and has never asked Apple for, any kind of master key. It's asking for mere assistance in engineering its way around a known security flaw in Apple's iPhone 5c: the device doesn't tie PIN entry and authentication to the internal data through a Secure Enclave. While Apple won't admit as much, this is very much a security flaw that Apple will never be able to fix for iPhone 5c owners, and naturally, Apple has every intention of re-architecting the argument to keep this criticism from reaching critical mass.

And even more acutely, the FBI and Justice Department aren't asking Apple to make this available for "all" future iPhone 5c devices recovered in the course of policing. The DOJ says Apple is free to "keep or destroy" this special firmware once it has served its purpose for the FBI's request. So Apple's consumer-focused defense that it is being asked to "hack its own users" is just another attempt to misconstrue the real intentions of law enforcement here.

One important fact which some media outlets have glossed over is that the iPhone in question was not even the shooter's personal phone. It was a work-issued device handed out by the San Bernardino County Department of Public Health, and as such is considered employer property, with all the accompanying rights employers have over the data stored on those devices.

Apple's A7-powered and newer iOS devices all employ an internal lockbox known as the "Secure Enclave," which brokers access to the encryption keys used to access user data. The iPhone 5c lacks this very component, which makes getting into the San Bernardino shooter's iPhone very possible for the FBI -- and technically proven feasible by security experts. But Apple's ego, partially built on an image of security, naturally forces its hand in trying to trump the FBI's request. (Image Source: Troy Hunt)

I'm not here to use Apple as a pincushion; the industry at large needs to double down on backing up its words about security with actions. But Apple deserves heat here, not only because it's putting shareholders above national security, but because it has previously been guilty of trumpeting "security through obscurity," as I've covered at length in previous posts.

Any reasonable technology company is going to have bugs and defects in its devices and code. That's the nature of the beast, and understood by IT pros like myself. But Apple has built an empire in part through clever marketing teams that have flaunted layers of security which supposedly exceed those of any competing products.

Sometimes Apple is in the right, and marketing matches reality.

But many times, as with the now-dead claim that OS X doesn't get malware (which I fought against for years), Apple put greenbacks before its responsibility to be honest about its software and device capabilities. And while the end of the famous "I'm a Mac" advertising campaign signaled a more subdued competitive stance on the OS X front, Apple's big moneymaker isn't desktop computers anymore; it's iPhones, which it sells by the millions.

How does this tie back to its reluctance to work with the FBI? Very simply, doing so would amount to admitting that the iPhone 5c indeed has the security flaw which the FBI and the industry have exposed. And the problem for Apple is that it has created an ego bubble, one its fans have bought into, with security as a notable keystone.

If that keystone falls here, Apple is back to square one in winning back fans who place i-Devices on a pedestal most other manufacturers only wish they had.

Put in other words, it's Apple's ego at stake here. And it takes that very, very seriously if you haven't noticed.

Apple Has an Undeniable Duty to the Society it Built its Fortunes On

We've clearly established some black-and-white facts surrounding this situation, based on everything I've linked to above:

- This is not a debate or court order surrounding any kind of encryption "backdoor" or "master key."

- The FBI is asking for targeted access to a single iPhone based on a design flaw which has been exposed.

- Apple has the proven technical ability to render this special firmware locked to the iPhone in question.

- Apple has the court-ordered right to perform the procedures needed in its own facilities, and destroy the software created once complete.

As such, I'm convinced beyond any doubt that the means to get into this phone exist, and can be applied in a way that protects the universe of iPhone users at large from massive data grabs by legal overreach. Apple's refusal to help the FBI, as described earlier, is not grounded in technical validity; it is driven by a corporate ego that has grown too large for its own good.

Apple's feel-good, impenetrable stance on its device security is at risk of being exposed to the masses. For a company that has built its fortune on peddling a larger-than-life notion of its own security prowess, this would spell downright disaster in the marketplace, especially given its newfound re-emergence in the previously reluctant Enterprise market.

But let's go beyond profitability reports and corporate egos, as the larger issue here is the duty Apple owes to the citizens of this very nation: a country that now needs a compassionate about-face from Apple so we can connect the dots on a terrorist situation. Doing so would not only help explain the events leading up to the San Bernardino massacre, but would likely expose critical nuggets of information about other future plots or combatants.

Apple's attempt to paint this discussion in a sea of technicalities and promotion of the privacy of its users at large falls on its face when the facts are dissected in sunlight. If that very sunlight means Apple's design flaws must be vaulted into public discussion, so be it. That's the duty it owes its users: assurance that its designs are not merely existing in a lab -- but being tested, sifted, and penetrated to make future generations of hardware better on the whole.

While our democracy has historically opposed gross intrusions of privacy, as seen in the opposition to ad-hoc phone record dredging, we cannot become blind to a common-sense approach toward nuanced security needs. Companies and advocates like Apple will try to smokescreen their intentions with public decrees like Tim Cook's blanket position on privacy, but Apple's own future has just as much at stake if terrorists can use an over-extended privacy veil as their own.

The day the Justice Department calls for blanket encryption "master keys" from Apple is the day I will stand with Apple. But that day is not today, as Apple has not been, and is not being, asked for as much.

Do the right thing, Tim Cook. Your company enjoys prosperity through the same democratic society that is pleading with you to put the future of our nation ahead of personal or corporate motives.

If future deaths could have been prevented directly through the data on that iPhone 5c you refuse to help unlock, what kind of responsibility will fall on Apple's shoulders? Only history will be the judge of that.

Image Credit: klublu/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with more than eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on growing hot technologies such as Office 365, Google Apps, cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.

8 big reasons Apple should let Mac OS X onto the PC

Published: October 5, 2015, 2:24pm CEST by Derrick Wlodarz

As an IT professional by day, I've been confounded by this question for some time. I've tossed it around in my technical circles, trying to get a feel for the true reasons behind Apple's double standard of not allowing OS X onto other platforms -- while gladly allowing Windows to run natively via Boot Camp.

How come Apple doesn't allow PC users to install and run OS X on the hardware of their choice?

I know very well there are business reasons it doesn't allow it, and that the company has legal restrictions in place to prevent it from happening. But that doesn't answer the why I'm digging at; financial and legal restraints are merely artificial boundaries around something that is otherwise quite feasible, as I'll prove below.

Apple makes a lot of money on the hardware it sells with each OS X system, and it is a corporation, so the math adds up here. It has a moral obligation to shareholders to maximize profits for the business. And as such, it has constructed licensing legalese to help keep the kingdom of Apple computers strong.

But I wanted to step back and take a more holistic, almost philosophical approach to this debate. One that takes into account consumer choice, hardware innovation, technical feasibility, and other points of interest that may or may not have been tossed around.

So that I can get it out in the open, I'll fully admit my curiosity on this subject stems from the same personal objections that explain why I have never purchased an Apple computer. Some would conclude that this makes me an Apple hater, but that's merely a convenient way for Apple loyalists to paint me as someone whose opinion has no merit. How wrong they are.

I'm a tinkerer at heart, and can't stand the closed nature of the hardware around Apple's computers. Likewise, I've never been satisfied with the limited choice Apple affords buyers of its computers. It has always adhered to a Henry Ford-esque mentality when it comes to choice, which goes against every grain of my consumer free will to gravitate toward more options, not fewer.

And perhaps my biggest objection to Apple has always been a philosophical one, stemming from my dislike of the crux of what supports Apple's OS X computer business: a reluctance to allow OS X onto anything other than Apple-branded hardware. I'm a firm believer in keeping my dollar vote strictly aligned with companies that see eye to eye on things like consumer choice, software freedom, and price competition.

When it comes to these areas which I hold dear, Apple has never satisfied. As such, I've chosen to stay away from its products, which is my option as a consumer.

I know I'm not alone in questioning Apple's long held business practices. PCMag has covered the topic in the past, and online forum goers frequently opine on the merits of Apple's ways. Judging by online commentary, a big portion of Linux users stay on that platform because they refuse to allow Apple to control their system of choice.

Others, like Richard Stallman, go much further in outlining the reasons they refuse to buy Apple, covering things from its reliance on proprietary screws on devices to its love of DRM on most items sold in its online media stores.

For me, as an enthusiast and IT professional, I believe that Apple allowing OS X onto PCs would be a big move in showing the goodwill needed to win back lost trust from people like myself.

Would it happen? Could it happen?

Here's my top list of reasons why it definitely should happen.

8. Isn't Apple's Current OS X Stance Hypocritical?

Apple has no shame in saying one thing and doing just the opposite when it suits its interests. The most recent example of this blatant double standard is its introduction of an aptly named "Move to iOS" app on the Google Play store, aimed at -- you guessed it -- converting the Android faithful over to Apple land.

Numerous outlets pointed out the hypocrisy of this shameless maneuver, seeing that Apple matter-of-factly rejects any App Store submission which merely mentions another mobile operating system. Its official App Store submission policy makes no effort to hide this.

Which raises the obvious question: how come Apple has no problem with gladly helping users get Windows to work on its own machines, but refuses to budge on allowing OS X onto PCs? Wouldn't this be the fair, honest approach Apple could take to show its commitment to goodwill and a betterment of the technology world?

Its marketing department has tried to claim as much, if not in these exact words, over the last decade or so. In my eyes, this would merely be an extension of its already established corporate mantra.

The Apple faithful see no issue with this, but as someone who has been deeply entrenched in this industry for a decade now, I've always wondered why no one has the audacity to call Apple out on arguably its biggest double standard.

The fruit logo company shows a similar disparity of opinion when it comes to technology patents. Over the last decade, Apple has repeatedly called out other companies (Samsung, Microsoft, others) for outright copying the "hard work" it invested in bringing certain items to market. Yet when caught on the receiving end of such complaints, Apple insinuates that the patent system is "broken".

And on the political front, Tim Cook's outspoken stance on gay rights in the USA contrasts sharply with what he has refused to say on the global stage. There's economic convenience in Cook's obsession with gay rights pertaining only to the USA, because a large portion of the global markets Apple sells in have atrocious records on gay rights and women's rights, as Carly Fiorina pointed out. Tim Cook knows full well that causing too much of a stir in many of these Middle Eastern and Asian markets would spell catastrophe for Apple sales there.

It's no secret that Apple is now looking to make inroads even in Iran, where gay people can legally receive the death penalty for their "crime". Where's the outcry from Apple's loyalists?

Time and time again, Apple has shown no reluctance to take whatever stances bring the best return on Cupertino's dollar, even if it means blatant hypocrisy in keeping such positions, whether on OS X on PCs or on gay rights.

7. OS X Already Runs on (Mostly) Standard PC Parts

Apple has been on an upward trajectory when it comes to using standard PC parts ever since it started shipping Intel-based Macs in place of the horrid PowerPC platform in 2006. This wasn't always the case. The 1990s were replete with Macs that had proprietary boards, cards, and memory chips. Repairing these machines with proper parts meant you always had to get the Apple variants -- which came with expected price premiums that kept Apple hardware pricing artificially inflated.

But those days are long gone. Apple learned its lesson and has been stocking every Mac desktop and laptop with (mostly) standardized components which can be purchased at no premium by any technician. This is great from a repair standpoint, and even better for another reason: it means there is little technical roadblock to running OS X on traditional PCs. Intel x86 on regular PCs is the same as it is on Macs in almost every regard.

This was proven in the market by a company called Psystar, which sold Mac clones for a fraction of what Apple charges for its own systems. Apple's legal department was able to squash the startup with ease in the courts, but the crux of the question, whether OS X can be reliably installed and sold on non-Apple hardware, had already been shown to be viable.

And today, this mentality lives on in various websites that offer easy instructions for running OS X on nearly any PC system -- a method dubbed "hackintosh" in tech circles online. We won't link to any of these so as to keep Apple's legal team away, but you can do your own searching. It's out there, it works, and it proves that the only party standing between OS X and regular PCs is Apple.

6. OS X Could Finally Become a Competitive Desktop Gaming OS

While gaming on OS X is better than it has ever been, that's not saying much. Some popular titles are available on it, but a large portion of the hot upcoming or already released games that Windows enjoys have no planned OS X release.

Examples include the new Star Wars Battlefront, Metal Gear Solid 5, Battlefield 4, Fallout 4, Rise of the Tomb Raider, and Just Cause 3, to name just a few.

I couldn't find a single example of a title that came out on OS X but not on Windows. Such a case doesn't exist from what I can tell, which explains why PC gaming is Windows territory by far.

Does it have to stay this way? Absolutely not. Apple could grow OS X into a legit secondary PC gaming platform if it opened up usage on regular PCs. I'm of the belief that there are a few major reasons why the gaming industry doesn't waste its time on porting titles to OS X (on the whole, but not in all cases).

One major obstacle is Apple's arguably low market share, especially globally (currently just over 7 percent, according to Net Applications as of September 2015), while Windows makes up over 80 percent of the desktop/laptop space. It doesn't make fiscal sense to spend the time, energy, and money to make games for the small sliver that OS X enjoys. If PC gamers had the choice to purchase OS X for their PCs, giving Apple a competitive shot at buyers who would otherwise choose Windows, this may tip the scale for OS X on a global level. As a result, developers would likely take renewed interest in the platform as a whole.

Another item that stems directly from this low market share is the time and effort that hardware makers -- namely graphics giants like AMD and NVIDIA -- have to invest in getting performance on par with where it stands on Windows. The mindshare dedicated to this on Windows has been growing for over two decades already. On OS X, comparatively little attention is paid to gaming performance for the reasons stated above.

And finally, I think Apple's artificially premium pricing on its own hardware isn't helping matters when it comes to penetration. If educated consumers were given a choice of buying OS X on a plethora of competing systems, many of them would appreciate the choice in cost and quality of their machine. Segments of the market which otherwise can't afford an Apple would now be welcomed into the ecosystem their friends may enjoy, shrinking problem #1 I referenced a few paragraphs earlier.

While the gaming community has never traditionally been one that Apple has cared to cater to, it could easily grow OS X into a gaming competitor to Windows simply by opening OS X up to the PC market.

5. OS X Could Move Into New Avenues

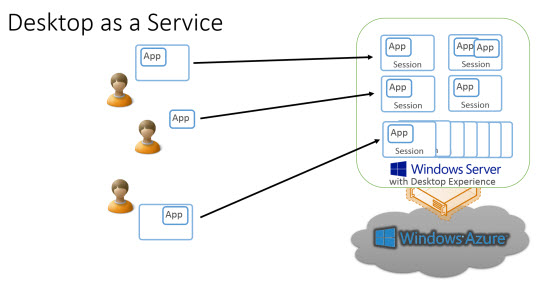

It goes without saying that Apple opening up OS X to the PC market as a whole would have larger ramifications than just placating its critics. There are numerous secondary avenues that some have only dreamed of OS X being usable within, but that nasty licensing roadblock sits in the way. What dividends could be reaped from potentially opening up OS X to the masses?

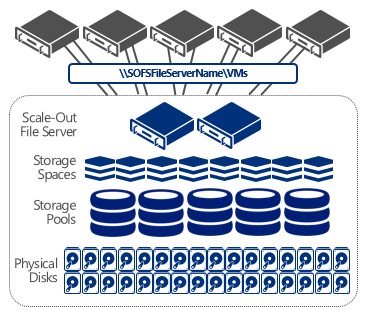

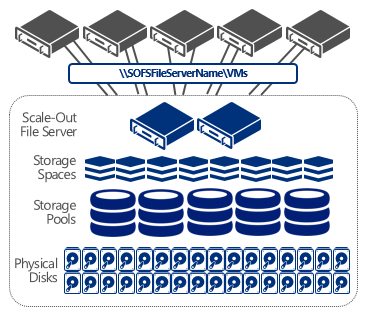

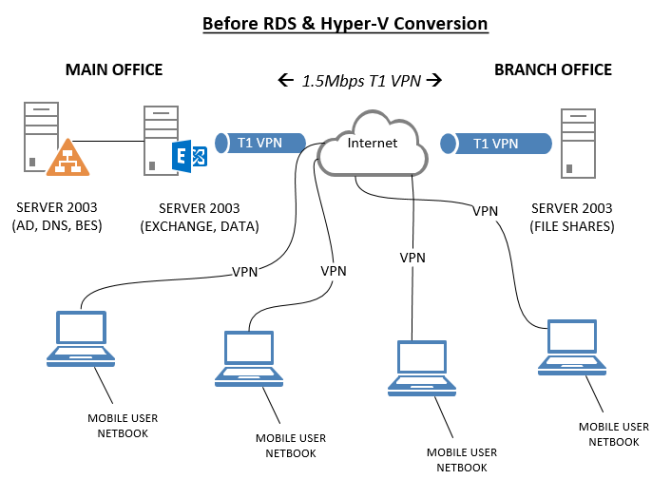

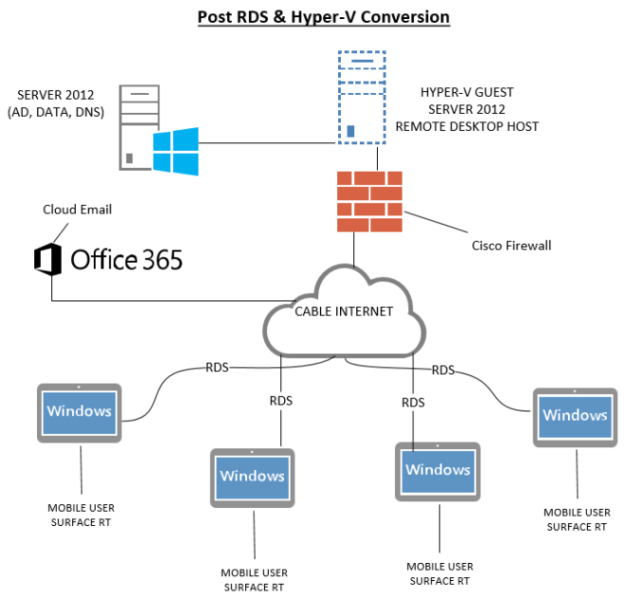

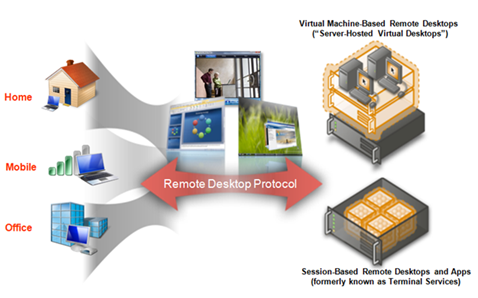

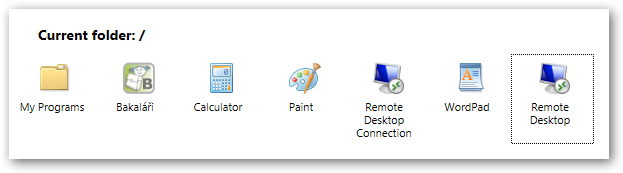

Many, in fact. One major area my company FireLogic has been involved in implementing for organizations is VDI solutions -- namely Windows RDS backbones running on Hyper-V. I've penned previous deep dives on how fantastic the technology is with Windows Server 2012 R2. But the lowest common denominator in this equation has always been a Windows desktop as the endpoint.

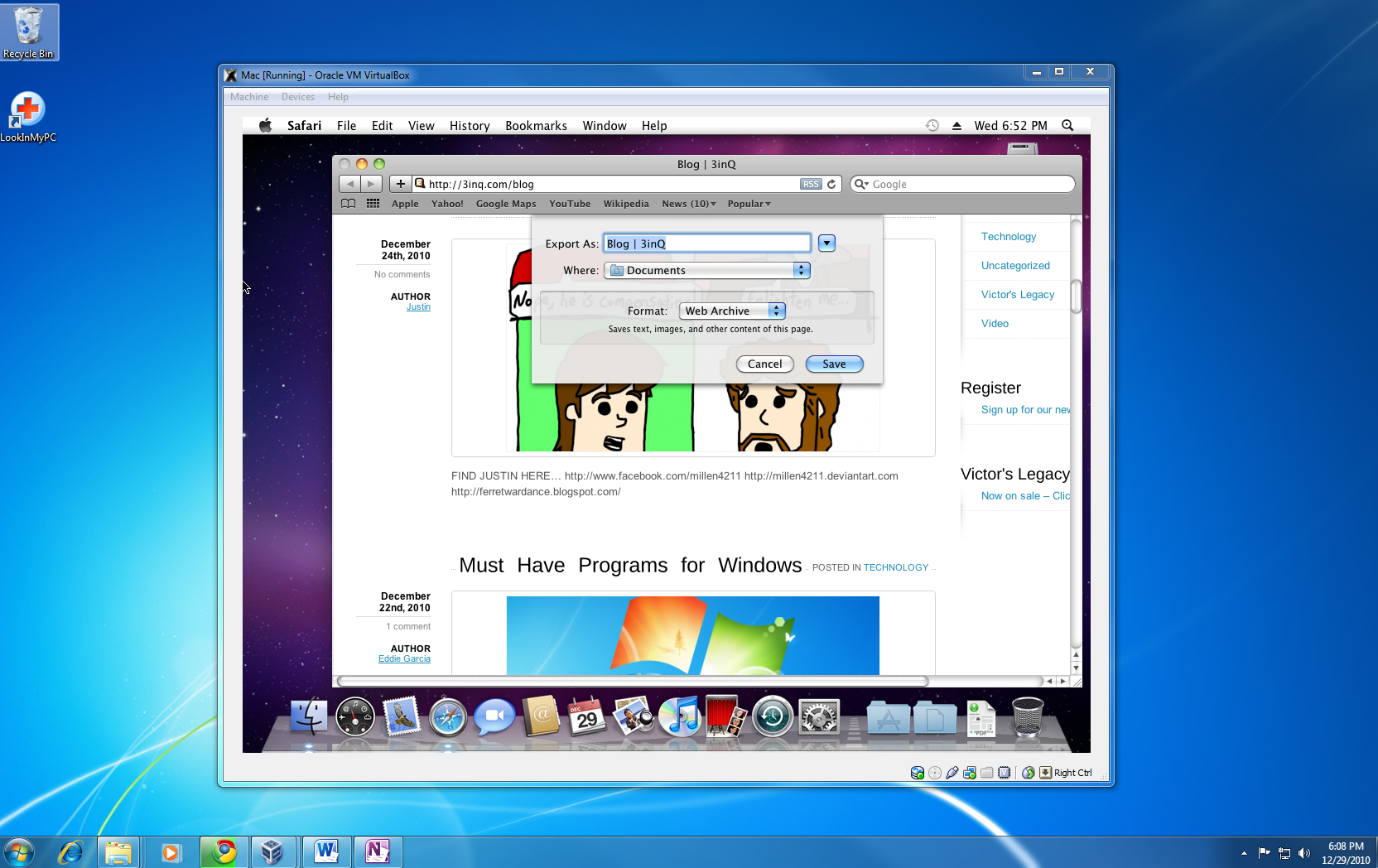

Running OS X in a non-Apple virtual environment has already been proven technically feasible, as the proof of concept above shows. If Apple tore down the licensing walled garden around OS X, it could become a potential VDI endpoint to compete with Windows. Increased competition would mean everyone wins. (Image Source: coolcrew23)

Is it implausible to believe that OS X could be farmed into an RDS-style or Citrix-driven environment for hosting end user desktops? If licensing restrictions were taken away, and Apple played nice, this isn't as much of a stretch as some may believe.

Some offices that have spent countless sums on individual Mac desktops for staff could instead keep their familiar work interfaces, but centralize administration and security of the solution on something like Microsoft Hyper-V or VMware ESXi. Unheard of today, but this could easily become reality given the will from Apple.

Another obvious no-go today is OEM sales from vendors like Dell, Lenovo, HP, and others. Psystar proved there is a market for non-Apple OS X machines, even if the law wasn't on its side when it went to market. I'd be much less critical of Apple if it allowed others to sell OS X-based computers and let the open free market set pricing for competing systems.

This would also allow for Apple to move back into being trusted by another big market segment which has soured towards Cupertino over the last decade...

4. The Enterprise May Take Apple Seriously Again

Two years ago, I penned a piece claiming Apple would never be embraced by the Enterprise again. Bold words, and I'm hoping Apple proves me wrong. It would only benefit the industry at large.

But as it stands, Apple has been sealing its fate with the Enterprise market for some years now. It shamelessly discontinued the last vestige of a proper Apple server, the Xserve, and told the community to oddly embrace Mac Minis or Mac Pros as server machines. While some companies have gone to great lengths trying to make sense of how to make this happen -- a select few do succeed with style -- the rest of us are scratching our heads on how the heck Apple intended its style-first systems to ever fit cleanly into network U racks.

It's nice to see that Rubbermaid organizers can double as Mac Mini racks for the office. But it goes to show the shortsighted vision of Apple's intentions for the Enterprise. Opening OS X up to standard x86 PCs would mean businesses could choose to purchase or build proper network closet servers running OS X -- and forego the shenanigans with racking Mac Minis or Mac Pros. (Image Source: Random-Stuff.org)

And while the Enterprise values systems that can be easily repaired with spare parts, Apple places meandering, archaic rules around how spare parts can be purchased by IT departments, and even took home the title of having one of the least repairable laptops ever with its 2012 MacBook Pro.

InformationWeek shared results a few years back from its Apple Outlook Survey, providing insight into the Enterprise's feelings on Apple's viability in big business. There were some key figures which I outlined before:

- 47 percent believe Apple's products are too expensive for the value provided.

- Only 11 percent rate Apple's product value as "excellent".

- 39 percent say that Apple is making no efforts to improve enterprise support.

- 35 percent dislike the difficulty of integrating Apple gear with existing infrastructure.

Could the Enterprise change its tune on Apple? It would take much more than just allowing OS X onto PCs, but I'm a firm believer that this would be a catalyst for moving channel vendors -- the Dells, the VMwares, the Citrixes, and others -- into helping build and sustain a viable OS X presence in the Enterprise, beyond just the iOS penetration we see today, which may not last.

Desktop computing isn't going anywhere anytime soon, contrary to what some have been claiming for years now. Tablet sales are already slowing. And recent stats show that a whopping 82 percent of IT Pros are replacing existing laptops/desktops with like systems -- NOT with tablets, as many have wrongly claimed. Only 9 percent of IT Pros are deploying tablets to replace dying desktops/laptops, a slim minority given how many years tablets have already been out in force.

By allowing OS X onto PCs, Apple could potentially reverse its course on the losing end of the Enterprise desktop/laptop market, and in turn, help foster the beginning of a supporting ecosystem dedicated to furthering OS X in the corporate world. It's not guaranteed, but it's as good a shot as any at this point.

3. Overall Market Share Would Easily Rise

While still doing better than Linux or ChromeOS on the whole, Mac OS X has never been able to rise above ten percent on any major market share chart. In my eyes, Apple is actually its own worst enemy. It's true.

For starters, the high cost of Apple-branded systems is a barrier to entry for a large majority of buyers who would otherwise consider an OS X machine. Apple's cheapest first-party systems all hover around the $1000 mark (give or take a few bucks), which is out of reach for many, if not most, people (especially overseas buyers in emerging markets).

Take away the requirement that only Apple-branded hardware can run OS X, with OEM licensing extended to the market at large, and Apple could reverse the struggling woes of OS X on the traditional laptop/desktop side, in my opinion. The playing field for OS X hardware would be substantially opened and leveled, giving new buyers weighing Windows vs OS X a like-for-like competitive option.

This would satisfy many enthusiast critics such as myself, who have long criticized Apple for its artificially inflated pricing of now-standard computer hardware. Bringing down the price point of entry-level OS X systems could let consumers decide on the OS of their choice based on functional merit and not just on whether their pocketbook was large enough.

While there are no guarantees there would be large swings in market share benefiting OS X, I see no reason why Apple couldn't eke out a good 20-30 percent by opening up OEM licensing options for OS X. Increased adoption of OS X could in turn lead Apple to position its own systems as counterparts to Microsoft's Surface devices -- the premium experience for those who can afford it and want Apple's vision of computing on their desk.

But the masses would no longer be held at arm's length from choosing OS X, if they really wanted to, due to artificial pricing floors. Consumers would end up as winners, and Apple would look like a hero of a company. A win-win.

2. Increased Competition for Windows = Consumers Win

In the sub-$1000 market for computers, Apple has zero presence today. Aside from refurbished systems or Craigslist hand-me-downs, you can't go to the store and find a Mac at this lower price point. As such, Windows has a stranglehold on what consumers can buy in this territory.

Sure, ChromeOS is an option and Linux has always been there, but I've written before about why Linux is also its own worst enemy when it comes to market share. For all intents and purposes, Windows controls the sub-$1000 market space for computers.

Why does this have to be the de facto standard? From a functional perspective, and from an app ecosystem perspective, OS X is by far the most seasoned alternative to Windows for traditional desktop/laptop users. Most major desktop apps are cross-compatible between both OSes, meaning that if it weren't for price, more consumers could opt for OS X if they really wanted to.

And therein lies my argument for this point. Few would disagree that the intense competition of the Windows ecosystem has not only brought down prices for consumers, but likewise, increased overall quality of hardware and software. Competition drives innovation, not stagnation, and this important fact is why Windows has not only survived, but thrived, as a platform.

One can point to the relative lack of advancement on the Mac from a hardware perspective as one example of Apple's negative hold on OS X. Sure, there is no question Apple is using premium processors and other internals when it comes to raw horsepower, but that's not where I am going here. I'm specifically talking about Windows platform innovations which have come to market and offered entirely new usage experiences for consumers.

Touch on laptops and desktops? Apple is nonexistent there. Convertible hybrids? Apple's nowhere to be seen. Stylus support on desktops or laptops? Again, Apple has never had an inclination to allow such functionality. There are undoubtedly plenty of buyers out there that would love to see some of these options available for purchase.

Apple's tight control over the hardware ecosystem for its OS X platform has stifled its own innovation, and with its growing reliance on iOS devices for its revenue base, Apple has less and less incentive to steer outside its comfort zones.

Giving the PC market a chance to do what it does best -- test new ideas for hardware combinations that make sense functionally and fiscally -- is perhaps one way OS X could stay relevant for the long term on the desktop.

Give others a chance to go where you refuse to, Apple.

1. Apple Fans Could Finally Have True Device Choice

This final point will probably have people either in complete agreement or vehement disagreement. But while many of the Apple faithful believe that Apple itself is the only one capable of creating OS X devices adhering to the Apple vision, I beg to differ.

While the status quo has colored the opinion of many loyal buyers, I would ask loyalists to consider this: at any given point in time, the number of new Apple computers you can choose from on the market is somewhere in the range of 4-6 core models. While there are flavors offering more horsepower or battery life, the devices themselves never stray too far from a common design baseline.

Many enjoy this limited set of choice in hardware. But from talking with others and reading comments online, there are just as many who hate the Henry Ford approach to hardware sales by Apple. Count me as part of this category.

On the Windows side, buyers have countless choices not only between form factors, but between device brands and spec points. This plethora of choice has only helped bring new concepts to market, and given consumers the ability to find the device that best fits their needs. Why wouldn't OS X fans benefit from similar open hardware choice?

While Apple's argument has always been that this limited set of hardware increases reliability, does this still hold true? My company still offers residential computer repair for local customers, and each year we get more than our fair share of Mac systems in our office suffering from hardware/software incompatibilities, failed hard drives, incessant "spinning wheels of death", and, growing with each month, malware infections that some believed were impossible.

If Apple cares about its dedicated fan base as much as it claims to, I would think that giving them the ability to choose the hardware platform that they run OS X on would only be beneficial for building and keeping the trust of its customers. Restricting hardware choice to a limited set of options solely for financial and business reasons may still prove to bring short term success, but I doubt it is viable for the longer term, as computing prices in general continue to fall.

Device choice wouldn't be limited to the traditional consumer form factors we have come to expect, either. It could come in the form of OS X servers made by enterprise giants such as Dell or Lenovo. It could also be POS systems built on OS X for retail. It could even be integration platforms for the auto industry, akin to what Microsoft and Android Auto already represent.

The penetration of OS X could go beyond the tried and true and open up new markets with more choices for vendors and consumers alike. And while Apple is convinced its fans would be losers in such a scenario, I think that couldn't be further from the truth.

If OS X is to continue to prosper as a platform, let it win in the market based on its own proven merits. It's time for Apple to tear down the moat around OS X and let it be free of its artificial restraints.

Eat your own dogfood, Apple, and consider Thinking Different on this one. You may make new believers out of some of us.

Main Image Credit: McdonnellTech.com

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with over eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on fast-growing technologies such as Office 365, Google Apps, and cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council, which shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.


Meraki MR Access Points: Enterprise-grade Wi-Fi finally made easy [Review]

Published: August 17, 2015, 5:46pm CEST by Derrick Wlodarz

"UniFi is the revolutionary Wi-Fi system that combines Enterprise performance, unlimited scalability, a central management controller and disruptive pricing." That's the pitch thrown by Ubiquiti Networks right off the homepage for their popular UniFi line of wireless access point products. In many respects, that statement is right on the money.

But as the old adage goes, sometimes you truly do get what you pay for. With UniFi, that has increasingly been my feeling, given the gotchas we have had to deal with. We've continued to choose their access points, primarily in situations where cost is a large factor for our end customer. Who wouldn't want Enterprise-level features at a Linksys-level price?

I give Ubiquiti more than a decent ounce of credit for its altruistic intentions in the wireless market. The company has spent the better part of the last five years trying to offer an alternative to the big boys of commercial Wi-Fi (the Ciscos, the Ruckuses, the Arubas, and so on) in the form of its UniFi line of products. With its entry-level access point, the UniFi Standard (UAP), coming in at under $100 USD out the door, it's hard not to notice Ubiquiti when shopping for your next wireless system upgrade.

When it comes to hardware build quality and aesthetics, their access points are absolutely top-notch. The flying-saucer-like design of their Standard, LR, and Pro series units looks super cool, especially with the added visual flair of the LED ring that adorns their inner sphere. The included mounting bracket is easy to install on walls or ceilings, and the access point itself simply "twists" into place to lock in for final use.

I also have to mention that the pure wireless prowess of these units, especially the UniFi Pro, which has become a firm favorite of ours, is simply amazing. At the low price point these little saucers command, the coverage area we can blanket with just a few APs is astonishing. And once configured, they rarely need reboots in production: we have had numerous offices running for 8-10 months or longer between firmware upgrades without a single call from clients about Wi-Fi downtime.

But the design and Wi-Fi power of the hardware itself are about where the fun with UniFi starts and stops.

One of my biggest qualms is with the way UniFi handles administration of its access points through the much-touted software controller that is included at no extra cost. While the new version 4 of the interface is quite clean, it's still saddled with a nasty legacy requirement: Java.

For the longest time, the UniFi controller refused to work properly with Java 8 on any system I tried to administer from, and I had to keep clients held back on the bug-ridden Java 7 just to maintain working functionality with UniFi. OK, not a dealbreaker, but a pain in the rear. Then there are the numerous times the controller software crashes on load, with only a reboot fixing the issue. The UniFi forums are full of threads discussing workarounds to the endless Java issues.

The software controller woes don't end there. On some new installs, the controller refused to "adopt" UniFi units at a client site, forcing us through a careful tango of hardware resets, attempted re-adoptions, and countless manual adoption commands. After much trial and tribulation, most units would then connect to the controller, while some still refused and ended up written off as DOA duds.

My distaste for the UniFi controller extends further into situations where you have UniFi boxes deployed at numerous branch offices, all managed from a single controller. While Ubiquiti claims clean inter-subnet connectivity over standard Layer 3, real-world functionality of this feature is much more of a hit-and-miss affair, and more often than not, it lands on the miss side.

Initial adoption between branches can be time-consuming and experimental, and even once connected, units will show up as disconnected for periods of time even though you have a clean site-to-site tunnel that has experienced zero drops.

Another little "gotcha" that Ubiquiti doesn't advertise heavily, and which is regularly complained about on forums, is that its cheapest Standard units don't use regular (now standard) 48V PoE. Thinking of deploying 10 or more of their cheapest APs on your campus using existing PoE switches? Not going to happen. You'll need to rig up a less-than-ideal patchwork of the special 24V adapters that Ubiquiti includes with its APs, one per AP.

Ubiquiti of course offers its own special switches that can deliver 24V PoE, the ToughSwitch line, but this is little consolation for those who have already invested in their own switch hardware. And I see little excuse for forcing this on shops, since Ubiquiti's Pro and AC level units use standard 48V PoE. A deliberate nudge to get people to buy more expensive APs, or just a technical limitation they had to work within? Take your pick.

I'm not here to deride Ubiquiti for what is otherwise a fantastic piece of hardware. Its end-to-end execution, however, is where it suffers, but it isn't alone in failing to deliver a well-rounded solution on all fronts.

The common theme I have seen in the Wi-Fi industry over the last decade or so is that you rarely get a product that satisfies all of the usual "wants" from business-grade Wi-Fi hardware:

- Good price.

- Easy-to-use management system.

- Great radio hardware and coverage.

- Quality technical support.

- Consistent firmware/software updates.

And therein lies my issue with most of the common vendors in the game. Ubiquiti offers great pricing and hardware, but has a software-based controller with numerous issues and offers zero phone support. Cisco's Aironet line has great hardware and tech support, but comes saddled with expensive hardware controller requirements and complex management and setup. Ruckus sits in a similar arena to Cisco, with premium pricing to match.

Since our focus is primarily the small to midsize business customers we support, we've been on the prowl for decently priced gear that comes with rock-solid support and ease of management, and that ideally gets rid of the need for hardware controllers, not only due to their added cost, but also the requisite replacement and maintenance costs that go along with them.

Not all hope is lost. Luckily, we found a product line that meets nearly all of our needs.

Enter Meraki

Last year, we grew quite fond of a company called Meraki for its excellent hardware firewalls. To be fair, Meraki isn't its own company anymore; it's now a subsidiary of Cisco, with some rumors even suggesting that Meraki's gear will one day replace all of Cisco's current first-party networking gear. I went so far as to pen a lengthy review of why we standardized on their MX/Z1 line of firewall devices.

After battling with similar hits and misses on the firewall side, toying with the likes of ASAs, SonicWalls, Fireboxes, and other brands, I found a fresh start with Meraki's firewalls: competitive price points and extremely well-built hardware, backed by top-notch, all-American 24/7 phone support when issues arose.

As a growing managed services provider (MSP), our company decided to standardize on Meraki across the board for routers and firewalls. If a customer wishes to use us for managed support, they're either installing a Meraki firewall or paying a premium for us to support the other guys' gear. That's how heavily we trust their equipment on behalf of the clients who, in turn, entrust us with their IT system uptime and support.

While we had been using Ubiquiti's UniFi access points for a few years already, biting our tongues about the less-than-desirable Java-based software controller, we weren't content with the solution for our most critical client Wi-Fi needs.

Meraki offered a webinar that came with a free (now discontinued) MR12 access point, and we have been hooked on the Meraki magic, as we call it, ever since. We used that unit to provide our own office with Wi-Fi until we moved into our current space late last year and upgraded to the beefy entry-level MR18 access point. The WAP is mounted roughly in the center of our squarish 1,300 sq ft office and provides stellar dual-band coverage for our space.

We even decided to pit the UniFi Pro AP against the MR18, and for all intents and purposes, coverage and speed levels were neck and neck. Since the UniFi Pro was a known quantity for us in terms of coverage and stability, it was great to see that Meraki's MR18 was as good as what Ubiquiti had been offering us for some time already.

In terms of hardware selection, Meraki offers a competitive set of six distinct options. That lineup is not overwhelming (unlike EnGenius, which at any given time has over a dozen access points available), yet it offers enough choice for whatever scenario you are installing into.

Our go-to units tend to be the MR18 (802.11a/b/g/n with dual-band 2.4/5GHz) or the MR32 (802.11a/b/g/n/ac plus Bluetooth, with dual-band 2.4/5GHz). The MR18 is the most cost-effective option from Meraki, with the MR32 being installed in situations where 802.11ac future-proofing is a requirement.

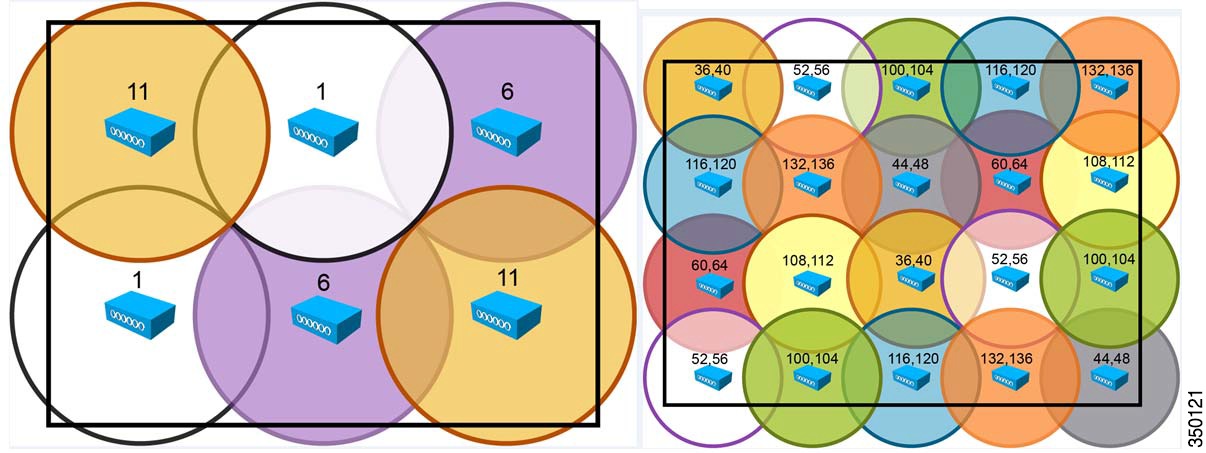

Both WAPs perform similarly in terms of coverage area per unit, with the MR32 having double the potential bandwidth if the right requirements are met on the client side. I wrote at length about the concept of high-bandwidth Wi-Fi and other related topics in a piece on Wi-Fi best practices from last month.

I will note that in some situations, where we are replacing a client's firewall with a Meraki device anyway, we opt for the Wi-Fi-enabled versions of their routers. Most SMBs we work with go with the full-size MX64W in such cases, while for very small (or home) offices, the Z1 has been the little champ that could.

I personally use a Meraki Z1 in my own condo and have no issues with coverage, but it definitely cannot compete with the beastly radios in a unit such as the MR18. By my unscientific estimate, it offers about half the coverage.

Meraki = No More Hardware Controllers

If you're coming from the lands of Cisco Aironet, Ruckus, Aruba, or any of the other competitors to Meraki (other than UniFi or Aerohive), then you will be quite familiar with the concept of the hardware controller. It's an expensive device, usually starting at $1,000 on its own plus licensing, that sits in your network to perform one function: tell your WAPs what they should be doing.

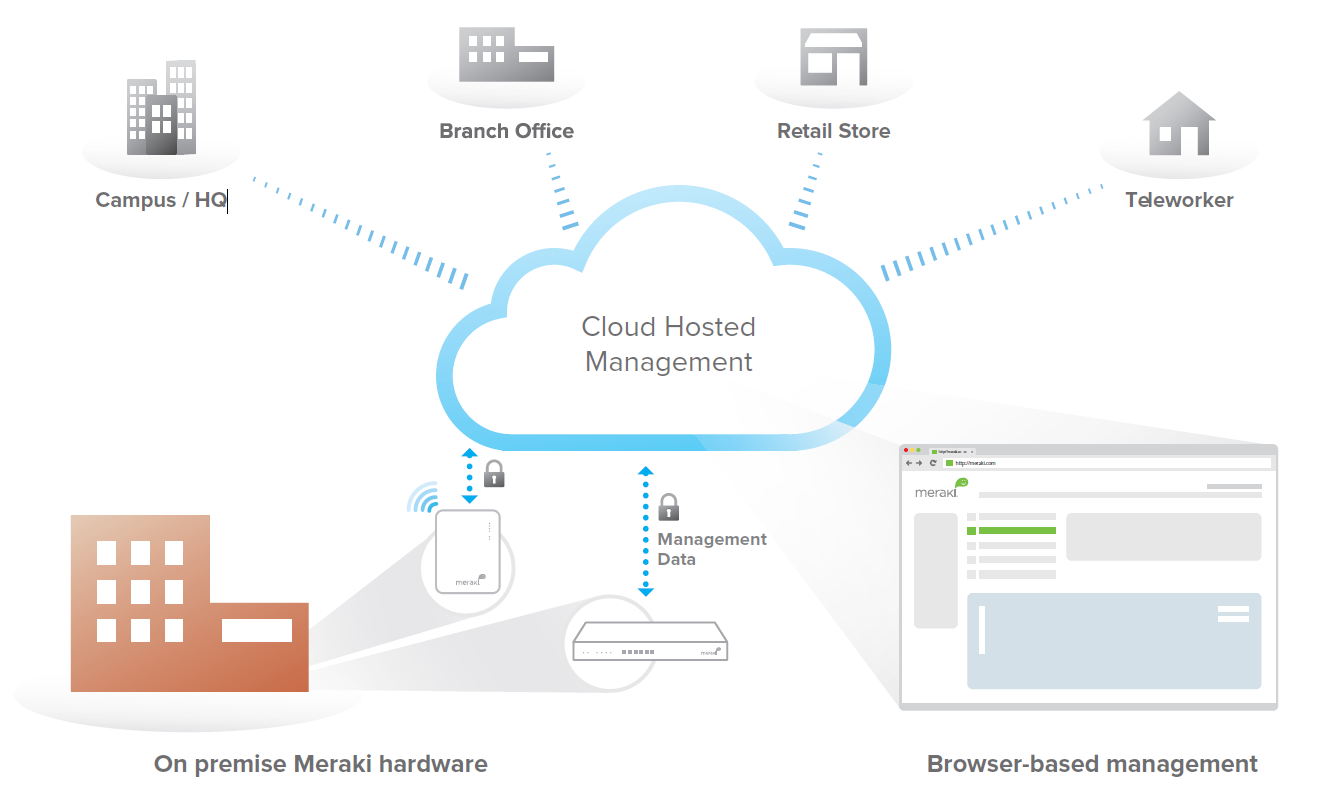

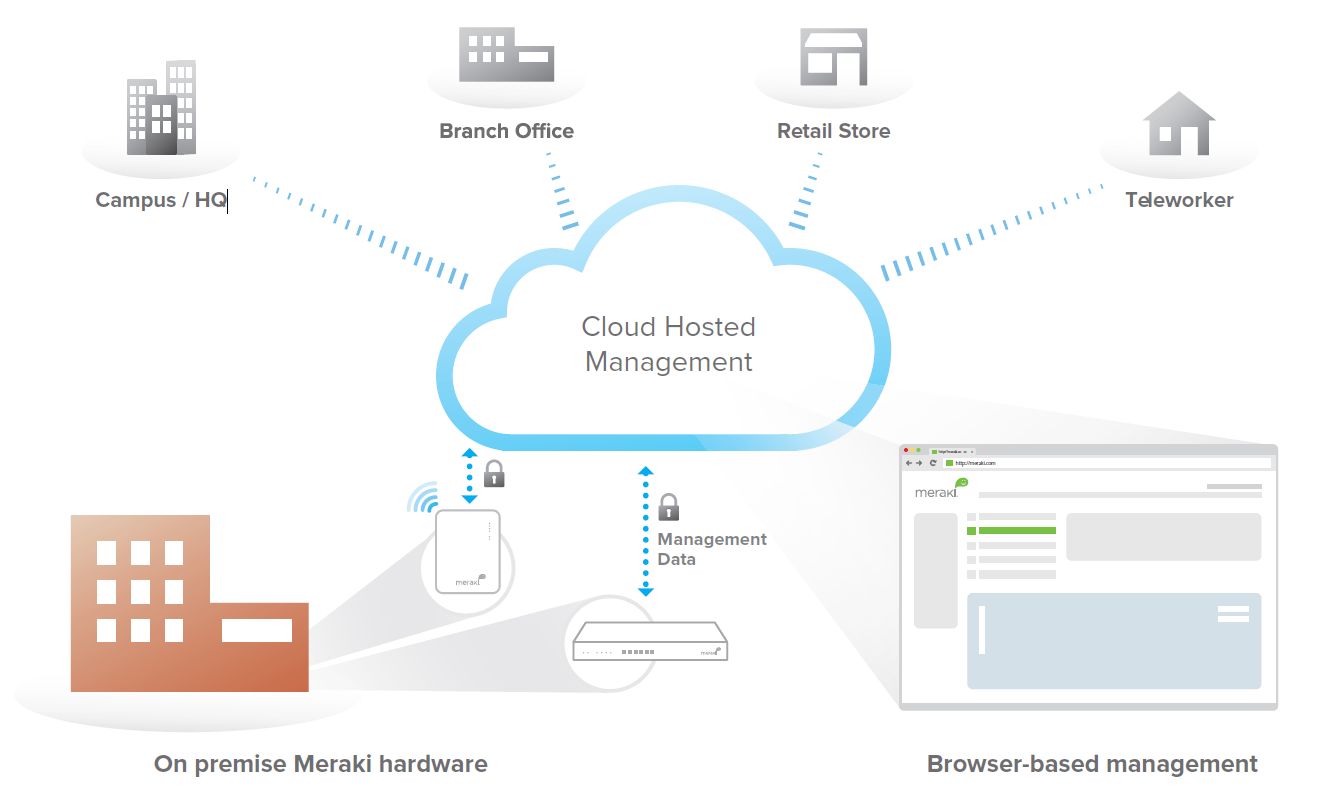

In an era of ever-pervasive connections to the wider web, why in 2015 should we consider this the gold standard of Wi-Fi system control? While the likes of UniFi rely on a pudgy, locally installed software controller, Meraki has built a cloud-based infrastructure to provide command and control for its MR line of access points.

Meraki ditched the flawed concept of hardware controllers and instead unified its entire management platform under a single, web-based cloud dashboard. It doesn't cost anything extra, doesn't require you to spin up any servers of your own, and it's constantly updated and maintained by the experts.

For all the cloud detractors out there, don't point your "I distrust the cloud" wands at this solution unless you've tried it. I can count on one hand the number of times I haven't been able to access my Meraki dashboard in the last year, and even those outages were brief, with no on-premises networking gear affected.