Twentieth in a series. "Market research firms tend to serve the same function for the PC industry that a lamppost does for a drunk," writes Robert X. Cringely in this installment of the 1991 classic Accidental Empires. The context is the universal forecast that OS/2 would overtake MS-DOS. Analysts were wrong then, much as they are today in making predictions about smartphones, tablets and PCs. The insightful chapter also explains the vaporware and product-leak tactics that IBM pioneered, Microsoft refined and Apple later adopted.

In Prudhoe Bay, in the oilfields of Alaska’s North Slope, the sun goes down sometime in late November and doesn’t appear again until January, and even then the days are so short that you can celebrate sunrise, high noon, and sunset all with the same cup of coffee. The whole day looks like that sliver of white at the base of your thumbnail.

It’s cold in Prudhoe Bay in the wintertime, colder than I can say or you would believe -- so cold that the folks who work for the oil companies start their cars around October and leave them running twenty-four hours a day clear through to April just so they won’t freeze up.

Idling in the seemingly endless dark is not good for a car. Spark plugs foul and carburetors gum up. Gas mileage goes completely to hell, but that’s okay; they’ve got the oil. Keeping those cars and trucks running night and pseudoday means that there are a lot of crummy, gas-guzzling, smoke-spewing vehicles in Prudhoe Bay in the winter, but at least they work.

Nobody ever lost his job for leaving a car running overnight during a winter in Prudhoe Bay.

And it used to be that nobody ever lost his job for buying computers from IBM.

But springtime eventually comes to Alaska. The tundra begins to melt, the days get longer than you can keep your eyes open, and the mosquitoes are suddenly thick as grass. It’s time for an oil change and to give that car a rest. When the danger’s gone -- when the environment has improved to a point where any car can be counted on to make it through the night, when any tool could do the job -- then efficiency and economy suddenly do become factors. At the end of June in Prudhoe Bay, you just might get in trouble for leaving a car running overnight, if there was a night, which there isn’t.

IBM built its mainframe computer business on reliable service, not on computing performance or low prices. Whether it was in Prudhoe Bay or Houston, when the System 370/168 in accounting went down, IBM people were there right now to fix it and get the company back up and running. IBM customer hand holding built the most profitable corporation in the world. But when we’re talking about a personal computer rather than a mainframe, and it’s just one computer out of a dozen, or a hundred, or a thousand in the building, then having that guy in the white IBM coveralls standing by eventually stops being worth 30 percent or 50 percent more.

That’s when it’s springtime for IBM.

IBM’s success in the personal computer business was a fluke. A company that was physically unable to invent anything in less than three years somehow produced a personal computer system and matching operating system in one year. Eighteen months later, IBM introduced the PC-XT, a marginally improved machine with a marginally improved operating system. Eighteen months after that, IBM introduced its real second-generation product, the PC-AT, with five times the performance of the XT.

From 1981 to 1984, IBM set the standard for personal computing and gave corporate America permission to take PCs seriously, literally creating the industry we know today. But after 1984, IBM lost control of the business.

Reality caught up with IBM’s Entry Systems Division with the development of the PC-AT. From the AT on, it took IBM three years or better to produce each new line of computers. By mainframe standards, three years wasn’t bad, but remember that mainframes are computers, while PCs are just piles of integrated circuits. PCs follow the price/performance curve for semiconductors, which says that performance has to double every eighteen months. IBM couldn’t do that anymore. It should have been ready with a new line of industry-leading machines by 1986, but it wasn’t. It was another company’s turn.
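To put rough numbers on that curve, here is a minimal sketch. It assumes performance doubles exactly every eighteen months, which is the rule of thumb stated above rather than a measured figure, and the function name `relative_performance` is made up for illustration.

```python
def relative_performance(months: float, doubling_period: float = 18.0) -> float:
    """Performance relative to today after `months`, assuming steady doubling."""
    return 2.0 ** (months / doubling_period)


# The market after one eighteen-month product cycle: one doubling.
print(relative_performance(18))  # 2.0

# The market after one three-year product cycle: two doublings.
print(relative_performance(36))  # 4.0
```

Under that assumption, a vendor that needs three years per product line ships into a market that expects four times the performance of its last machine, where a vendor on an eighteen-month cycle only has to double it.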

Compaq Computer cloned the 8088-based IBM PC in a year and cloned the 80286-based PC-AT in six months. By 1986, IBM should have been introducing its 80386-based machine, but it didn’t have one. Compaq couldn’t wait for Big Blue and so went ahead and introduced its DeskPro 386. The 386s that soon followed from other clone makers were clones of the Compaq machine, not clones of IBM. Big Blue had fallen behind the performance curve and would never catch up. Let me say that a little louder: IBM WILL NEVER CATCH UP.

IBM had defined MS-DOS as the operating system of choice. It set a 16-bit bus standard for the PC-AT that determined how circuit cards from many vendors could be used in the same machine. These were benevolent standards from a market leader that needed the help of other hardware and software companies to increase its market penetration. That was all it took. Once IBM could no longer stay ahead of the performance curve, the IBM standards still acted as guidelines, so clone makers could take the lead from there, and they did. IBM saw its market share slowly start to fall.

But IBM was still the biggest player in the PC business, still had the greatest potential for wreaking technical havoc, and knew better than any other company how to slow the game down to a more comfortable pace. Here are some market control techniques refined by Big Blue over the years.

Technique No. 1. Announce a direction, not a product. This is my favorite IBM technique because it is the most efficient one from Big Blue’s perspective. Say the whole computer industry is waiting for IBM to come out with its next-generation machines, but instead the company makes a surprise announcement: “Sorry, no new computers this year, but that’s because we are committing the company to move toward a family of computers based on gallium arsenide technology [or Josephson junctions, or optical computing, or even vegetable computing -- it doesn't really matter]. Look for these powerful new computers in two years.”

“Damn, I knew they were working on something big,” say all of IBM’s competitors as they scrap the computers they had been planning to compete with the derivative machines expected from IBM.

Whether IBM’s rutabaga-based PC ever appears or not, all IBM competitors have to change their research and development focus, looking into broccoli and parsnip computing, just in case IBM is actually onto something. By stating a bold change of direction, IBM looks as if it’s grasping the technical lead, when in fact all it’s really doing is throwing competitors for a loop, burning up their R&D budgets, and ultimately making them wait up to two years for a new line of computers that may or may not ever appear. (IBM has been known, after all, to say later, “Oops, that just didn’t work out,” as they did with Josephson junction research.) And even when the direction is for real, the sheer market presence of IBM makes most other companies wait for Big Blue’s machines to appear to see how they can make their own product lines fit with IBM’s.

Whenever IBM makes one of these statements of direction, it’s like the yellow flag coming out during an auto race. Everyone continues to drive, but nobody is allowed to pass.

IBM’s Systems Application Architecture (SAA) announcement of 1987, which was supposed to bring a unified programming environment, user interface, and applications to most of its mainframe, minicomputer, and personal computer lines by 1989, was an example of such a statement of direction. SAA was for real, but major parts of it were still not ready in 1991.

Technique No. 2. Announce a real product, but do so long before you actually expect to deliver, disrupting the market for competitive products that are already shipping.

This is a twist on Technique No. 1 though aimed at computer buyers rather than computer builders. Because performance is always going up and prices are always going down, PC buyers love to delay purchases, waiting for something better. A major player like IBM can take advantage of this trend, using it to compete even when IBM doesn’t yet have a product of its own to offer.

In the 1983-1985 time period, for example, Apple had the Lisa and the Macintosh, VisiCorp had VisiOn, its graphical computing environment for IBM PCs, Microsoft had shipped the first version of Windows, Digital Research produced GEM, and a little company in Santa Monica called Quarterdeck Office Systems came out with a product called DesQ. All of these products -- even Windows, which came from Microsoft, IBM’s PC software partner -- were perceived as threats by IBM, which had no equivalent graphical product. To compete with these graphical environments that were already available, IBM announced its own software that would put pop-up windows on a PC screen and offer easy switching from application to application and data transfer from one program to another. The announcement came in the summer of 1984 at the same time the PC-AT was introduced. They called the new software TopView and said it would be available in about a year.

DesQ had been the hit of Comdex, the computer dealers’ convention held in Atlanta in the spring of 1984. Just after the show, Quarterdeck raised $5.5 million in second-round venture funding, moved into new quarters just a block from the beach, and was happily shipping 2,000 copies of DesQ per month. DesQ had the advantage over most of the other windowing systems that it worked with existing MS-DOS applications. DesQ could run more than one application at a time, too -- something none of the other systems (except Apple’s Lisa) offered. Then IBM announced TopView. DesQ sales dropped to practically nothing, and the venture capitalists asked Quarterdeck for their money back.

All the potential DesQ buyers in the world decided in a single moment to wait for the truly incredible software IBM promised. They forgot, of course, that IBM was not particularly noted for incredible software -- in fact, IBM had never developed PC software entirely on its own before. TopView was true Blue -- written with no help from Microsoft.

The idea of TopView hurt all the other windowing systems and contributed to the death of VisiOn and DesQ. Quarterdeck dropped from fifty employees down to thirteen. Terry Myers, co-founder of Quarterdeck and one of the few women to run a PC software company, borrowed $20,000 from her mother to keep the company afloat while her programmers madly rewrote DesQ to be compatible with the yet-to-be-delivered TopView. They called the new program DesqView.

When TopView finally appeared in 1985, it was a failure. The product was slow and awkward to use, and it lived up to none of the promises IBM made. You can still buy TopView from IBM, but nobody does; it remains on the IBM product list strictly because removing it would require writing off all development expenses, which would hurt IBM’s bottom line.

Technique No. 3. Don’t announce a product, but do leak a few strategic hints, even if they aren’t true.

IBM should have introduced a follow-on to the PC-AT in 1986 but it didn’t. There were lots of rumors, sure, about a system generally referred to as the PC-2, but IBM staunchly refused to comment. Still, the PC-2 rumors continued, accompanied by sparse technical details of a machine that all the clone makers expected would include an Intel 80386 processor. And maybe, the rumors continued, the PC-2 would have a 32-bit bus, which would mean yet another technical standard for add-in circuit cards.

It would have been suicide for a clone maker to come out with a 386 machine with its own 32-bit bus in early 1986 if IBM was going to announce a similar product a month or three later, so the clone makers didn’t introduce their new machines. They waited and waited for IBM to announce a new family of computers that never came. And during the time that Compaq, and Dell, and AST, and the others were waiting for IBM to make its move, millions of PC-ATs were flowing into Fortune 1000 corporations, still bringing in the big bucks at a time when they shouldn’t have still been viewed as top-of-the-line machines.

When Compaq Computer finally got tired of waiting and introduced its own DeskPro 386, it was careful to make its new machine use the 16-bit circuit cards intended for the PC-AT. Not even Compaq thought it could push a proprietary 32-bit bus standard in competition with IBM. The only 32-bit connections in the Compaq machine were between the processor and main memory; in every other respect, it was just like a 286.

Technique No. 4. Don’t support anybody else’s standards; make your own.

The original IBM Personal Computer used the PC-DOS operating system at a time when most other microcomputers used in business ran CP/M. The original IBM PC had a completely new bus standard, while nearly all of those CP/M machines used something called the S-100 bus. Pushing a new operating system and a new bus should have put IBM at a disadvantage, since there were thousands of CP/M applications and hundreds of S-100 circuit cards, and hardly any PC-DOS applications and fewer than half a dozen PC circuit cards available in 1981. But this was not just any computer start-up; this was IBM, and so what would normally have been a disadvantage became IBM’s advantage. The IBM PC killed CP/M and the S-100 bus and gave Big Blue a full year with no PC-compatible competitors.

When the rest of the world did its computer networking with Ethernet, IBM invented another technology, called Token Ring. When the rest of the world thought that a multitasking workstation operating system meant Unix, IBM insisted on OS/2, counting on its influence and broad shoulders either to make the IBM standard a de facto standard or at least to interrupt the momentum of competitors.

Technique No. 5. Announce a product; then say you don’t really mean it.

IBM has always had a problem with the idea of linking its personal computers together. PCs were cheaper than 3270 terminals, so IBM didn’t want to make it too easy to connect PCs to its mainframes and risk hurting its computer terminal business. And linked PCs could, by sharing data, eventually compete with minicomputer or mainframe time-sharing systems, which were IBM’s traditional bread and butter. Proposing an IBM standard for networking PCs or embracing someone else’s networking standard was viewed in Armonk as a risky proposition. By the mid-1980s, though, other companies were already moving forward with plans to network IBM PCs, and Big Blue just couldn’t stand the idea of all that money going into another company’s pocket.

In 1985, then, IBM announced its first networking hardware and software for personal computers. The software was called the PC Network (later the PC LAN Program). The hardware was a circuit card that fit in each PC and linked them together over a coaxial cable, transferring data at up to 2 million bits per second. IBM sold $200 million worth of these circuit cards over the next couple of years. But that wasn’t good enough (or bad enough) for IBM, which announced that the network cards, while they were a product, weren’t part of an IBM direction. IBM’s true networking direction was toward another hardware technology called Token Ring, which would be available, as I’m sure you can predict by now, in a couple of years.

Customers couldn’t decide whether to buy the hardware that IBM was already selling or to wait for Token Ring, which would have higher performance. Customers who waited for Token Ring were punished for their loyalty, since IBM, which had the most advanced semiconductor plants in the world, somehow couldn’t make enough Token Ring adapters to meet demand until well into 1990. The result was that IBM lost control of the PC networking business.

The company that absolutely controls the PC networking business is headquartered at the foot of a mountain range in Provo, Utah, just down the street from Brigham Young University. Novell Inc. runs the networking business today as completely as IBM ran the PC business in 1983. A lot of Novell’s success has to do with the technical skills of those programmers who come to work straight out of BYU and who have no idea how much money they could be making in Silicon Valley. And a certain amount of its success can be traced directly to the company’s darkest moment, when it was lucky enough to nearly go out of business in 1981.

Novell Data Systems, as it was called then, was a struggling maker of not very good CP/M computers. The failing company threw the last of its money behind a scheme to link its computers together so they could share a single hard disk drive. Hard disks were expensive then, and a California company, Corvus Systems, had already made a fortune linking Apple IIs together in a similar fashion. Novell hoped to do for CP/M computers what Corvus had done for the Apple II.

In September 1981, Novell hired three contract programmers to devise the new network hardware and software. Drew Major, Dale Neibaur, and Kyle Powell were techies who liked to work together and hired out as a unit under the name Superset. Superset -- three guys who weren’t even Novell employees -- invented Novell’s networking technology and still direct its development today. They still aren’t Novell employees.

Companies like Ashton-Tate and Lotus Development ran into serious difficulties when they lost their architects. Novell and Microsoft, which have retained their technical leaders for over a decade, have avoided such problems.

In 1981, networking meant sharing a hard disk drive but not sharing data between microcomputers. Sure, your Apple II and my Apple II could be linked to the same Corvus 10-megabyte hard drive, but your data would be invisible to my computer. This was a safety feature, because the microcomputer operating systems of the time couldn’t handle the concept of shared data.

Let’s say I am reading the text file that contains your gothic romance just when you decide to add a juicy new scene to chapter 24. I am reading the file, adding occasional rude comments, when you grab the file and start to add text. Later, we both store the file, but which version gets stored: the one with my comments, or the one where Captain Phillips finally does the nasty with Lady Margaret? Who knows?

What CP/M lacked was a facility for directory locking, which would allow only one user at a time to change a file. I could read your romance, but if you were already adding text to it, directory locking would keep me from adding any comments. Directory locking could be used to make some data read only, and could make some data readable only by certain users. These were already important features in multiuser or networked systems but not needed in CP/M, which was written strictly for a single user.
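The one-writer-at-a-time rule described above can be sketched in a few lines. This is a toy, in-memory illustration only: the `SharedFile` class and its method names are invented for this example, and nothing here is meant to represent how Superset actually implemented locking on Novell's file server.

```python
class SharedFile:
    """A toy shared file: anyone may read, but only one user may write at a time."""

    def __init__(self, text: str = "") -> None:
        self.text = text
        self.writer = None  # name of the user holding the write lock, if any

    def open_for_write(self, user: str) -> bool:
        """Try to take the write lock; return False if someone else holds it."""
        if self.writer is not None and self.writer != user:
            return False
        self.writer = user
        return True

    def save(self, user: str, new_text: str) -> None:
        """Store new text; only the current lock holder may do this."""
        if self.writer != user:
            raise PermissionError(f"{user} does not hold the write lock")
        self.text = new_text

    def close(self, user: str) -> None:
        """Release the write lock if this user holds it."""
        if self.writer == user:
            self.writer = None


romance = SharedFile("Chapter 24.")
assert romance.open_for_write("author")       # the author starts adding a scene
assert not romance.open_for_write("reader")   # the reader cannot add comments yet
print(romance.text)                           # reading is still allowed
romance.save("author", romance.text + " A juicy new scene.")
romance.close("author")
assert romance.open_for_write("reader")       # now the reader may comment
```

Because the second user is refused the lock instead of being allowed to save, there is never a moment when two edited copies compete to be the stored version.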

The guys from Superset added directory locking to CP/M, they improved CP/M’s mechanism for searching the disk directory, and they moved all of these functions from the networked microcomputer up to a specialized processor that was at the hard disk drive. By November 1981, they’d turned what was supposed to have been a disk server like Corvus’s into a file server where users could share data. Novell’s Data Management Computer could support twelve simultaneous users at the same performance level as a single-user CP/M system.

Superset, not Novell, decided to network the new IBM PC. The three hackers bought one of the first PCs in Utah and built the first PC network card. They did it all on their own and against the wishes of Novell, which just then finally ran out of money.

The venture capitalists whose money it was that Novell had used up came to Utah looking for salvageable technology and found only Superset’s work worth continuing. While Novell was dismantled around them, the three contractors kept working and kept getting paid. They worked in isolation for two years, developing whole generations of product that were never sold to anyone.

The early versions of most software are so bad that good programmers usually want to throw them away but can’t because ship dates have to be met. But Novell wasn’t shipping anything in 1982-1983, so early versions of its network software were thrown away and started over again. Novell was able to take the time needed to come up with the correct architecture, a rare luxury for a start-up, and subsequently the company’s greatest advantage. Going broke turned out to have been very good for Novell.

Novell hardware was so bad that the company concentrated almost completely on software after it started back in business in 1983. All the other networking companies were trying to sell hardware. Corvus was trying to sell hard disks. Televideo was trying to sell CP/M boxes. 3Com was trying to sell Ethernet network adapter cards. None of these companies saw any advantage to selling its software to go with another company’s hard disk, computer, or adapter card. They saw all the value in the hardware, while Novell, which had lousy hardware and knew it, decided to concentrate on networking software that would work with every hard drive, every PC, and every network card.

By this time Novell had a new leader in Ray Noorda, who’d bumped through a number of engineering, then later marketing and sales, jobs in the minicomputer business. Noorda saw that Novell’s value lay in its software. By making wiring a nonissue, with Novell’s software—now called Netware—able to run on any type of networking scheme, Noorda figured it would be possible to stimulate the next stage of growth. “Growing the market” became Noorda’s motto, and toward that end he got Novell back in the hardware business but sold workstations and network cards literally at cost just to make it cheaper and easier for companies to decide to network their offices. Ray Noorda was not a popular man in Silicon Valley.

In 1983, when Noorda was taking charge of Novell, IBM asked Microsoft to write some PC networking software. Microsoft knew very little about networking in 1983, but Bill Gates was not about to send his major customer away, so Microsoft got into the networking business.

“Our networking effort wasn’t serious until we hired Darryl Rubin, our network architect,” admitted Microsoft’s Steve Ballmer in 1991.

Wait a minute, Steve, did anyone tell IBM back in 1983 that Microsoft wasn’t really serious about this networking stuff? Of course not.

Like most of Microsoft’s other stabs at new technology, PC networking began as a preemptive strike rather than an actual product. The point of Gates’s agreeing to do IBM’s network software was to keep IBM as a customer, not to do a good product. In fact, Microsoft’s entry into most new technologies follows this same plan, with the first effort being a preemptive strike, the second effort being market research to see what customers really want in a product, and the third try being the real product. It happened that way with Microsoft’s efforts at networking, word processing, and Windows, and will continue in the company’s current efforts in multimedia and pen-based computing. It’s too bad, of course, that hundreds of thousands of customers spend millions and millions of dollars on those early efforts -- the ones that aren’t real products. But heck, that’s their problem, right?

Microsoft decided to build its network technology on top of DOS because that was the company franchise. All new technologies were conceived as extensions to DOS, keeping the old technology competitive—or at least looking so—in an increasingly complex market. But DOS wasn’t a very good system on which to build a network operating system. DOS was limited to 640K of memory. DOS had an awkward file structure that got slower and slower as the number of files increased, which could become a major problem on a server with thousands of files. In contrast, Novell’s Netware could use megabytes of memory and had a lightning-fast file system. After all, Netware was built from scratch to be a network operating system, while Microsoft’s product wasn’t.

MS-Net appeared in 1985. It was licensed to more than thirty different hardware companies in the same way that MS-DOS was licensed to makers of PC clones. Only three versions of MS-Net actually appeared, including IBM’s PC LAN Program, a dog.

The final nail in Microsoft’s networking coffin was also driven in 1985 when Novell introduced Netware 2.0, which ran on the 80286 processor in IBM’s PC-AT. You could run MS-Net on an AT also but only in the mode that emulated an 8086 processor and was limited to addressing 640K. But Netware on an AT took full advantage of the 80286 and could address up to 16 megabytes of RAM, making Novell’s software vastly more powerful than Microsoft’s.
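The size of that gap follows from address-line arithmetic. The sketch below relies on background facts that are not spelled out in the text: the 8086 has 20 address lines, the 80286 has 24, and the 640K ceiling is what remained for DOS programs once the IBM PC design reserved the top 384K of the 8086's one-megabyte space. The helper name `addressable_bytes` is made up for illustration.

```python
KIB = 1024
MIB = 1024 * KIB


def addressable_bytes(address_lines: int) -> int:
    """Bytes reachable with the given number of address lines."""
    return 2 ** address_lines


real_mode = addressable_bytes(20)       # 8086, or an 80286 emulating one
protected_mode = addressable_bytes(24)  # 80286 used to its full extent
dos_limit = 640 * KIB                   # what DOS programs could actually use

print(real_mode // MIB)       # 1
print(protected_mode // MIB)  # 16
print((real_mode - dos_limit) // KIB)  # 384 (the reserved upper area)
```

On those figures, Netware on an AT could reach roughly 25 times the memory available to MS-Net on the same machine (16 megabytes against 640K).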

This business of taking software written for the 8086 processor and porting it to the 80286 normally required completely rewriting the software by hand, often taking years of painstaking effort. It wasn’t just a matter of recompiling the software, of having a machine do the translation, because Microsoft staunchly maintained that there was no way to recompile 8086 code to run on an 80286. Bill Gates swore that such a recompile was impossible. But Drew Major of Superset didn’t know what Bill Gates knew, and so he figured out a way to recompile 8086 code to run on an 80286. What should have taken months or years of labor was finished in a week, and Novell had won the networking war. Six years and more than $100 million later, Microsoft finally admitted defeat.

Meanwhile, back in Boca Raton, IBM was still struggling to produce a follow-on to the PC-AT. The reason that it began taking IBM so long to produce new PC products was the difference between strategy and tactics. Building the original IBM PC was a tactical exercise designed to test a potential new market by getting a product out as quickly as possible. But when the new market turned out to be ten times larger than anyone at IBM had realized and began to affect the sales of other divisions of the company, PCs suddenly became a strategic issue. And strategy takes time to develop, especially at IBM.

Remember that there is nobody working at IBM today who recalls those sun-filled company picnics in Endicott, New York, back when the company was still small, the entire R&D department could participate in one three-legged race, and inertia was not yet a virtue. The folks who work at IBM today generally like the fact that it is big, slow moving, and safe. IBM has built an empire by moving deliberately and hiring third-wave people. Even Don Estridge, who led the tactical PC effort up through the PC-AT, wasn’t welcome in a strategic personal computer operation; Estridge was a second-wave guy at heart and so couldn’t be trusted. That’s why Estridge was promoted into obscurity, and Bill Lowe, who’d proved that he was a company man, a true third waver with only occasional second-wave leanings that could be, and were, beaten out of him over time, was brought back to run the PC operations.

As an enormous corporation that had finally decided personal computers were part of its strategic plan, IBM laboriously reexamined the whole operation and started funding backup ventures to keep the company from being too dependent on any single PC product development effort. Several families of new computers were designed and considered, as were at least a couple of new operating systems. All of this development and deliberation takes time.

Even the vital relationship with Bill Gates was reconsidered in 1985, when IBM thought of dropping Microsoft and DOS altogether in favor of a completely new operating system. The idea was to port to the Intel 286 processor operating system software from a California company called Metaphor Computer Systems. The Metaphor software was yet another outgrowth of work done at Xerox PARC and ran then strictly on IBM mainframes, offering an advanced office automation system with a graphical user interface. The big corporate users who were daring enough to try Metaphor loved it, and IBM dreamed that converting the software to run on PCs would draw personal computers seamlessly into the mainframe world in a way that wouldn’t be so directly competitive with its other product lines. Porting Metaphor software would also have brought IBM a major role in application software for its PCs—an area where the company had so far failed.

Since Microsoft wasn’t even supposed to know that this Metaphor experiment was happening, IBM chose Lotus Development to port the software. The programmers at Lotus had never written an operating system, but they knew plenty about Intel processor architecture, since the high performance of Lotus 1-2-3 came mainly from writing directly to the processor, avoiding MS-DOS as much as possible.

Nothing ever came of the Lotus/Metaphor operating system, which turned out to be an IBM fantasy. Technically, it was asking too much of the 80286 processor. The 80386 might have handled the job, but for other strategic reasons, IBM was reluctant to move up to the 386.

IBM has had a lot of such fantasies and done a lot of negotiating and investigating whacko joint ventures with many different potential software partners. It’s a way of life at the largest computer company in the world, where keeping on top of the industry is accomplished through just this sort of diplomacy. Think of dogs sniffing each other.

IBM couldn’t go forever without replacing the PC-AT, and eventually it introduced a whole new family of microcomputers in April 1987. These were the Personal System/2s and came in four flavors: Models 30, 50, 60, and 80. The Model 30 used an 8086 processor, the Models 50 and 60 used an 80286, and the Model 80 was IBM’s first attempt at an 80386-based PC. The 286 and 386 machines used a new bus standard called the Micro Channel, and all of the PS/2s had 3.5-inch floppy disk drives. By changing hardware designs, IBM was again trying to have the market all to itself.

A new bus standard meant that circuit cards built for the IBM PC, XT, or AT models wouldn’t work in the PS/2s, but the new bus, which was 32 bits wide, was supposed to offer so much higher performance that a little more cost and inconvenience would be well worthwhile. The Micro Channel was designed by an iconoclastic (by IBM standards) engineer named Chet Heath and was reputed to beat the shit out of the old 16-bit AT bus. It was promoted as the next generation of personal computing, and IBM expected the world to switch to its Micro Channel in just the way it had switched to the AT bus in 1984.

But when we tested the PS/2s at InfoWorld, the performance wasn’t there. The new machines weren’t even as fast as many AT clones. The problem wasn’t the Micro Channel; it was IBM. Trying to come up with a clever work-around for the problem of generating a new product line every eighteen months when your organization inherently takes three years to do the job, product planners in IBM’s Entry Systems Division simply decided that the first PS/2s would use only half of the features of the Micro Channel bus. The company deliberately shipped hobbled products so that, eighteen months later, it could discover all sorts of neat additional Micro Channel horsepower, which would be presented in a whole new family of machines using what would then be called Micro Channel 2.

IBM screwed up in its approach to the Micro Channel. Had it introduced the whole product in 1987, doubling the performance of competitive hardware, buyers would have followed IBM to the new standard as they had before. It could have led the industry to a new 32-bit bus standard -- one where IBM again would have had a technical advantage for a while. But instead, Big Blue held back features and then tried to scare away clone makers by threatening legal action and talking about granting licenses for the new bus only if licensees paid 5 percent royalties on both their new Micro Channel clones and on every PC, XT, or AT clone they had ever built. The only result of this new hardball attitude was that an industry that had had little success defining a new bus standard by itself was suddenly solidified against IBM. Compaq Computer led a group of nine clone makers that defined their own 32-bit bus standard in competition with the Micro Channel. Compaq led the new group, but IBM made it happen.

From IBM’s perspective, though, its approach to the Micro Channel and the PS/2s was perfectly correct since it acted to protect Big Blue’s core mainframe and minicomputer products. Until very recently, IBM concentrated more on the threat that PCs posed to its larger computers than on the opportunities to sell ever more millions of PCs. Into the late 1980s, IBM still saw itself primarily as a maker of large computers.

Along with new PS/2 hardware, IBM announced in 1987 a new operating system called OS/2, which had been under development at Microsoft when IBM was talking with Metaphor and Lotus. The good part about OS/2 was that it was a true multitasking operating system that allowed several programs to run at the same time on one computer. The bad part about OS/2 was that it was designed by IBM.

When Bill Lowe sent his lieutenants to Microsoft looking for an operating system for the IBM PC, they didn’t carry a list of specifications for the system software. They were looking for something that was ready—software they could just slap on the new machine and run. And that’s what Microsoft gave IBM in PC-DOS: an off-the-shelf operating system that would run on the new hardware. Microsoft, not IBM, decided what DOS would look like and act like. DOS was a Microsoft product, not an IBM product, and subsequent versions, though they appeared each time in the company of new IBM hardware, continued to be 100 percent Microsoft code.

OS/2 was different. OS/2 was strategic, which meant that it was too important to be left to the design whims of Microsoft alone. OS/2 would be designed by IBM and just coded by Microsoft. Big mistake.

OS/2 1.0 was designed to run on the 80286 processor. Bill Gates urged IBM to go straight for the 80386 processor as the target for OS/2, but IBM was afraid that the 386 would offer performance too close to that of its minicomputers. Why buy an AS/400 minicomputer for $200,000, when half a dozen networked PS/2 Model 80s running OS/2-386 could give twice the performance for one third the price? The only reason IBM even developed the 386-based Model 80, in fact, was that Compaq was already selling thousands of its DeskPro 386s. Over the objections of Microsoft, then, OS/2 was aimed at the 286, a chip that Gates correctly called “brain damaged.”

OS/2 had both a large address space and virtual memory. It had more graphics options than either Windows or the Macintosh, as well as being multithreaded and multitasking. OS/2 looked terrific on paper. But what the paper didn’t show was what Gates called “poor code, poor design, poor process, and other overhead” thrust on Microsoft by IBM.

While Microsoft retained the right to sell OS/2 to other computer makers, this time around IBM had its own special version of OS/2, Extended Edition, which included a database called the Data Manager, and an interface to IBM mainframes called the Communication Manager. These special extras were intended to tie OS/2 and the PS/2s into their true function as very smart mainframe terminals. IBM had much more than competing with Compaq in mind when it designed the PS/2s. IBM was aiming toward a true counterreformation in personal computing, leading millions of loyal corporate users back toward the holy mother church—the mainframe.

IBM’s dream for the PS/2s, and for OS/2, was to play a role in leading American business away from the desktop and back to big expensive computers. This was the objective of SAA—IBM’s plan to integrate its personal computers and mainframes—and of what they hoped would be SAA’s compelling application, called OfficeVision.

On May 16, 1989, I sat in an auditorium on the ground floor of the IBM building at 540 Madison Avenue. It was a rainy Tuesday morning in New York, and the room, which was filled with bright television lights as well as people, soon took on the distinctive smell of wet wool. At the front of the room stood a podium and a long table, behind which sat the usual IBM suspects—a dozen conservatively dressed, overweight, middle-aged white men.

George Conrades, IBM’s head of U.S. marketing, appeared behind the podium. Conrades, 43, was on the fast career track at IBM. He was younger than nearly all the other men of IBM who sat at the long table behind him, waiting to play their supporting roles. Behind the television camera lens, 25,000 IBM employees, suppliers, and key customers spread across the world watched the presentation by satellite.

The object of all this attention was a computer software product from IBM called OfficeVision, the result of 4,000 man-years of effort at a cost of more than a billion dollars.

To hear Conrades and the others describe it through their carefully scripted performances, OfficeVision would revolutionize American business. Its “programmable terminals” (PCs) with their immense memory and processing power would gather data from mainframe computers across the building or across the planet, seeking out data without users’ having even to know where the data were stored and then compiling them into colorful and easy-to-understand displays. OfficeVision would bring top executives for the first time into intimate -- even casual -- contact with the vital data stored in their corporate computers. Beyond the executive suite, it would offer access to data, sophisticated communication tools, and intuitive ways of viewing and using information throughout the organization. OfficeVision would even make it easier for typists to type and for file clerks to file.

In the glowing words of Conrades, OfficeVision would make American business more competitive and more profitable. If the experts were right that computing would determine the future success or failure of American business, then OfficeVision simply was that future. It would make that success.

“And all for an average of $7,600 per desk,” Conrades said, “not including the IBM mainframe computers, of course.”

The truth behind this exercise in worsted wool and public relations is that OfficeVision was not at all the future of computing but rather its past, spruced up, given a new coat of paint, and trotted out as an all-new model when, in fact, it was not new at all. In the eyes of IBM executives and their strategic partners, though, OfficeVision had the appearance of being new, which was even better. To IBM and the world of mainframe computers, danger lies in things that are truly new.

With its PS/2s and OS/2 and OfficeVision, IBM was trying to get a jump on a new wave of computing that everyone knew was on its way. The first wave of computing was the mainframe. The second wave was the minicomputer. The third wave was the PC.

Now the fourth wave -- generally called network computing -- seemed imminent, and IBM’s big-bucks commitment to SAA and to OfficeVision was its effort to make the fourth wave look as much as possible like the first three. Mainframes would do the work in big companies, minicomputers in medium-sized companies, and PCs would serve small business as well as acting as “programmable terminals” for the big boys with their OfficeVision setups.

Sadly for IBM, by 1991, OfficeVision still hadn’t appeared, having tripped over mountains of bad code and missed delivery schedules, and having run up against the fact of life that corporate America is only willing to invest less than 10 percent of each worker’s total compensation in computing resources for that worker. That’s why secretaries get $3,000 PCs and design engineers get $10,000 workstations. OfficeVision would have cost at least double that amount per desk, had it worked at all, so today IBM is talking about a new, slimmed-down OfficeVision 2.0, which will probably fail too.

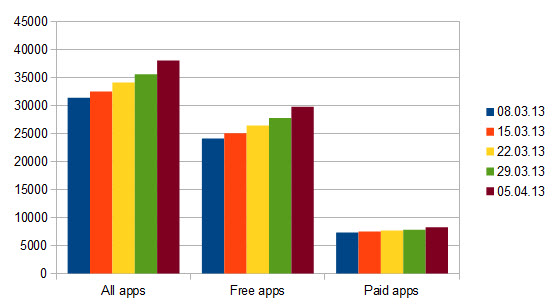

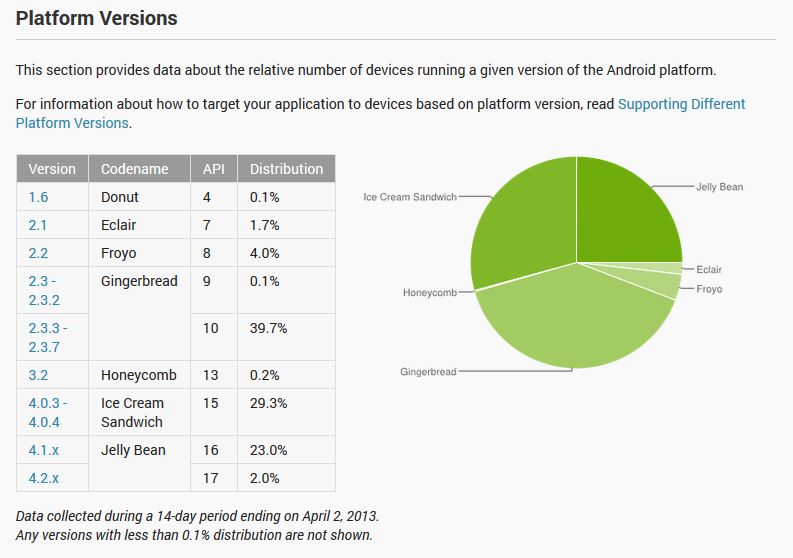

When OS/2 1.0 finally shipped months after the PS/2 introduction, every big shot in the PC industry asked his or her market research analysts when OS/2 unit sales would surpass sales of MS-DOS. The general consensus of analysts was that the crossover would take place in the early 1990s, perhaps as soon as 1991. It didn’t happen.

Time to talk about the realities of market research in the PC industry. Market research firms make surveys of buyers and sellers, trying to predict the future. They gather and sift through millions of bytes of data and then apply their S-shaped demand curves, predicting what will and won’t be a hit. Most of what they do is voodoo. And like voodoo, whether their work is successful depends on the state of mind of their victim/customer.

Market research customers are hardware and software companies paying thousands -- sometimes hundreds of thousands -- of dollars, primarily to have their own hunches confirmed. Remember that the question on everyone’s mind was when unit sales of OS/2 would exceed those of DOS. Forget that OS/2 1.0 was late. Forget that there was no compelling application for OS/2. Forget that the operating system, when it did finally appear, was buggy as hell and probably shouldn’t have been released at all. Forget all that, and think only of the question, which was: When will unit sales of OS/2 exceed those of DOS? The assumption (and the flaw) built into this exercise is that OS/2, because it was being pushed by IBM, was destined to overtake DOS, which it hasn’t. But given that the paying customers wanted OS/2 to succeed and that the research question itself suggested that OS/2 would succeed, market research companies like Dataquest, InfoCorp, and International Data Corporation dutifully crazy-glued their usual demand curves on a chart and predicted that OS/2 would be a big hit. There were no dissenting voices. Not a single market research report that I read or read about at that time predicted that OS/2 would be a failure.

Market research firms tend to serve the same function for the PC industry that a lamppost does for a drunk.

OS/2 1.0 was a dismal failure. Sales were pitiful. Performance was pitiful, too, at least in that first version. Users didn’t need OS/2 since they could already multitask their existing DOS applications using products like Quarterdeck’s DesqView. Independent software vendors, who were attracted to OS/2 by the lure of IBM, soon stopped their OS/2 development efforts as the operating system’s failure became obvious. But the failure of OS/2 wasn’t all IBM’s fault. Half of the blame has to go on the computer memory crisis of the late 1980s.

OS/2 made it possible for PCs to access far more memory than the pitiful 640K available under MS-DOS. On a 286 machine, OS/2 could use up to 16 megabytes of memory and in fact seemed to require at least 4 megabytes to perform acceptably. Alas, this sudden need for six times the memory came at a time when American manufacturers had just abandoned the dynamic random-access memory (DRAM) business to the Japanese.

In 1975, Japan’s Ministry of International Trade and Industry had organized Japan’s leading chip makers into two groups -- NEC-Toshiba and Fujitsu-Hitachi-Mitsubishi -- to challenge the United States for the 64K DRAM business. They won. By 1985, these two groups had 90 percent of the U.S. market for DRAMs. American companies like Intel, which had started out in the DRAM business, quit making the chips because they weren’t profitable, cutting world DRAM production capacity as they retired. Then, to make matters worse, the United States Department of Commerce accused the Asian DRAM makers of dumping -- selling their memory chips in America at less than what it cost to produce them. The Japanese companies cut a deal with the United States government that restricted their DRAM distribution in America -- at a time when we had no other reliable DRAM sources. Big mistake. Memory supplies dropped just as memory demand rose, and the classic supply-demand effect was an increase in DRAM prices, which more than doubled in a few months. Toshiba, which was nearly the only company making 1 megabit DRAM chips for a while, earned more than $1 billion in profits on its DRAM business in 1989, in large part because of the United States government.

Doubled prices are a problem in any industry, but in an industry based on the idea of prices continually dropping, such an increase can lead to panic, as it did in the case of OS/2. The DRAM price bubble was just that—a bubble—but it looked for a while like the end of the world. Software developers who were already working on OS/2 projects began to wonder how many users would be willing to invest the $1,000 that it was suddenly costing to add enough memory to their systems to run OS/2. Just as raising prices killed demand for Apple’s Macintosh in the fall of 1988 (Apple’s primary reason for raising prices was the high cost of DRAM), rising memory prices killed both the supply and demand for OS/2 software.

Then Bill Gates went into seclusion for a week and came out with the sudden understanding that DOS was good for Microsoft, while OS/2 was probably bad. Annual reading weeks, when Gates stays home and reads technical reports for seven days straight and then emerges to reposition the company, are a tradition at Microsoft. Nothing is allowed to get in the way of planned reading for Chairman Bill. During one business trip to South America, for example, the head of Microsoft’s Brazilian operation tried to impress the boss by taking Gates and several women yachting for the weekend. But this particular weekend had been scheduled for reading, so Bill, who is normally very much on the make, stayed below deck reading the whole time.

Microsoft had loyally followed IBM in the direction of OS/2. But there must have been an idea nagging in the back of Bill Gates’s mind. By taking this quantum leap to OS/2, IBM was telling the world that DOS was dead. If Microsoft followed IBM too closely in this OS/2 campaign, it was risking the more than $100 million in profits generated each year by DOS -- profits that mostly didn’t come from IBM. During one of his reading weeks, Gates began to think about what he called “DOS as an asset” and in the process set Microsoft on a collision course with IBM.

Up to 1989, Microsoft followed IBM’s lead, dedicating itself publicly to OS/2 and promising versions of all its major applications that would run under the new operating system. On the surface, all was well between Microsoft and IBM. Under the surface, there were major problems with the relationship. A feisty (for IBM) band of graphics programmers at IBM’s lab in Hursley, England, first forced Microsoft to use an inferior and difficult-to-implement graphics imaging model in Presentation Manager and then later committed all the SAA operating systems, including OS/2, to using PostScript, from the hated house of Warnock -- Adobe Systems.

Although by early 1990, OS/2 was up to version 1.2, which included a new file system and other improvements, more than 200 copies of DOS were still being sold for every copy of OS/2. Gates again proposed to IBM that they abandon the 286-based OS/2 product entirely in favor of a 386-based version 2.0. Instead, IBM’s Austin, Texas, lab whipped up its own OS/2 version 1.3, generally referred to as OS/2 Lite. Outwardly, OS/2 1.3 tasted great and was less filling; it ran much faster than OS/2 1.2 and required only 2 megabytes of memory. But OS/2 1.3 sacrificed subsystem performance to improve the speed of its user interface, which meant that it was not really as good a product as it appeared to be. Thrilled finally to produce some software that was well received by reviewers, IBM started talking about basing all its OS/2 products on 1.3 -- even its networking and database software, which didn’t even have user interfaces that needed optimizing. To Microsoft, which was well along on OS/2 2.0, the move seemed brain damaged, and this time they said so.

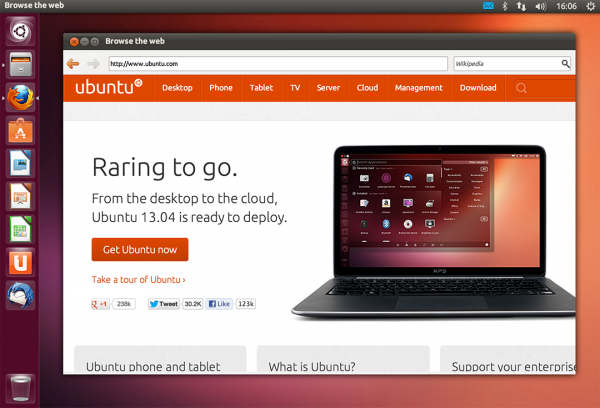

Microsoft began moving away from OS/2 in 1989 when it became clear that DOS wasn’t going away, nor was it in Microsoft’s interest for it to go away. The best solution for Microsoft would be to put a new face on DOS, and that new face would be yet another version of Windows. Windows 3.0 would include all that Microsoft had learned about graphical user interfaces from seven years of working on Macintosh applications. Windows 3.0 would also be aimed at more powerful PCs using 386 processors -- the PCs that Bill Gates expected to dominate business desktops for most of the 1990s. Windows would preserve DOS’s asset value for Microsoft and would give users 90 percent of the features of OS/2, which Gates began to see more and more as an operating system for network file servers, database servers, and other back-end network applications that were practically invisible to users.

IBM wanted to take from Microsoft the job of defining to the world what a PC operating system was. Big Blue wanted to abandon DOS in favor of OS/2 1.3, which it thought could be tied more directly into IBM hardware and applications, cutting out the clone makers in the process. Gates thought this was a bad idea that was bound to fail. He recognized, even if IBM didn’t, that the market had grown to the point where no one company could define and defend an operating system standard by itself. Without Microsoft’s help, Gates thought IBM would fail. With IBM’s help, which Gates viewed more as meddling than assistance, Microsoft might fail. Time for a divorce.

Microsoft programmers deliberately slowed their work on OS/2 and especially on Presentation Manager, its graphical user interface. “What incentive does Microsoft have to get [OS/2-PM] out the door before Windows 3?” Gates asked two marketers from Lotus over dinner following the 1990 Computer Bowl trivia match in April 1990. “Besides, six months after Windows 3 ships it will have greater market share than PM will ever have. OS/2 applications won’t have a chance.”

Later that night over drinks, Gates speculated that IBM would “fold” in seven years, though it could last as long as ten or twelve years if it did everything right. Inevitably, though, IBM would die, and Bill Gates was determined that Microsoft would not go down too.

The loyal Lotus marketers prepared a seven-page memo about their inebriated evening with Chairman Bill, giving copies of it to their top management. Somehow I got a copy of the memo, too. And a copy eventually landed on the desk of IBM’s Jim Cannavino, who had taken over Big Blue’s PC operations from Bill Lowe. The end was near for IBM’s special relationship with Microsoft.

Over the course of several months in 1990, IBM and Microsoft negotiated an agreement leaving DOS and Windows with Microsoft and OS/2 1.3 and 2.0 with IBM. Microsoft’s only connection to OS/2 was the right to develop version 3.0, which would run on non-Intel processors and might not even share all the features of earlier versions of OS/2.

The Presentation Manager programmers in Redmond, who had been having Nerfball fights with their Windows counterparts every night for months, suddenly found themselves melded into the Windows operation. A cross-licensing agreement between the two companies remained in force, allowing IBM to offer subsequent versions of DOS to its customers and Microsoft the right to sell versions of OS/2, but the emphasis in Redmond was clearly on DOS and Windows, not OS/2.

“Our strategy for the 90’s is Windows -- one evolving architecture, a couple of implementations,” Bill Gates wrote. “Everything we do should focus on making Windows more successful.”

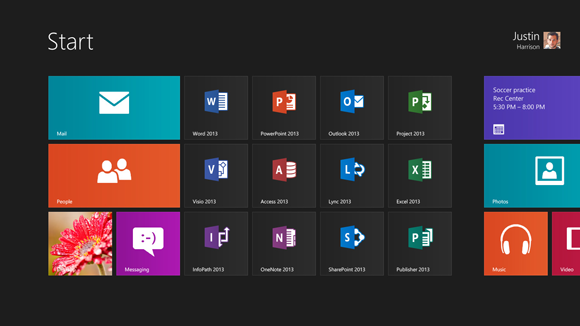

Windows 3.0 was introduced in May 1990 and sold more than 3 million copies in its first year. Like many other Microsoft products, this third try was finally the real thing. And since it had a head start over its competitors in developing applications that could take full advantage of Windows 3.0, Microsoft was more firmly entrenched than ever as the number one PC software company, while IBM struggled for a new identity. All those other software developers, the ones who had believed three years of Microsoft and IBM predictions that OS/2’s Presentation Manager was the way to go, quickly shifted their OS/2 programmers over to writing Windows applications.

Reprinted with permission

Photo Credit: HomeArt/Shutterstock

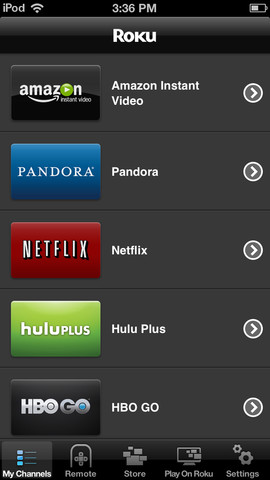

Today's set-top boxes do not all come from the cable or satellite provider and they frequently contain much more functionality than those that do come from the big providers. One is Roku, a company that has been innovating and upgrading at a rather quick pace recently, having only just released the Roku 3 with added functionality.

Today's set-top boxes do not all come from the cable or satellite provider and they frequently contain much more functionality than those that do come from the big providers. One is Roku, a company that has been innovating and upgrading at a rather quick pace recently, having only just released the Roku 3 with added functionality.

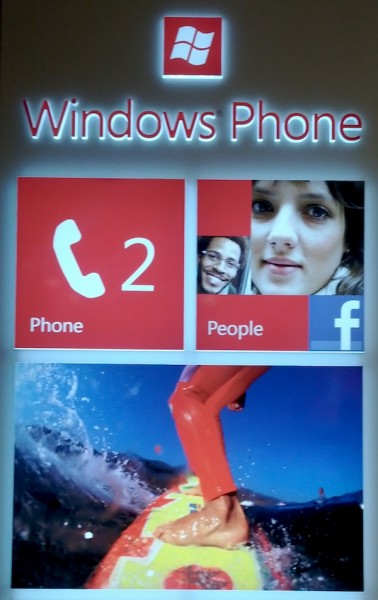

"Windows strength appears to be the ability to attract first time smartphone buyers, upgrading from a feature phone", Mary-Ann Parlato, Kantar Worldpanel ComTech analyst, says about the U.S. handset market for the three months ended in February. "Of those who changed their phone over the last year to a Windows smartphone, 52 percent had previously owned a feature phone".

"Windows strength appears to be the ability to attract first time smartphone buyers, upgrading from a feature phone", Mary-Ann Parlato, Kantar Worldpanel ComTech analyst, says about the U.S. handset market for the three months ended in February. "Of those who changed their phone over the last year to a Windows smartphone, 52 percent had previously owned a feature phone".  The first public beta of

The first public beta of

On Monday, South Korean manufacturer LG announced a new Android flagship smartphone called the Optimus GK. The handset shares its underpinnings with the previously-introduced

On Monday, South Korean manufacturer LG announced a new Android flagship smartphone called the Optimus GK. The handset shares its underpinnings with the previously-introduced  Little more than a month after the company

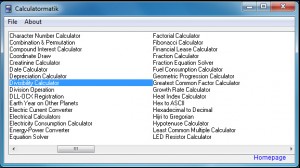

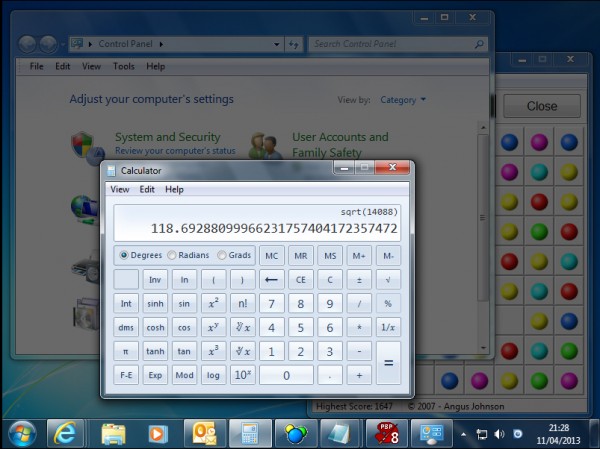

Little more than a month after the company  If you ever need to carry out a quick calculation or unit conversion then there are plenty of online resources which can help. And Google is a great place to start; just enter your calculation in the Search box and see what happens.

If you ever need to carry out a quick calculation or unit conversion then there are plenty of online resources which can help. And Google is a great place to start; just enter your calculation in the Search box and see what happens. If you’re not the mathematical type, don’t worry, there are plenty of more general tools available. Enter your birth date in the Birthday Calculator to see your age in months, weeks, days, hours and minutes, for instance. Dieters may appreciate the Body Mass Index and Basal Metabolic Rate calculators. There are practical calculators to help you figure out mortgage and lease repayments, compound interest and fuel consumption. And you even get tools which really aren’t calculators at all, though they’re still useful: a simple stopwatch, say, or a random password generator.

If you’re not the mathematical type, don’t worry, there are plenty of more general tools available. Enter your birth date in the Birthday Calculator to see your age in months, weeks, days, hours and minutes, for instance. Dieters may appreciate the Body Mass Index and Basal Metabolic Rate calculators. There are practical calculators to help you figure out mortgage and lease repayments, compound interest and fuel consumption. And you even get tools which really aren’t calculators at all, though they’re still useful: a simple stopwatch, say, or a random password generator.

The half-orb nicely elevates the screen, so you can see notifications or easily answer calls with wired or wireless headphones. Two problems: Touching the phone can cause enough slippage to stop charging; the phone is otherwise inaccessible, because it can't be handled. When plugged into the wall, I can check Google+, respond to text messages or go through email -- all of which really needs me to pick up the phone. Voice activation is perhaps an alternative, but not something I tried for this review.

The half-orb nicely elevates the screen, so you can see notifications or easily answer calls with wired or wireless headphones. Two problems: Touching the phone can cause enough slippage to stop charging; the phone is otherwise inaccessible, because it can't be handled. When plugged into the wall, I can check Google+, respond to text messages or go through email -- all of which really needs me to pick up the phone. Voice activation is perhaps an alternative, but not something I tried for this review. Usually when I do a Q&A session with tech firms like

Usually when I do a Q&A session with tech firms like  If you’d like to keep an eye on your kids’ PC activities then you could pay big money for a full-strength parental controls package, with comprehensive monitoring tools, detailed reports and a whole lot more.

If you’d like to keep an eye on your kids’ PC activities then you could pay big money for a full-strength parental controls package, with comprehensive monitoring tools, detailed reports and a whole lot more. There are some technical irritations, too. If the program is running without a system tray icon, for instance, then you can’t access the Options dialog any more. To tweak any settings you have to manually edit its Options.ini file, or delete this and restart the process. This isn’t a critical issue -- there aren’t that many settings and you may never need to change any of them -- but it’s still annoying.

There are some technical irritations, too. If the program is running without a system tray icon, for instance, then you can’t access the Options dialog any more. To tweak any settings you have to manually edit its Options.ini file, or delete this and restart the process. This isn’t a critical issue -- there aren’t that many settings and you may never need to change any of them -- but it’s still annoying.

Most photo editors have a few filters which can turn regular photos into instant works of art: an oil painting, say, or a pencil sketch. But if you’d like more -- or you just want the arty effects, without the photo editing overhead -- then

Most photo editors have a few filters which can turn regular photos into instant works of art: an oil painting, say, or a pencil sketch. But if you’d like more -- or you just want the arty effects, without the photo editing overhead -- then

Cloud-based storage provider SpiderOak has released

Cloud-based storage provider SpiderOak has released

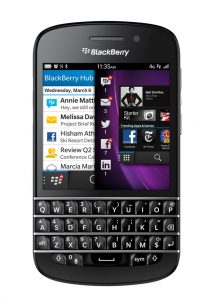

Even though BlackBerry

Even though BlackBerry  The app, according to

The app, according to  This is a question that I never thought I'd ask -- Is the hardware leaving Windows Phone 8 behind its fierce competition? In September last year, I asserted that "

This is a question that I never thought I'd ask -- Is the hardware leaving Windows Phone 8 behind its fierce competition? In September last year, I asserted that " Samsung has announced it will be expanding its ATIV brand name to cover all of its Windows PCs, not just its convertible PC devices. The aim is to create a single cohesive brand for all its Windows 8 products, in a similar way to how the Galaxy brand unifies all of its Android smartphones.

Samsung has announced it will be expanding its ATIV brand name to cover all of its Windows PCs, not just its convertible PC devices. The aim is to create a single cohesive brand for all its Windows 8 products, in a similar way to how the Galaxy brand unifies all of its Android smartphones. For road warriors looking to catch up on the latest events, reply to important business emails, or perform some crucial tasks while traveling, a cellular data connection is a must-have feature for a tablet. The best case scenario -- if Wi-Fi is not available or a safe option -- is to rely on a smartphone in order to tether, which drains its battery in a couple of hours (at best). Definitely not an option for a lot of people.

For road warriors looking to catch up on the latest events, reply to important business emails, or perform some crucial tasks while traveling, a cellular data connection is a must-have feature for a tablet. The best case scenario -- if Wi-Fi is not available or a safe option -- is to rely on a smartphone in order to tether, which drains its battery in a couple of hours (at best). Definitely not an option for a lot of people.

Today is the biggest day of the off-season for NFL fans. We all wait to see who our favorite team selects when the pick rolls around. We wonder if the player will be boon or bust. After all, the Draft is little more than a crap shoot -- ask the San Diego Chargers how that Ryan Leaf kid worked out. On the other hand, there are late round gems to be found -- Terrell Davis was a sixth round pick and Davone Bess went undrafted.

Today is the biggest day of the off-season for NFL fans. We all wait to see who our favorite team selects when the pick rolls around. We wonder if the player will be boon or bust. After all, the Draft is little more than a crap shoot -- ask the San Diego Chargers how that Ryan Leaf kid worked out. On the other hand, there are late round gems to be found -- Terrell Davis was a sixth round pick and Davone Bess went undrafted. The weather is warming up and our thoughts are turning towards vacation time. Where are you heading on that big summer trip? Regardless of the destination you decide on,

The weather is warming up and our thoughts are turning towards vacation time. Where are you heading on that big summer trip? Regardless of the destination you decide on,  If you don't mind overpaying for a cup of coffee then you must read this story. Charitybuzz lists an auction which gives the highest bidder the opportunity to have coffee with Apple CEO Tim Cook at the fruit-logo company's headquarters in Cuppertino, California. The proceeds of the auction will be donated by the man himself to the RFK Center for Justice and Human Rights.

If you don't mind overpaying for a cup of coffee then you must read this story. Charitybuzz lists an auction which gives the highest bidder the opportunity to have coffee with Apple CEO Tim Cook at the fruit-logo company's headquarters in Cuppertino, California. The proceeds of the auction will be donated by the man himself to the RFK Center for Justice and Human Rights. Microsoft gets a lot of press coverage for its

Microsoft gets a lot of press coverage for its

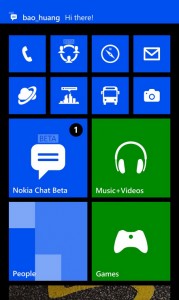

Today, through its Beta Labs blog, Finnish maker Nokia announces a new experimental app for the Lumia Windows Phone lineup. Available only in a select number of markets, Nokia Chat for Windows Phone is designed to connect Lumia users with "friends who use Lumia, Asha, S40, and Symbian devices, and those using Yahoo! Messenger on other mobile devices and platforms".

Today, through its Beta Labs blog, Finnish maker Nokia announces a new experimental app for the Lumia Windows Phone lineup. Available only in a select number of markets, Nokia Chat for Windows Phone is designed to connect Lumia users with "friends who use Lumia, Asha, S40, and Symbian devices, and those using Yahoo! Messenger on other mobile devices and platforms". Not every business embraces BYOD (Bring Your Own Device). The reasons for rejecting it are usually down to security concerns -- firms are understandably worried about their data falling into the wrong hands if the device gets lost or stolen once it leaves the building.

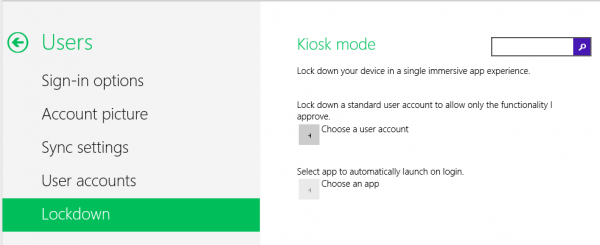

Not every business embraces BYOD (Bring Your Own Device). The reasons for rejecting it are usually down to security concerns -- firms are understandably worried about their data falling into the wrong hands if the device gets lost or stolen once it leaves the building.

Yesterday we informed you that T-Mobile had announced a change of plan concerning its Samsung Galaxy S4 online availability. Due to an "unexpected delay with inventory deliveries", the US mobile operator revealed that the smartphone

During the Consumer Electronics Show in January,

Burnaware Technologies has released

Fourth in a series. Before I

In comparing Bing’s results with Google I’ve found the two sites often deliver the same results, just in a slightly different order. Bing has some great touches I really like. Search for an artist, like "will.i.am" for example, and Bing will provide quick links to his Twitter account and Facebook page and Klout score. You can also listen to his songs via the MySpace player.

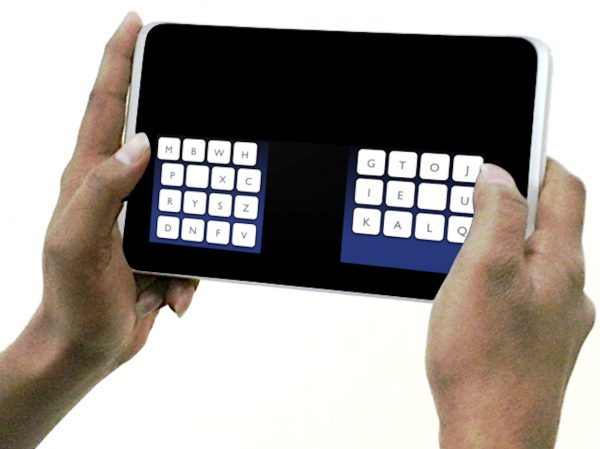

Swype is one of the most appealing and competent third-party keyboards that you can get on Android today, touting more than 250 million users worldwide. The app practically made swipe input popular, a feature which has since been adopted by SwiftKey and even the green droid itself in the

Users can upload their dictionaries onto the cloud in order to take advantage of the accumulated data across all of their devices or simply perform a backup. Swype also comes with a voice-dictation feature and a "smart editor" which "analyzes an entire sentence, flagging potential errors for a quick fix, and includes suggestions for the most likely alternatives".

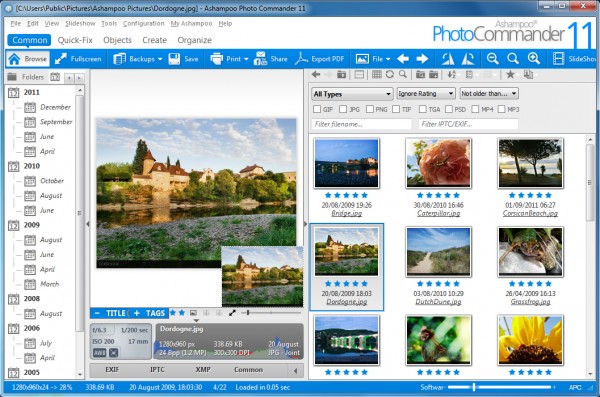

If you are a T-Mobile customer waiting to receive the

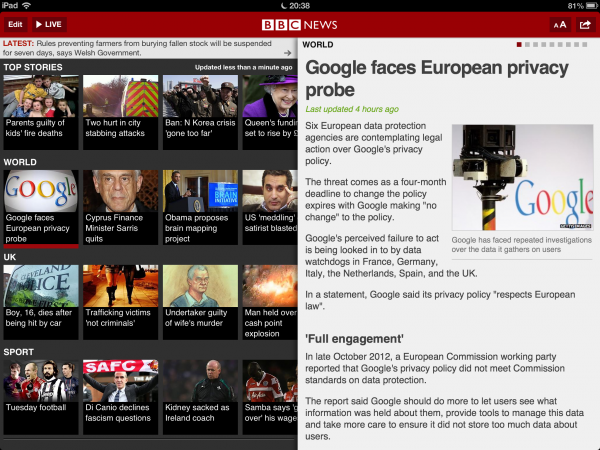

Ashampoo has announced the release of

The BBC tends to favor iOS when it comes to mobile apps. The broadcaster isn’t ignoring Android, it just takes a while to roll out apps for Google’s mobile operating system, and often those apps, when they do arrive, aren’t as slick or don’t have all the features found in the iOS versions.

The measure of a platform's success is applications -- and, contrary to Apple marketing, not how many but which ones. Windows Phone 8 gets a lift today with the addition of

Back in January, I was fortunate enough to get an invitation to

It is refreshing to see a big Android manufacturer give something back to the enthusiast and developer community that supports its devices. After the

If you own a Lumia Windows Phone and don't mind fiddling with experimental software then Nokia may have something available for you in the app store, kept away from prying eyes. Through the

Google Street View, which started with a few major cities in the United States back in 2007, has now expanded to 50 nations. Ulf Spitzer, Program Manager for Google Street View,

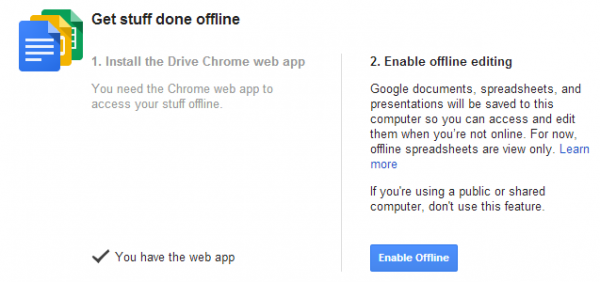

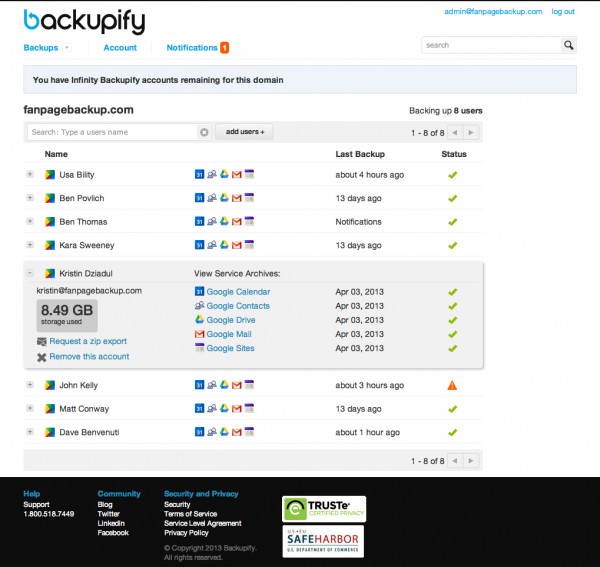

Over five million businesses currently use Google Apps -- a number that is growing all the time -- and while getting to grips with the cloud-based productivity suite is fairly easy, there will always be some staff members who struggle.

IObit has released

Gmail shows little sign of becoming any less popular, but any iOS user will find that dealing with a Gmail account on an Apple device is not the most pleasant experience.

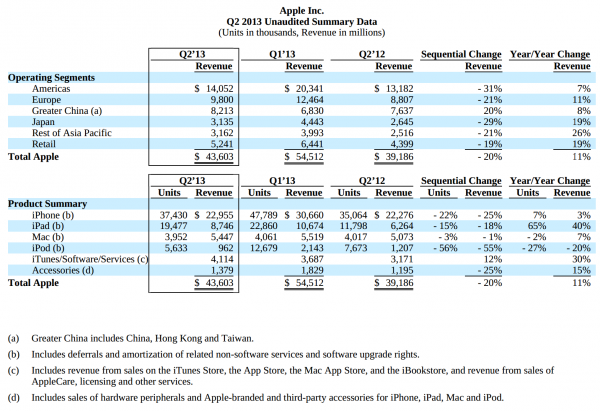

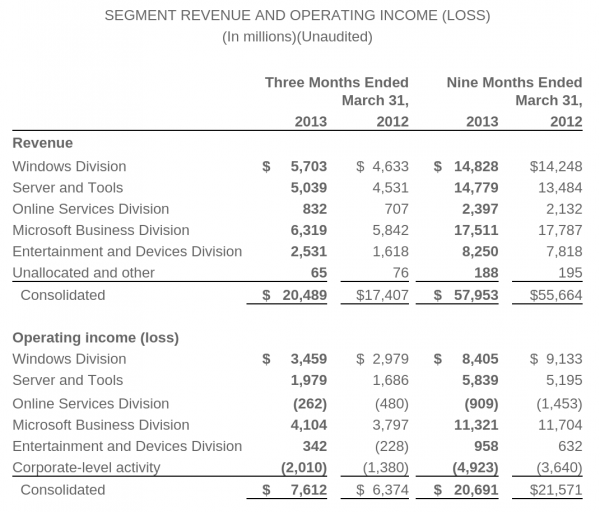

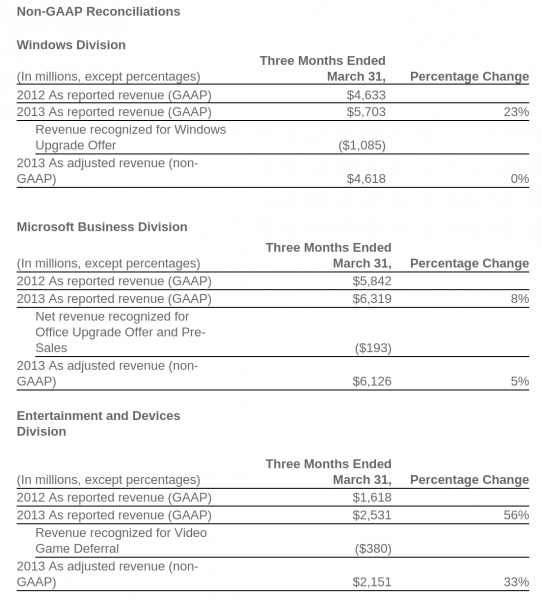

The "Microsoft's dead" meme is one of the most popular among tech bloggers and arm-chair pundit commenters. Posts are everywhere the last 30 days or so, fed this month by reports of record-weak PC shipments. After market close yesterday, with

Following the lead of a number of high-profile companies like

The app is called

This may seem a bit ironic, given that Xbox Live spent much of last Saturday

Microsoft bet the farm on touch. It came up with an OS that works brilliantly on touch devices and not as well on bog-standard PCs (it’s not bad on them -- far from it -- but it’s not as good).

Unveiled

If you regularly try out freeware tools then you’ll know many come bundled with annoying adware. This can use all kinds of dubious tactics to install itself on your PC, and getting rid of these irritations later can be a problem (even if you remove the core code, remnants usually remain to clutter your system).

And in our tests

UK polling company YouGov has released the results of its latest

Piriform has released

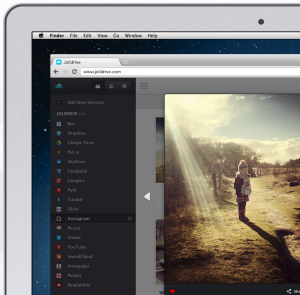

Jolicloud may perhaps be the coolest cloud service you have not yet discovered. Created back in 2009, the company derived from Joli OS into a platform to access your cloud-based online life. It brings together everything you have stored on all of the various cloud services and, if you are like me, then that can be a wide net to cast.

Marissa Mayer is bringing big changes to Yahoo and one of them is apparently getting the old search site back into the public focus with new mobile apps. That process begins today in the world of both Apple and meteorology -- fitting since tornado and thunderstorm season is getting underway and hurricanes are on the horizon.

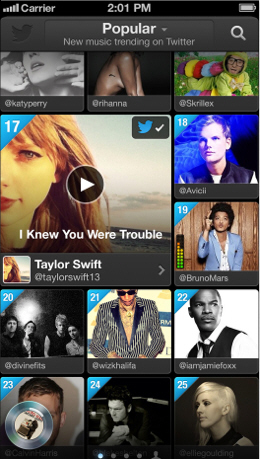

There’s been a lot of talk and rumors flying about Twitter’s new music discovery service, but today the social network revealed the details and launched the first app for it.

If you are unable to comment on BetaNews stories, our apologies: comment service Disqus is suffering service problems this morning. A reader alerted me about 30 minutes ago. When I couldn't comment on any story, I headed over to Disqus, only to get an "unavailable" message.

IObit, which is best known for its excellent Advanced SystemCare suite for Windows, has a useful all-in-one Android security and performance optimization app called

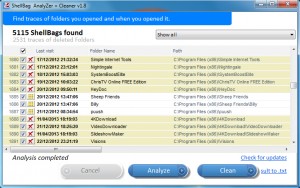

Iolo, the developer responsible for System Mechanic, has an Android app called

Every time you create, modify or access a folder on your PC, Windows records these details in the Registry. There’s nothing shady about this -- the action is a key part of recording your folder view settings, and maintaining a list of your favorite folders -- but it does introduce a privacy risk, as snoopers can use the data to track some of your PC activities.

If you’re worried about any of this, though, clicking Clean will provide some possible solutions. The program can delete references to particular folder types, scramble dates and times, even securely overwriting this information so there’s no way it can be recovered later. (Which seems like overkill to us, but it’s good to have the option.)

No, I am not talking of the nerdtastic movie from Joss Whedon, but of an app. I have written twice now of my move from an HTPC to Google TV in the living room, with my most recent post surrounding ways to get both live TV and home media to the tiny set top box. For serving up home media I opted for Plex, which seemed the best solution.

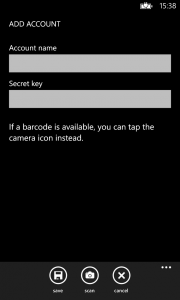

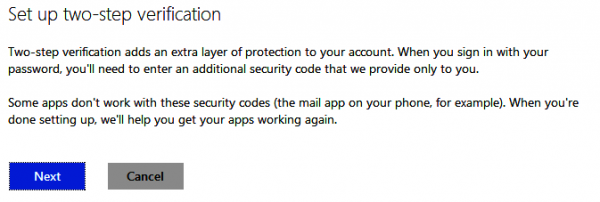

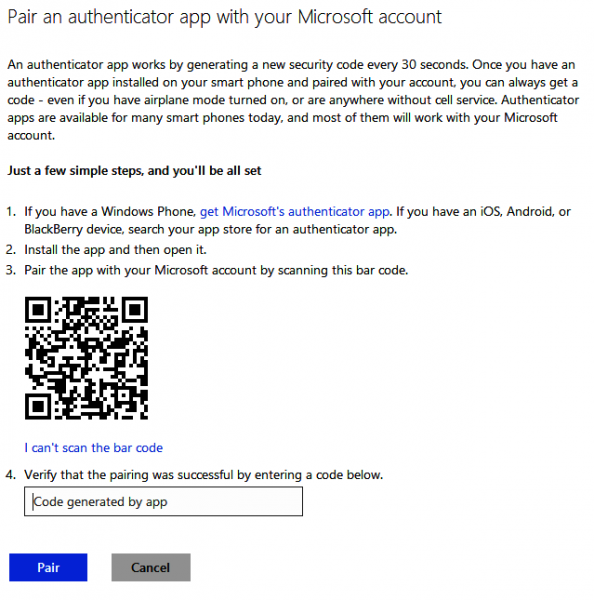

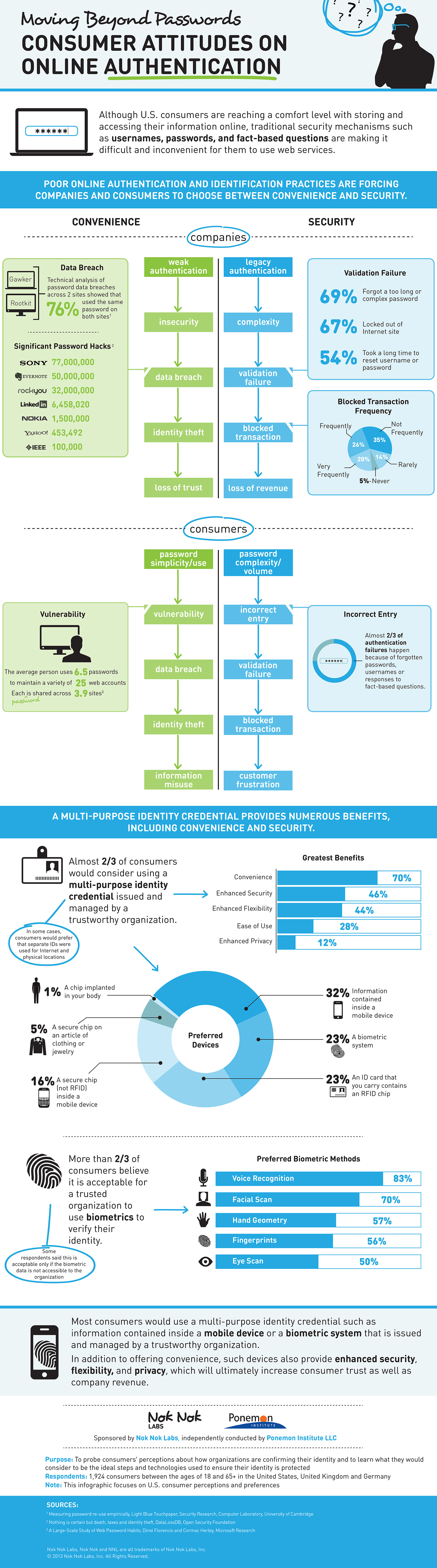

While Plex for Google TV is free,

Let's face it -- secure online authentication is a chore. Except for a couple of people who enjoy using very complex passwords and/or a password manager, most of us find it difficult to use a secure combination of characters for each and every website where we have an account. Two-factor authentication is also not all that comfortable to manage, requiring use of a secondary means of generating a secure code. Often that's a token given by the bank, a text message sent by the service provider, or an app.

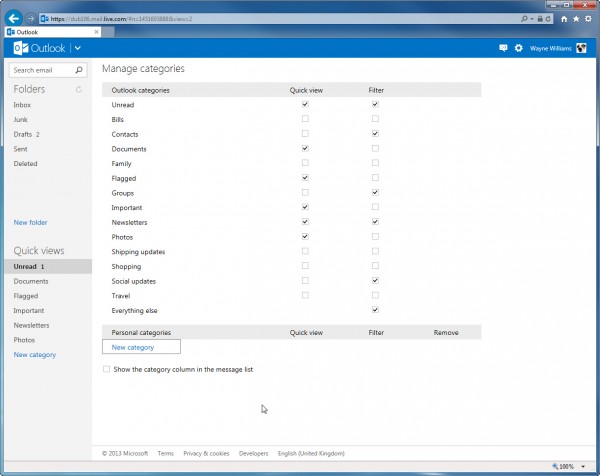

Most of the focus these days seems to be around Outlook.com, as Microsoft anxiously endeavours to move its apps online and turn software into a service. But, while the latest version of Microsoft's productivity suite -- Office 365 Home Premium -- includes the ability to access the apps on the web, ultimately it is still a software suite on your computer.

Little over three weeks after the

Facebook has released