This is the first of a couple of columns about a growing trend in Artificial Intelligence (AI) and how it is likely to be integrated in our culture. Computerworld ran an interesting overview article on the subject yesterday that got me thinking not only about where this technology is going but how it is likely to affect us, not just as a people but as individuals. How is AI likely to affect me? The answer is scary.

Today we consider the general case and tomorrow the very specific.

The failure of Artificial Intelligence. Back in the 1980s there was a popular field called Artificial Intelligence, the major idea of which was to figure out how experts do what they do, reduce those tasks to a set of rules, then program computers with those rules, effectively replacing the experts. The goal was to teach computers to diagnose disease, translate languages, and even figure out what we wanted but didn’t know ourselves.

It didn’t work.

Artificial Intelligence, or AI as it was called, absorbed hundreds of millions of Silicon Valley VC dollars before being declared a failure. Though it wasn’t clear at the time, the problem with AI was that we just didn’t have enough computer processing power at the right price to accomplish those ambitious goals. But thanks to MapReduce and the cloud we have more than enough computing power to do AI today.

The human speed bump. It’s ironic that a key idea behind AI was to give language to computers, yet much of Google’s success has come from effectively taking language away from computers -- human language, that is. The XML and SQL data standards that underlie almost all web content are not used at Google, where they realized that making human-readable data structures made no sense when it was computers -- and not humans -- that would be doing the communicating. It’s through the elimination of human readability, then, that much progress has been made in machine learning.

You see, in today’s version of Artificial Intelligence we don’t need to teach our computers to perform human tasks: they teach themselves.

Google Translate, for example, can be used online for free by anyone to translate text back and forth between more than 70 languages. This statistical translator uses billions of word sequences mapped in two or more languages. This in English means that in French. There are no parts of speech, no subjects or verbs, no grammar at all. The system just figures it out. And that means there’s no need for theory. It works, but we can’t say exactly why because the whole process is data driven. Over time Google Translate will get better and better, translating based on what are called correlative algorithms -- rules that never leave the machine and are too complex for humans to even understand.
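To make "this in English means that in French" concrete, here is a toy sketch of the data-driven idea. It is not how Google Translate actually works: the four-sentence corpus, the `train` and `translate` names, and the Dice-coefficient scoring are all assumptions chosen for illustration. The point is only that word pairings can fall out of co-occurrence counts with no grammar supplied.

```python
from collections import Counter
from itertools import product

# Hypothetical miniature parallel corpus (English, French).
PARALLEL = [
    ("the cat", "le chat"),
    ("the dog", "le chien"),
    ("a cat", "un chat"),
    ("a dog", "un chien"),
]

def train(pairs):
    """Map each source word to the target word it co-occurs with most strongly."""
    src_counts, tgt_counts, joint = Counter(), Counter(), Counter()
    for src, tgt in pairs:
        src_words, tgt_words = set(src.split()), set(tgt.split())
        src_counts.update(src_words)
        tgt_counts.update(tgt_words)
        # Count every (source word, target word) pair seen in the same sentence pair.
        joint.update(product(src_words, tgt_words))

    best = {}
    for (s, t), n in joint.items():
        # Dice coefficient: high only when the two words nearly always appear together.
        score = 2 * n / (src_counts[s] + tgt_counts[t])
        if score > best.get(s, ("", 0.0))[1]:
            best[s] = (t, score)
    return {s: t for s, (t, _) in best.items()}

def translate(sentence, table):
    """Word-by-word lookup; unknown words pass through unchanged."""
    return " ".join(table.get(w, w) for w in sentence.split())

table = train(PARALLEL)
print(translate("the dog", table))
```

Nothing in the code knows that "the" is an article or "dog" a noun; the pairing comes entirely from the counts. A real system works with vastly more data and far more elaborate statistics, which is why its rules are so hard for a person to inspect.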

Google Brain. At Google they have something called Google Vision that currently has 16,000 microprocessors, equivalent to about a tenth of our brain’s visual cortex. It specializes in computer vision and was trained exactly the same way as Google Translate, through massive numbers of examples -- in this case still images (BILLIONS of still images) taken from YouTube videos. Google Vision looked at images for 72 straight hours and essentially taught itself to see twice as well as any other computer on Earth. Give it an image and it will find another one like it. Tell it that the image is a cat and it will be able to recognize cats. Remember, this took three days. How long does it take a newborn baby to recognize cats?

This is exactly how IBM’s Watson computer came to win at Jeopardy, just by crunching old episode questions: there was no underlying theory.

Let’s take this another step or two. There have been data-driven studies of MRIs taken of the active brains of convicted felons. This is not in any way different from the Google Vision example except we’re solving for something different -- recidivism, the likelihood that a criminal will break the law again and return to prison after release. Again without any underlying theory Google Vision seems to be able to differentiate between the brain MRIs of felons likely to repeat and those unlikely to repeat. Sounds a bit like that Tom Cruise movie Minority Report, eh? This has a huge imputed cost savings to society, but it still has the scary aspect of no underlying theory: it works because it works.

Scientists then looked at brain MRIs of people while they were viewing those billions of YouTube frames. Crunch a big enough data set of images and their resultant MRIs and the computer can eventually predict from the MRI what the subject is looking at. That’s reading minds, and again we don’t know how.

Advance science by eliminating the scientists. What do scientists do? They theorize. Big Data in certain cases makes theory either unnecessary or simply impossible. The 2013 Nobel Prize in Chemistry, for example, was awarded to a trio of biologists who did all their research on deriving algorithms to explain the chemistry of enzymes using computers. No enzymes were killed in the winning of this prize.

Algorithms are currently improving at twice the rate of Moore’s Law.

What’s changing is the emergence of a new Information Technology workflow that goes from the traditional:

1) new hardware enables new software

2) new software is written to do new jobs enabled by the new hardware

3) Moore’s Law brings hardware costs down over time and new software is consumerized.

4) rinse, repeat

To the next generation:

1) Massive parallelism allows new algorithms to be organically derived

2) new algorithms are deployed on consumer hardware

3) Moore’s Law is effectively accelerated, though at some peril (we don’t understand our algorithms)

4) rinse, repeat

What’s key here are the new derive-deploy steps and moving beyond what has always been required for a significant technology leap -- a new computing platform. What’s after mobile, people ask? This is after mobile. What will it look like? Nobody knows and it may not matter.

In 10 years Moore’s Law will increase processor power by 128X. By throwing more processor cores at problems and leveraging the rapid pace of algorithm development we ought to increase that by another 128X for a total of 16,384X. Remember Google Vision is currently the equivalent of 0.1 visual cortex. Now multiply that by 16,384X to get 1,638 visual cortex equivalents. That’s where this is heading.
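The arithmetic in that projection can be checked in a few lines. This is just a sketch of the column's own numbers; the variable names are mine, and treating 128X as seven doublings (2 to the 7th power) is my reading of where the figure comes from.

```python
# Assumption: 128X over 10 years means seven doublings (2**7 = 128),
# i.e. one doubling roughly every 17 months.
MOORE_DOUBLINGS = 7
YEARS = 10

hardware_gain = 2 ** MOORE_DOUBLINGS       # gain from Moore's Law alone
algorithm_gain = 128                        # the column's matching gain from cores plus algorithms
total_gain = hardware_gain * algorithm_gain

months_per_doubling = YEARS * 12 / MOORE_DOUBLINGS

current_cortex_fraction = 0.1               # Google Vision today, per the column
future_cortex_equivalents = current_cortex_fraction * total_gain

print(f"hardware gain:      {hardware_gain}X")
print(f"total gain:         {total_gain:,}X")
print(f"months per doubling: {months_per_doubling:.1f}")
print(f"visual cortex equivalents: {future_cortex_equivalents:,.1f}")
```

The two gains multiply rather than add, which is why the total jumps from 128X to 16,384X.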

A decade from now computer vision will be seeing things we can’t even understand, like dogs sniffing cancer today.

We’ve both hit a wall in our ability to generate appropriate theories and found in Big Data a hack to keep improving. The only problem is we no longer understand why things work. How long from there to when we completely lose control?

That’s coming around 2029, according to Ray Kurzweil, when we’ll reach the technological singularity.

That’s the year the noted futurist says $1000 will be able to buy enough computing power to match 10,000 human brains. For the price of a PC, says Ray, we’ll be able to harness more computational power than we can even understand or even describe. A supercomputer in every garage.

Matched with equally fast networks this could mean your computer -- or whatever the device is called -- could search in its entirety in real time every word ever written to answer literally any question. No leaving stones unturned.

Nowhere to hide. Apply this in a world where every electric device is a networked sensor feeding the network and we’ll have not only incredibly effective fire alarms, we’re also likely to have lost all personal privacy.

Those who predict the future tend to overestimate change in the short term and underestimate change in the long term. Desk Set, from 1957 with Katharine Hepburn and Spencer Tracy, envisioned mainframe-based automation eliminating human staffers in a TV network research department. That has happened to some extent, though it took another 50 years and people are still involved. But the greater technological threat wasn’t to the research department but to the TV network itself. Will there even be television networks in 2029? Will there even be television?

Nobody knows.

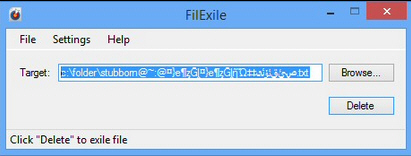
