By Scott M. Fulton, III and Carmi Levy, Betanews

Scott Fulton, Managing Editor, Betanews: We fully anticipate that you'll be following this morning's Apple premiere news from any one of the many gadget blogs with reporters on the scenes, working hard even as we speak to tweak the geek connections on their 3G iPhones. (Or, if they're lucky, on their Droids.) But at some point, you'll want to be able to step back out of the wilderness, as it were, to catch a breath of reality before going back in.

This is why Betanews contributing analyst Carmi Levy and I have opted, just for your sake, to stay behind with you in the real world today, to bring you our thoughts as to what Apple's move today will mean for those of us out here -- people who prefer to improve technology rather than allow technology to try to improve us.

Carmi Levy, Contributing Analyst, Betanews: Here's my quick brain dump on the tablet-to-be-named-later device that's apparently coming from Apple:

Apple is far from the first company to try to carve out a profitable business in this technological middle ground between full-blown laptops and increasingly capable smartphones. This is a market segment where, for the better part of the past 15 years, countless vendors have impaled themselves on inflated expectations and deflated products that probably won't even make decent museum pieces someday.

From where I sit, if Apple introduces just another device into this technological Death Valley, the company is sunk. But the company never does the "just another device" thing, anyway, so there's little risk of that. Whatever feature set it ultimately has, and whatever it's ultimately called, the magic sauce won't be in the device itself. Rather, it'll be the services behind it. Apple's own playbook -- invented with iPod/iTunes and perfected with iPhone/App Store -- dictates tight integration between device, service, and content provision/purchase/management. The new device will not deviate from this strategy. Rather, it'll extend it into new market sectors.

Since it's worked so well for music and software, a larger form factor device will allow Apple to apply this same approach to similarly struggling sectors whose leaders had previously failed to transition from conventional to Internet-borne business models. Publishers who haven't already worn their knuckles raw on Apple's front door will miss out, as Apple's model could do for the written word what it's already done for the singing one. Newspaper and magazine subscriptions on a dead-simple-easy-to-sync device would be a godsend for an industry that otherwise has no hope. Similarly, movies and other rich content will play better on a 10-inch screen with a lifestyle-friendly interface than on a 3.5-inch smartphone.

There's certainly a risk of consumers balking at the price, and complaining that they have to carry, pay for, and manage yet another device. But if the hardware/software/service bundle is as compelling as Apple's two previous mobile platform smash hits have been, consumers -- Apple fanatics as well as mainstream -- will once again line up in droves to buy in.

Scott Fulton: I think we'll know for certain whether this new design is a hit when we see a response from the fashion accessory industry. The iPod created a form factor that clothing and accessories designers, and even automotive interior designers, could build around. And the iPhone has followed suit in that regard as well. When a fashion accessories designer makes room in his design portfolio for a trendy item that's made to support a particular brand of gadget, you know that designer has reason to believe the market for the gadget has scaled up to the market for clothing, which is huge.

Maybe this would be a great Lifetime reality show challenge: Make a room full of amateur fashion designers craft a trendy accessory for this tablet thing. Imagine a button-down shirt with an eleven-inch pocket. Or a clip-on belt holster the size of a Bowie knife.

Here's how I perceive the situation for Apple going in: Back in 2007, there was an urgent, if then under-appreciated, need for the handset form factor to be revolutionized, carried several steps forward. We needed for handsets to get past the incremental, evolutionary phase, and move several years ahead in one fell swoop. Apple accomplished that with a product that will probably be memorialized in history as America's first game-changing product of the 21st century. Steve Jobs was for handsets what Jim Bowie was for knives.

But a similarly urgent need does not exist, I believe, in the field of tablet PCs. While Apple can proceed with the business of revolutionizing the concept of portable, keyboard-less computers with full screens -- and I fully expect Apple to succeed in that department today -- the breadth of its impact on the world at large will be smaller because this is a small market already. Apple could expand the size of that market with a successful design, but that's like adding landfill to the perimeter of an island.

However...only a company in Apple's position could be perfectly fine with that. Let's face it, this could be one of the world's most stunning limited successes. It could be a major investment in a minor market. But few other companies in the world are in a market position to pull this off, to be able to succeed on a limited scale and be good with that. One has to be a little jealous of Apple just for the fact that it can afford to do something great with something small. Not even RIM on one side or HP on the other could really afford a similar gamble.

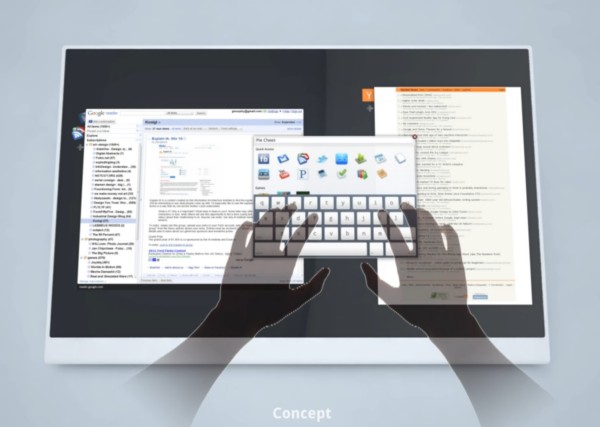

If this tablet design is to have any truly lasting impact on the world at large, it will need to be in how it's operated. It needs to be usable in such a way that it makes user experience (UX) designers in other fields of endeavor, including PC applications, stop in their tracks and realize, "Damn, we should be doing this." In that respect, Apple does have at least some opportunity to make a broader, if more indirect, market impact.

11:39am PT: SF3: It looks like a wrap. Final thoughts from me: I expected a big iPhone. That's pretty much what I'm seeing, except that it's not really a phone. (And it has AT&T, so it's less of a 3G.) I expected an expanded iTunes platform for books, and that's what I'm seeing.

Game-changing innovation in platform design? No. It's an extension of what they have, an "iPhone Maxi."

But as I said in the intro, I don't believe Apple needs to succeed to succeed here. The real success for the company will be getting its feet wet in the CPU production department, to start the growth of its own hardware platform, which will eventually extend to iPhones everywhere.

CL: My bottom line: It's an impressive piece of hardware that borrows liberally from Apple's iPod/iPhone playbook. It'll sell well, and will vanquish bad memories of multiple generations of really lame tablet offerings from other vendors. The big gap in this remains periodical publishing. It remains to be seen whether the New York Times agreement is a one-off, or whether Apple will successfully create a platform that pulls in other newspaper and magazine publishers. The iPad is a promising evolutionary product. The revolution will come when failing industries figure out how to leverage it to fundamentally change the nature of their business.

11:35am PT: CL: I see some cannibalization from higher-end netbooks, but not much. Much as Apple did with the iPhone, it'll skim off the early adopters before adjusting its pricing longer-term.

11:34am PT: CL: They didn't specifically use the term "multitouch" but I'm going to go out on a limb and assume that the "manipulate it with your fingers" line of reasoning suggests that it's multitouch capable.

11:32am PT: Jobs: "Do we have what it takes to establish a third category of products? We think we've got the goods." [via The iPhone Blog]

11:30am PT: SF3: They must be talking about hardware now because the geek blogs all went silent again.

11:28am PT: SF3: Confirmation on the wires, the CoverItLive service was indeed knocked down.

There's very little "multi-" in this product introduction, though. No multi-user. No multi-channel conversations. No multitasking. (Little multitouch, I assume you have to be able to hold down shift while typing capital letters, right?)

11:26am PT: CL: The case seems to offer a modicum of angled support. Not perfect, but better than a featureless slice, I suppose.

Shipping in 60 days. Let the lineups begin.

SF3: Ah, okay, I can see it making a little sense. Not that the keyboard itself is portable, but if you kept it on your desk in the office and took your iPad with you, it makes a little sense. (A little.)

But the pictures they're going to leave us with are of people propping the device up in their laps to type? Not a smart decision.

11:23am PT: CL: The price point is better than we had all initially expected, but still at the high end of a post-recessionary economy that's really enjoying $300 netbooks. It'll sell like hotcakes at this price, but it'll really soar once carrier subsidies increase and up-front costs drop to the same ballpark as an average iPhone.

SF3: Even the $829 price tag for the 64 GB model with 3G included is actually pretty respectable. I always expect the "Apple premium," which is understandable and even desirable from a qualitative standpoint. But this isn't too extravagant at all.

CL: 3G-enabled devices get pretty expensive if you go hog wild with memory.

Hark...is that a keyboard I see? With a dock?

SF3: I'm seeing a picture of an iPad dock on Engadget. I don't see how you could type on that thing.

11:20am PT: SF3: $499 price tag will wake me up. That's where the A4 chip starts kicking in.

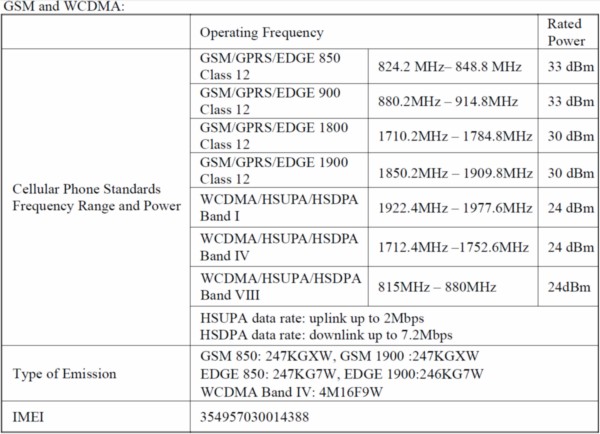

11:18am PT: CL: All iPads unlocked, and all use GSM micro-SIMs. Good news, since it'll let folks with GSM-enabled carriers shop around.

11:17am PT: "Every iPad has the latest and greatest Wi-Fi. But we're going to have models with 3G built in as well."

SF3: At last, the first mention of the words "3G."

CL: Suggests a two-pronged strategy similar to the iPhone and iPod touch. Interesting - and smart.

SF3: I fear an effort to gloss over the implication of "AT&T." Except the fact that I'm not seeing the carrier's name here could be the "One More Thing" part.

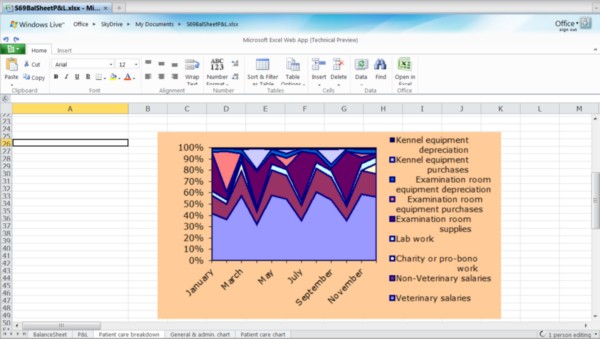

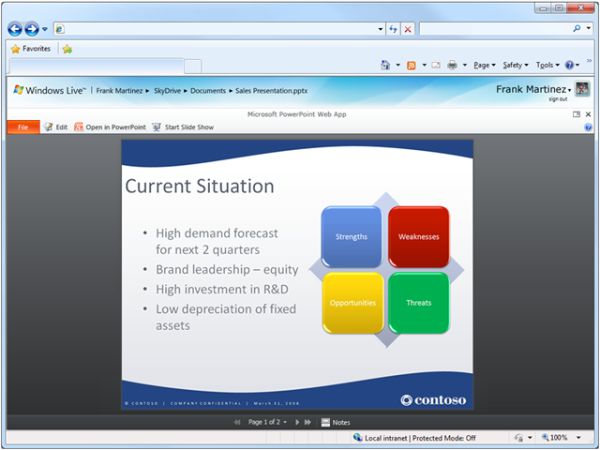

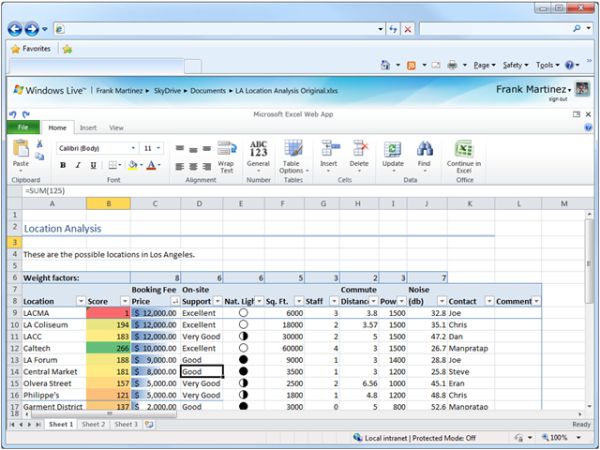

CL: $10 for iWork. Revolutionary price point...for a conventional take on conventional software. Nice, but not out-of-the-park nice. Apple's still missing the cloud-based boat.

AT&T. Sigh. Aren't there any other carriers out there? Why does 2010 dawn with yet more carrier-specific exclusivity and lock-in?

$14.99 for light use and $29.99 for unlimited: Suddenly, laptop/netbook and smartphone data plans look overpriced. Welcome to price erosion across the board...we can all thank Apple for kickstarting the trend.

SF3: Sigh indeed. Here's the part where I start typing Y-A-W-N.

11:13am PT: CL: Possibly. And I think I'm screaming into the wind on the keyboard thing and expansion base because they're both not happening here. But the one-size-fits-all form factor that's dominated the iPhone landscape and now seems to be defining the iPad one can't last forever. At some point, they've got to accommodate different strokes for different folks -- all without diluting the elegant simplicity that got them to the top of the market in the first place.

SF3: I do like the idea of a portable graphical calculator, something I've always hoped the industry could produce. I could imagine any number of real-world uses for such a thing.

11:10am PT: CL: Would be nice to see a third party keyboard that hard-docks into the iPad. An expansion port of some sort might kickstart the industry. Can't tell from the pics what the device's physical expansion capabilities are, though.

SF3: Yea, but wouldn't such a device be a tacit admission that the function doesn't really fit the form? Sort of like the MacBook Air with the hard drive that you plug into it from the outside like an I.V. unit.

11:08am PT: CL: Good to see a new iWork for iPad. Reinforces that you can do more than just watch movies on this thing. I still wouldn't write War and Peace on a glass keyboard, but just having software that's more capable than a text editor is encouraging.

SF3: I think if they put that much forethought into productivity software for a slate form factor, they should have expended an extra ounce of fuel to find some gadget-y way to make the device hold still so you can type on it. Maybe netbooks are cheap, Steve, but at least they fit on your lap. I'm worried that typing on an iPad will be like playing Scrabble on a sheet of ice.

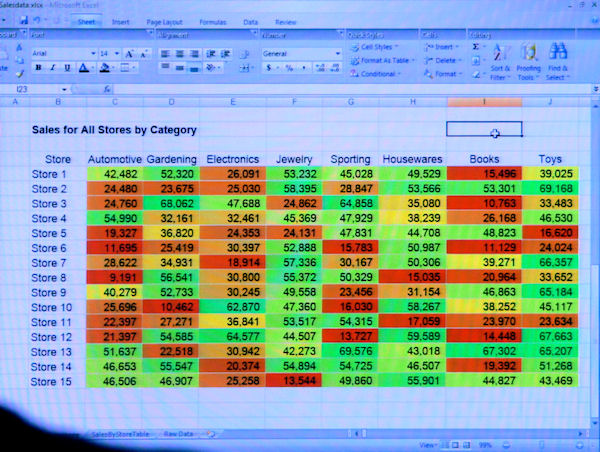

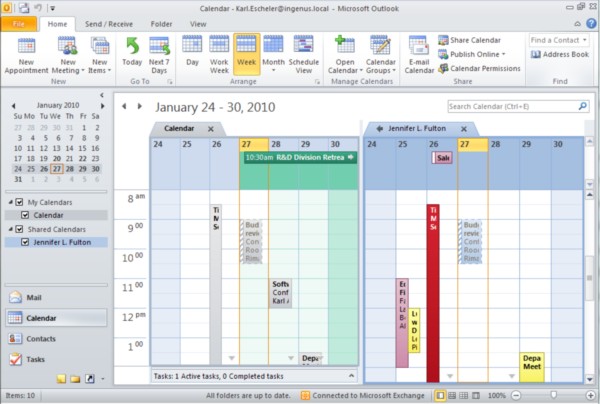

11:04am PT: CL: So iWork has a new UI. Lovely, I'm sure. But productivity apps aren't about the UI. What's underneath, folks? And will these things integrate my iPad experience with my Web experience? Wish I could tack iWork for iPad as a front end onto my Google Apps apps.

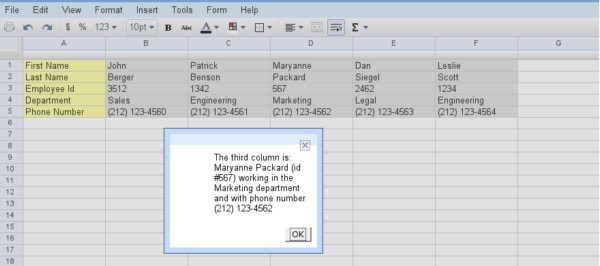

What, geeks don't use spreadsheets? :)

SF3: There was somebody I read here recently...maybe one of our readers will remind me...who pretty much said, geeks don't use spreadsheets. (What, you don't agree? Tell me about it in comments.)

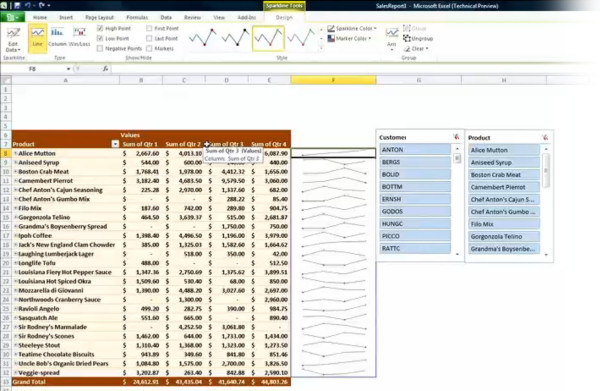

11:03am PT: SF3: Here's the part where all the geek blogs go silent. I'm literally reading one-word paragraphs now. "Spreadsheets." Like there wasn't enough caffeine left to coax them to type the letters, Y-A-W-N.

10:59am PT: CL: Hard to tell from the demo what the screen's quality is as an e-reader. Screen shots LOOK nice, but the only way to really tell is to sit with it (uncomfortably) in one's lap.

Hmm...iBook Store to buy books right on the device.

Let's get this straight: there's an iTunes store for music and video, an App Store for software, and an iBook Store for books. Is this universe getting a little confusing? Is there opportunity for consolidation into one superstore/software environment?

SF3: iBooks is exactly right on target so far -- 100% "iTunes for books."

CL: Another example of using the original iTunes model and extending it into new content. I'd prefer one big umbrella for all my content, but I'm picky that way. I can understand why Apple would focus individual "stores" for each category.

SF3: I think it has to say "books" in the brand. If it really were "iTunes for Books," that would sound too much like a Microsoft branding campaign.

CL: True. Can't rebrand what is already an entrenched brand years after it became entrenched. Too confusing for consumers.

Ah, iWork. Will productivity software become interesting again? Let's see.

10:55am PT: CL: Perhaps. But there's still an industry that sells books and newspapers, antediluvian as they may both be. And it's an industry that's begging for some sort of transition. I'm going to go way out on a limb and say this is the most mainstream move yet to fundamentally change how this stuff gets produced, delivered and paid for. Well, that last part I'm still waiting for: how are we gonna pay for all this, Steve?

Ooh, bitchslap to Amazon. iBooks intro starts with a backhanded compliment, then an ominous warning: "Amazon's done a great job of pioneering this functionality with the Kindle. We're going to stand on their shoulders and go a little further."

10:55am PT: CL: Disappointed the NYT slice of the presentation focused only on a narrow slice of the end user experience. I know they have lots of ground to cover in very little time, but the publishing angle is so much more fundamental to where this thing will go. Do we really need another gaming demo?

They'll get lynched by the Apple fanboys - I'm betting one of these will be on the ISS before long. At only 1.5 pounds, that's only $15,000 to get it into orbit.

SF3: Back to your point: If this thing is to revolutionize publishing, you'll need to produce more than The New York Times on it. Imagine if the iPod were introduced to the public with a demo of it only playing Stravinsky symphonies.

CL: Agreed. But if the NYT buys in, others will follow. You need a marquee first-adopter, and they don't get more marquee than the Times.

SF3: Problem is, is it the right marquee now, especially for the market Apple's trying to address? Jobs & Co. may think it's the reader of the New York Times Book Review. But that reader is thinking, "Books? Those are old."

CL: You're right, Scott. I think I was being a wee bit elitist back there. Gotta grab everyone's attention, and cutting edge gaming does that every time.

What's battery life when this thing's screaming through high performance games, though?

MLB.com...nice graphics! Is there a steroid-enabled mode as well?

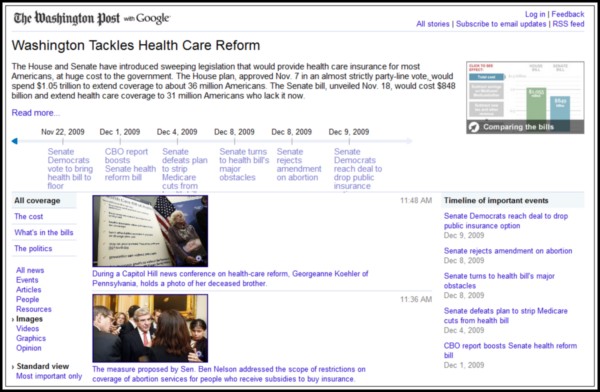

10:50am PT: NYT guy: "We think that we've captured the essence of reading a newspaper… all in a native app."

CL: This, in a nutshell, is the essence of what has ailed the publishing industry all along. They simply haven't had a viable platform to replicate the act of reading it on paper, in an electronic form. If iPad manages to pull this off -- at a price point that doesn't scare consumers off -- we may have just witnessed the landscape shift a little in the right direction.

Note to all newspaper publishers everywhere: Start calling Apple. Now. Do not wait. I'd LOVE for my local paper to bundle in one of these babies with a subscription commitment. Publication subscriptions could be the new carriers of periodical-based lit.

SF3: Back to form factor a second: Just from the photos, I've noticed SVP Scott Forstall trying to fit the device somewhere on his lap; and then there's the guy from the gaming magazine, who plays the game by grasping the device firmly in both hands and suspending it on the desk.

I'm not sure, but I think Sony may already have an edge in device comfort, as far as gaming is concerned.

CL: Agreed, Scott. But ecosystem will trump device design every time. If Apple can flesh out the underlying infrastructure, that'll buy it time to make improvements to the generation 1 hardware. iPhone has followed a similar path, and it's worked well for them so far. The hardware need not be perfect out of the gate. Just good enough. Which, in Apple's case, is never much of an issue.

SF3: Maybe, but I'm imagining, who's the first guy/lady who will give this device the "Richard Feynman" test (suspending the O-ring in a cup of ice water): Who's the guy who will come on CNBC first and say, "I can't find a place to _put_ this thing in order to use it," and demonstrate the point in a living-room setting?

10:44am PT: CL: I think Pixel Double gives Apple a quick and dirty way to leverage everything that's already out there. Longer term, I suspect developers will, to at least a certain extent, target one platform - iPad - or the other - iPhone/iPod touch - because some things simply work better on larger screens and larger form factor devices than others.

10:43am PT: CL: Question is, how much of the current iPhone/iPod touch app inventory will run unmodified on the iPad? What's the user experience like? Do these apps scale well onto the new device?

"We built the iPad to run virtually every one of these apps unmodified right out of the box. We can do that in two ways -- do it with pixel for pixel accuracy in a black box, or we can pixel-double and run them in full-screen."

"Virtually every one" is never as great as it sounds.

SF3: "Pixel Double?" That reminds me of the graphics modes on the Apple II. Remember when the //gs came out? It could run with "really great graphics," assuming you wanted to plunk down $60 more bucks for the incompatible high-res version of "Carmen Sandiego." But then it could still run the "thousands of Apple ][ programs." Albeit in a graphically defeated mode.

CL: Now for the main event: talking directly about The New York Times. NYT's Martin Nisenholtz discussing the paper's plans for iPad.

10:38am PT: CL: Good point, Scott. This also allows it to scale by starting on a net-new platform. As its popularity moves up the growth curve, it'll allow Apple to ramp its own pipeline as well. Diving into smaller form factors right now wouldn't have allowed Apple that opportunity to grow gradually.

SF3: That gets back to my broader theory: Apple doesn't _need_ to have a _stunning_ success right now in its history, to be successful. It could simply use another good enough product in its arsenal. So far, that's what I'm seeing here, "good enough." It extends the iPhone OS into an "economy size" form factor, it doesn't really create a new platform as much as extend the existing (healthy) one.

CL: Showing unmodified iPhone game on the iPad. This is crucial for Apple - leveraging the existing software base, developer community and tools means it doesn't have to start from scratch building a new marketplace.

Apple is also learning its lessons: New SDK, including iPad tools, is being released today, too. Nicely thought out.

10:34am PT: CL: Wonder what Intel has to say about Apple's choice of processor. Has Apple just hit another architecture-related fork in the road a la 68XXX-to-PowerPC, or PPC-to-Intel?

16-64 GB of storage. Can't tell if that's expandable...looking hard at the screen grabs to see if one of them's a memory card slot. The one under the power button looks suspiciously like one. Or am I just being hopeful?

SF3: I think Apple needed an opportunity to stretch its legs coming out of the cocoon, in terms of developing the heart of its own hardware. Starting too miniaturized might put it in danger, so I'm thinking the tablet form factor was necessary to give Apple a starting point for itself -- so it can work up to producing chips for its own iPhones later.

10:31am PT: SF3: Or you do like Douglas Adams, you take your towel with you wherever you go. A kind of rubber track pad. Maybe that's the iPad fashion accessory we've been looking for.

CL: Screen size is much better for watching a movie than an iPhone/iPod touch. But aspect ratio isn't HD-optimized. Welcome to an eternally letter-boxed future. And don't get me started about how to hold this thing at the right angle for a 2-hour movie.

SF3: I can't cross my legs for that long.

CL: 1.5 pounds, a half-inch thick. No doubt, it's a great piece of tech to slip into a carry-on bag. Watch it carefully during security screening, though. We now have the hottest device for thieves.

SF3: Okay, now we're talking my language about something: 1.0 GHz Apple A4 chip. Apple's chip factory goes to work at last.

CL: "iPad is powered by our own custom silicon. Our own chip. It's called the A4, and it screams." 1GHz. 16, 32, or 64GB of flash storage. "It's got the latest in wireless: 802.11n, WiFi, and Bluetooth 2.1 + EDR."

10:27am PT: SF3: I'm starting to wonder about the form factor. I'm looking at the Gizmodo photos, and I'm seeing Steve sitting easily in his chair perusing Web pages, but already I'm noticing something: It doesn't appear that there's a convenient place for a user to put this thing. With an iPhone, you have one hand holding the phone and another one typing. What do you do with a slick surface device with only simulated keys resting against your freshly washed blue jeans?

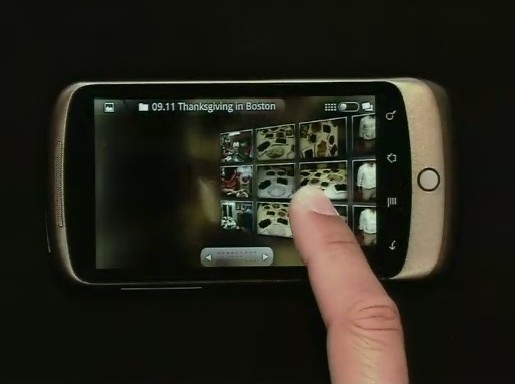

10:24am PT: CL: "If I'm on a Mac, I can get events, places and faces from iPhoto." Hmm, that rather confirms it to me: they still want you to believe every Apple product will somehow be richer if you hang it off of a Mac as well.

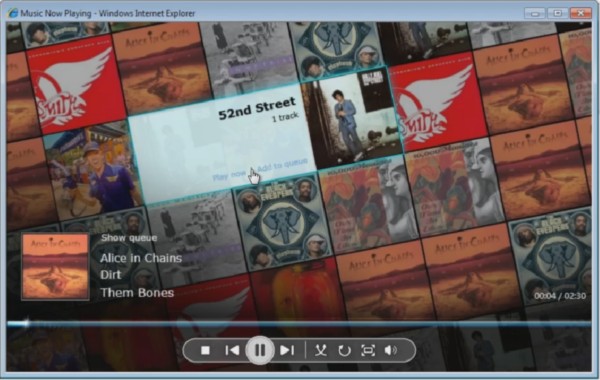

The return of album artwork. Today's music consumers forget how much fun it was to read the liner notes. Maybe there IS hope for the music industry yet.

10:21am PT: SF3: You begin to wonder what the platform extension for this will look like on an iMac.

CL: Does every Apple demo have to be laden with countless uses of the word "gorgeous"? We get it...please move on.

SF3: It's as if they'd hired Charles Nelson Reilly to write the script.

CL: ...and if Apple will offer a preferential experience on Macs - like iTunes was in the early days - or deliver consistent multiplatform capability right off the hop. I wonder if the company is losing its "drive Mac growth" ethos...or if it really even matters any more.

I think Steve would look great in the center square, Scott. But only if Florence Henderson is on with him, too.

10:18am PT: SF3: That gets back to that whole, what kind of _platform_ are they building for it, issue. The content delivery model. Smartly, Jobs is saving that part of the introduction until later in the event.

Leave it to Canadians to be erudite and considered when providing feedback online, Scott :) I think the masses still see this as a hardware play, while a small, bigger-picture-thinking minority understands what's really changed here. 8+ years after iPod, after all, most folks still think it's just a slick media player.

SF3: Notice how soon Jobs started right into the iPad -- with the iPhone introduction, he took some time, built up some expectation.

CL: I believe the speed with which he dove right into iPad suggests there's more to this. He's building to something else...and it ain't just the device. Waiting for news on partnerships, ecosystems, the lovely glue that keeps everything underneath together and vital.

10:16am PT: CL: Desktop UI looks like an interesting mix of OS X - the dock on the bottom - and iPhone/iPod touch (the app icons on the desktop itself.)

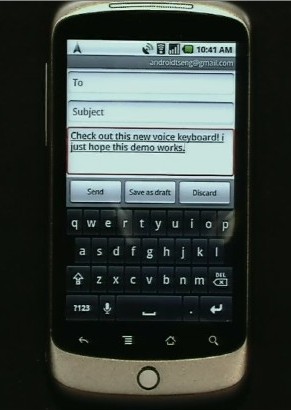

Giant on-screen keyboard. I hope the keys are bigger than my big, fat, glass-challenged fingers. For large volume text entry, this still isn't the holy grail.

Facebook was the first web page shown. New York Times the next. Waiting to see the publishing play. Don't let me down, Steve!

Keyboard looks like a digitally rendered version of the current Mac-standard 'board. Looks nice...

Steve: "More intimate than a laptop....and it's so much more capable than a smartphone." Me: Intimate? Yeesh. Some things we don't really want to know.

Using the NYT again for his web demo. I think the story here may be a little deeper than just hardware, folks. Keep going, Mr. Jobs...

10:12am PT: CL: iPad it is. Going to have to provide elocution lessons to Apple Store employees to ensure they don't confuse customers. iPad = iPod?

10:08am PT: SF3: It's extraordinary how few of the "live feeds" are actually updating, or have even started. I think CoverItLive.com, which provides some of the back end for these guys, may have actually crashed.

CL: "Live" doesn't mean the same thing that it once did. Then again, "new and improved" has never been new OR improved, so I guess nothing's really changed.

10:05am PT: The event has begun with the now-customary iPod status report. But judging from the reports I'm reading, that update is flying by at lightning speed. My guess is, expect content partners to take the stage, and Apple will need time to fit them all in.

9:58am PT: SF3: I have to say, I'm impressed with some of the questions I'm seeing on the live blog feeds with user feedback. On Canada's National Post live blog, for instance, is a fellow asking, why does there appear to be so much emphasis on the hardware aspect of this, when it's the underlying platform that will make or break this venture? The implication here being, Apple needs to extend iTunes into larger-screen media in such a way that it has some reach to hardware beyond just this iTablet/iSlate (following the iTunes for Windows philosophy).

Copyright Betanews, Inc. 2010

![A recent mockup of the likely default appearance of Firefox 4.0. [Courtesy Mozilla]](http://images.betanews.com/media/4966.jpg)

"We all want to have control over our personal information. This is why the EU has rules on the protection of personal data," Reding said. "Our rights are clear and must be respected. Whenever your personal information is collected, whenever it is processed, and whenever it is used, these are the high standards we must live up to...We are facing common security challenges from international crime and terrorism. We have been confronted with devastating attacks in Europe and in the United States. We are working hard with the US to confront these challenges."

"We all want to have control over our personal information. This is why the EU has rules on the protection of personal data," Reding said. "Our rights are clear and must be respected. Whenever your personal information is collected, whenever it is processed, and whenever it is used, these are the high standards we must live up to...We are facing common security challenges from international crime and terrorism. We have been confronted with devastating attacks in Europe and in the United States. We are working hard with the US to confront these challenges."

When a massive Microsoft corporate reorganization on September 20, 2005 vaulted Robbie Bach into the role of President of the Entertainment & Devices division, the explanation at the time was to enable the company to focus on devices where the goal was to promote devices, and on platforms where the goal was to promote devices. Xbox was a device, whatever MP3 player the company would decide to produce was a device, and obviously cell phones are devices should Microsoft ever choose to enter that business in earnest.

When a massive Microsoft corporate reorganization on September 20, 2005 vaulted Robbie Bach into the role of President of the Entertainment & Devices division, the explanation at the time was to enable the company to focus on devices where the goal was to promote devices, and on platforms where the goal was to promote devices. Xbox was a device, whatever MP3 player the company would decide to produce was a device, and obviously cell phones are devices should Microsoft ever choose to enter that business in earnest. It's a development that indicates that Microsoft realizes the importance of these assets as platforms, rather than as mere devices -- a realization made feasible by the work of Bach and Allard. And yet off they go to parts unknown.

It's a development that indicates that Microsoft realizes the importance of these assets as platforms, rather than as mere devices -- a realization made feasible by the work of Bach and Allard. And yet off they go to parts unknown.

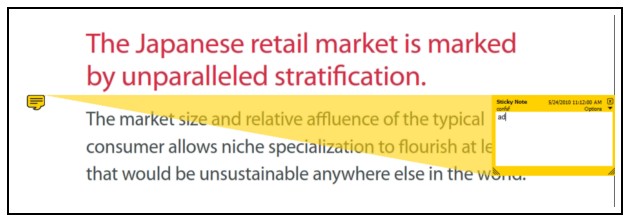

The latest hybrid notebook storage device announced today by Seagate Technology, the

The latest hybrid notebook storage device announced today by Seagate Technology, the ![Seagate's cross-section depiction of its new Momentus XT hybrid SSD/HDD. [Courtesy Seagate]](http://images.betanews.com/media/5024.jpg) Fast-forward to today, when Seagate product marketing manager Joni Clark triumphantly announces to Betanews that Momentus XT will not be bound to ReadyDrive at all.

Fast-forward to today, when Seagate product marketing manager Joni Clark triumphantly announces to Betanews that Momentus XT will not be bound to ReadyDrive at all.![A slide from a Seagate presentation revealing the benchmark test results for 'real-world' applications with its Momentus XT hybrid SSD/HDD drive. [Courtesy Seagate]](http://images.betanews.com/media/5025.jpg)

"Within a Digital Single Market, citizens should be able to enjoy commercial services and cultural entertainment across borders. But EU online markets are still separated by barriers which hamper access to pan-European telecoms services, digital services and content," Comm. Kroes told a press conference in Brussels yesterday. "Today there are four times as many music downloads in the US as in the EU because of the lack of legal offers and fragmented markets. The Commission therefore intends to open up access to legal online content by simplifying copyright clearance, management and cross-border licensing. Other actions include making electronic payments and invoicing easier and simplifying online dispute resolution."

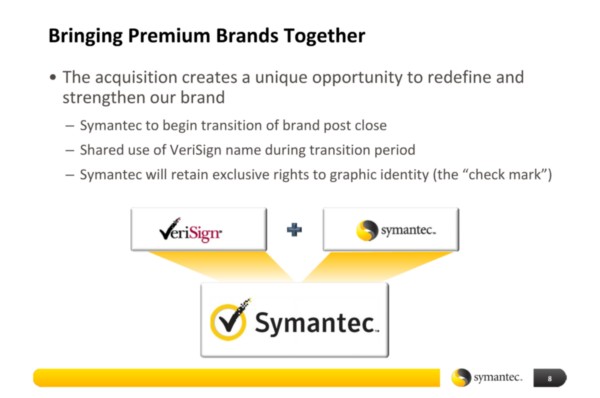

"Within a Digital Single Market, citizens should be able to enjoy commercial services and cultural entertainment across borders. But EU online markets are still separated by barriers which hamper access to pan-European telecoms services, digital services and content," Comm. Kroes told a press conference in Brussels yesterday. "Today there are four times as many music downloads in the US as in the EU because of the lack of legal offers and fragmented markets. The Commission therefore intends to open up access to legal online content by simplifying copyright clearance, management and cross-border licensing. Other actions include making electronic payments and invoicing easier and simplifying online dispute resolution." On the surface, it might sound like one of those amateurish conclusions a blogger might reach after having just read the press release: Symantec, a software company now mainly known for security products, acquires some assets from a non-competitor in order to get that company's logo. But in the deal between Symantec and VeriSign announced yesterday, there is no mistaking the fact that the antivirus products maker acquired, among other things, the single asset that just last week VeriSign argued was the ticket to its own future stability: quite literally, its own logo.

On the surface, it might sound like one of those amateurish conclusions a blogger might reach after having just read the press release: Symantec, a software company now mainly known for security products, acquires some assets from a non-competitor in order to get that company's logo. But in the deal between Symantec and VeriSign announced yesterday, there is no mistaking the fact that the antivirus products maker acquired, among other things, the single asset that just last week VeriSign argued was the ticket to its own future stability: quite literally, its own logo.

In a decision handed down in US District Court in New York this afternoon, representatives of the recording industry won summary judgment against P2P file-sharing software maker LimeWire, in a copyright infringement suit

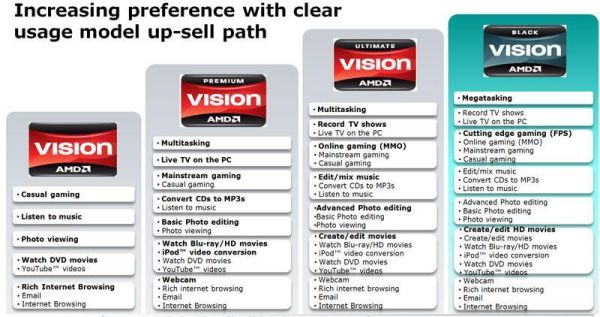

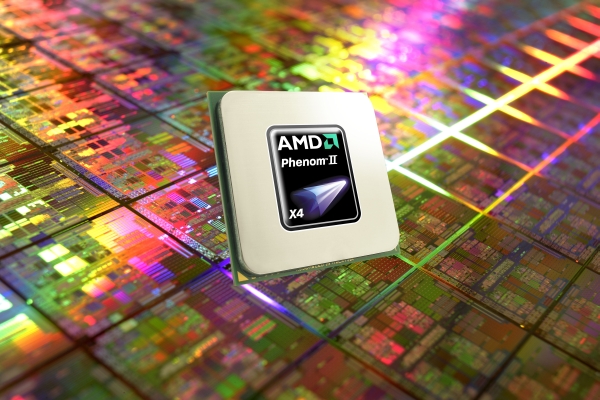

Ever since it stopped billing itself as a producer of "replacements" for Intel CPUs, AMD has struggled with the platform question: the need for OEMs to produce PCs based on pre-determined patterns. Manufacturers can achieve price breaks when they buy parts in bulk, and platforms can help them do that; likewise, they can reap even more benefits down the road from selling popular platforms to the public.

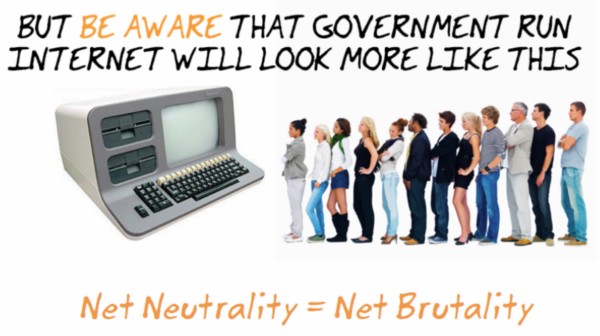

"The Commission shall apply and enforce any regulation governing the rates, terms, conditions, provisioning, or use of an information service (including any transmission component of an information service whether or not the transmission component is offered for a fee directly to the public or to such class of users as to be effectively available directly to the public regardless of the facilities used) or an Internet access service on a nondiscriminatory basis between and among broadband network providers, service providers, application providers, and content providers."

"The Commission shall apply and enforce any regulation governing the rates, terms, conditions, provisioning, or use of an information service (including any transmission component of an information service whether or not the transmission component is offered for a fee directly to the public or to such class of users as to be effectively available directly to the public regardless of the facilities used) or an Internet access service on a nondiscriminatory basis between and among broadband network providers, service providers, application providers, and content providers."

![A concept for the 'app-tab' functionality to be built into Firefox 4. [Courtesy Mozilla]](http://images.betanews.com/media/4965.jpg)

![Permissions and limitations can be set on a per-site basis in Firefox 4. [Courtesy Mozilla]](http://images.betanews.com/media/4967.jpg)

With Congress' dance card already overflowing with major social and policy reforms, including in the financial sector, the likelihood that it could pass a major reform to the Telecommunications Act for Internet regulation during the Obama Administration (however long it lasts) is quite low. Faced with a pair of no-win scenarios, the FCC last week opted to propose a "Third Way" for broadband regulation that could at least get its foot back in the door -- a way that literally asks judges and attorneys-general to substitute "telephone" for "broadband" in various clauses of existing law, except for those sections where doing so wouldn't make any sense.

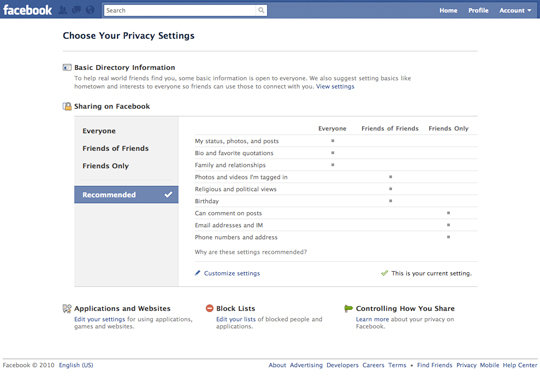

In a signal that Facebook is taking the messages of last week seriously -- messages that included a privacy complaint filed last week with the US Federal Trade Commission by the Electronic Privacy Information Center (EPIC) (

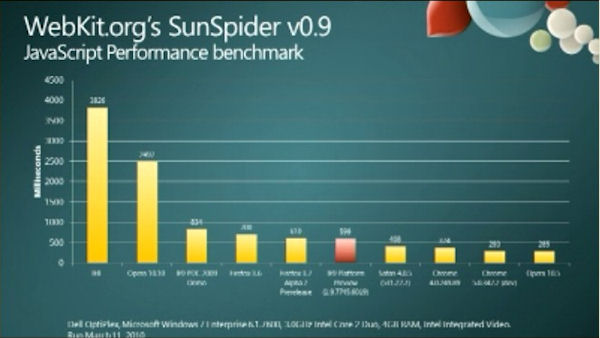

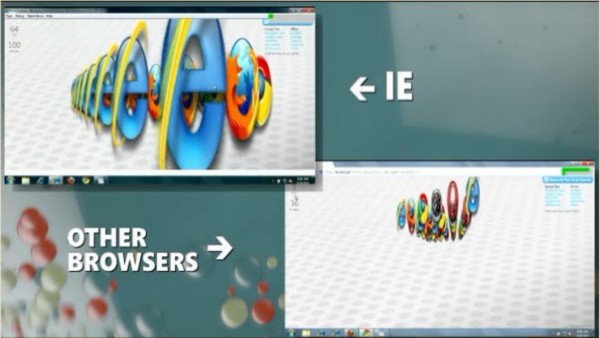

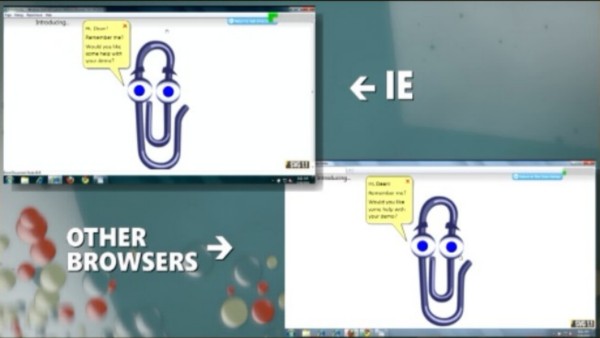

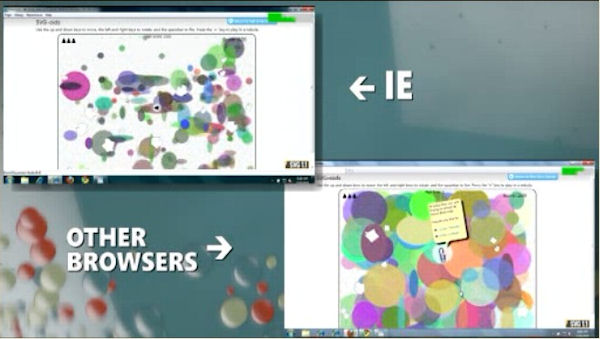

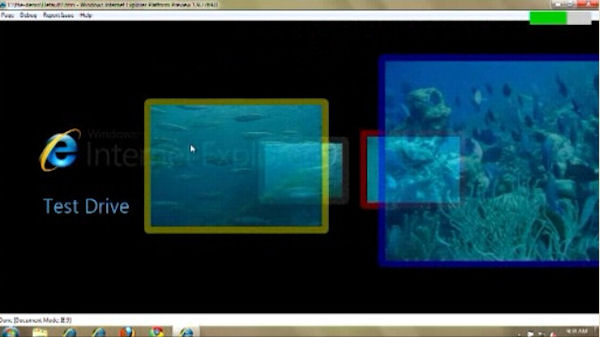

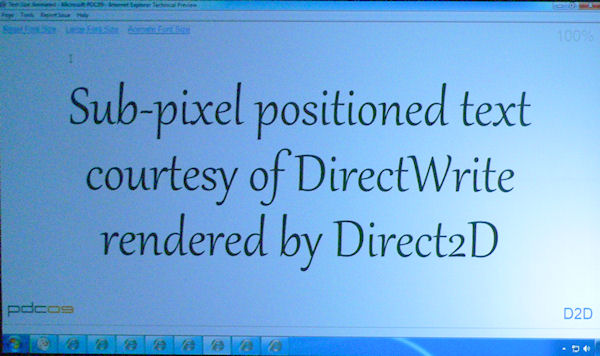

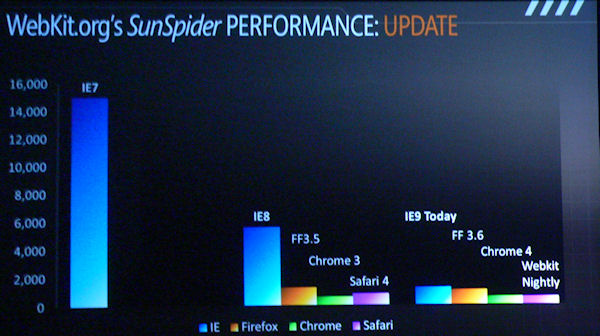

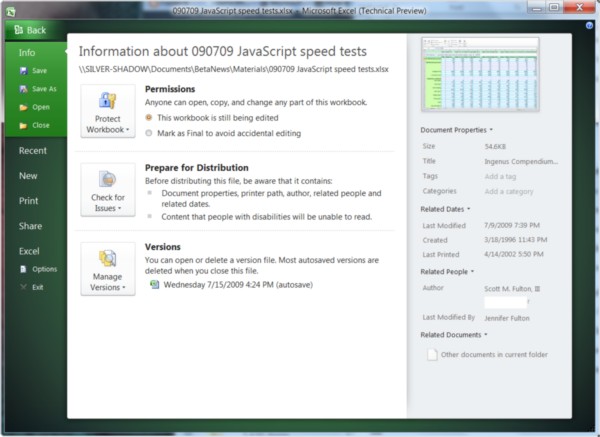

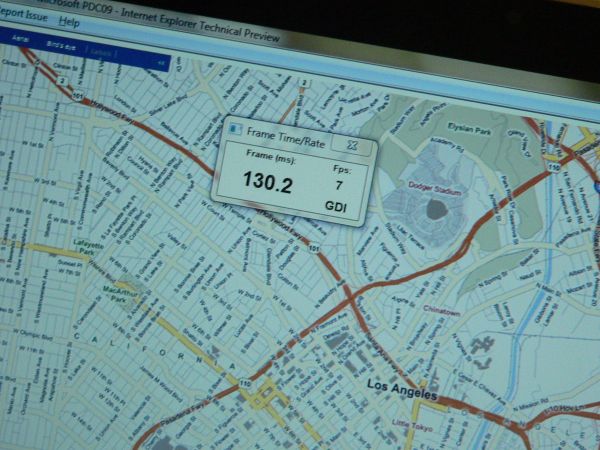

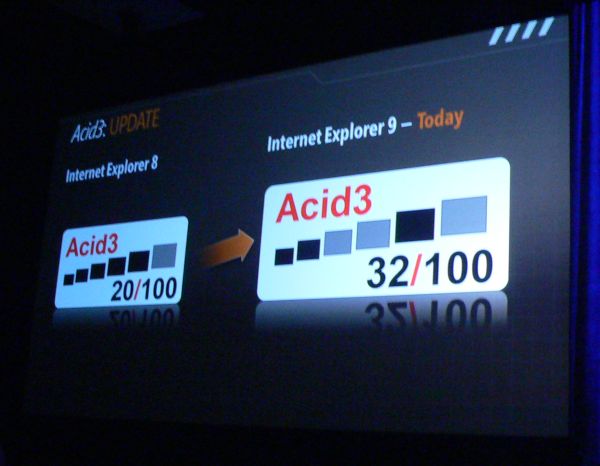

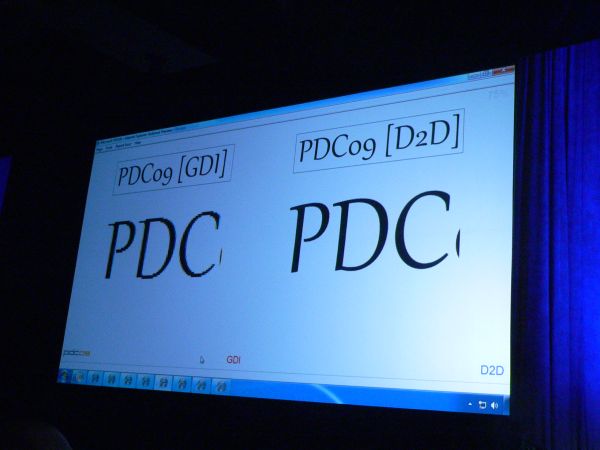

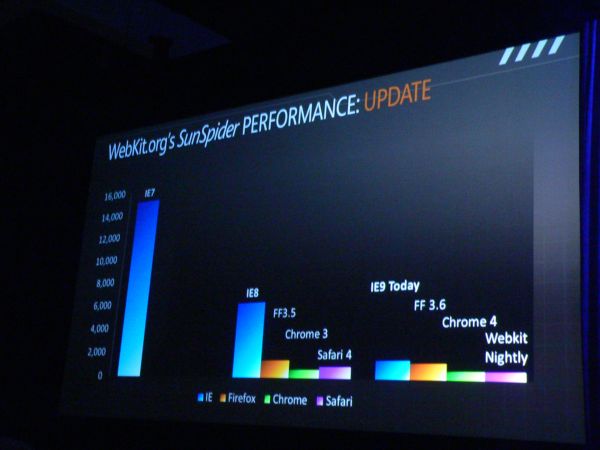

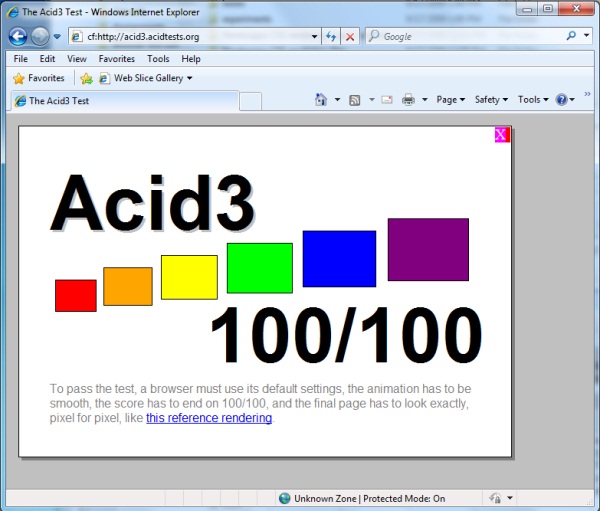

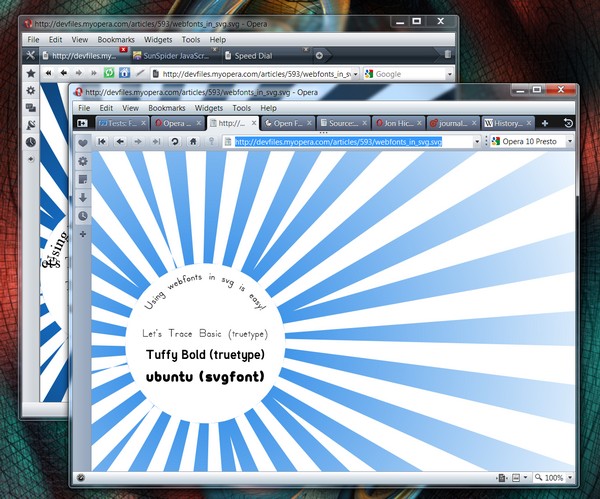

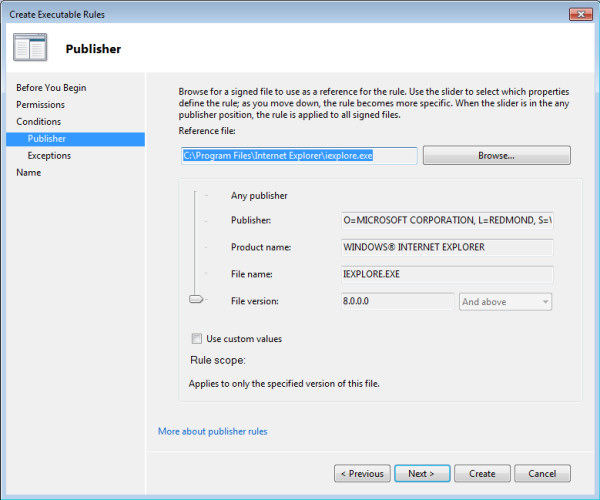

This week, Microsoft continued its public demonstration that it's working hard to improve the underlying Internet Explorer platform, with a release on Wednesday of the second preview of just the platform of the next IE9 -- not a fully functional Web browser, but a demonstration of where the rendering engine is going. Again, it's just a minimal front end on top of a rendering engine, and its main purpose is to show off the HTML 5, SVG, and JavaScript functionality pointed to on Microsoft's IE9 test site.

Since 1996, the Federal Communications Commission has accepted, and has positively argued even up until two months ago, that broadband Internet service is an information service under US law -- an enhancement to telecommunications service that is regulated under Title I of the Communications Act. But when the FCC made its strongest effort to censure a carrier for net neutrality violations -- its fine of Comcast for throttling BitTorrent -- the DC Circuit Court said that wouldn't fly under Title I.

"A covered entity may not sell, share, or otherwise disclose covered information to an unaffiliated party without first obtaining the express affirmative consent of the individual to whom the covered information relates," the draft reads. "A covered entity that has obtained express affirmative consent from an individual must provide the individual with the opportunity, without charge, to withdraw such consent at any time thereafter."

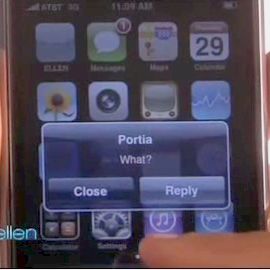

"A covered entity may not sell, share, or otherwise disclose covered information to an unaffiliated party without first obtaining the express affirmative consent of the individual to whom the covered information relates," the draft reads. "A covered entity that has obtained express affirmative consent from an individual must provide the individual with the opportunity, without charge, to withdraw such consent at any time thereafter." Technically, comedians and comedy writers cannot be held liable for certain copyright violations, especially if their parodies are presented in the context of a comedy show. But that doesn't mean major sponsors can't pull strings other than legal ones; and Tuesday morning, comedienne Ellen DeGeneres found herself apologizing -- in her own self-deprecating way, of course -- for a parody of an iPhone commercial that appeared on Monday's show.

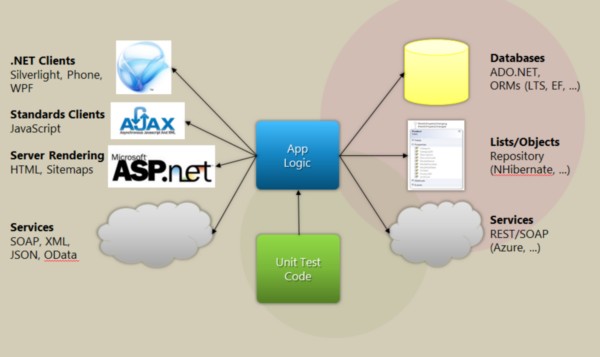

Technically, comedians and comedy writers cannot be held liable for certain copyright violations, especially if their parodies are presented in the context of a comedy show. But that doesn't mean major sponsors can't pull strings other than legal ones; and Tuesday morning, comedienne Ellen DeGeneres found herself apologizing -- in her own self-deprecating way, of course -- for a parody of an iPhone commercial that appeared on Monday's show.

Case in point: AJAX, which might never have happened, Hammond believes, had Microsoft not implemented the object identifier XMLHttpRequest in Internet Explorer 5 in 1999. "That opened up the whole door for this rich Internet application movement with AJAX and JavaScript," Hammond reminded Betanews in a recent interview. "Over time, basically, every other browser adopted that, even though it was never really part of any kind of standards-setting process or specification, and it created a lot of value."

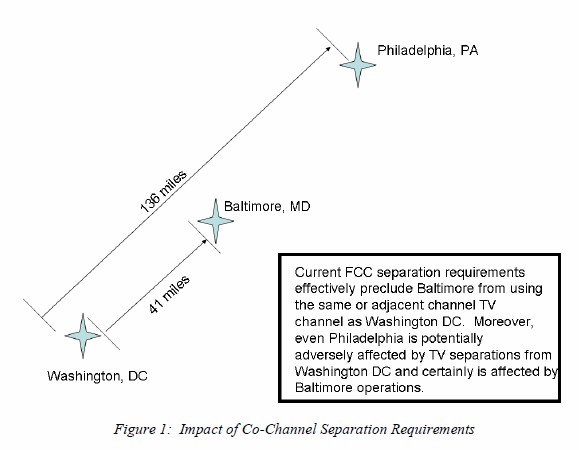

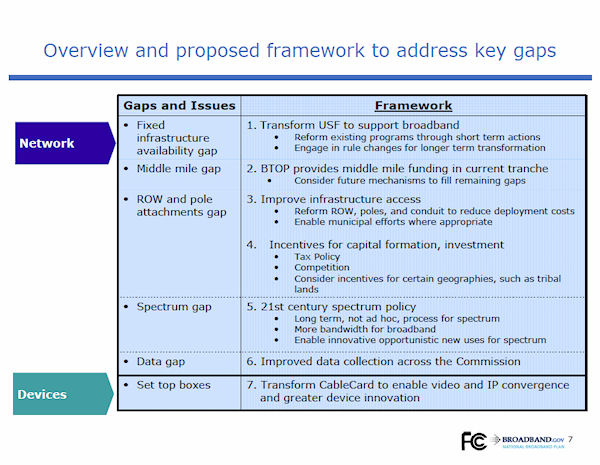

There are a handful of issues of contention that broadcasters (who transmit content over the public airwaves) have with the Federal Communications Commission's Broadband Plan. One such outstanding dispute concerns the FCC's proposed reallocation of unused digital spectrum from broadcast to broadband purposes -- a way to get at least some of the estimated 180 MHz of spectrum wireless operators say they need, without another complete re-auction.

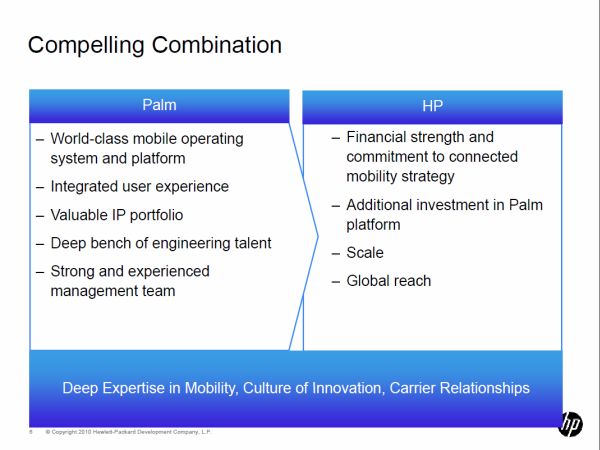

But waving in front of HP's obvious red flags were several more obvious white ones:

Indeed, last week, Rubin was talking with us about the synergies that could

Perhaps the headline here should be, "HTC doesn't acquire Palm." In any event, our question from last week, "What if nobody wants Palm?" has just been rendered moot: Hewlett-Packard has just announced it has agreed to acquire the assets of Palm Inc. for $5.70 per share, or approximately $1.2 billion.

Amid news yesterday of a discovery by an independent programmer of what appeared to be another door left open for Web apps to access Facebook users' personal data, Sen. Chuck Schumer (D - N.Y.) called upon the Federal Trade Commission to take the next step in forming the equivalent of a US "privacy commissioner."

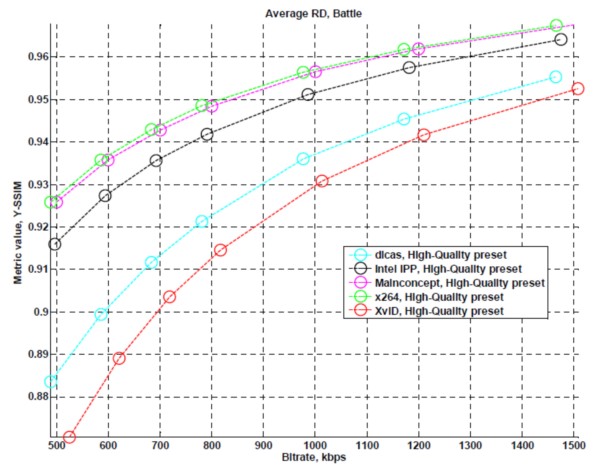

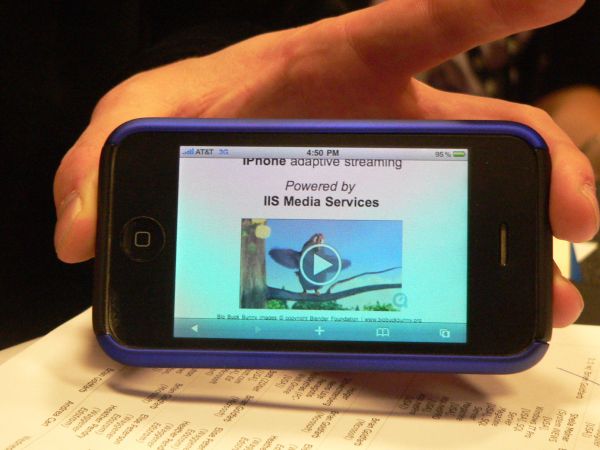

One of Silverlight video's biggest advantages to date has been the server's ability to tweak the bitrate of video playback as it's being played back, and as the bandwidth of the connection varies. It's the

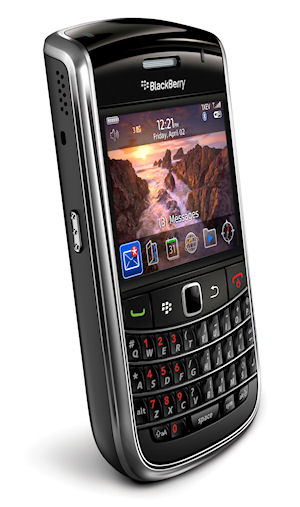

The Pearl's appeal to date has been its form factor -- not wide like a sandwich loaf slice, but thinner than a bar of soap. The tradeoff has been the keyboard: There's not enough room for the traditional BlackBerry QWERTY, so the new Pearl 9100 features a 20-key layout that, for some, takes some getting used to. An optional Pearl 9105 model includes a more traditional 14-key layout.

Yet the 9650 isn't decked out like the 9700, or even like the new Pearl -- it's not really a 3G phone. When RIM says it "supports" 3G, it's referring to EV-DO, which is a bit more like "2.5G." It's a CDMA phone, and will premiere in the US on Sprint.

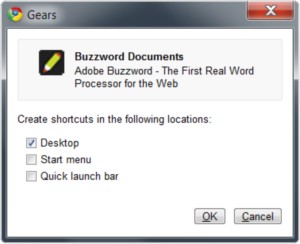

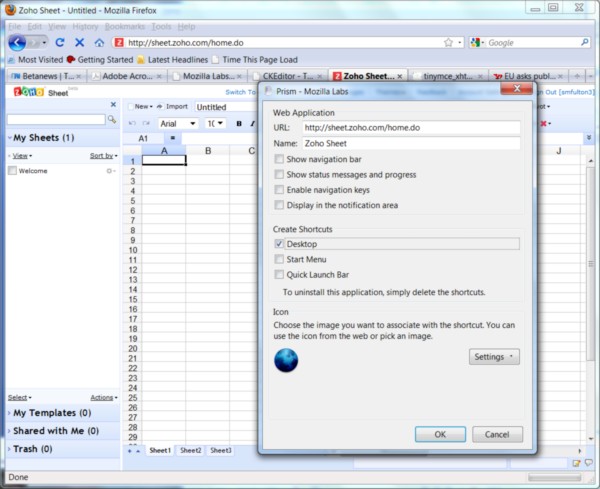

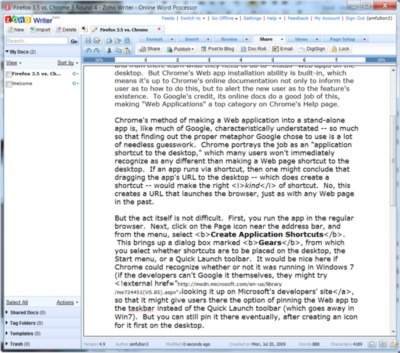

While bitterness continues over the implications of Sections 3.3.1 through 3.3.3 of Apple's recently modified Developers' Agreement (

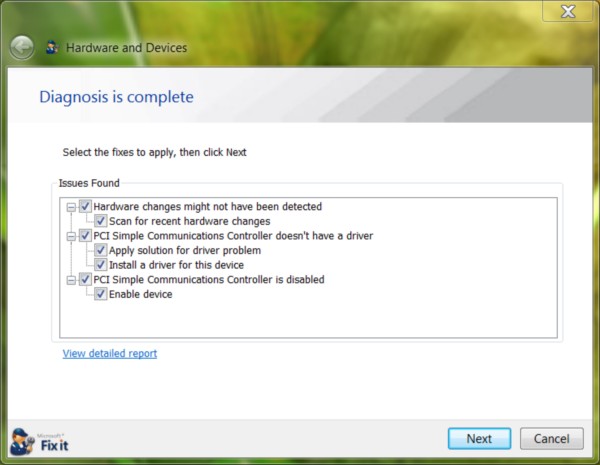

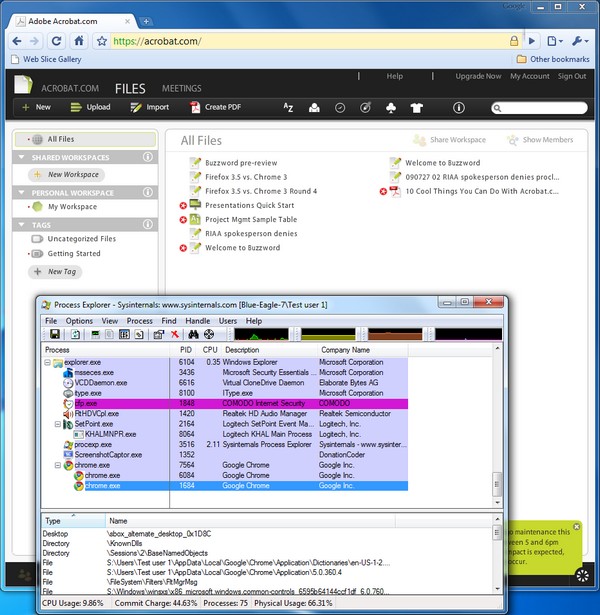

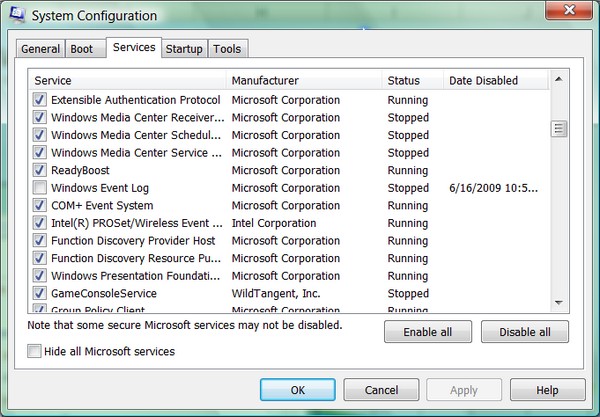

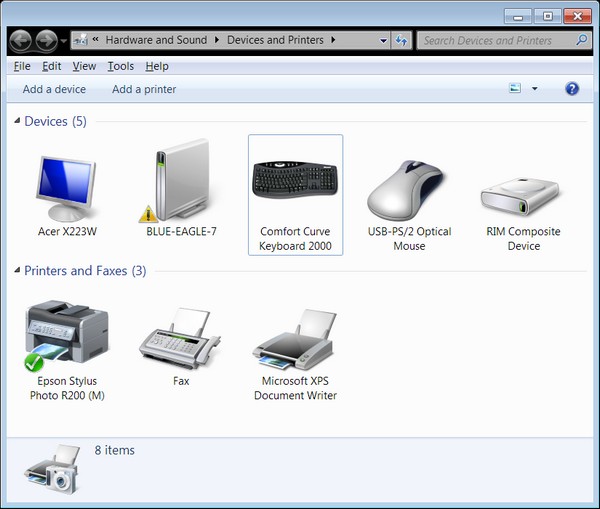

Many instances of malware on Windows-based systems masquerade as system services -- the various independent processes that respond to requests from both the operating system and applications with functions that users typically need. Network connectivity and printing are among the more common Windows services; and if you've ever perused the processes list of Task Manager (or, better yet,

"Today, the Web exists mostly as a series of unstructured links between pages. And this has been a powerful model, but it's really just the start," said Zuckerberg. "The Open Graph puts people at the center of the Web. It means that the Web can become a set of personally and semantically meaningful connections between people and things. I am friends with you. I am attending this event. I like this band. These connections aren't just happening on Facebook, they're happening all over the Web. And today, with the Open Graph, we're going to bring all of these together."

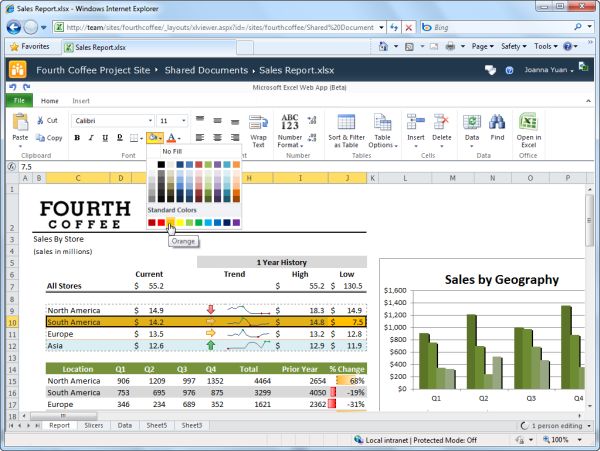

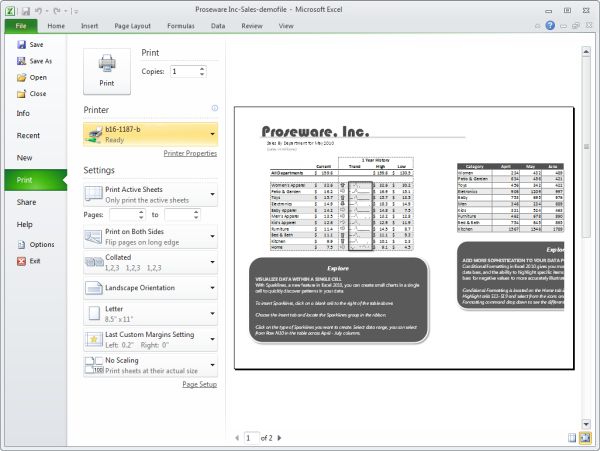

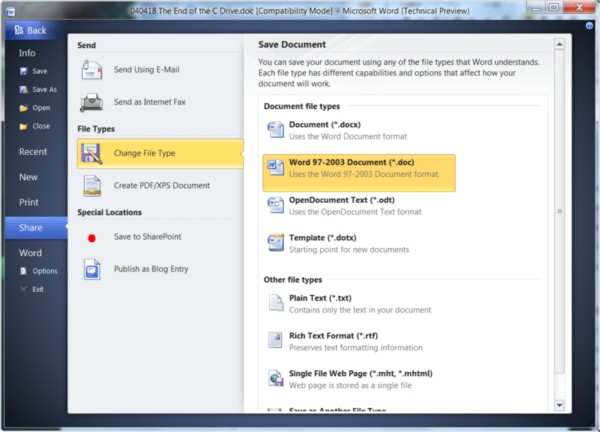

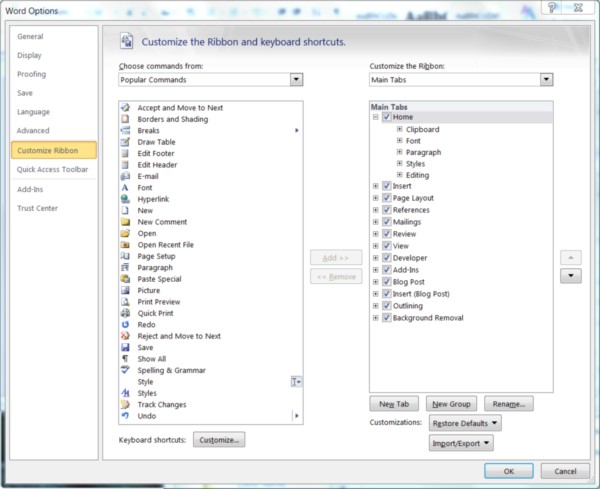

The first volume licensing arrangements for Microsoft Office 2010 will be made through company partners on May 1, almost two weeks earlier than expected. The news comes today from the company's Office Engineering team, which also released the final build of all versions of the company's principal applications suite.

MARKHAM ERICKSON, OIC: I think George is right with point #1, that the DC Circuit didn't obliterate the concept of ancillary authority; that legal theory still exists. The court just further described what they think ancillary authority means. It means that anything you're doing under Title I has to be tied to a specific statutory mandate under Titles II, III, or VI of the Communications Act; and that what the FCC was doing in the Comcast decision -- relying primarily on Section 706 and 230 of the Communications Act -- neither of those sections provided a statutory mandate, and they were mere policy statements rather than statutory mandates.

![Adobe Flash Catalyst converts a multi-layered graphic from Photoshop into a workable Web app front panel. [Screenshot courtesy Adobe]](http://images.betanews.com/media/4833.jpg)

![A sophisticated order management system appears within a Web browser framework (IE8) using Silverlight 4 and WCF RIA Services. [Screenshot courtesy Microsoft.]](http://images.betanews.com/media/4834.jpg)

A long-planned hearing on Capitol Hill to discuss the Federal Communications Commission's Broadband Plan took on new meaning yesterday, a week after

What saved Intel's neck during the worst part of the last economic downturn was the Atom processor, the heart of netbooks that started selling well as consumers' budgets tightened. Now that the 2008-09 dip is over, and even businesses' budget belts are loosening, the company's attention returns to the server side of the equation.

In the history of anything whatsoever, timing is rarely, if ever, coincidental. More often these days, however, the strategy behind it looks confusing. Just days before it's scheduled to hold its developers conference in San Francisco (tomorrow and Thursday), Twitter revealed that it is in the process of either acquiring or building applications that will compete directly with the Twitter clients these developers will be taught how to build.

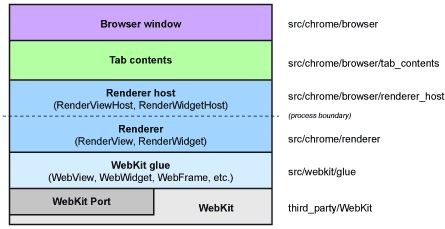

"Notice that there is now a process boundary, and it sits below the API boundary," reads

"Notice that there is now a process boundary, and it sits below the API boundary," reads  In a pair of blog posts since

In a pair of blog posts since

It was perhaps one of the most drawn-out, painful launches in Intel's long history: the introduction last February of Tukwila, the latest generation of its Itanium 64-bit processor architecture. Not everyone in the Itanium Solutions Alliance hung on for the five-year ride, with Unisys having been its most prominent drop-out last year, citing competitor HP's dominance in the field. Microsoft held on for the entire stretch; but last week, the company announced it would not lend its support to whatever the generation after Tukwila might become.

"At the national level, establishing a public performance right in sound recordings and eliminating the exemption for terrestrial broadcasters follows principles of US copyright law," wrote Kerry, the brother of the Senate Foreign Relations Committee Chairman and former presidential candidate. "In the words of the Supreme Court, 'The encouragement of individual effort by personal gain is the best way to advance public welfare through the talents of authors and inventors...' Consistent with this historic rationale for copyright, providing fair compensation to America's performers and record companies through a broad public performance right in sound recordings is a matter of fundamental fairness to performers. It would also provide a level playing field for all broadcasters to compete in the current environment of rapid technological change, including the Internet, satellite, and terrestrial broadcasters. In today's digital music marketplace, where US performers and record labels are facing both unprecedented challenges and opportunities, the Department believes that providing such incentives for America's performing artists and recording companies is more important than ever."

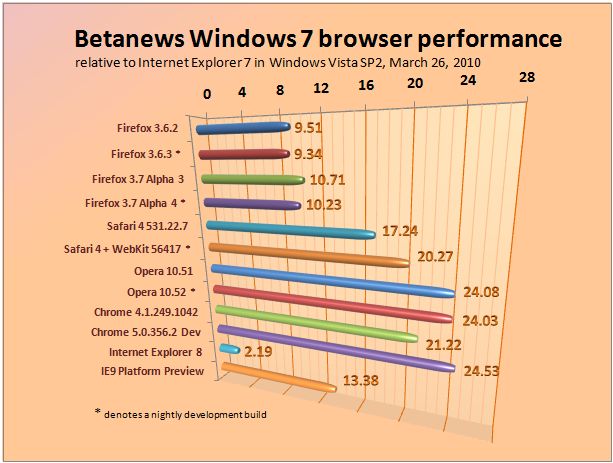

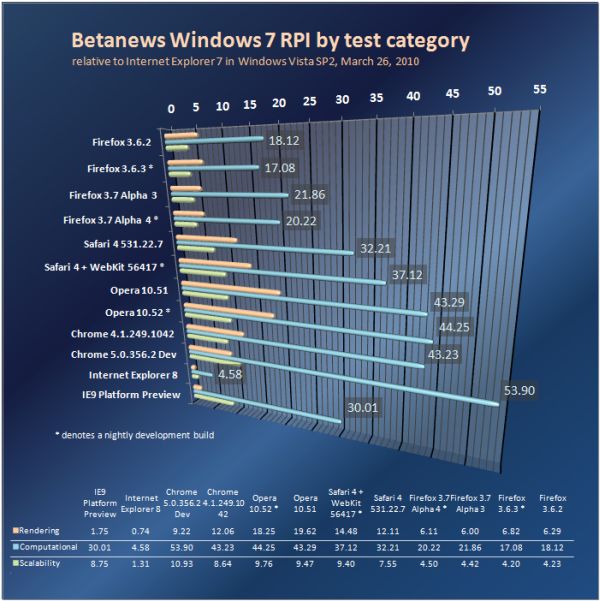

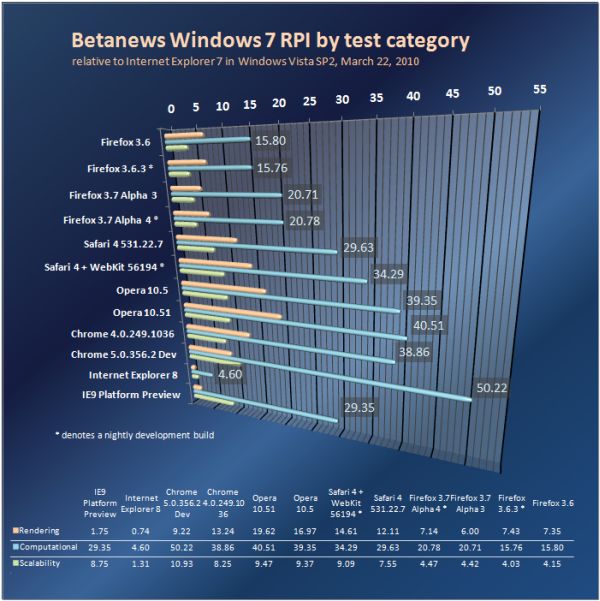

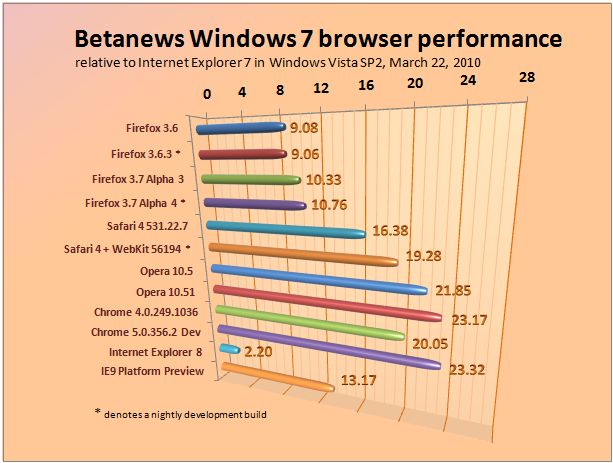

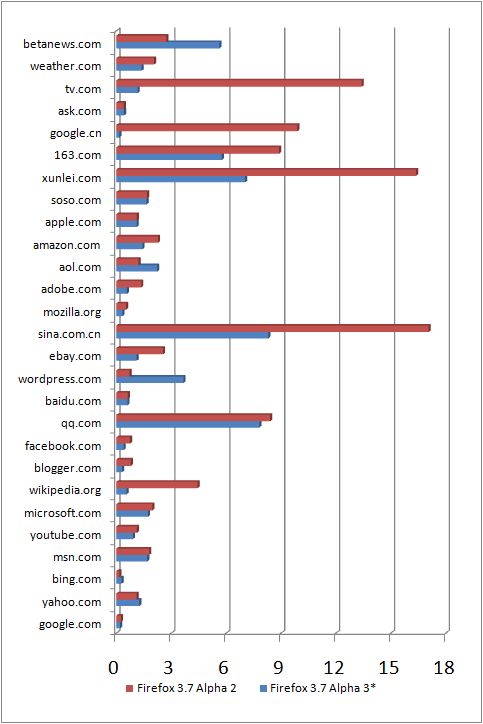

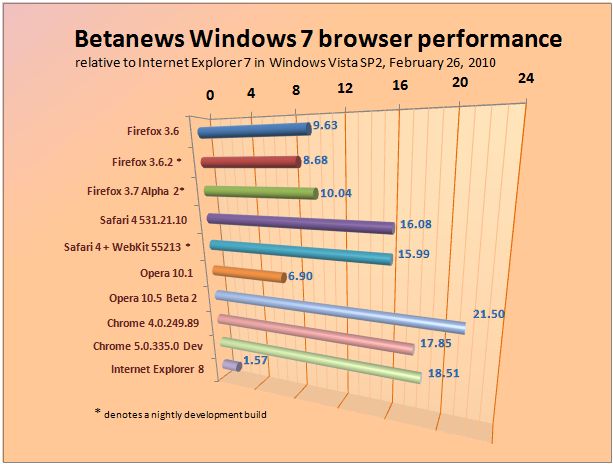

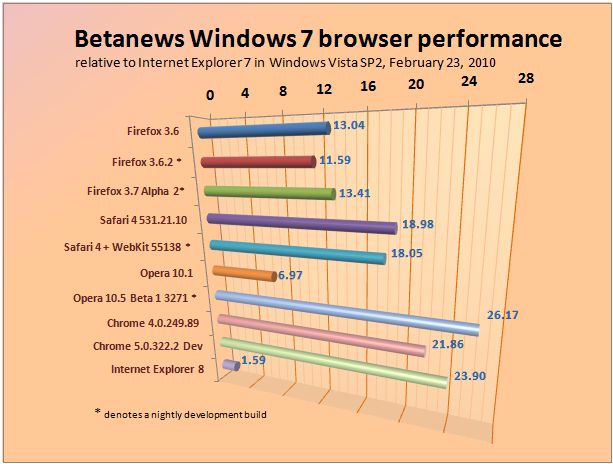

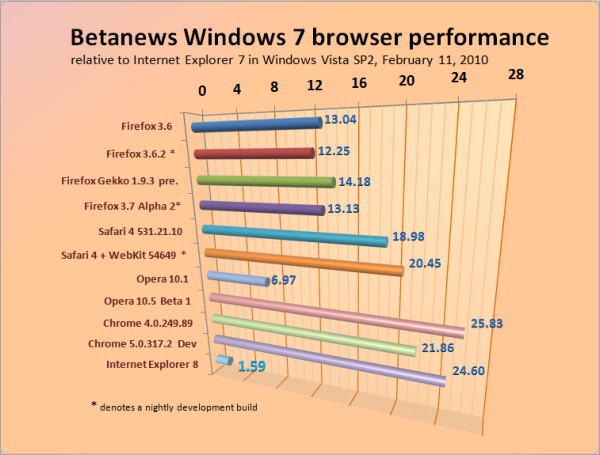

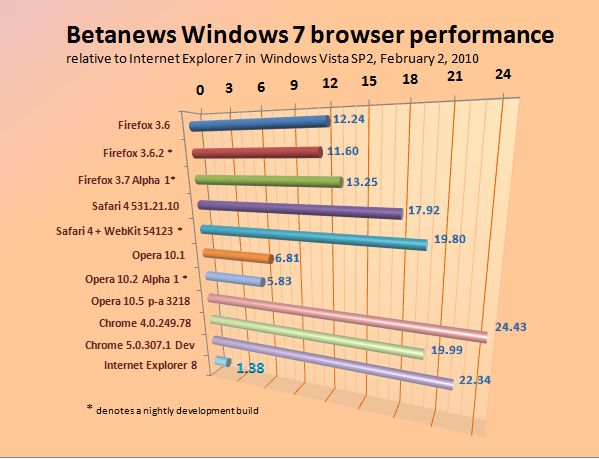

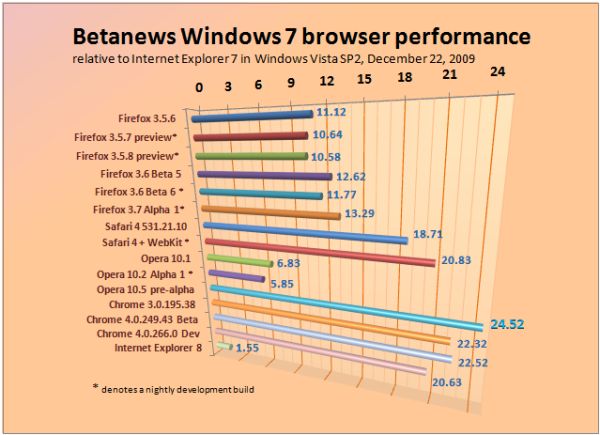

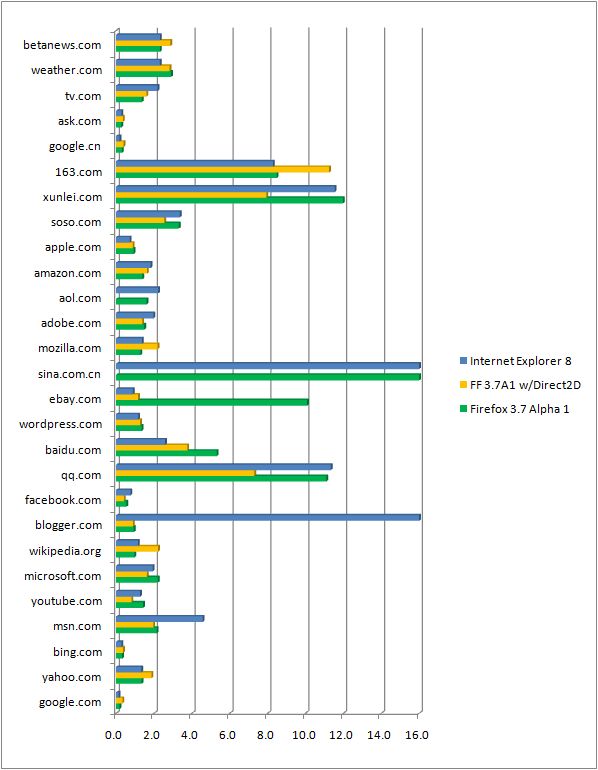

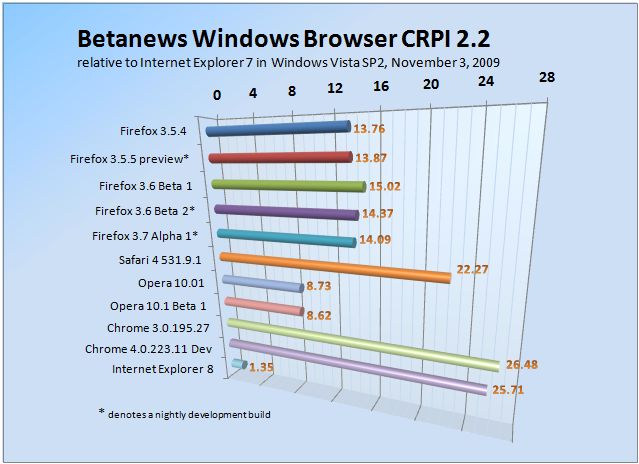

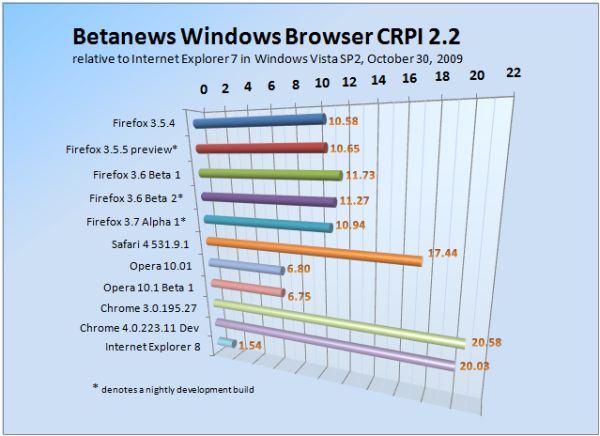

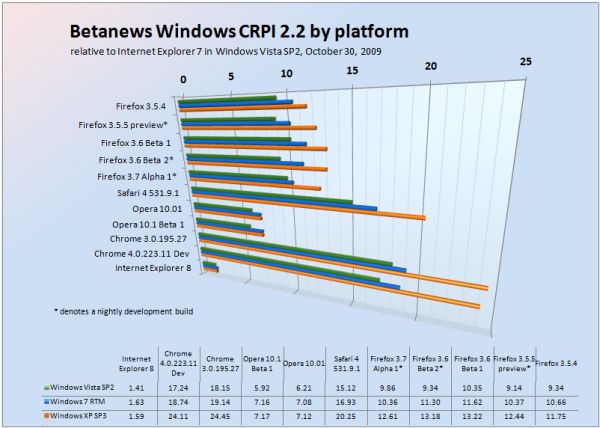

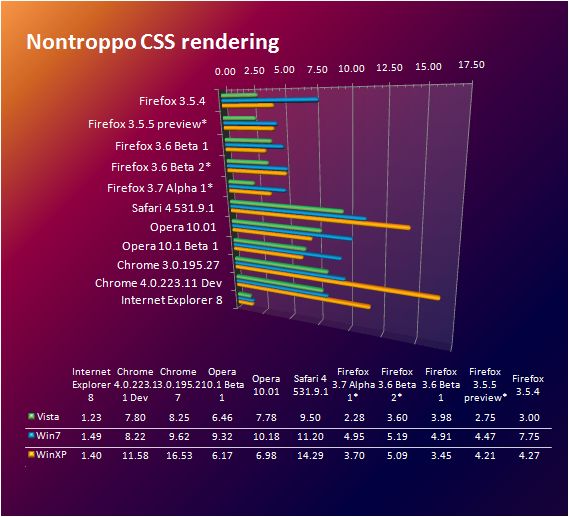

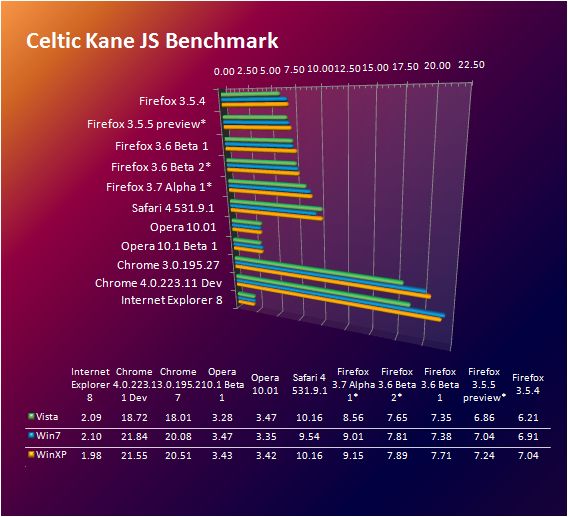

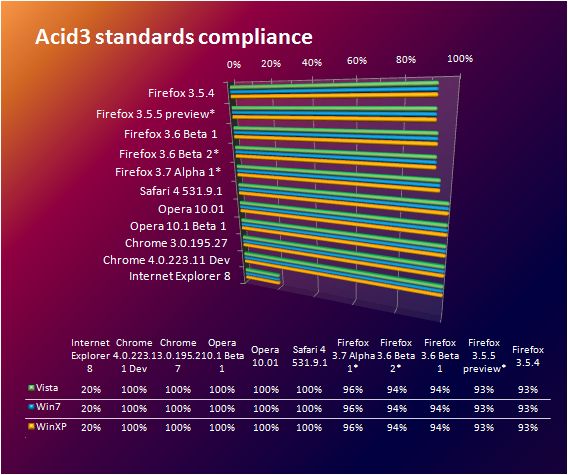

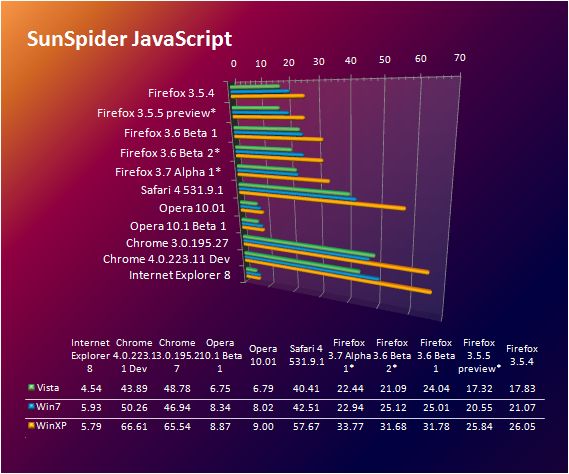

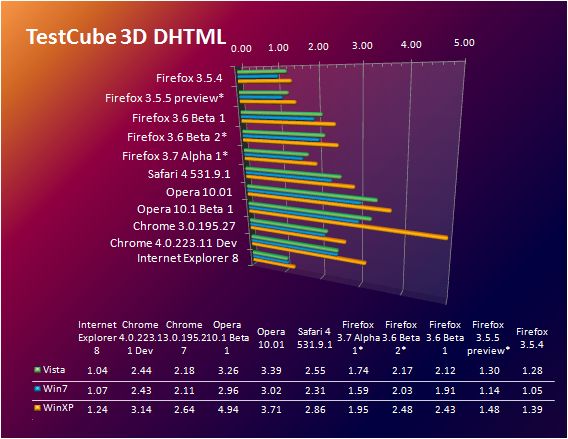

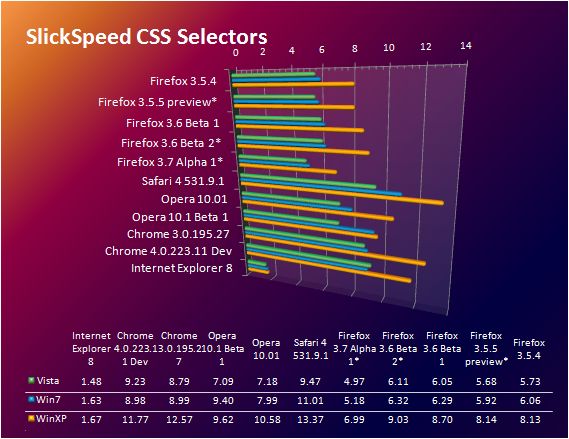

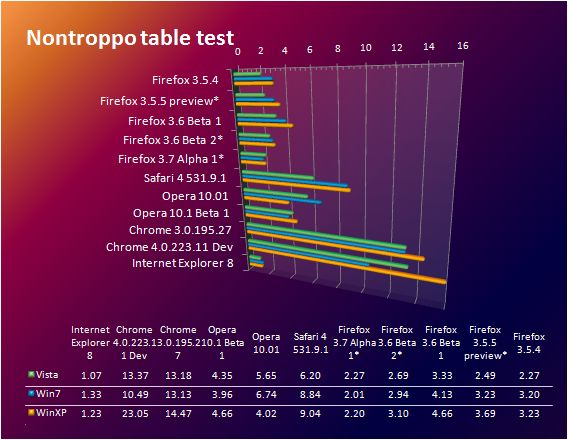

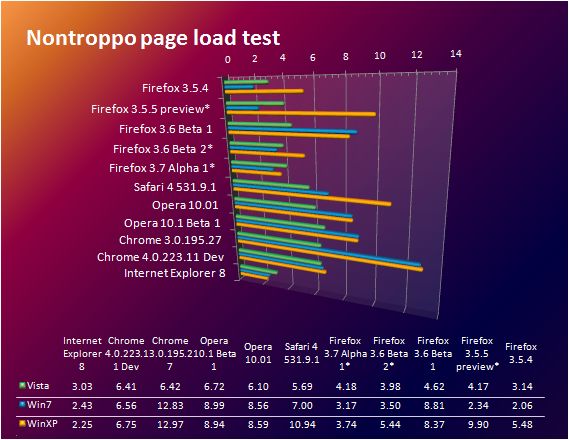

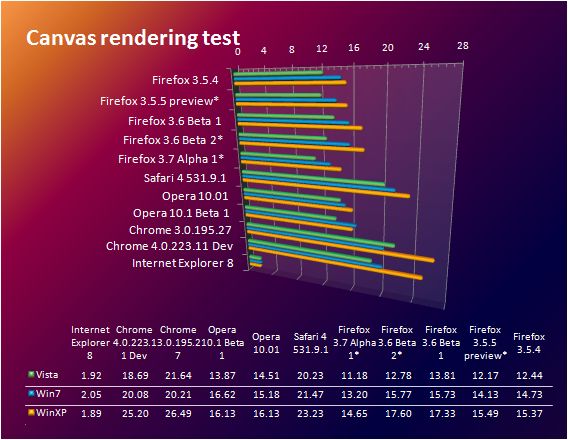

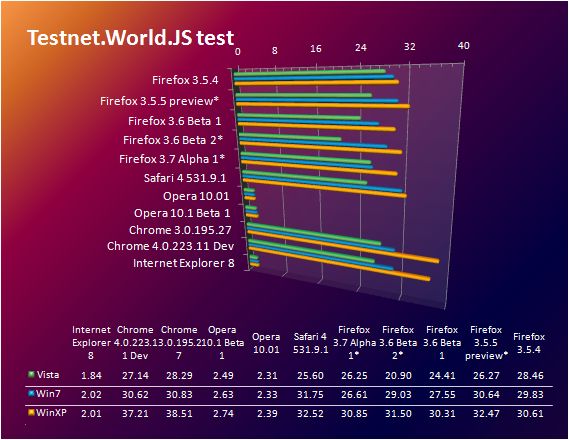

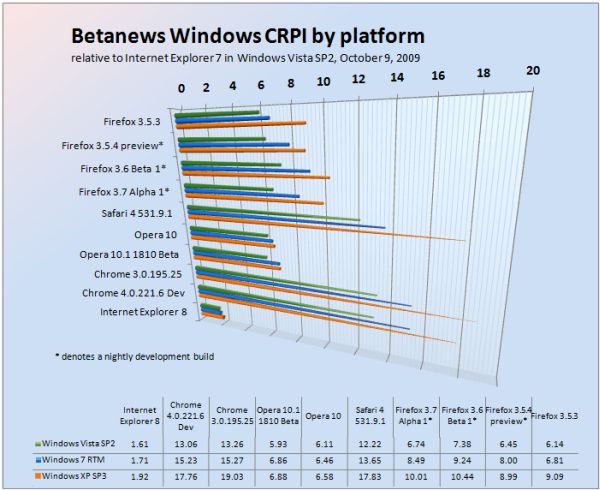

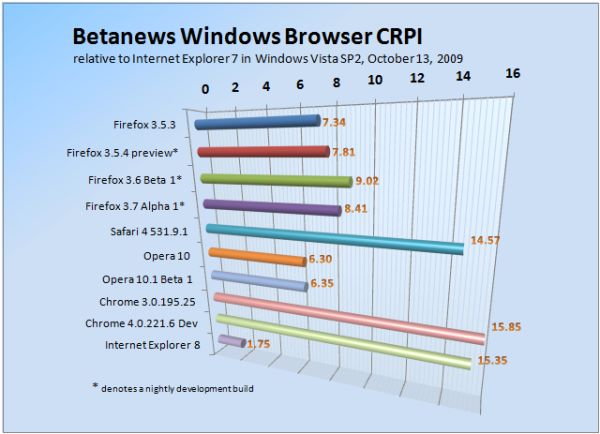

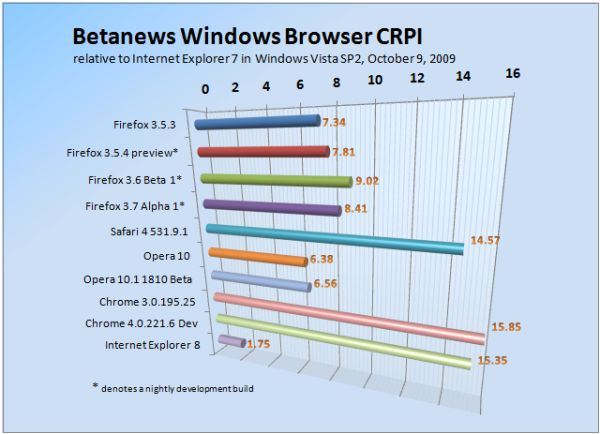

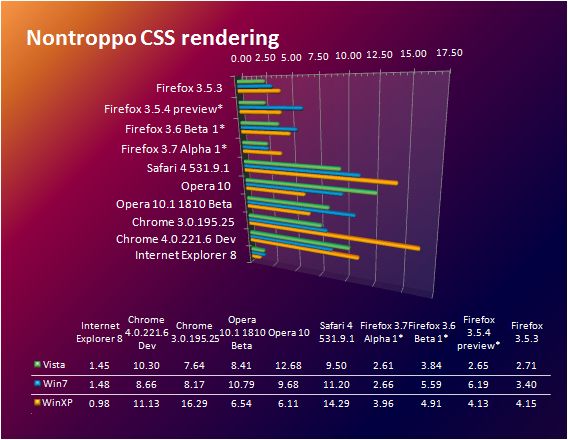

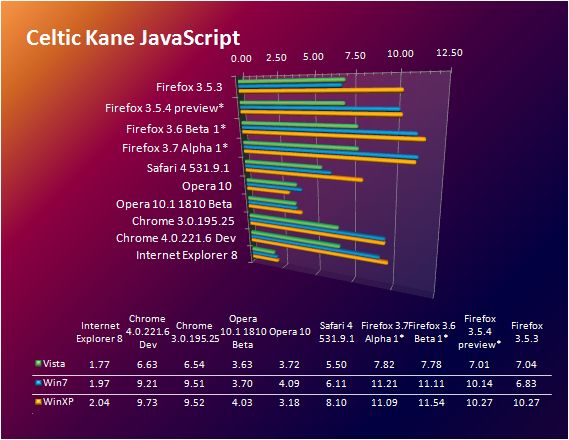

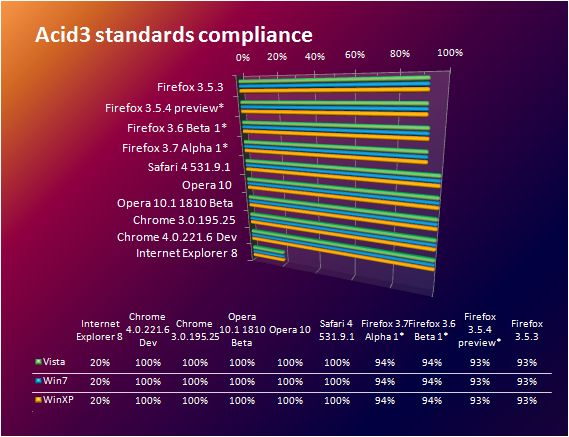

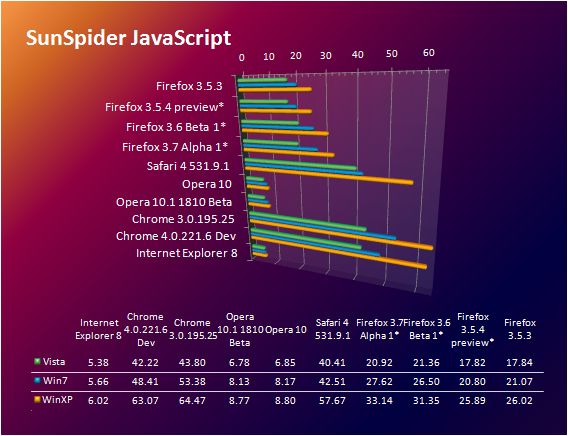

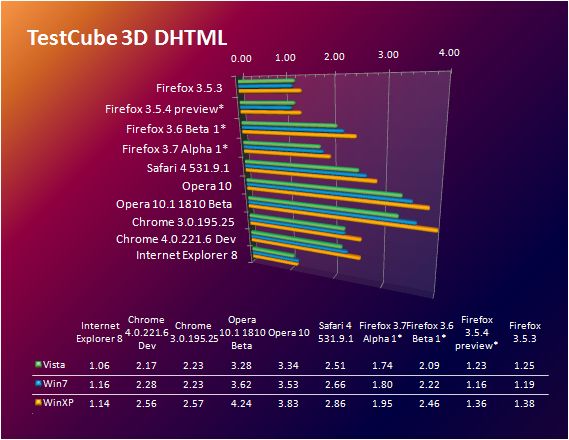

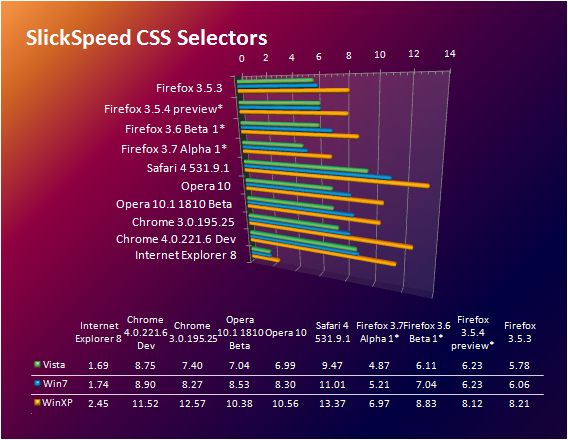

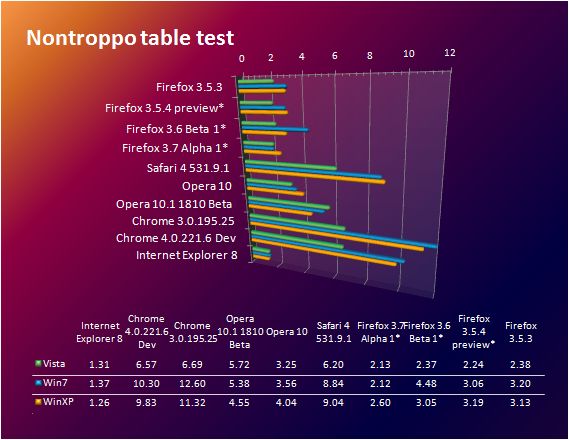

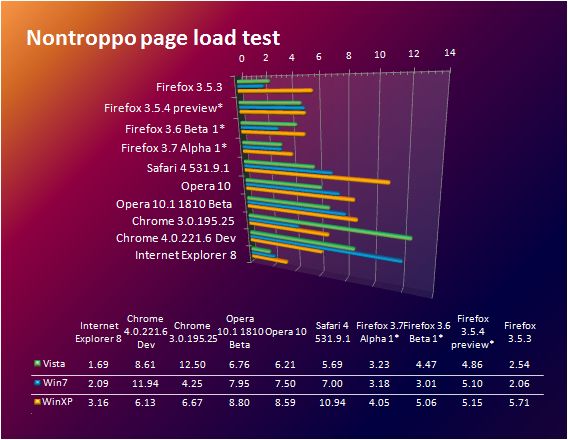

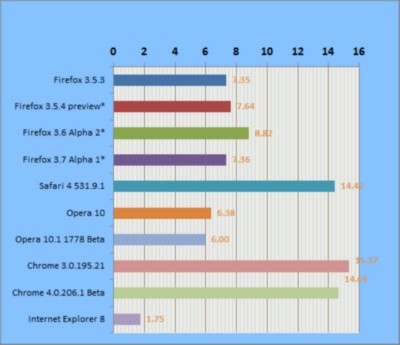

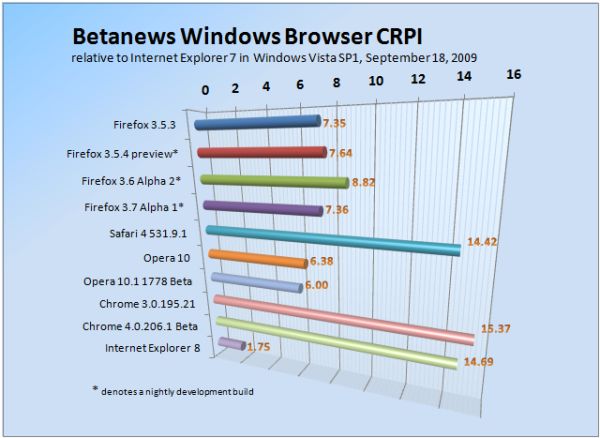

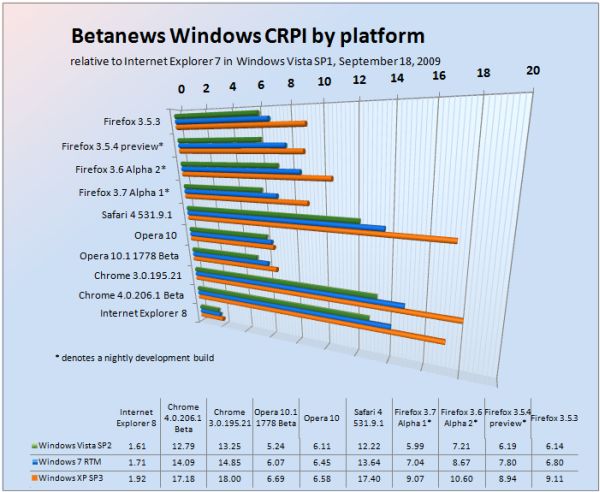

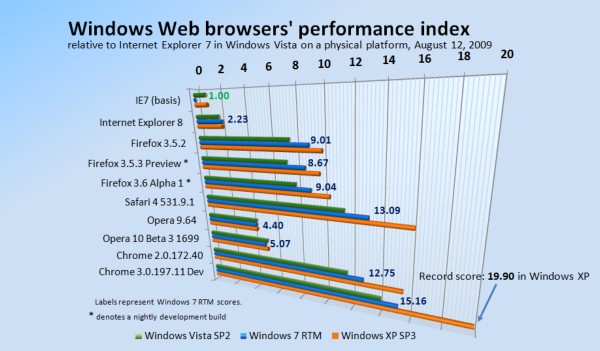

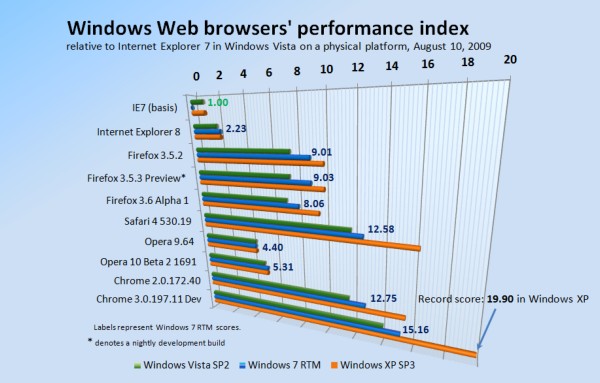

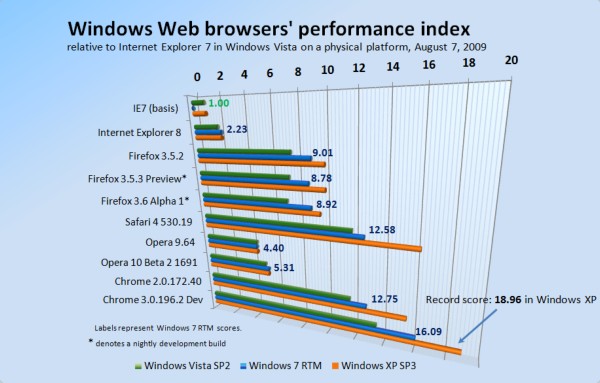

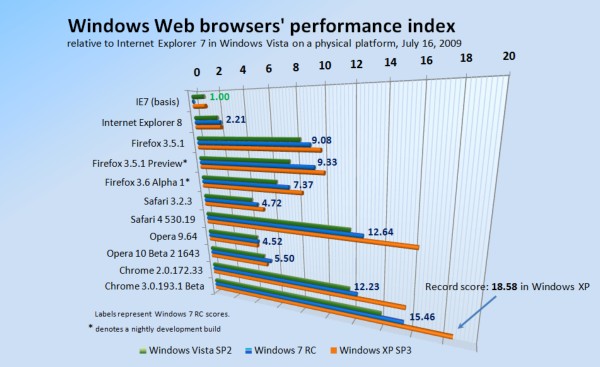

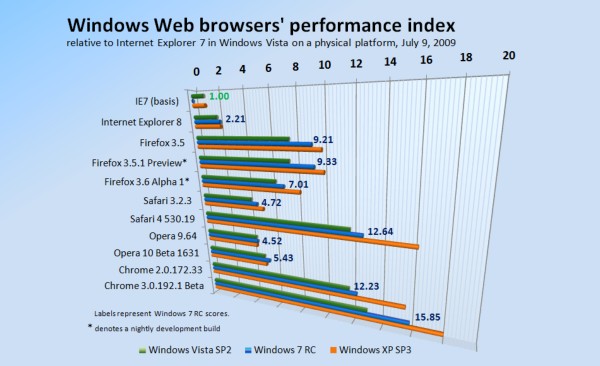

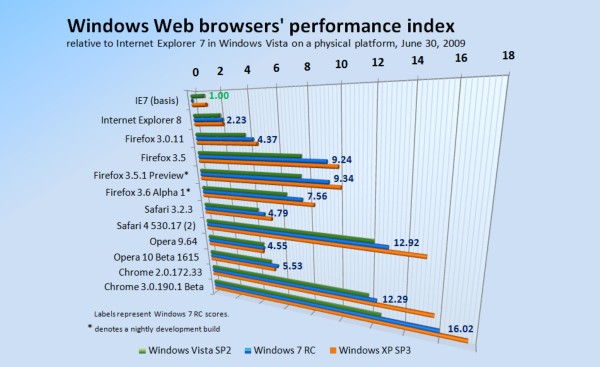

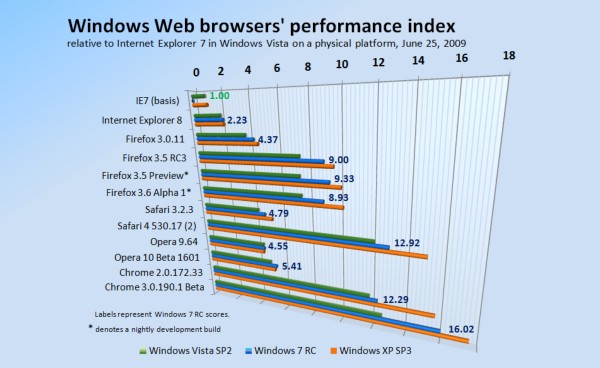

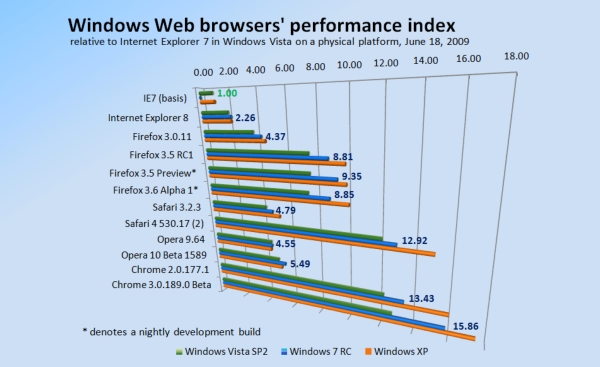

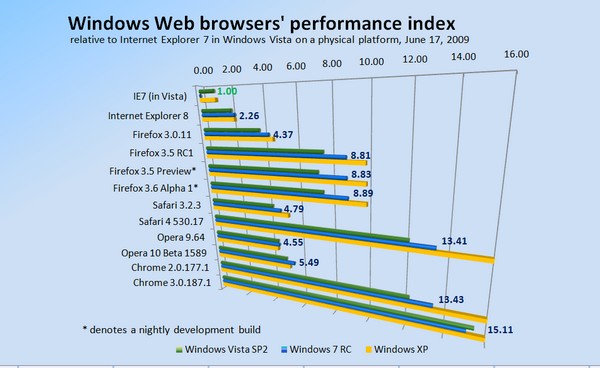

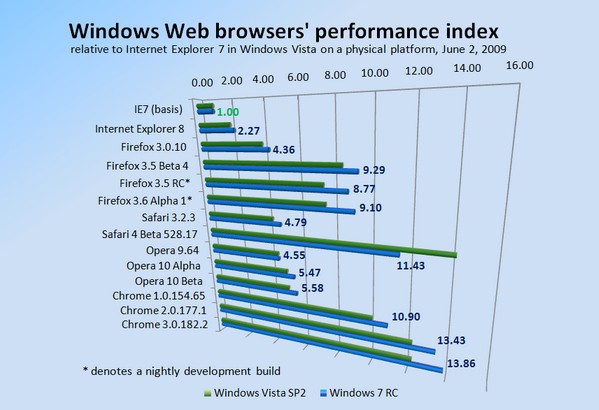

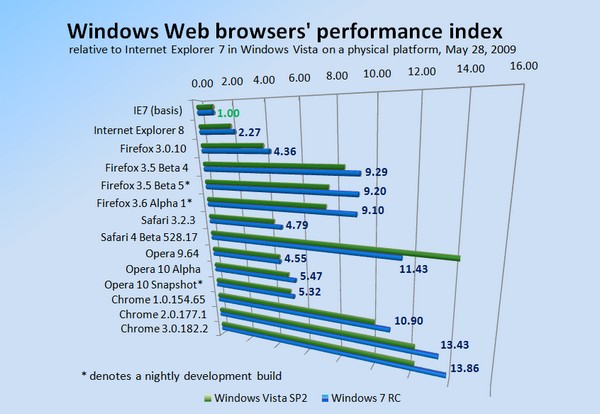

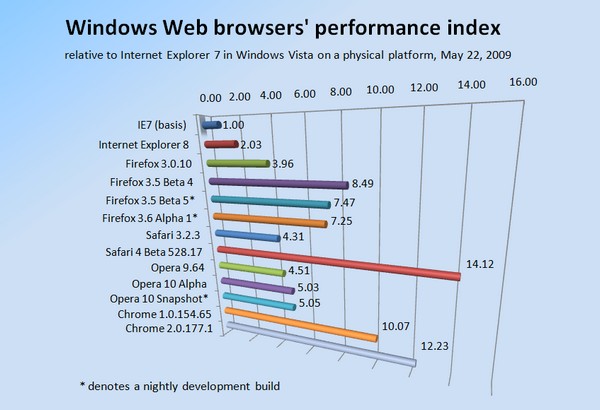

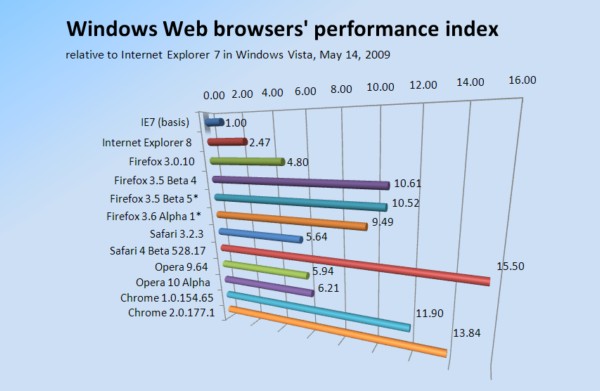

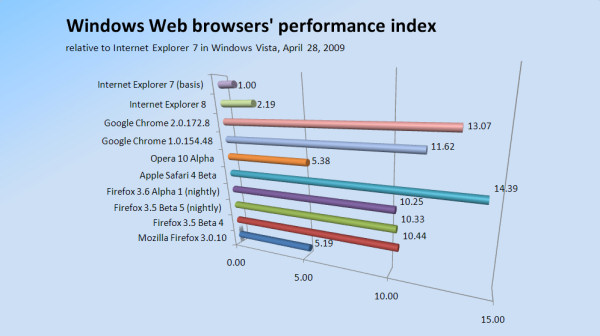

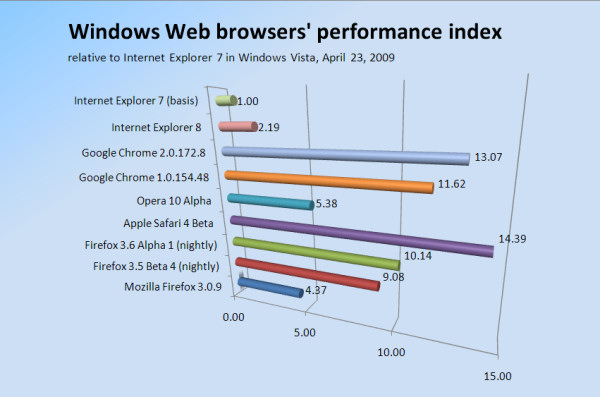

In the latest check of progress in the development of the major Web browsers for Windows, the brand that helped Betanews launch its regular browser performance tests appears to be making a comeback effort: With each new daily build, the WebKit browser engine -- running in Apple's Safari 4.0.5 chassis -- gains computational speed that it was sorely lacking.

Although

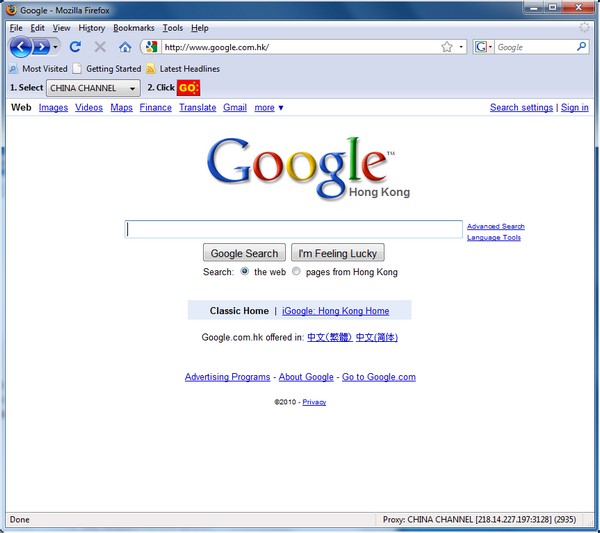

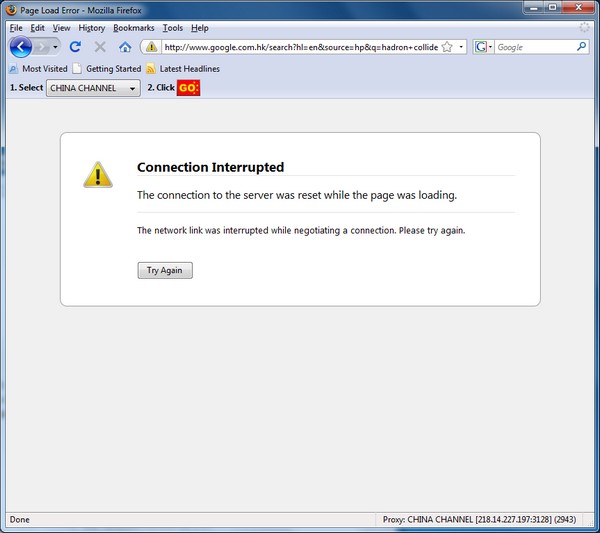

By mid-morning, however, it appeared the jig was up, as every request for a proxy connection ended up being blocked. ChinaChannel rotates its proxy requests through a list of services (including aiya.com.cn, chinanetcenter.com, and a few services whose names are now blanked out in their "Access Denied" messages) that, at one time, were open. Requests to Aiya were met with this message: "Access control configuration prevents your request from being allowed at this time. Please contact your service provider if you feel this is incorrect."

Microsoft's choice not to provide a migration path for mobile apps developers to move their native code from Windows Mobile 6.5 to Windows Phone 7 (with one notable exception) has claimed its biggest casualty to date: Mozilla, whose Firefox Mobile project, codenamed "Fennec," appeared to be the most promising alternative browser to IE Mobile, will discontinue development for all Microsoft handset platforms, including Windows Mobile 6.5. This announcement was made yesterday by Mozilla mobile project leader Stuart Parmenter.

Version 196.75 of Nvidia's GeForce/Ion drivers was indeed responsible for fan overheating problems reported by users. That's the verdict from Nvidia, which in a second round of responses to customer concerns has released version 197.73, which it assures users doesn't have the problem.

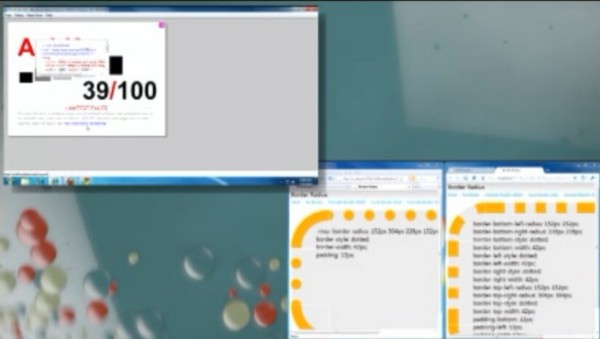

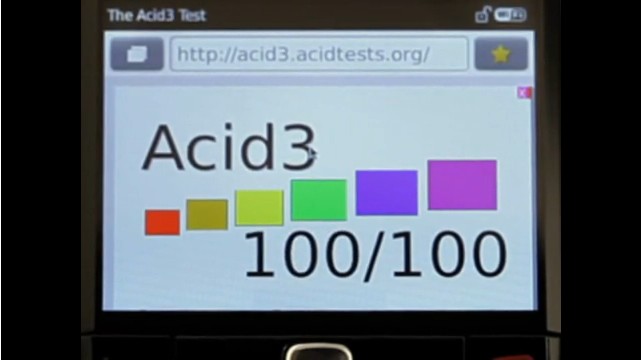

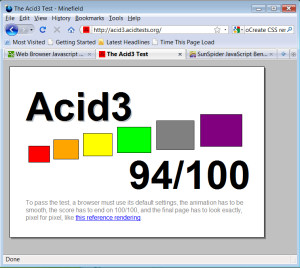

Meanwhile, the IE9 preview posts a 55% score on

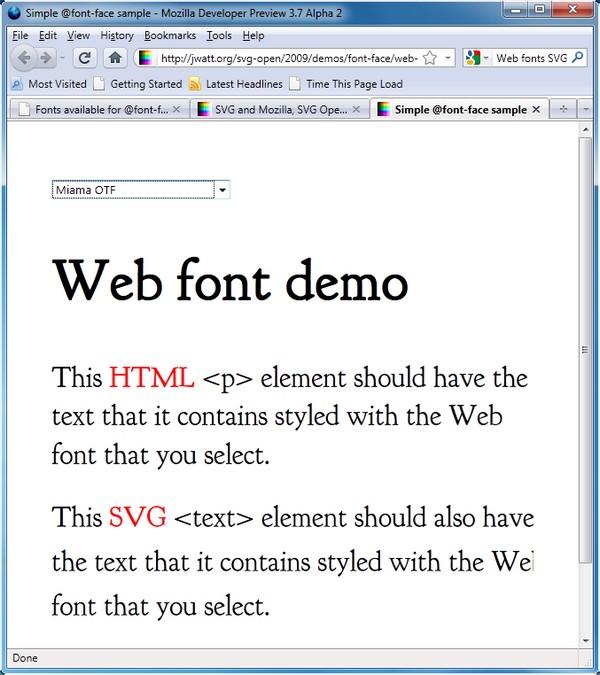

HTML 5 in large print, SVG in small print

This afternoon, Microsoft lifted the curtain on the first Internet Explorer 9 technology preview for developers. Initial demos at MIX 10 in Las Vegas by IE9 team leader Dean Hachamovitch reveal a minimum of end user features at this point -- the preview is described as a lightweight frame on top of a highly improved chassis.

The apps demonstrations were rapid-fire this morning, which is actually a bit unusual for a Microsoft conference that typically demos tools and PC applications in a casual fashion. Helping WP7S to lay some claim to coolness, Microsoft's Jeff Sandquist successfully demonstrated a good-looking, easy-to-use version of an app/service made popular on the iPhone: Shazam. And surprise, wouldn't you know it, it just happened to successfully identify the song "I'm a Bee" by The Black Eyed Peas (good thing it was censored a bit; this is, after all, a family-friendly conference). I wonder whether Shazam would have recognized the Neil Innes version?

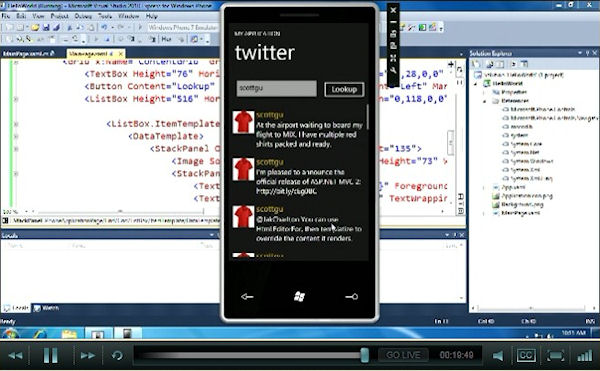

And while we're on the subject of family friendliness, what's a Microsoft conference without a little torture? Taking after the Nintendo Wii's implementation of "Mii's" -- the little people you create to look like you, or like someone, to play your games for you inside the Wii environment -- Scott Guthrie showed off a little Windows Phone "tool" called "Mannequin." It's a little doll you can dress up and manipulate using the phone's multitouch, whom you can carry around with you (be careful not to shake the phone too hard), and whom you can torture at your leisure.

Screenshot of an early build of the Icarus Scene Engine, an OpenGL-based 3D scene editor that is itself rendered in 3D, using the

The nice thing about the Internet, or so I've been told, is that it has all this information. Perhaps you've noticed this lately, but the big problem has been that there's no one way to get at this information with any kind of consistency.

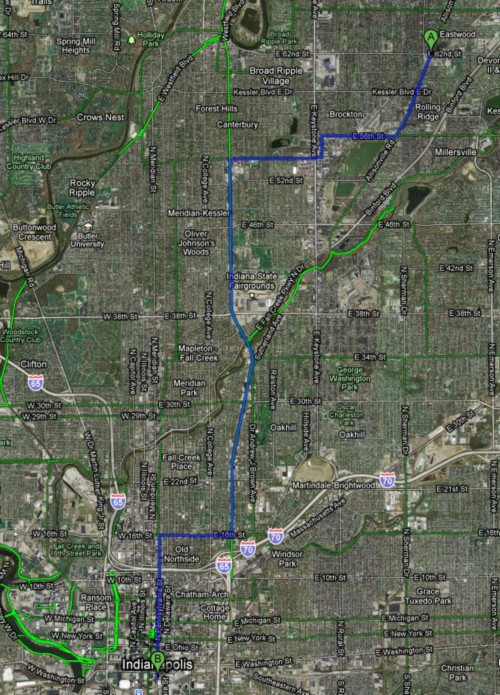

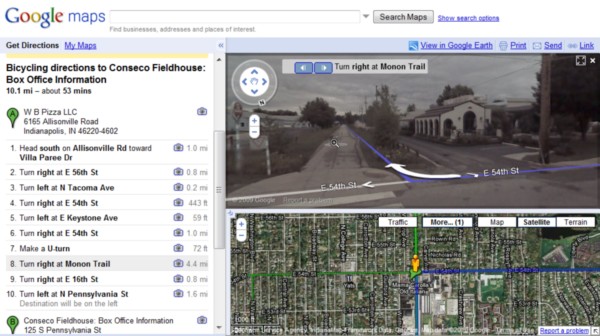

Here's what I mean: This screenshot shows the suggested route from one of my favorite neighborhood pizza joints to Conseco downtown. Now, Google knows that Allisonville Road recently added a bike lane (not a good one, it's in dotted green), that it leads to a nicely protected sidewalk down Fall Creek Parkway, and that would be the most direct route downtown. Indeed, if I were a pedestrian, that's the route it would suggest. But as a cyclist, I know that's not the route I want -- crossing onto Fall Creek over Binford Blvd. means running across ten lanes of traffic without a crosswalk, where motorists are speeding through on a shortcut to I-69.

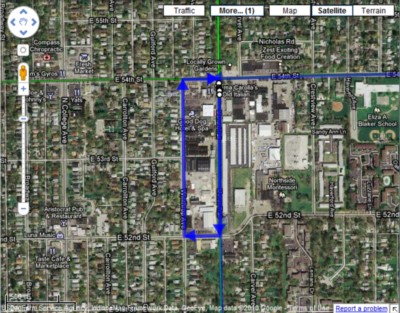

But it can only do this if it gets its front end right first. The slightly incorrect portion of its suggested route for my trip downtown was a 72-foot diversion that actually does show up when you zoom in the map. But partly because the granularity of the line-dragging routine does not appear to be as fine as the map's own zoom capability, and partly because the U-turn is a three-step process which Google Maps presumes must lead from point to point to point in every circumstance, my attempt to simply shave off the U-turn in the directions was mistaken as a way for me to take a lap around the strip mall parking lot, shown here.

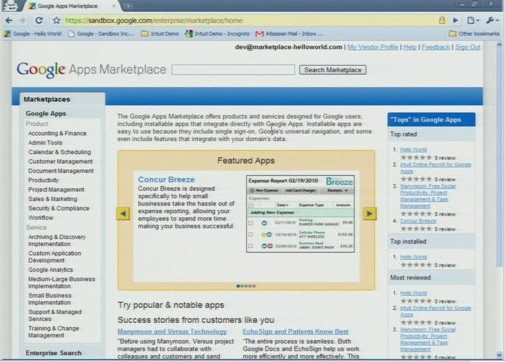

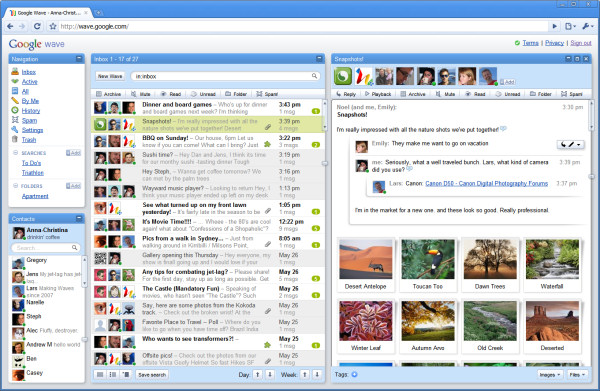

11:10 pm EST March 9, 2010 · "The Google Apps Marketplace...[is] a great way to discover, to find, and install applications into your business. But not just any applications -- applications that are deeply integrated with Google Apps...that enable a single sign-on, that enable different kinds of cloud-based software to share data," explained Vice President of Engineering Vic Gundotra to the Campfire One attendees. "Applications that integrate with the navigation, integrate with the user interface of the tools that your employees already know and love and use every day."

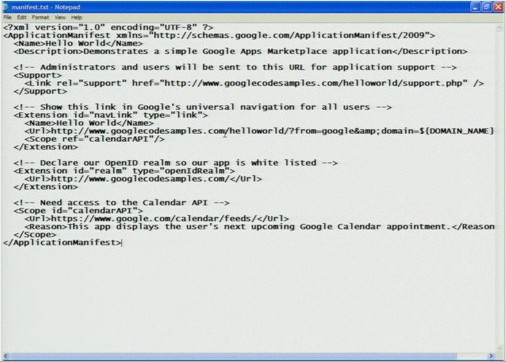

Glaser outlined another point of connection: "If you've ever used Google Apps, you've noticed at the top left of the screen, right above your mail or your calendar, there's a nav bar. That means you're a click or two away from getting at any of the other apps in the Google Apps suite...Well, if you have an application, you probably want it to be a part of the same navigation model, part of the same nav bar, so your users are a click or two away from not only the built-in Google Apps, but also from your app. How do you do that? You put an entry in the manifest -- a few lines of XML, you tell us, 'Here's the string that I want to have show up in the menu, and here's the link that it should go to when somebody clicks on it.'"
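To make the "few lines of XML" idea concrete, here is a minimal sketch in Python of generating such an entry: one string for the menu and one link to follow on a click. The element names `NavEntry`, `Label`, and `Url`, the function name, and the example URL are all hypothetical, chosen only for illustration; they are not the actual Google Apps Marketplace manifest schema, which the quote does not spell out.

```python
import xml.etree.ElementTree as ET


def build_nav_entry(label: str, url: str) -> str:
    """Return a small XML fragment describing one nav-bar link.

    NavEntry, Label, and Url are hypothetical element names used for
    illustration; the real manifest schema may differ.
    """
    entry = ET.Element("NavEntry")
    # The string that should show up in the menu.
    ET.SubElement(entry, "Label").text = label
    # The link to open when somebody clicks on that string.
    ET.SubElement(entry, "Url").text = url
    return ET.tostring(entry, encoding="unicode")


if __name__ == "__main__":
    print(build_nav_entry("Expense Reports", "https://example.com/start"))
```

The point of the sketch is only that the developer supplies two pieces of data, a label and a target; everything else about placement in the nav bar would be handled by the host suite.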

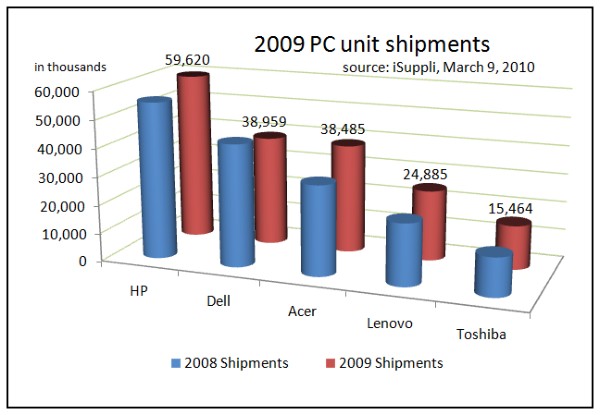

With the economic sinkhole of 2008-09 now a figment of many technology companies' past, most PC manufacturers are back on their regularly scheduled growth curve. Last month, Dell indicated to investors that it was returning to that curve as well, reporting "product shipments...up at double-digit rates year-over-year" during its end-of-fiscal-year 2010 earnings report.

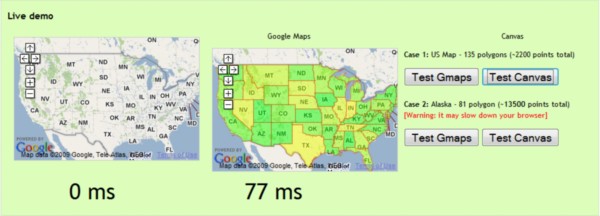

Should the next version of HTML, the Web standard that embodies how pages are laid out and constructed, include explicit specifications for inline, 2D dynamic graphics? There are valid arguments on both sides. One side believes that the ability to plot charts and animations would have been part of the original HTML standard anyway, had the technology existed on the back end in the beginning; giving HTML 2D graphics now, they say, plugs a hole left open for too long. Another believes the HTML5 standard should simply specify an API for plug-ins, to let separate groups of engineers evolve a methodology for plotting graphics at their own pace, and on their own track.

Two years ago, during a private premiere event entitled "Heroes Happen Here" held at the same Nokia Theater in Los Angeles where the Emmy Awards are now staged, and introduced by none other than Tom Brokaw, Microsoft rolled out a truckload of new server software product lines to help cement the company's new prominence in businesses and enterprises. One of the "heroes" that day, as Microsoft phrased it, was

"And then there's a question of who would pay for that," he said. "Well, maybe markets will make it work, but if not, there are other models: use taxes for those who use the Internet. We pay a fee to put phone service in rural areas, we pay a tax on our airline ticket for security. You could say it's a public safety issue and do it with general taxation."

In a precedent-setting win for the company perceived as the originator of "time-shift" video recording, the Federal Circuit Court of Appeals has fully affirmed a lower court's judgment that satellite service provider EchoStar's software continued to infringe upon TiVo's patents even after making significant changes to address its complaints. This despite what EchoStar (whose DVR boxes are also used by former sister company Dish Network) had called "Herculean" efforts to steer clear of TiVo's intellectual property, and a

Italian Prime Minister Silvio Berlusconi continues to own some of that country's largest publishing and broadcasting institutions, including Mediaset. That corporation filed a half-billion euro lawsuit against YouTube in July 2008, demanding that it remove all instances of content that allegedly infringes upon Mediaset's copyrights -- including clips from soccer games and the reality show "Big Brother." Each hour someone watches Il Grande Fratello on YouTube is one less hour of watching it on Canale 5. Last December, a Rome court ruled in Mediaset's favor.

For any other company besides Google, a week like this would be interpreted by some in the press as the beginning of the end, and it's only Wednesday. However, an individual breakdown of every bad story, element by element, reveals the company may not be deluged so much by a hailstorm of controversy as a cavalcade of unfortunately simultaneous snowballs, none of which may end up leaving any lasting damage.

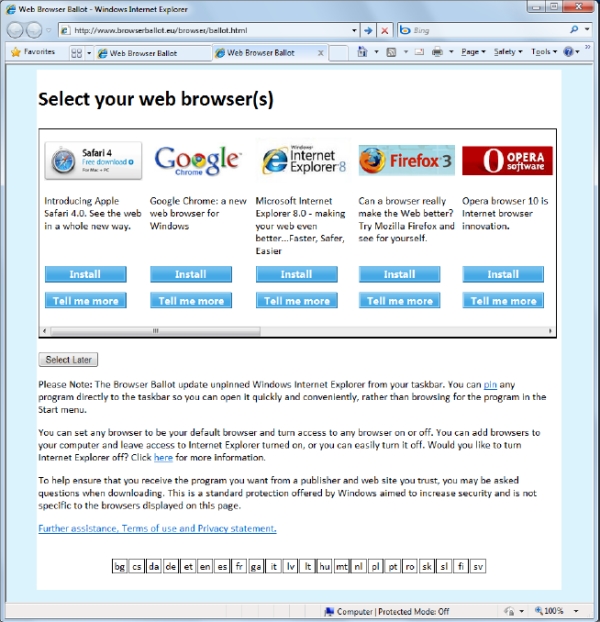

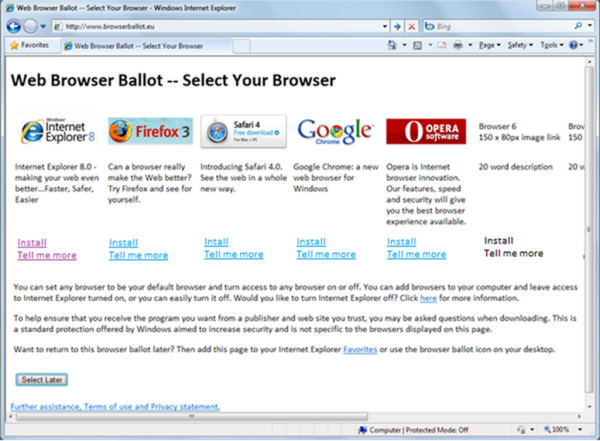

One of the more obvious, but little mentioned, facts about the upcoming Windows Web browser "choice screen," to be

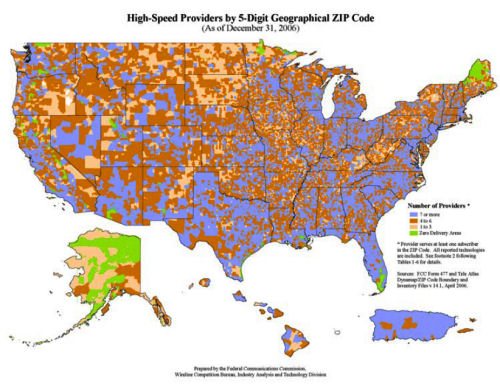

"Building world-class broadband that connects all Americans is our generation's great infrastructure challenge," the Chairman told the audience of state public utility commission members. "Some compare high-speed Internet to building the interstate highway system in the 1950s. It's a tempting comparison, but imperfect. In terms of transformative power, broadband is more akin to the advent of electricity. Both broadband and electricity are what some call 'general purpose technologies' -- technologies that are a means to a great many ends, enabling innovations in a wide array of human endeavors. Electricity reshaped the world -- extending day into night, kicking the Industrial Revolution into overdrive, and enabling the invention of a countless number of devices and equipment that today we can't imagine being without. Now in the 21st century, it is high-speed Internet that is reshaping our economy and our lives more profoundly than any technology since electricity, and with at least as much potential for advancing prosperity and opportunity, creating jobs, and improving our lives."