By Scott M. Fulton, III, Betanews

[ME's NOTE: This article was originally published on January 30, 2009, here in Betanews. I'm reprinting it today in honor of the memory of a man I refer to in this article, who was one of my early mentors in computing and in business, and who passed away last October 26: Elmer Zen "E.Z." Million, the proprietor of the original Southwest Computer Conference, later the CEO of private aircraft services company Million Air, and occasional candidate for some lofty, high Oklahoma office. He was a brilliant businessman, a true fiscal conservative who really did teach me how to run a business, through long hours in his office poring over accurately written ledgers. And he was the absolute antithesis of everything people assumed a "computer pioneer" was, but he was all of that and more. I dedicate this to E.Z.'s enduring memory.]

The reason there's a Macintosh today is not because of some brilliant flash of engineering genius, as many revisionists like to believe. It's because Apple had the audacity to make a few big mistakes first, and learn from them.

The main reason I wasn't escorted out of those first computer conferences, even though they typically displayed signs that expressly forbade anyone under 18 from entering, was that I looked the part of someone older who knew what he was doing. The moustache and the tailored suit somehow helped, like a rookie NASCAR driver who wanted to fit in with the big boys in the pit crews.

It helped even more to know some people. Three decades ago now, I'd gotten to know a fellow who was one of the first great regional conference organizers, a promoter and business consultant whose given name truly was Elmer Zen Million. At first, he called me "The Kid," which always made me shrink a little because that's exactly what I tried not to look like at the time. After a few years, I was Scott to him and he was E.Z., and I was exempt from the under-18 rule...until one year when it finally didn't matter. I was a private consultant, earning a small living, and just introducing myself to the publishers who would soon jump-start my career.

It was the winter of 1983, one month before the rule would no longer apply to me. By this time, a local computer store called High Technology had become one of Apple Computer's top-selling independent retailers. I used to hang around that store and drum up business for myself, finding clients and helping them set up Apple II and Atari 800 computers. They didn't mind because I'd end up sending my own customers back to them for more software, which was a high-margin business then. Folks were more interested in buying a computer that ran something weird-sounding like VisiCalc or Electric Pencil if they knew they'd have the help of someone who could give them a hand.

So High Tech had purchased the prime space at one of E.Z.'s semi-annual computer conferences, and I was there to help set up. The store manager had reserved a big chunk of his floor display for the arrival of a computer he hadn't seen yet. It was coming directly from Apple, its delivery was already a few days late, and all we knew about it was that it was not the "Apple IV" that had been rumored, and that it would cost ten thousand dollars.

"So are you sure you want The Kid around?" asked one fellow. "Who, Scott?" replied the High Tech man. "Are you kidding? I don't even have an instruction manual for this thing. He's the only hope we have."

The crate arrived after lunch, literally looking like the "major award" shipped to Ralphie's dad in the movie A Christmas Story. We were told to move our food and drinks a respectable distance from this major device since we wouldn't know how delicate it would be, or how sensitive to soda pop drops and the grease from hot dogs. Some workmen gently extracted the device from its container, a process which consumed two hours, during which I probably consumed a six-pack of Dr. Pepper. And when it was eventually set up, it was missing its startup disk.

![Apple's 're-invented' Lisa, model 1 (1983) [Photo credit: ComputerHistory.org]](http://images.betanews.com/media/2642.jpg)

A High Tech associate eventually found it back at the store and drove it downtown, but in the meantime, we sat pondering what this new thing was going to do. "Lisa," we'd concluded, must be a code-name and not the final brand. Somebody thought it would eventually be the Apple IV anyway, but the High Tech manager had heard from Cupertino that the Roman numerals had been declared history after "III."

I saw that Lisa came with a "puck." At least that's what I thought it was called; two years earlier, hanging around another computer conference, a guy from Tektronix instructed me on how to use its CAD/CAM system. It came with a digitizer device called a "puck" that you placed on a table, and you could also slide it along the left side of the table to select functions for the program.

I had met a guy the year before who called it a "mouse," but I thought it was a stupid name, and surely not the one anyone would settle upon. It was only several years later, after sorting through the mountain of business cards I'd collected over the years, that I realized, in one of those "holy-crap" moments, that the guy was Doug Engelbart.

And since I had also been privy to a demonstration of the Xerox STAR Workstation a year or so earlier (although the fellow there also refused to call it a "mouse"), I was the one designated to flip the switch on Lisa. It took me about an hour to figure out how to boot the thing. You couldn't even pull out the diskette by yourself; a software switch made the disk slide out slowly and deliberately, like teasing a sideways sloth and being teased back. Even E.Z. laughed at me as he walked by, at one point saying, "Who would've thought Apple would be the one to make The System That Stumped Scott?"

It was mid-afternoon, and only when the electric sloth stopped spitting out cherry-bomb icons did we start drawing a crowd. I did hear one fellow praise the cherry-bombs, though, in language that stuck with me: "You know, if you think about it, that's not a bad deal," he said. "Imagine an operating system that's so smart that it knows it's hosed."

We spent the next several hours trying to guess how this most "intuitive" of systems worked, and I took extensive notes. By "we" at this point, I mean a few hundred people -- an audience had formed around our table. Some brought out Samsonite folding chairs, and E.Z. started making the rounds to make sure everyone was comfortable and had refreshments.

We guessed wrong far more often than we guessed right. Ideas for what to do next were being shouted fast and furiously by folks in the crowd. The idea with Lisa was that you had this document, which you tore off from this on-screen pad using the puck. Then you used a menu to decide what to do with this open scrap of paper. Once we found LisaDraw, we started going to town with it. That's when I could let the puck go for a while and let other people (carefully, now, this thing costs ten grand) experiment with making the arrow move the way their hands moved.

The most amazing thing I remember was how many folks were afraid of it. Psychologists who've studied the history of advertising have pointed out that it's color that attracts people to a new gadget first and foremost. People thought of the Apple II, and even Apple's logo, as being about color; this thing was monochrome and beige, like a brick of vanilla ice cream left to melt in the sun. We had decided "Lisa" couldn't possibly have been Jobs' or Wozniak's girlfriend -- perhaps a junior-high-school Spanish teacher, but not anyone close.

[Photo credit: An original Lisa advertisement, from ComputerHistory.org]


The world that made the Mac

When younger folks today (I don't have to pretend I'm old enough anymore) ask me what it felt like to experience a Macintosh for the first time, expecting a moment of revelation as though I'd set foot on Mars, it's hard for them to understand this embryo of the Mac in the context of the world we early developers lived in. While we appreciated the Apple II for having accelerated the pace of evolution in computing, and for having been smart enough to let people tinker with its insides as with the Altair 8800 a mere three years before the II premiered, most of us in the business had the sincere impression that Apple, of all companies, least understood what our work was about. The Apple III was proof -- the only way it could run good software was when it could step down into Apple II emulation mode. And the Lisa didn't even have that.

What it did have was Motorola's 68000 processor, and now we could really see what a world of difference it would eventually bring to our lives. Back in the early '80s, the CPU ran not only the operating system and the software but whatever graphics the software was capable of doing -- it wasn't shipped off to some co-processor. Simply watching the mouse pointer move fluidly on the screen was impressive to us at the time -- more amazing, even, than creating our first plaid-patterned polygons with LisaDraw for no particular reason.

But also, the world of computing was full of so many more great names than today. Sure, IBM was marching in and would set the tone for the next few decades, but we still had Commodore, Atari, Osborne, KayPro, Ohio Scientific, HP (which had its own designs for business computers at the time), Exidy, Sinclair, Apollo, and the brand which brought me into this business in the first place, Radio Shack. The world was full, and new ideas in hardware were coming out everywhere. Sure, some geniuses in particular rocked the world, but from our vantage point, that's what everyone was doing...that's what we were doing. Steve Jobs was one of our rock stars, sure. But we had a plethora of others -- Jay Miner, Adam Osborne, Chuck Peddle, Clive Sinclair; the writers like Rodnay Zaks and Peter McWilliams; the great publishers we loved (some of whom I'd later work for) like David Ahl, David Bunnell, Wayne Green; and the brilliant man whose name has long been forgotten, but who may have contributed at least as much if not more to our foundation of computing than anyone else, Gary Kildall.

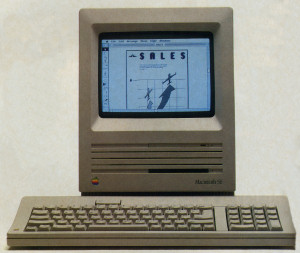

It started to come together with the advent of the Macintosh Plus, with 1MB of memory -- enough to actually run software -- and a numeric keypad built into the keyboard.

So when the Macintosh first entered the scene for us, you have to understand, "new" for us came every three months or so. Even then, some of us were still puzzling over the Lisa. Though we all loved the "1984" ad, our expectations of the first Mac were based on our supposition that it would be an attempt to correct the failures of the Lisa. Being priced $8,000 less certainly helped.

But even the first Macs weren't brilliant, not really. They suffered from what we all perceived to be Steve Jobs' basic tendency to go with whatever he had at the time, explaining that it's all by design, and if we didn't get it, then it's our fault. The first Mac was a closed system -- oh sure, it had a serial interface that was being "pioneered" by Apple, for the connection of external devices that we were promised but never actually saw. But we couldn't get hard drives to work with the first Macs, no matter how hard we tried (SCSI would only come later). The very first Mac-only conferences, sponsored by the nation's Apple II users' groups -- easily the most friendly and the greatest computer users who ever walked this planet at any time in our history -- were flooded with businesses whose missions were to connect real peripherals to these things. We had laser printers, for crying out loud, and they were beautiful, but we had to fit our documents on these floppy diskettes; and without compression, we could easily use up one diskette per document.

And because opening up one's Macintosh to do something horrible and unsanctioned, such as hiding a hard disk, constituted a violation of the sacred and sacrosanct Apple Warranty, none of these businesses were given accreditation by Apple, and many of them were scared to even employ the Apple logo in their brochures for fear of retribution. Thus the first Mac users' groups -- the offshoots of the Apple II groups -- flourished despite Apple. In fact, by the time Jobs was pushed out of his operating role at Apple in 1985, many leaders of the Apple community were tired of him and his bloviating nature, and were more than happy to see the down-to-earth, all-business, no-frills approach offered by CEO John Sculley win out.

Throughout the 1980s, the Macintosh was never the most powerful 68000-based computer you could buy. In terms of raw processing speed, the Atari ST (the focus of my career for about four years) blew the Mac away in every single challenge, yet that computer was being peddled by a company that was as clueless about computing as FEMA was about hurricanes. And for sheer fun and excitement and creativity, the Commodore Amiga ran circles around the Mac at warp speed. Both the ST and Amiga had orders of magnitude better software going into 1987. Meanwhile, the world's best software authors all wanted to write for Mac and were stymied by all the hoops Apple made them jump through just to be certified, to get development kits, to attend the seminars, and to be treated in kind. Then something happened around 1988, in the era of the Mac SE and the Mac IIci, when the 68020 and 68030 processors roared to life. The software got better, the systems became more reliable...HyperCard entered the public vernacular. And Apple became more desperate, more humble, and more willing to let other companies enter into its realm. There was an opening, for the first time. System 7 took bold steps forward in functionality and principle. It took five long, painful years for the Mac to truly be born.

Indeed, the Mac's greatness derives from its designers' willingness to break barriers. Not everything they tried was novel, and let's face it, a good deal of it (just like Windows) was stolen from someone else. Many of the concept's original ideas fell flat, which is the key reason none of us boot up with Workshop disks today.

The first great Macintosh: The Mac SE

The true brilliance of Macintosh is the ideal that computing can have one way of working that we can believe in and stick to. That brilliance was inside the crate the Lisa was delivered in, but it may have been too hard to notice on day one, when I flipped the switch for the first time. Back in the late '70s and early '80s, when systems crashed, we lost everything we were working on, and sometimes the disk it was stored on. Yet even the Lisa brought forth the idea that an operating system can be all-encompassing, that you could be "in" the Lisa rather than "in" dBASE or VisiCalc or Valdocs. The computer itself could define the way its user worked.

Granted, that was a great idea that was probably born in Gary Kildall's mind before anyone else's, but Apple made it work first. It took a lot of time and patience, and some swearing -- most of which has been forgotten by revisionist history. But if you were there in the room when the switch was flipped, or if you can imagine sitting there on a folding chair and watching it happen and sharing the joys and the frustrations in equal measure, then you can truly appreciate what the Mac has brought us.

[Photo credits: Scans of the Macintosh Plus (1986) and Mac SE (1987) from Byte Magazine]

Copyright Betanews, Inc. 2009