When scoping out new servers for customers, we usually look towards Dell, as its boxes have the right mix of price, performance, expandability, and quality that we strive for. RAID card options these days are fairly plentiful, with our sweet spot usually ending up on the PERC H700 series cards that Dell preinstalls in its midrange to higher-end PowerEdge server offerings.

But recently we were forced into using one of its lower-end RAID cards, the H200 PCIe offering. This internal card was one of the few dedicated RAID options certified to work in a refurbished server we had to put back into production, a Dell R210 1U rack unit. The specs looked fine and dandy in nearly all respects, except for one area I like to avoid: the lack of a dedicated battery-backed flash cache.

This is clearly noted on Dell's full PERC rundown site, and you can dive into the benefits of battery-backed flash (BBF) in this excellent Dell whitepaper. For the uninitiated, a battery-backed flash cache on a RAID card allows for near-bulletproof data safety in the face of power failure, while considerably increasing the performance of RAID arrays.

I didn't think going with an H200 for an R210 refurbishment would be the end of the world. Even without a dedicated cache on the card, I knew full well that the disk drives in a RAID array have their own onboard cache that works pretty well on its own. Heck, most drives made in the last ten years have some form of dedicated cache to give workstations the performance benefit of writing to cache before writing to the disk.

The advent of SSDs has nearly eliminated the need for caching mechanisms, but the reality of the situation in the server world is that spinning disks still make up the majority of current and new server installations. Until SSD prices come down to the cost-per-GB levels that SATA or SAS can provide, spinning hard drives will remain the workhorse for the foreseeable future.

And this described the exact situation we were in with this customer's Dell R210 rebuild. We paired two (rather awesome) Seagate 600GB 15K SAS drives in a RAID 1 on this H200 controller so that we could pass a single volume up to Windows Server 2012 R2. Our storage needs for this client were rather low, so employing Windows Storage Spaces was a moot point for this rollout. It also didn't help that the R210 only has a pair of 3.5-inch internal drive bays, not the 4-8 hot swap bays we usually have at our disposal on T4xx series Dell servers.

The problem? After we loaded a fresh copy of Windows Server 2012 R2, things just seemed strange. Slow, as a matter of fact. Too slow for comfort. All of the internal hardware on this server tested out just fine. The hard drives were brand new, as was the RAID card. Even the SAS cable was a brand new StarTech mini-SAS unit, freshly unwrapped from the factory, and it tested out fine, too.

It seems we weren't alone in our sluggishness using default settings on the PERC H200 card. The Dell forums had numerous posts like this one describing speed issues, and the fine folks at Spiceworks were seeing the same problems. After some Googling, I even found sites like this one by Blackhat Research detailing extensive testing of these lower-end RAID cards that confirmed our suspicions.

Most people recommend replacing these low-end H200/H300 cards outright with H700-class or better cards, but this wasn't an option for us. The Dell R210 is rather limited in which RAID options it will work with. And seeing how much money we had already doled out for other parts on this particular server, going back would have proved to be a mess and a half.

What Is Dell Doing to Cripple the Performance on These Cards?

I didn't want to take these suspicions at face value without some investigation of our own. While collective hearsay is rather convincing, I wanted to see for myself what was going on here.

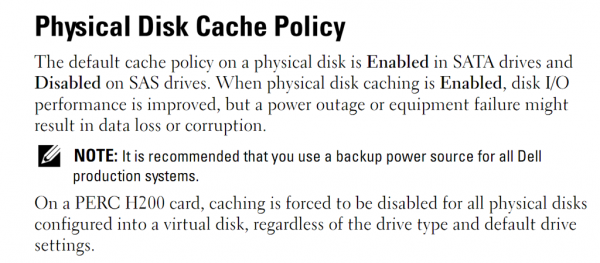

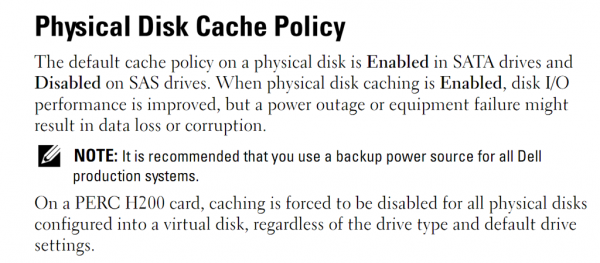

The culprit at the heart of these performance issues seems to be Dell's boneheaded policy of disabling the native disk cache on SAS drives, even though it leaves the cache enabled on SATA disks by default on H300-level controllers.

Blackhat Research pinned a nice screenshot of this language in a Dell user manual:

Since we are using the H200 card on our R210, it wouldn't matter if we had SATA drives in our RAID array. They were going to disable the cache regardless.

Dell is going out of its way here to make the lives of server admins rather hellish wherever low-end RAID cards are in use. Selling lower-end RAID cards without BBF is not my issue; cutting costs on lower-spec hardware is the name of the game.

But to assume that those installing these servers aren't savvy enough to use battery backup units at all? That's a bit presumptuous on its part. Honestly, anyone putting servers into a production environment without proper battery backup power for the core system likely shouldn't be in the driver's seat.

I know very well it can and likely does happen (I've seen it firsthand at client sites), but you can assume lots of things that may or may not be done. A car may be purchased with AWD, and the manufacturer could disable that functionality if the vehicle is sold in Arizona, assuming the driver will never encounter snow. Should that potential reality decide the configuration for all users of the product? Of course not.

So Dell disabling the cache on hard drives for users of its low-end RAID cards oversteps its boundaries, in my opinion. Judging by what I am reading, this incoherent decision by Dell's server team has likely caused legions of PERC purchasers to dump the product entirely for something else.

I decided to do a little testing of my own in an effort to resurrect this server rebuild without moving to a plan B. All firmware was updated on all ends: server BIOS, RAID card firmware, and the firmware on the hard drives. We also made sure the latest PERC driver for this card was pulled down onto the server (it happened to come from Windows Update).

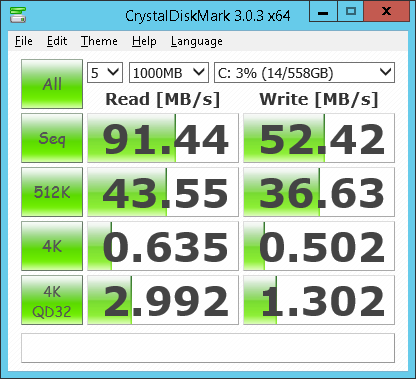

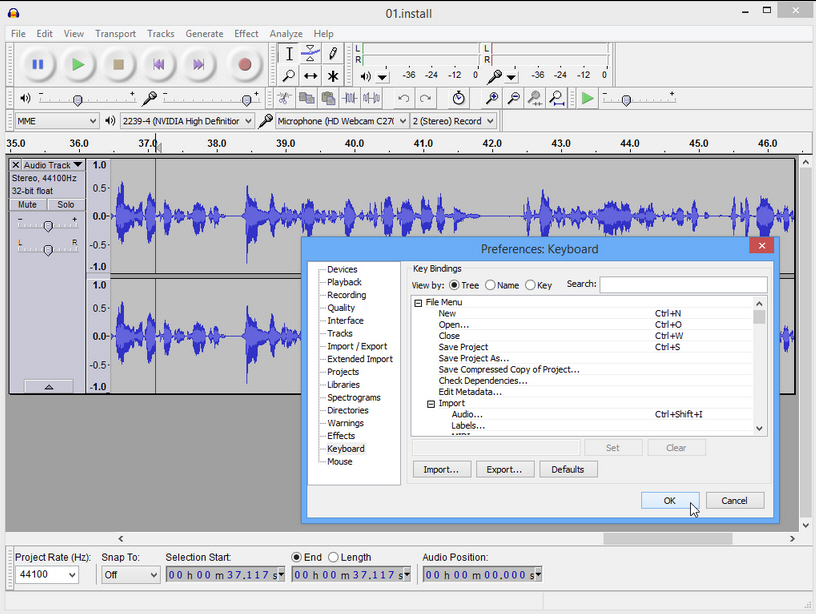

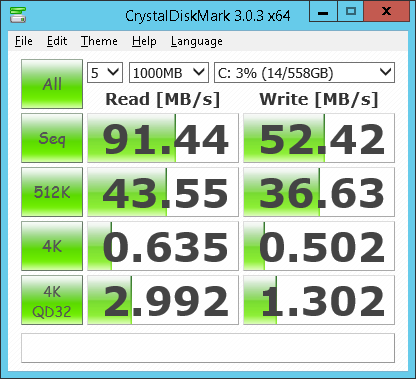

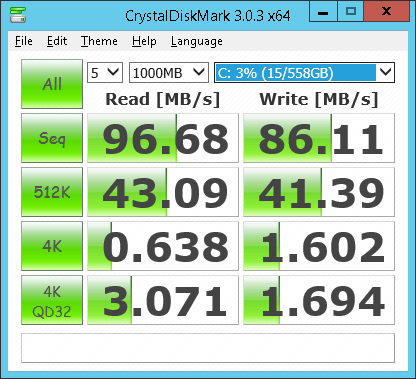

Prior to enabling the local cache of the underlying disks, here are the results of a single run of CrystalDiskMark 3.0.3:

Not terrible numbers, but I knew something was up, and seeing how everyone on the forums was up in arms over this H200 controller, turning that cache policy back on was likely required for workable performance.

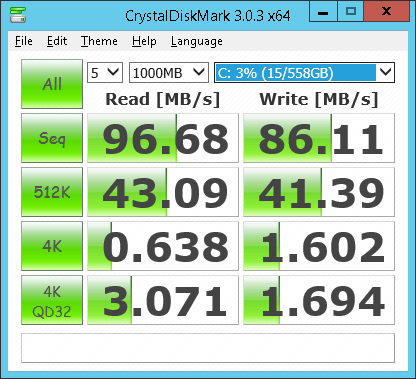

I ended up using LSI's MegaRAID Manager utility to enable the cache on the card, and here are the numbers we saw after a full server reboot:

The biggest change after enabling the cache? Look at the sequential write figure: 86.11 MB/s with caching, compared to 52.42 MB/s before. That's more than a 64 percent improvement, pretty darn big if you ask me.
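To check the math: the relative change is the difference between the two figures divided by the starting figure. A trivial Python sketch using the two sequential write numbers quoted above (`percent_change` is just an illustrative name, not part of any tool):

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    return (after - before) / before * 100.0


# Sequential write figures (MB/s) from the CrystalDiskMark 3.0.3 runs above
before_mbps = 52.42  # disk cache policy disabled
after_mbps = 86.11   # disk cache policy enabled

print(f"{percent_change(before_mbps, after_mbps):.1f}%")  # prints "64.3%"
```

Put another way, the array wrote about 1.64 times as fast with the disk cache enabled in this single run.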

The two 4K write tests saw decent boosts, and the sequential read number rose ever so slightly, though I'm not sure that can be attributed directly to enabling the cache. The 512K write test went up slightly, too. All in all, flipping a single switch improved my numbers across the board.

Is Dell crippling these cards by turning off the native HDD cache? Most definitely, and the numbers above prove it.

How Can You Easily Rectify This Problem?

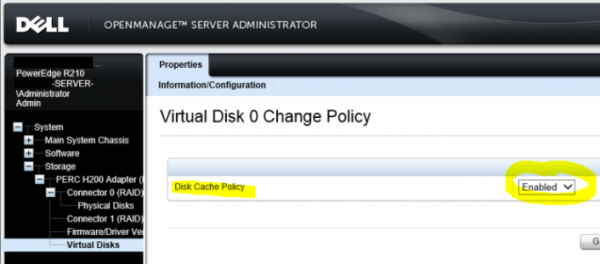

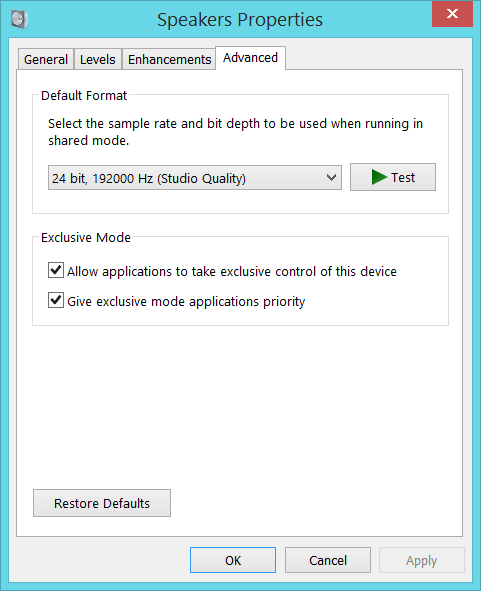

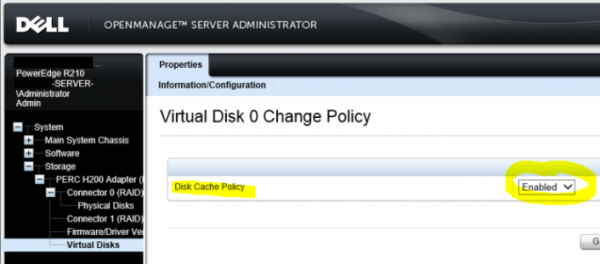

While I used the LSI MegaRAID Manager utility (from the maker of the actual card that Dell rebrands), you can use the more kosher Dell OpenManage Server Administrator (OMSA) utility, which is tailor-made for overseeing Dell servers. It's available as a free download for any supported Dell PowerEdge unit (nearly every one in existence), and it only takes a few minutes to install.

Once in the utility, navigate to the virtual disk(s) in question, where you will most likely find the Disk Cache Policy set to "Disabled" (on an H200, almost certainly). Flip the selection to Enabled, hit save, and reboot your box to make sure the change takes effect.
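If you would rather script the change than click through the web interface, Dell OpenManage Server Administrator also ships a command-line tool, `omconfig`. The sketch below only builds the command and, by default, prints it instead of running it. It assumes OMSA is installed and on the PATH, that your OMSA version accepts the `omconfig storage vdisk action=changepolicy ... diskcachepolicy=enabled` form, and that the controller and virtual disk IDs (0 and 0) are hypothetical placeholders. Verify the syntax against the OMSA command-line guide for your version (`omreport storage controller` and `omreport storage vdisk controller=0` should list your IDs), since not every controller exposes every policy.

```python
import shlex
import subprocess
from typing import List, Optional


def build_disk_cache_command(controller: int, vdisk: int, enable: bool = True) -> List[str]:
    """Build an omconfig command that changes a virtual disk's disk cache policy.

    Assumption: your OMSA version accepts
    omconfig storage vdisk action=changepolicy controller=<id> vdisk=<id> diskcachepolicy=<enabled|disabled>
    """
    policy = "enabled" if enable else "disabled"
    return [
        "omconfig", "storage", "vdisk",
        "action=changepolicy",
        f"controller={controller}",
        f"vdisk={vdisk}",
        f"diskcachepolicy={policy}",
    ]


def run_command(cmd: List[str], dry_run: bool = True) -> Optional[subprocess.CompletedProcess]:
    """Print the command when dry_run is True; otherwise execute it and raise on a non-zero exit."""
    if dry_run:
        print(shlex.join(cmd))
        return None
    return subprocess.run(cmd, check=True, capture_output=True, text=True)


# Hypothetical IDs: controller 0, virtual disk 0. This dry run only prints the command:
# omconfig storage vdisk action=changepolicy controller=0 vdisk=0 diskcachepolicy=enabled
run_command(build_disk_cache_command(controller=0, vdisk=0))
```

Once the printed command matches your controller and virtual disk, pass `dry_run=False` to actually run it, then reboot as described above.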

Once your server comes back up, you will have the performance improvements that local cache can provide. While not as effective overall as the dedicated BBF cache on Dell's H700/H800 RAID cards, this is as good as it's going to get.

Run your own tests to see what kind of results you get. CrystalDiskMark is a great utility for measuring disk performance on any Windows system, including servers, and it makes a good baseline tool for seeing what kind of numbers your drives deliver.
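CrystalDiskMark remains the better tool, but if you want a quick before/after sanity check you can script, a rough single-pass sequential write test is easy to sketch in Python. This is an illustration only, not how CrystalDiskMark works internally: it writes a temporary file in 1 MiB blocks and calls `os.fsync` before stopping the clock, so the timing includes flushing the operating system's buffers to the drive. It reports MB/s as 10^6 bytes per second; the file size, block size, and function names are arbitrary assumptions, and a single run on a busy system will be noisy.

```python
import os
import tempfile
import time


def sequential_write_mbps(path: str, total_mib: int = 256, block_kib: int = 1024) -> float:
    """Write total_mib MiB to `path` in block_kib KiB chunks; return throughput in MB/s.

    MB/s here means 10**6 bytes per second. os.fsync() runs before the clock stops,
    so data still sitting in OS write buffers is pushed to the drive first.
    """
    block = os.urandom(block_kib * 1024)  # random data, so compression cannot inflate results
    blocks = (total_mib * 1024) // block_kib
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()             # push Python's internal buffer to the OS
        os.fsync(f.fileno())  # ask the OS to push its buffers to the device
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return blocks * len(block) / elapsed / 1e6


def measure_in_dir(directory: str, total_mib: int = 256) -> float:
    """Run one sequential write pass against a temporary file in `directory`, then delete it."""
    fd, path = tempfile.mkstemp(dir=directory, suffix=".bench")
    os.close(fd)
    try:
        return sequential_write_mbps(path, total_mib)
    finally:
        os.remove(path)
```

For example, call `measure_in_dir("D:/", total_mib=1024)` (the drive letter is hypothetical) once before and once after changing the Disk Cache Policy, keeping the file size well above the drives' onboard cache so you are not just timing the cache itself.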

Should Dell continue to cripple future generations of low end RAID cards by doing this to customers? I hope not. The safety of your server data is up to you as the customer in the end, and if you choose not to use a proper battery backup power source like an APC or Tripp Lite unit, then so be it.

But having to dig through forums to find out why my H200 card was so abnormally slow, through no misconfiguration of my own, is downright troubling.

Here's hoping someone from Dell is reading the legions of forum posts outlining this dire situation and changes its stance on newer RAID cards for future servers. Trimming features off your RAID cards is acceptable practice, but forcibly disabling the caching that disk drives otherwise offer by default is not.

Long story short? Stick to more capable Dell RAID cards, like the PERC H700 series units, when possible. You avoid this workaround altogether, and you get a higher quality, more capable RAID card anyway.

Photo Credit: nito/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with over eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on growing hot technologies such as Office 365, Google Apps, cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.