Watching my RSS streams in Feedly on a daily basis has had my head spinning lately. It's not the usual flood of tech news getting to me. It's all the stories that have been hitting recently about the so-called Internet of Things. For a topic with so little to show for itself in the real world thus far, it sure garners a disproportionate amount of attention in the tech media. So what gives?

Perhaps someone can fill me in on what this Internet of Things is supposed to look like. Is it a different internet? Is it a network designated solely for these newfound "things" that need to talk to every other "thing" out there? Or is it just more of what we already see in the market: giving every possible device an IP address to sit on? I'm just as perplexed by this bogus concept as Computerworld's Mike Elgan, who calls it a wild idea that is "doomed from the start" for numerous reasons.

Cisco has been one of the biggest commercial entities pushing its take on this blurry vision, in the form of an "Internet of Everything" (IoE). I've seen its print and TV ads come and go, and I'm left more stumped with each one.

What the heck is it pushing? Have a look and judge for yourself if such commercials mean much of anything:

Don't get me wrong; Cisco has done a lot of great things for the modern world. It is arguably one of the fathers of the internet backbone as we know it. And it makes some pretty decent equipment that I happen to use for customer projects. But not for one second am I going to believe in this second coming of a machine-to-machine (M2M) communication revolution.

Cisco has even gone so far as to release a handy "Connections Counter," a running tally of all the devices that make up the firm's notion of an IoE. That counter alone makes me feel behind the times, as the only devices in my home with an IP address are my smartphone, my computers, and a sprinkling of home theater gear. Cisco claims we'll have 50 billion devices on the IoE by 2020. Time will tell, but I'm skeptical.

The whole concept is fraught with lofty assumptions about compatibility, industry standards that vendors actually agree on, and the market penetration of these new-age devices. Not to mention that we've seen how such an unchecked drive toward machine autonomy can end up, at least metaphorically. The SkyNet storyline from the Terminator movies is but one example of what we should ultimately be afraid of. And not for the reasons you may be pondering.

SkyNet: A Metaphor for What the Internet of Things May Become

If you haven't seen the Terminator series (my favorite being T2, no less), you may not catch the parallels I'm drawing between the IoT and the fictional SkyNet network. For the uninitiated, here's a brief synopsis; you can read about it in depth on Wikipedia.

SkyNet is portrayed as an autonomous US military command and control computer network that uses advanced artificial intelligence to make its own decisions. The program was intended to bring rational, calculated decision making to the way the US used its military assets, but as moviegoers quickly found out, SkyNet grows too dangerous for its own good. When its human operators try to shut it down, it launches nukes at Russia, knowing the Russian counterattack will wipe out its enemies back home. In all, 3 billion people die in this "machines gone wild" nightmare scenario, and SkyNet spends the rest of its existence in the Terminator series battling humanity to the very end -- all for the sake of self-preservation.

Text alone doesn't do the storyline justice, and I highly recommend you check out at least the first two movies in the series if you haven't already. It's a shocking, chilling scenario that makes for great movie fare, but I'm holding it up as a direct allusion to the kind of doomsday the current-day Internet of Things is shaping up to resemble. What the heck am I getting at? Don't worry; it has nothing to do with raving mad cyborgs chasing John Connor.

The notion of machines run by artificial intelligence, independent of human control, has been around since Terminator hit movie theaters in 1984. Exactly thirty years later, we're toying with the same concept once again in an alternate dimension: toasters that talk to ovens, which communicate with fridges, all of which connect back to the internet. What could possibly go wrong? (Image Source: Terminator 2 - movie)

Movie fiction shouldn't be what scares you about the potential downsides of an Internet of Things; there are plenty of real-world worries. Take, for example, the case of Philips Hue lights that were hacked, as reported by Ars Technica back in August. This otherwise neat technology, which lets people control their lighting systems from afar (even over the net), was exploited to the point where entire lighting arrays in places such as hospitals or other critical 24/7 operations could be compromised. You can read the full article on how it happened and what Philips is doing to fix it, but this is just the tip of the iceberg for what an Internet of Things doomsday could look like.

Hacking someone's home lights is one thing. But Proofpoint Inc. also released news just about a week ago claiming to have spotted what I consider my biggest fear for the IoT: devices that are taken over by command and control botnets for the purpose of spam, DDoS attacks, and much more.

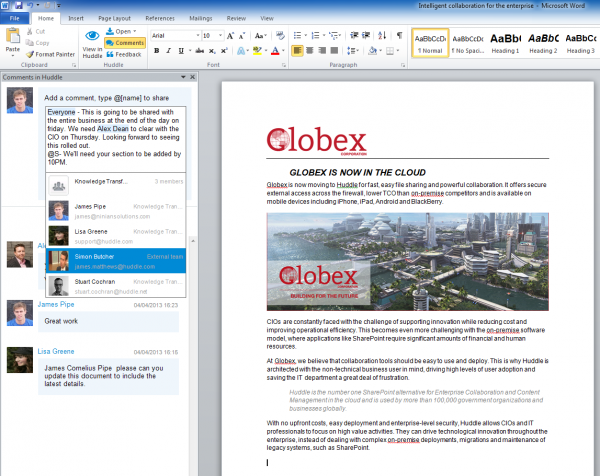

A snippet of its findings just to frame the discussion here:

A more detailed examination suggested that while the majority of mail was initiated by "expected" IoT devices such as compromised home-networking devices (routers, NAS), there was a significant percentage of attack mail coming from other non-traditional sources, such as connected multi-media centers, televisions and at least one refrigerator.

And if you're thinking it must be referring to just a bunch of "old Windows devices," as many like to presume, this is far from the case. The press release describes devices from all corners of the computing and media electronics industry. ARM devices. Embedded Realtek-driven media players. NAS boxes. Game consoles. And even set-top boxes (yes, your supposedly secure DVR).

Devices that have never seen an ounce of Windows code are all suffering the same dreadful fate at the hands of attackers. In this case, they're being used for the traditional purpose of peddling spam onto the internet. But keep in mind this Internet of Things is just starting to take shape. If your fridge were responsible for sending out millions of spam messages in a year, how the heck would you ever know?

Setting aside the fact that there is no antivirus software for fridges yet (as silly as that sounds), what kind of protections are manufacturers going to put into place to ensure this doesn't become the norm? Windows XP users spent a decade fighting back the legions of infections and botnets that once made up the Windows malware scene. Manufacturers are going to make the same flawed pitch that Apple has made to its devoted following: our products don't get viruses.

Fast forward ten or fifteen years, to a day when this Internet of Things has begun to take shape. Your fridge has an IP address. Your home's electrical backbone is connected to the internet. Even your car jumps onto your home Wi-Fi when sitting in the driveway. If malware writers start delivering the kinds of threats computers have dealt with over the last 20+ years, what would we ideally do? Most average consumers can barely remember to renew their AV licenses -- how on earth would they be able to spot that their car is infected with malware and connected to a botnet?

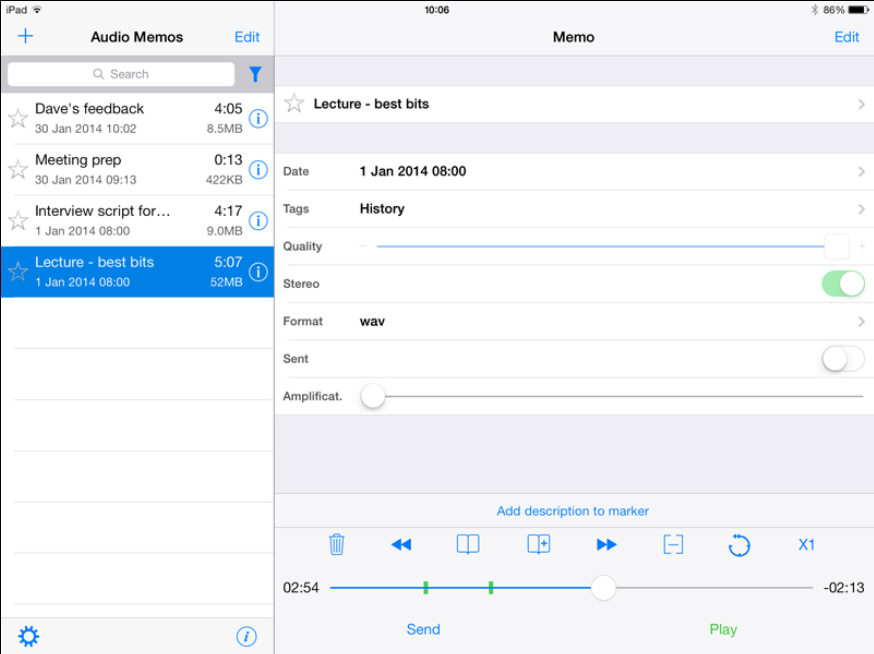

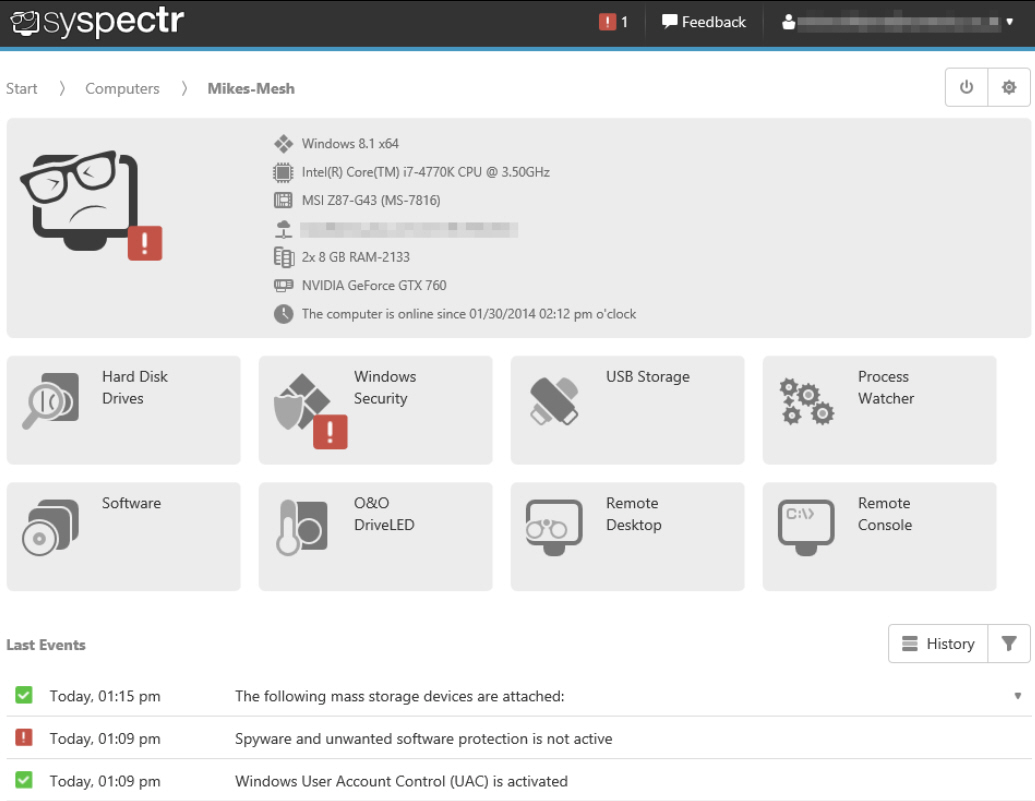

Security research firm Proofpoint is already shedding light on the reality that net-enabled fridges are joining the ranks of modern-day spam botnets. Still interested in achieving technology nirvana in the form of checking email on your fridge? Count me out. (Image Source: GUI Champs Forums)

Even scarier are the possibilities of what such an interconnected home could experience at the hands of attackers. If it's currently possible to take over someone's entire lighting array (of the Philips kind only -- for now), what if attackers truly took their cunning skills to the next level?

Imagine sophisticated thieves working with hackers who take down your home's electricity from afar. They communicate via encrypted voice chats over Lync with bandits hiding out in a large van outside your home. They also tap into the home alarm system and render it useless. If they're good, they'll take over the router that carries traffic for your VoIP home phone system and redirect all calls to their own endpoint. Ocean's Eleven style.

The rest isn't too hard to map out. You can leave it up to your own imagination: theft, kidnapping, etc. The possibilities become limitless when the bad guys have a disproportionate upper hand against the technologies you believed would keep you safe. And that's the Internet of Things I'm most afraid of: the one that opens a Pandora's box of unknowns.

Universal Remotes Have Been Around for 30 Years -- And They Still Suck

Wikipedia claims that the first universal remote came out back in 1985, thanks to Magnavox (a Philips company; yes, the same Philips that made the exploitable Hue light system above). Mike Elgan draws numerous connections between universal remotes and the messy future of the IoT in his Computerworld article, and I think it's a brilliant analogy.

If you've ever looked into the universal remote market, or tried some of the devices available, you likely know what Mike and I are alluding to. In the 30 years these remotes have been available, have they gotten any easier to configure? Has their compatibility truly matured to the level you would expect? And better yet, how usable are they in real life? You're probably laughing at the ridiculousness of universal remotes like I am while writing this, because they're anything but universal or easy.

Even if you can find a unit that works with all of your televisions and receivers, you're likely to hit a roadblock when it comes time to configure your set-top box. Or, if you're like me and have an HD projector for a television, none of the remotes will be able to talk to it (I have a BenQ SP890, if you're wondering). And even if -- a big if -- you get everything configured, try teaching your technologically challenged significant other how to use the darn thing.

If you've given up in frustration, you're not alone. The consumer electronics industry has failed abysmally at solving one of the seemingly easiest conundrums: IR compatibility in remotes. A full 30 years after the first universal remote hit the market, electronics users almost universally hate them for the reasons I mentioned. Every manufacturer would prefer you use its remote for all your devices. Besides, they think they know best. What proper consumer electronics corporation wouldn't take this stance?

Remotes aren't alone in their anger-inducing compatibility dead ends. Betamax and VHS duked it out for years in the marketplace. HD DVD and Blu-ray rehashed a similar battle royale, which ended only a few years back. Even smartphones suffer from the same fragmentation when it comes to connectors and power adapters. Apple continues to think that the world wants to keep adopting its custom connector designs, while the rest of the sane phone market trudges along with micro USB. So we're stuck as the lab rats in this giant consumerization experiment, purchasing adapters for dongles for converters just to connect simple things like phones to our computing devices.

Even HDMI can be considered a standard that should never have been allowed to take over the market. The body behind HDMI has created a "pay to play" ecosystem where vendors who incorporate the tech must pay thousands a year in "adopter agreement" fees, plus another $0.15 in royalties per device sold. I try to use DisplayPort when possible to fight the good fight here, but it's tough when HDMI is becoming further entrenched in most media electronics. Take a guess who's footing the bill for these standards taxes? You are, at the register, when you buy anything with an HDMI port on it, like a laptop, TV, receiver, cable, etc.

Most IT pros forget that if it weren't for the forces that backed DDR memory more than ten years ago, we might still be living with expensive RIMM chips in our computers to this day. Just check eBay to see how inflated the pricing is for this long-dead technology. Some of that comes down to supply and demand, but just as much stems from the "Rambus tax" that was charged to memory chip makers.

Catch my drift here? If this is what we have to contend with today, what could possibly be waiting for us in an Internet of Things scenario, as described by the likes of feel-good Cisco? The only vestiges of true standardization that have a chance of saving the IoT are the vendor-neutral underpinnings of the internet: DNS, IPv6, MAC addresses, and so on.

But the same forces that have fought the standards wars in the computer arena, whom we can thank for unneeded connectors like FireWire and Thunderbolt, to name a few, are likely to pitch their own tents in the IoT race. I wouldn't be surprised to see a competitor to Bluetooth and Wi-Fi introduced, one that gets major backing, comes with licensing fees, and fractures the bevy of devices that hit the marketplace. So we'll be at it again, fighting with non-conforming standards that require expensive converters and adapters.

Mind you, the above examples involve merely simple things like connectors, ports, and digital storage formats. Yet we're supposed to believe that ambulances are going to communicate with stoplights while transmitting patient info back to a hospital in real time? I'll believe it when I see it.

The Greener Side of the IoT

I've been quite harsh on the supposedly predestined M2M future being forced upon us. There are, however, some interesting things going on in this arena; things that give less cause for alarm.

Computerworld reported just a few days ago on the work of Zepp, a California-based company developing sensors for all walks of the sporting world. It currently has sensors in the works for baseball, golf, and tennis, and a fresh infusion of cash will undoubtedly allow it to expand its horizons.

As a lifelong hockey player, I would be quite curious to try some of the gear this company will hopefully create. Ideally, I could skate down the rink, take a shot, and have statistics sent back to my phone about how long I had possession of the puck, how hard my shot was, and the position of every shot I took in an entire game. The system would recognize which shots were actual goals and mark them as such in my data analysis. So, theoretically, after a game I could sit down and view a dashboard showing me where I'm having the most success on the rink and where I need improvement. This is wicked in theory, and I don't think it's too far off, judging by what Zepp has already brought into reality.

Zepp currently makes some of the sensors that make up the Internet of Things some are predicting. As a baseball player, you may be curious about the speed of impact for your last swing and the height at which you hit the ball. A golfer may be able to up his or her game by finding that their follow-through on a par-3 tee shot just isn't what they thought it was. This kind of insight was only possible in virtual reality sims -- until now. (Image Source: Engadget)

I could imagine this being taken to the next level and becoming something sports teams begin to leverage. Say, for example, a basketball team used such sensors on both players and the basketballs used during pre-game warmups. The balls could send back live data on which players have the best shot completion percentage, what parts of the court they play best in, which teammates they work best with, and so on. Coaches could then analyze the data from the 20-minute warmup period to make dynamic changes to a lineup. The tech media is raving about Big Data and its impact on business decisions. I think we can justifiably call this Little Data working to a team's advantage!
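To make the "Little Data" idea a bit more concrete, here's a minimal Python sketch of the kind of tallying such a warmup system might do. Everything in it is hypothetical: the `Shot` record, the zone labels, and the assumption that a smart ball reports one event per shot with a made/missed flag. It doesn't reflect any real vendor's data format or API; it only shows how per-player, per-zone completion percentages fall out of simple counting.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Shot:
    """One shot event as a hypothetical sensor-equipped ball might report it."""
    player: str
    zone: str   # hypothetical court-zone label, e.g. "left corner"
    made: bool


def shooting_summary(shots):
    """Return {player: {zone: (made, attempts, pct)}} from a list of Shot events."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [made, attempts]
    for shot in shots:
        tally = counts[shot.player][shot.zone]
        tally[1] += 1
        if shot.made:
            tally[0] += 1
    return {
        player: {
            zone: (made, attempts, made / attempts)
            for zone, (made, attempts) in zones.items()
        }
        for player, zones in counts.items()
    }


def hottest_shooters(shots, min_attempts=3):
    """Rank players by overall completion percentage, skipping tiny samples."""
    totals = defaultdict(lambda: [0, 0])  # [made, attempts] per player
    for shot in shots:
        totals[shot.player][0] += shot.made  # bool counts as 0 or 1
        totals[shot.player][1] += 1
    ranked = [
        (player, made / attempts)
        for player, (made, attempts) in totals.items()
        if attempts >= min_attempts
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical warmup data for two players.
warmup = [
    Shot("Player A", "left corner", True),
    Shot("Player A", "left corner", True),
    Shot("Player A", "top of key", False),
    Shot("Player B", "paint", True),
    Shot("Player B", "paint", False),
    Shot("Player B", "top of key", False),
]

for player, pct in hottest_shooters(warmup):
    print(f"{player}: {pct:.0%}")  # Player A: 67%, then Player B: 33%
```

The `min_attempts` threshold is there because a player who hits one lucky shot out of one shouldn't top the rankings; a real system would presumably need far more careful statistics than this.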

Microsoft Research has been busy on the home side of things, furthering a project it calls the Lab of Things. There's a neat intro video on what the project entails right on its home page, and it covers just what Microsoft envisions for its own Internet of Things. Through a piece of software called HomeOS, tech enthusiasts can already piece together their own home IoT ecosystems with pre-fab sensors and gizmos that can monitor light levels and temperatures, provide webcam views into rooms, and much more.

But with so much effort being spent on wooing us into the IoT, little talk has been dedicated to what failsafes manufacturers are going to implement to prevent these SkyNet-like armies of spambots. How will consumers be able to tell whether their devices have been compromised? Will firmware updates become seamless and painless, or will we have to chase firmware release files for all of our IoT gear on a monthly basis? What kind of security standards will the industry agree upon to prevent this from becoming a wild west of machinery working at the behest of criminals?

It's scary enough to hear that the US electrical grid is already the target of daily cyberattacks which are not getting any weaker. And these are complex, advanced networks pieced together with some of the best industrial, engineering, and technical minds in the world. If they're having a hard time keeping up, how are consumers going to fight back when their net-connected toasters and fridges are overrun? Not even Cisco is answering that one yet.

Mike Elgan asserted that the Internet of Things may never happen. I have to agree, and even if it does rear its head, it's going to be an unfulfilled fantasy for years (er, decades) to come due to all the rightful concerns about compatibility, security, etc.

If the manufacturers can't answer basic questions of how we're going to securely envelop ourselves in a sea of M2M-enabled devices, I'll opt to sit back and see how this unfolds. As much as I love the Terminator series, the last thing I need is a personal SkyNet arming itself in my home. Metaphorically, of course.

Photo Credit: Oliver Sved/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with more than eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on hot, growing technologies such as Office 365, Google Apps, and cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council, which shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.


The Windows XP death clock is ticking away. While Microsoft has extended support for malware protection, do not be fooled -- XP will be officially unsupported on April 8. If Microsoft has its druthers, these XP users will upgrade to Windows 8 and maybe even buy a new computer.

The Windows XP death clock is ticking away. While Microsoft has extended support for malware protection, do not be fooled -- XP will be officially unsupported on April 8. If Microsoft has its druthers, these XP users will upgrade to Windows 8 and maybe even buy a new computer.

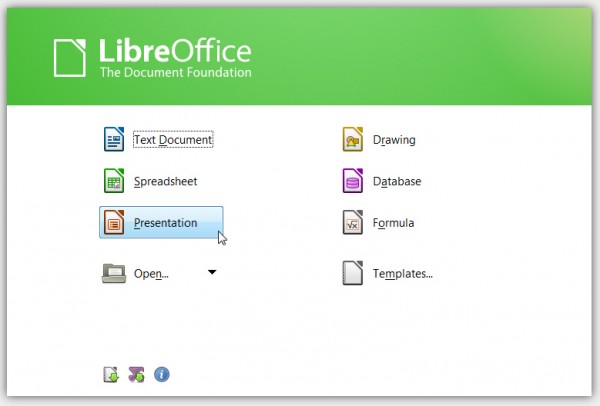

The Document Foundation has announced

The Document Foundation has announced

I have since decided to give Windows 8.1 another chance on my MacBook Air as I wanted to see whether it would be better for work. I installed it, configured it, installed a couple of big apps (like Office 2013) and was pleased with it for a while. I switched back to OS X though because live tiles did not work for me, the touchpad wasn't as gesture-friendly as it is in Mavericks and, surprisingly, I was actually better served by OS X for all my other needs.

I have since decided to give Windows 8.1 another chance on my MacBook Air as I wanted to see whether it would be better for work. I installed it, configured it, installed a couple of big apps (like Office 2013) and was pleased with it for a while. I switched back to OS X though because live tiles did not work for me, the touchpad wasn't as gesture-friendly as it is in Mavericks and, surprisingly, I was actually better served by OS X for all my other needs.

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company

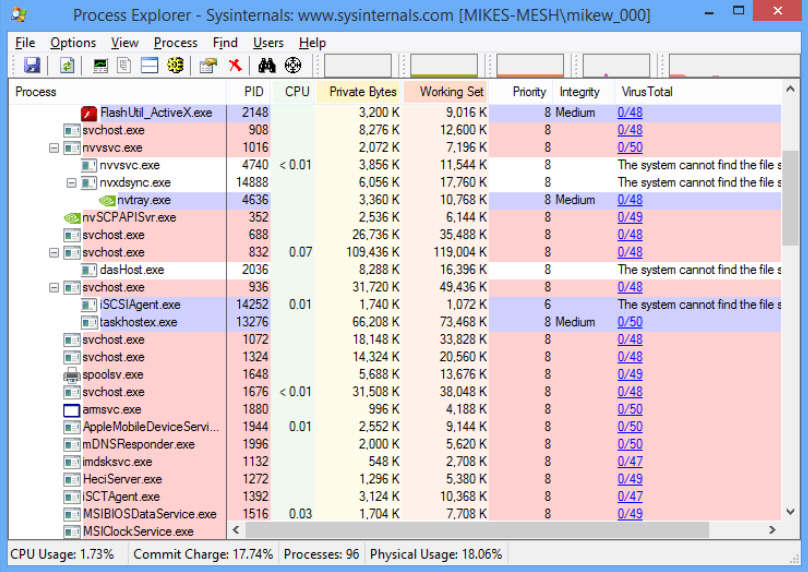

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company  Windows Sysinternals has released

Windows Sysinternals has released

There has been a lot of talk lately about Bitcoin, a digital currency that aims to provide the security of cash and is more convenient than a credit card. Just under a year ago, the "cryptocurrency" -- so named for its reliance on cryptography in order to operate -- was traded somewhere between

There has been a lot of talk lately about Bitcoin, a digital currency that aims to provide the security of cash and is more convenient than a credit card. Just under a year ago, the "cryptocurrency" -- so named for its reliance on cryptography in order to operate -- was traded somewhere between  Nick Vahalik is Technical sales engineer at

Nick Vahalik is Technical sales engineer at