Ninth in a series. Robert X. Cringely's brilliant look at the rise of the personal computing industry continues, explaining why PCs aren't mini-mainframes and share little direct lineage with them.

Published in 1991, Accidental Empires is an excellent lens for viewing not just the past but also the future of computing.

ACCIDENTAL EMPIRES — CHAPTER THREE

WHY THEY DON’T CALL IT COMPUTER VALLEY

Reminders of just how long I’ve been around this youth-driven business keep hitting me in the face. Not long ago I was poking around a store called the Weird Stuff Warehouse, a sort of Silicon Valley thrift shop where you can buy used computers and other neat junk. It’s right across the street from Fry’s Electronics, the legendary computer store that fulfills every need of its techie customers by offering rows of junk food, soft drinks, girlie magazines, and Maalox, in addition to an enormous selection of new computers and software. You can’t miss Fry’s; the building is painted to look like a block-long computer chip. The front doors are labeled Enter and Escape, just like keys on a computer keyboard.

Weird Stuff, on the other side of the street, isn’t painted to look like anything in particular. It’s just a big storefront filled with tables and bins holding the technological history of Silicon Valley. Men poke through the ever-changing inventory of junk while women wait near the door, rolling their eyes and telling each other stories about what stupid chunk of hardware was dragged home the week before.

Next to me, a gray-haired member of the short-sleeved sport shirt and Hush Puppies school of 1960s computer engineering was struggling to drag an old printer out from under a table so he could show his 8-year-old grandson the connector he’d designed a lifetime ago. Imagine having as your contribution to history the fact that pin 11 is connected to a red wire, pin 18 to a blue wire, and pin 24 to a black wire.

On my own search for connectedness with the universe, I came across a shelf of Apple III computers for sale for $100 each. Back in 1979, when the Apple III was still six months away from being introduced as a $3,000 office computer, I remember sitting in a movie theater in Palo Alto with one of the Apple III designers, pumping him for information about it.

There were only 90,000 Apple III computers ever made, which sounds like a lot but isn’t. The Apple III had many problems, including the fact that the automated machinery that inserted dozens of computer chips on the main circuit board didn’t push them into their sockets firmly enough. Apple’s answer was to tell 90,000 customers to pick up their Apple III carefully, hold it twelve to eighteen inches above a level surface, and then drop it, hoping that the resulting crash would reseat all the chips.

Back at the movies, long before the Apple III’s problems, or even its potential, were known publicly, I was just trying to get my friend to give me a basic description of the computer and its software. The film was Barbarella, and all I can remember now about the movie or what was said about the computer is this image of Jane Fonda floating across the screen in simulated weightlessness, wearing a costume with a clear plastic midriff. But then the rest of the world doesn’t remember the Apple III at all.

It’s this relentless throwing away of old technology, like the nearly forgotten Apple III, that characterizes the personal computer business and differentiates it from the business of building big computers, called mainframes, and minicomputers. Mainframe technology lasts typically twenty years; PC technology dies and is reborn every eighteen months.

There were computers in the world long before we called any of them “personal”. In fact, the computers that touched our lives before the mid-1970s were as impersonal as hell. They sat in big air-conditioned rooms at insurance companies, phone companies, and the IRS, and their main function was to screw up our lives by getting us confused with some other guy named Cringely, who was a deadbeat, had a criminal record, and didn’t much like to pay parking tickets. Computers were instruments of government and big business, and except for the punched cards that came in the mail with the gas bill, which we were supposed to return obediently with the money but without any folds, spindling, or mutilation, they had no physical presence in our lives.

How did we get from big computers that lived in the basement of office buildings to the little computers that live on our desks today? We didn’t. Personal computers have almost nothing to do with big computers. They never have.

A personal computer is an electronic gizmo that is built in a factory and then sold by a dealer to an individual or a business. If everything goes as planned, the customer will be happy with the purchase, and the company that makes the personal computer, say Apple or Compaq, won’t hear from that customer again until he or she buys another computer. Contrast that with the mainframe computer business, where big computers are built in a factory, sold directly to a business or government, installed by the computer maker, serviced by the computer maker (for a monthly fee), financed by the computer maker, and often running software written by the computer maker (and licensed, not sold, for another monthly fee). The big computer company makes as much money from servicing, financing, and programming the computer as it does from selling it. It not only wants to continue to know the customer, it wants to be in the customer’s dreams.

The only common element in these two scenarios is the factory. Everything else is different. The model for selling personal computers is based on the idea that there are millions of little customers out there; the model for selling big computers has always been based on the idea that there are only a few large customers.

When IBM engineers designed the System 650 mainframe in the early 1950s, their expectation was to build fifty in all, and the cost structure that was built in from the start allowed the company to make a profit on only fifty machines. Of course, when computers became an important part of corporate life, IBM found itself selling far more than fifty -- 1,500, in fact -- with distinct advantages of scale that brought gross profit margins up to the 60 to 70 percent range, a range that computer companies eventually came to expect. So why bother with personal computers?

Big computers and little computers are completely different beasts created by radically different groups of people. It’s logical, I know, to assume that the personal computer came from shrinking a mainframe, but that’s not the way it happened. The PC business actually grew up from the semiconductor industry. Instead of being a little mainframe, the PC is, in fact, more like an incredibly big chip. Remember, they don’t call it Computer Valley. They call it Silicon Valley, and it’s a place that was invented one afternoon in 1957 when Bob Noyce and seven other engineers quit en masse from Shockley Semiconductor.

William Shockley was a local boy and amateur magician who had gone on to invent the transistor at Bell Labs in the late 1940s and by the mid-1950s was on his own building transistors in what had been apricot drying sheds in Mountain View, California.

Shockley was a good scientist but a bad manager. He posted a list of salaries on the bulletin board, pissing off those who were being paid less for the same work. When the work wasn’t going well, he blamed sabotage and demanded lie detector tests. That did it. Just weeks after they’d toasted Shockley’s winning the Nobel Prize in physics by drinking champagne over breakfast at Dinah’s Shack, a red clapboard restaurant on El Camino Real, the “Traitorous Eight”, as Dr. S. came to call them, hit the road.

For Shockley, it was pretty much downhill from there; today he’s remembered more for his theories of racial superiority and for starting a sperm bank for geniuses in the 1970s than for the breakthrough semiconductor research he conducted in the 1940s and 1950s. (Of course, with several fluid ounces of Shockley semen still sitting on ice, we may not have heard the last of the doctor yet.)

Noyce and the others started Fairchild Semiconductor, the archetype for every Silicon Valley start-up that has followed. They got the money to start Fairchild from a young investment banker named Arthur Rock, who found venture capital for the firm. This is the pattern that has been followed ever since as groups of technical types split from their old companies, pick up venture capital to support their new idea, and move on to the next start-up. More than fifty new semiconductor companies eventually split off in this way from Fairchild alone.

At the heart of every start-up is an argument. A splinter group inside a successful company wants to abandon the current product line and bet the company on some radical new technology. The boss, usually the guy who invented the current technology, thinks this idea is crazy and says so, wishing the splinter group well on their new adventure. If he’s smart, the old boss even helps his employees to leave by making a minority investment in their new company, just in case they are among the 5 percent of start-ups that are successful.

The appeal of the start-up has always been that it’s a small operation, usually led by the smartest guy in the room but with the assistance of all players. The goals of the company are those of its people, who are all very technically oriented. The character of the company matches that of its founders, who were inevitably engineers—regular guys. Noyce was just a preacher’s kid from Iowa, and his social sensibilities reflected that background.

There was no social hierarchy at Fairchild -- no reserved parking spaces or executive dining rooms -- and that remained true even later when the company employed thousands of workers and Noyce was long gone. There was no dress code. There were hardly any doors; Noyce had an office cubicle, built from shoulder-high partitions, just like everybody else. Thirty years later, he still had only a cubicle, along with limitless wealth.

They use cubicles, too, at Hewlett-Packard, which at one point in the late 1970s had more than 50,000 employees, but only three private offices. One office belonged to Bill Hewlett, one to David Packard, and the third to a guy named Paul Ely, who annoyed so many coworkers with his bellowing on the telephone that the company finally extended his cubicle walls clear to the ceiling. It looked like a freestanding elevator shaft in the middle of a vast open office.

The Valley is filled with stories of Bob Noyce as an Everyman with deep pockets. There was the time he stood in a long line at his branch bank and then asked the teller for a cashier’s check for $1.3 million from his personal savings, confiding gleefully that he was going to buy a Learjet that afternoon. Then, after his divorce and remarriage, Noyce tried to join the snobbish Los Altos Country Club, only to be rejected because the club did not approve of his new wife, so he wrote another check and simply duplicated the country club facilities on his own property, within sight of the Los Altos clubhouse. “To hell with them,” he said.

As a leader, Noyce was half high school science teacher and half athletic team captain. Young engineers were encouraged to speak their minds, and they were given authority to buy whatever they needed to pursue their research. No idea was too crazy to be at least considered, because Noyce realized that great discoveries lay in crazy ideas and that rejecting out of hand the ideas of young engineers would just hasten that inevitable day when they would take off for their own start-up.

While Noyce’s ideas about technical management sound all too enlightened to be part of anything called big business, they worked well at Fairchild and then at Noyce’s next creation, Intel. Intel was started, in fact, because Noyce couldn’t get Fairchild’s eastern owners to accept the idea that stock options should be a part of compensation for all employees, not just for management. He wanted to tie everyone, from janitors to bosses, into the overall success of the company, and spreading the wealth around seemed the way to go.

This management style still sets the standard for every computer, software, and semiconductor company in the Valley today, where office doors are a rarity and secretaries hold shares in their company’s stock. Some companies follow the model well, and some do it poorly, but every CEO still wants to think that the place is being run the way Bob Noyce would have run it.

The semiconductor business is different from the business of building big computers. It costs a lot to develop a new semiconductor part but not very much to manufacture it once the design is proved. This makes semiconductors a volume business, where the most profitable product lines are those manufactured in the greatest volume rather than those that can be sold in smaller quantities with higher profit margins. Volume is everything.

To build volume, Noyce cut all Fairchild components to a uniform price of one dollar, which was in some cases not much more than the cost of manufacturing them. Some of Noyce’s partners thought he was crazy, but volume grew quickly, followed by profits, as Fairchild expanded production again and again to meet demand, continually cutting its cost of goods at the same time. The concept of continually dropping electronic component prices was born at Fairchild. The cost per transistor dropped by a factor of 10,000 over the next thirty years.

To avoid building a factory that was 10,000 times as big, Noyce came up with a way to give customers more for their money while keeping the product price point at about the same level as before. While the cost of semiconductors was ever falling, the cost of electronic subassemblies continued to increase with the inevitably rising price of labor. Noyce figured that even this trend could be defeated if several components could be built together on a single piece of silicon, eliminating much of the labor from electronic assembly. It was 1959, and Noyce called his idea an integrated circuit. “I was lazy,” he said. “It just didn’t make sense to have people soldering together these individual components when they could be built as a single part.”

Jack Kilby at Texas Instruments had already built several discrete components on the same slice of germanium, including the first germanium resistors and capacitors, but Kilby’s parts were connected together on the chip by tiny gold wires that had to be installed by hand. TI’s integrated circuit could not be manufactured in volume.

The twist that Noyce added was to deposit a layer of insulating silicon oxide on the top surface of the chip -- this was called the “planar process” that had been invented earlier at Fairchild -- and then use a photographic process to print thin metal lines on top of the oxide, connecting the components together on the chip. These metal traces carried current in the same way that Jack Kilby’s gold wires did, but they could be printed on in a single step rather than being installed one at a time by hand.

Using their new photolithography method, Noyce and his boys put first two or three components on a single chip, then ten, then a hundred, then thousands. Today the same area of silicon that once held a single transistor can be populated with more than a million components, all too small to be seen.

Tracking the trend toward ever more complex circuits, Gordon Moore, who cofounded Intel with Noyce, came up with Moore’s Law: the number of transistors that can be built on the same size piece of silicon will double every eighteen months. Moore’s Law still holds true. Intel’s memory chips from 1968 held 1,024 bits of data; the most common memory chips today hold a thousand times as much -- 1,024,000 bits -- and cost about the same.
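The doubling rule is easy to put into numbers. The short Python sketch below is only an illustration: the function name `growth_factor` and its parameters are made up for this example, and it assumes a clean doubling every eighteen months, which real chip generations only approximate.

```python
def growth_factor(years, doubling_months=18):
    """Return how many times capacity multiplies over `years`,
    assuming one doubling every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


if __name__ == "__main__":
    # Print the multiplier for a few illustrative time spans.
    for years in (3, 15, 30):
        print(f"{years:>2} years -> about {growth_factor(years):,.0f}x")
```

Under that assumption, ten doublings multiply capacity by 1,024, which is roughly a thousandfold.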

The integrated circuit -- the IC -- also led to a trend in the other direction -- toward higher price points, made possible by ever more complex semiconductors that came to do the work of many discrete components. In 1971, Ted Hoff at Intel took this trend to its ultimate conclusion, inventing the microprocessor, a single chip that contained most of the logic elements used to make a computer. Here, for the first time, was a programmable device to which a clever engineer could add a few memory chips and a support chip or two and turn it into a real computer you could hold in your hands. There was no software for this new computer, of course -- nothing that could actually be done with it -- but the computer could be held in your hands or even sold over the counter, and that fact alone was enough to force a paradigm shift on Silicon Valley.

It was with the invention of the microprocessor that the rest of the world finally disappointed Silicon Valley. Until that point, the kids at Fairchild, Intel, and the hundred other chipmakers that now occupied the southern end of the San Francisco peninsula had been farmers, growing chips that were like wheat from which the military electronics contractors and the computer companies could bake their rolls, bagels, and loaves of bread -- their computers and weapon control systems. But with their invention of the microprocessor, the Valley’s growers were suddenly harvesting something that looked almost edible by itself. It was as though they had been supplying for years these expensive bakeries, only to undercut them all by inventing the Twinkie.

But the computer makers didn’t want Intel’s Twinkies. Microprocessors were the most expensive semiconductor devices ever made, but they were still too cheap to be used by the IBMs, the Digital Equipment Corporations, and the Control Data Corporations. These companies had made fortunes by convincing their customers that computers were complex, incredibly expensive devices built out of discrete components; building computers around microprocessors would destroy this carefully crafted concept. Microprocessor-based computers would be too cheap to build and would have to sell for too little money. Worse, their lower part counts would increase reliability, hurting the service income that was an important part of every computer company’s bottom line in those days.

And the big computer companies just didn’t have the vision needed to invent the personal computer. Here’s a scene that happened in the early 1960s at IBM headquarters in Armonk, New York. IBM chairman Tom Watson, Jr., and president Al Williams were being briefed on the concept of computing with video display terminals and time-sharing, rather than with batches of punch cards. They didn’t understand the idea. These were intelligent men, but they had a firmly fixed concept of what computing was supposed to be, and it didn’t include video display terminals. The briefing started over a second time, and finally a light bulb went off in Al Williams’s head. “So what you are talking about is data processing but not in the same room!” he exclaimed.

IBM played for a short time with a concept it called teleprocessing, which put a simple computer terminal on an executive’s desk, connected by telephone line to a mainframe computer to look into the bowels of the company and know instantly how many widgets were being produced in the Muncie plant. That was the idea, but what IBM discovered from this mid-1960s exercise was that American business executives didn’t know how to type and didn’t want to learn. They had secretaries to type for them. No data were gathered on what middle managers would do with such a terminal because it wasn’t aimed at them. Nobody even guessed that there would be millions of M.B.A.s hitting the streets over the following twenty years, armed with the ability to type and with the quantitative skills to use such a computing tool and to do some real damage with it. But that was yet to come, so exit teleprocessing, because IBM marketers chose to believe that this test indicated that American business executives would never be interested.

In order to invent a particular type of computer, you have to want first to use it, and the leaders of America’s computer companies did not want a computer on their desks. Watson and Williams sold computers but they didn’t use them. Williams’s specialty was finance; it was through his efforts that IBM had turned computer leasing into a goldmine. Watson was the son of God -- Tom Watson Sr. -- and had been bred to lead the blue-suited men of IBM, not to design or use computers. Watson and Williams didn’t have computer terminals at their desks. They didn’t even work for a company that believed in terminals. Their concept was of data processing, which at IBM meant piles of paper cards punched with hundreds of rectangular, not round, holes. Round holes belonged to Univac.

The computer companies for the most part rejected the microprocessor, calling it too simple to perform their complex mainframe voodoo. It was an error on their part, and not lost on the next group of semiconductor engineers who were getting ready to explode from their current companies into a whole new generation of start-ups. This time they built more than just chips and ICs; they built entire computers, still following the rules for success in the semiconductor business: continual product development; a new family of products every year or two; ever increasing functionality; ever decreasing price for the same level of function; standardization; and volume, volume, volume.

It takes society thirty years, more or less, to absorb a new information technology into daily life. It took about that long to turn movable type into books in the fifteenth century. Telephones were invented in the 1870s but did not change our lives until the 1900s. Motion pictures were born in the 1890s but became an important industry in the 1920s. Television, invented in the mid-1920s, took until the mid-1950s to bind us to our sofas.

We can date the birth of the personal computer somewhere between the invention of the microprocessor in 1971 and the introduction of the Altair hobbyist computer in 1975. Either date puts us today about halfway down the road to personal computers’ being a part of most people’s everyday lives, which should be consoling to those who can’t understand what all the hullabaloo is about PCs. Don’t worry; you’ll understand it in a few years, by which time they’ll no longer be called PCs.

By the time that understanding is reached, and personal computers have wormed into all our lives to an extent far greater than they are today, the whole concept of personal computing will probably have changed. That’s the way it is with information technologies. It takes us quite a while to decide what to do with them.

Radio was invented with the original idea that it would replace telephones and give us wireless communication. That implies two-way communication, yet how many of us own radio transmitters? In fact, the popularization of radio came as a broadcast medium, with powerful transmitters sending the same message -- entertainment -- to thousands or millions of inexpensive radio receivers. Television was the same way, envisioned at first as a two-way visual communication medium. Early phonographs could record as well as play and were supposed to make recordings that would be sent through the mail, replacing written letters. The magnetic tape cassette was invented by Philips for dictation machines, but we use it to hear music on Sony Walkmans. Telephones went the other direction, since Alexander Graham Bell first envisioned his invention being used to pipe music to remote groups of people.

The point is that all these technologies found their greatest success being used in ways other than were originally expected. That’s what will happen with personal computers too. Fifteen years from now, we won’t be able to function without some sort of machine with a microprocessor and memory inside. Though we probably won’t call it a personal computer, that’s what it will be.

It takes new ideas a long time to catch on -- time that is mainly devoted to evolving the idea into something useful. This fact alone dumps most of the responsibility for early technical innovation in the laps of amateurs, who can afford to take the time. Only those who aren’t trying to make money can afford to advance a technology that doesn’t pay.

This explains why the personal computer was invented by hobbyists and supported by semiconductor companies, eager to find markets for their microprocessors, by disaffected mainframe programmers, who longed to leave their corporate/mainframe world and get closer to the machine they loved, and by a new class of counterculture entrepreneurs, who were looking for a way to enter the business world after years of fighting against it.

The microcomputer pioneers were driven primarily to create machines and programs for their own use or so they could demonstrate them to their friends. Since there wasn’t a personal computer business as such, they had little expectation that their programming and design efforts would lead to making a lot of money. With a single strategic exception -- Bill Gates of Microsoft -- the idea of making money became popular only later.

These folks were pursuing adventure, not business. They were the computer equivalents of the barnstorming pilots who flew around America during the 1920s, putting on air shows and selling rides. Like the barnstormers had, the microcomputer pioneers finally discovered a way to live as they liked. Both the barnstormers and microcomputer enthusiasts were competitive and were always looking for something against which they could match themselves. They wanted independence and total control, and through the mastery of their respective machines, they found it.

Barnstorming was made possible by a supply of cheap surplus aircraft after World War I. Microcomputers were made possible by the invention of solid state memory and the microprocessor. Neither barnstorming nor microcomputing would have happened without previous art. The barnstormers needed a war to train them and to leave behind a supply of aircraft, while microcomputers would not have appeared without mainframe computers to create a class of computer professionals and programming languages.

Like early pilots and motorists, the first personal computer drivers actually enjoyed the hazards of their primitive computing environments. Just getting from one place to another in an early automobile was a challenge, and so was getting a program to run on the first microcomputers. Breakdowns were frequent, even welcome, since they gave the enthusiast something to brag about to friends. The idea of doing real work with a microcomputer wasn’t even considered.

Planes that were easy to fly, cars that were easy to drive, computers that were easy to program and use weren’t nearly as interesting as those that were cantankerous. The test of the pioneer was how well he did despite his technology. In the computing arena, this meant that the best people were those who could most completely adapt to the idiosyncrasies of their computers. This explains the rise of arcane computer jargon and the disdain with which “real programmers” still often view computers and software that are easy to use. They interpret “ease of use” as “lack of challenge”. The truth is that easy-to-use computers and programs take much more skill to produce than did the hairy-chested, primitive products of the mid-1970s.

Since there really wasn’t much that could be done with microcomputers back then, the great challenge was found in overcoming the adversity involved in doing anything. Those who were able to get their computers and programs running at all went on to become the first developers of applications.

With few exceptions, early microcomputer software came from the need of some user to have software that did not yet exist. He needed it, so he invented it. And son of a gun, bragging about the program at his local computing club often dragged from the membership others who needed that software, too, wanted to buy it, and an industry was born.

Reprinted with permission

Yesterday Apple rolled out iOS 6.1.2 for compatible iPads, iPhones and iPod touch devices, touting the fix of an Exchange calendar bug that might boost network activity and decrease battery life. And, as customary with a new iteration of iOS 6, there's also a new version of the popular evasi0n jailbreak tool. Evad3rs, the team responsible for the first iOS 6 jailbreak tool, released evasi0n 1.4 shortly after iOS 6.1.2 rolled out.

Yesterday Apple rolled out iOS 6.1.2 for compatible iPads, iPhones and iPod touch devices, touting the fix of an Exchange calendar bug that might boost network activity and decrease battery life. And, as customary with a new iteration of iOS 6, there's also a new version of the popular evasi0n jailbreak tool. Evad3rs, the team responsible for the first iOS 6 jailbreak tool, released evasi0n 1.4 shortly after iOS 6.1.2 rolled out.

Select developers already have access to Google’s futuristic glasses, but now the search giant has launched a competition giving ordinary American citizens the chance to buy a pair before they’re launched, and become a "Glass Explorer" (as Google terms those "bold, creative individuals who want to help shape the technology").

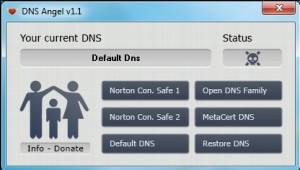

Select developers already have access to Google’s futuristic glasses, but now the search giant has launched a competition giving ordinary American citizens the chance to buy a pair before they’re launched, and become a "Glass Explorer" (as Google terms those "bold, creative individuals who want to help shape the technology"). Parental controls software is normally bulky, complex, and the kind of application which can take some considerable time to configure. There may be lots of files to install, resident components which must always be running in the background, user profiles to create, content filters to customize, and the list goes on.

Parental controls software is normally bulky, complex, and the kind of application which can take some considerable time to configure. There may be lots of files to install, resident components which must always be running in the background, user profiles to create, content filters to customize, and the list goes on. But if you do have any problems then clicking "Restore DNS" will restore your original DNS settings, while choosing "Default DNS" tells Windows to obtain your settings automatically (they might be assigned by your router, say).

But if you do have any problems then clicking "Restore DNS" will restore your original DNS settings, while choosing "Default DNS" tells Windows to obtain your settings automatically (they might be assigned by your router, say). I have to be completely honest -- I am not a fan of the default Android keyboard. For people like me who write in languages other than English on a day-to-day basis, it misses the mark entirely, and does not adapt to my writing style either. Ever since I bought my Galaxy Nexus only one Android keyboard has lived up to my expectations -- SwiftKey. And now there's a new version, and it's even better than ever.

I have to be completely honest -- I am not a fan of the default Android keyboard. For people like me who write in languages other than English on a day-to-day basis, it misses the mark entirely, and does not adapt to my writing style either. Ever since I bought my Galaxy Nexus only one Android keyboard has lived up to my expectations -- SwiftKey. And now there's a new version, and it's even better than ever. Apple has released

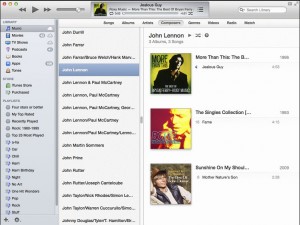

Apple has released  Apple also promises that the update improves iTunes’ responsiveness when syncing playlists containing a large number of songs. The only documented fix applied is one that resolves issues whereby some users’ libraries weren’t showing their purchases from the iTunes store.

Apple also promises that the update improves iTunes’ responsiveness when syncing playlists containing a large number of songs. The only documented fix applied is one that resolves issues whereby some users’ libraries weren’t showing their purchases from the iTunes store.

Spanish security company Panda Security Ltd has released

Spanish security company Panda Security Ltd has released  The update follows on from the release of Panda Cloud Antivirus 2.1, which added anti-exploit technology to both free and Pro versions, providing users with protection against malware that exploits so-called zero-day vulnerabilities in unpatched software such as Java, Adobe and Microsoft Office. Like traditional signature-based malware, suspicious files are sent to the cloud for analysis, but in this case their behavior is tracked to determine whether or not they pose a potential risk.

The update follows on from the release of Panda Cloud Antivirus 2.1, which added anti-exploit technology to both free and Pro versions, providing users with protection against malware that exploits so-called zero-day vulnerabilities in unpatched software such as Java, Adobe and Microsoft Office. Like traditional signature-based malware, suspicious files are sent to the cloud for analysis, but in this case their behavior is tracked to determine whether or not they pose a potential risk. UK Telecoms regulator Ofcom has

UK Telecoms regulator Ofcom has

Apple may be

Apple may be