Items by Steven Walling

BetaNews.Com

-

Useful Excel project management and tracking templates

Published: August 19, 2019, 8:07pm CEST by Anthony Stevens

Managing a project is no easy feat. The immense amount of responsibility and critical decision-making skills required to see a project through can sometimes overwhelm even the most seasoned managers. Just imagine what it would be like for first-timers. Even experts in the field need all the help they can get. And as always, it’s Excel to the rescue! Whether you’re an experienced project manager or a newbie on the lookout for tools to track and organize tasks, you’ll find Excel to be a valuable ally. To make things more convenient for you, we have compiled some of our favorite… [Continue Reading] -

The art of workplace motivation: How to keep IT staff engaged under high-growth pressure

Published: March 30, 2019, 9:06am CET by Steve Shead

IT teams, particularly in the tech and health tech worlds, face a high level of pressure in today’s environment -- whether it is supporting first-to-market launches or ensuring the highest level of security to prevent data breaches. But how do you keep an IT team engaged and motivated while ensuring they are "always on"? According to a recent Gallup poll, only 2 in 10 employees strongly agree their performance is managed in a way that motivates them to do outstanding work. That frustration can lead to serious retention issues. In fact, LinkedIn’s most recent talent turnover report indicated the tech software… [Continue Reading] -

5 sure-fire ways to kill your company's innovation

Published: December 3, 2018, 12:39pm CET by Steven L. Blue

Innovation is difficult to come by. It is a fleeting concept that eludes most companies. In fact, the odds of a new product idea reaching full commercialization are less than 4 percent. And that is the best case. But there are 5 sure-fire ways you can make certain innovation never sees the light of day at your company: 1.) Don’t make innovation a top priority and an "all-hands" job requirement. Many CEOs want innovation but only after "the real work" gets done. Here is a news flash: if you want to survive, you had better make innovation "the real work". Make… [Continue Reading] -

Dispelling 5 common myths about desktop printers in the office

Published: September 12, 2016, 8:40pm CEST by Steven Hastings

They may seem like technology from the days of old, but make no mistake -- desktop printers can still hold an important place in British businesses today. Not only do they help create efficient and flexible printing management, but they can also help keep British office workers at their most productive, rendering them potentially one of the most important products for IT managers in 2016. The value of desktop printers is often overlooked due to advancements in new printer technology services, including an array of enterprise-orientated features; however, the traditional role of the printer mustn’t be forgotten. Desktop printers intertwined within a… [Continue Reading] -

Security Analytics: What it is and what it is not

Published: May 16, 2016, 4:56pm CEST by Steven Grossman

There’s a misconception in the cyber security industry that many IT and security executives and vendors subscribe to. They equate security analytics to SIEM and user and entity behavior analytics (UEBA). They use the three terms interchangeably as if they are all one and the same and solve the same problems. As a result, companies waste time, leave gaps in their visibility and ability to execute, and ultimately fail to minimize their cyber risk. In a report released this month, analyst firm Forrester states, "Security analytics has garnered a lot of attention during the past few years. However, marketing hype and misunderstandings… [Continue Reading] -

Stick to the script: 5 ways to keep your software development outsourcing on track

Published: May 6, 2016, 8:07pm CEST by Steve Mezak

The good news -- you’ve found a software development team that you’re comfortable with and work well alongside. They fit so well into your company’s culture, you even begin to view them as you would any internal employee. While this is all well and good, it’s not to say that you won’t run into challenges along the way -- from day-to-day communication gaps to even uncertainties in tracking of overall progress. Certainly no relationship is perfect, but it falls within your job description as the project manager or product owner to ensure outsourced projects stay on track and more importantly… [Continue Reading] -

UEBA is only one piece of the cyber risk management puzzle

Published: April 14, 2016, 4:48pm CEST by Steven Grossman

Just like perimeter protection, intrusion detection and access controls, user and entity behavioral analytics ("UEBA") is one piece of the greater cyber risk management puzzle.

UEBA is a method that identifies potential insider threats by detecting people or devices exhibiting unusual behavior. It is the only way to identify potential threats from insider or compromised accounts using legitimate credentials, but trying to run down every instance of unusual behavior without greater context would be like trying to react to every attempted denial of service attack. Is the perceived attack really an attack or is it a false positive? Is it hitting a valued asset? Is that asset vulnerable to the attack? It is time for cyber risk management to be treated like other enterprise operational risks, and not a collection of fragmented activities occurring on the ground.

Analogous to fighting a war, there needs to be a top down strategic command and control that understands the adversary and directs the individual troops accordingly, across multiple fronts. There also needs to be related "situational awareness" on the ground, so those on the front lines have a complete picture and can prioritize their efforts.

When it comes to cyber risk management, it means knowing your crown jewels and knowing the specific threats to which they are vulnerable. If an information asset is not strategically valuable and does not provide a gateway to anything strategic, it should get a lot less focus than important data and systems. If an important asset has vulnerabilities that are likely to be exploited, they should be remediated before vulnerabilities that are unlikely to be hit. It seems logical, but few large enterprises are organized in a way where they have a comprehensive understanding of their assets, threats and vulnerabilities to prioritize how they apply their protection and remediation resources.

Even "at the front", UEBA is only a threat detection tool. It uncovers individuals or technologies that are exhibiting unusual behavior, but it doesn’t take into account greater context like the business context of the user’s activities, associated vulnerabilities, indicators of attack, value of the assets at risk or the probability of an attack. By itself, UEBA output lacks situational awareness, and still leaves SOC analysts with the task of figuring out if the events are truly problematic or not. If the behavior, though unusual, is justified, then it is a false positive. If the threat is to corporate information that wouldn’t impact the business if it were compromised, it’s a real threat, but only worth chasing down after higher priority threats have been mitigated.

For example, let’s say UEBA software identifies that an employee on the finance team is logging into a human resources application that he typically would not log into. UEBA is only informing the incident responder of a potential threat. The SOC will have to review the activity and determine if it is legitimate. If it is not, the SOC must check if the user has privileges to access sensitive information in the application, see if their laptop shows signs of a compromise that may indicate a compromised account, and then make what is at best a not so educated guess that will often result in inaccurate handling. Just as important, the SOC analyst will likely do all of their homework and handle the incident appropriately, but without the right context they may have wasted a lot of time chasing down a threat that is of low importance relative to others in the environment.

A true "inside-out" approach to cyber risk management begins with an understanding of the business impact of losing certain information assets. The information assets that, if compromised, would create the most damage are the information CISOs, line-of-business and application owners, SOC investigators, boards of directors and everyone else within the company should focus on protecting the most. They should determine where those assets are located, how they may be attacked, if they are vulnerable to those attacks and the probability of it all happening. Once that contextualized information is determined, everyone within the company can prioritize their efforts to minimize cyber risk.
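The prioritization described above can be sketched in a few lines of Python. This is a minimal illustration, not the author's or any vendor's scoring method: the `Alert` type, the `ASSET_VALUE` and `ASSET_EXPOSURE` tables, the 0-to-1 scales and the multiplicative formula are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    user: str
    asset: str
    anomaly: float  # 0..1, how unusual the behavior looks (e.g. UEBA output)


# Hypothetical context tables; the values are illustrative only.
ASSET_VALUE = {"hr-app": 0.9, "cafeteria-menu": 0.1}     # business impact if lost
ASSET_EXPOSURE = {"hr-app": 0.7, "cafeteria-menu": 0.7}  # likelihood it can be exploited


def risk_score(alert: Alert) -> float:
    """Weight the raw anomaly by asset value and exposure (assumed formula)."""
    value = ASSET_VALUE.get(alert.asset, 0.5)
    exposure = ASSET_EXPOSURE.get(alert.asset, 0.5)
    return alert.anomaly * value * exposure


def prioritize(alerts):
    """Return alerts ordered from highest to lowest contextual risk."""
    return sorted(alerts, key=risk_score, reverse=True)


alerts = [
    Alert("alice", "cafeteria-menu", 0.95),  # very unusual, low-value asset
    Alert("bob", "hr-app", 0.60),            # less unusual, crown-jewel asset
]
ranked = prioritize(alerts)
for a in ranked:
    print(a.user, a.asset, round(risk_score(a), 3))
```

In this sketch the less anomalous alert on the high-value asset is ranked first, which is the point of adding business context to raw behavioral output.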

Photo credit: arda savasciogullari / Shutterstock

Steven Grossman is Vice President of Program Management, Bay Dynamics. He has over 20 years of management consulting experience working on the right solutions with security and business executives. At Bay Dynamics, Steven is responsible for ensuring our clients are successful in achieving their security and risk management goals. Prior to Bay Dynamics, Steven held senior positions at top consultancies such as PWC and EMC. Steven holds a BA in Economics and Computer Science from Queens College.

-

Taking enterprise security to the next level with two-factor authentication

Published: November 30, 2015, 11:15am CET by Steve Watts

Two-factor authentication (2FA) has been about for much longer than you think. For a decade or more we have been used to being issued with a card reader (in essence a hardware token device) to use with our bank card and Personal Identification Number (PIN) when looking to complete our internet banking transactions.

2FA technology has also, over the past years, been employed by seven of the ten largest social networking sites (including Facebook, Twitter and LinkedIn) as their authentication measure of choice.

Because of this, the use of the technology has become widespread in the consumer realm, with consumers well versed in how to use 2FA and the importance of it to keep their private data safe from prying eyes. So why can’t the same be said about the largest businesses? Surely the time is right for businesses to look at the user’s authentication method of choice?

Fancy a Coffee?

The need for employees to be able to login to systems and business-critical applications remotely is increasing, due to the increasing propensity for staff to work remotely; whether that is from a home office, a hotel lobby or accompanied by a skinny decaf sugar-free vanilla syrup latte in one of the seemingly never-ending array of coffee shops.

This has become something that has kept even the calmest CIO up at night as they try to balance the requirements of remote workers with the challenge of authenticating users all over the world on a multitude of devices. Passwords are intrinsically and fatally flawed, but 2FA can provide a simple solution to keep sensitive corporate information secure -- regardless of where it is accessed.

Boardrooms must now take the technology seriously. Seemingly every week there is a widespread data breach hitting news headlines. In fact, recent research of some 692 security professionals from both global businesses and government agencies found that almost half (47 percent) have suffered a material security breach in the past two years.

Many of these could have been averted through the implementation of 2FA. The technology is all things to all people, meaning users can have the same user name and password for numerous business apps yet you won’t get into a TalkTalk type scenario as the second factor required for authentication is generally hashed, unknown and randomized for each login.

With the Ponemon Institute now suggesting that the average cost of a data breach is an eye watering £2.47 million ($3.79 million) the cost to the business of a data breach could be increasingly catastrophic and shouldn’t be ignored.

Time For 2FA

Using 2FA can help lower the number of cases of identity theft on the Internet, as well as phishing via email, because the criminal would need more than just the user’s name and password details. If the extra authentication layer is a one-time passcode, the criminal would also need something that even the user does not know in advance.
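As an illustration of a one-time passcode, here is a minimal Python sketch of the HOTP and TOTP algorithms from RFC 4226 and RFC 6238, using only the standard library. The article does not say which passcode scheme it has in mind, so the choice of TOTP is an assumption, and the `verify` helper and its parameters are hypothetical names for the example.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, password_ok: bool, submitted_code: str, at=None) -> bool:
    """Hypothetical login check: both the password and the passcode must match."""
    return password_ok and hmac.compare_digest(totp(secret, at), submitted_code)


shared_secret = b"12345678901234567890"  # test secret from the RFCs, not for real use
print(totp(shared_secret))
```

Because the code changes every 30 seconds and is derived from a secret held on the user's device, a stolen user name and password are not enough on their own to pass `verify`.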

Central to the growing popularity of 2FA is the fact that the technology provides assurance to businesses that only authorized users are able to gain access to critical information (whether it be customer records, financial data or valuable intellectual property). This helps them maintain compliance with a plethora of industry regulations such as PCI Data Security Standards, GCSx CoCo, HIPAA, or SOX.

Another core benefit of 2FA is that it is a key example of a technology that complements the prevalence of BYOD (bring your own device) rather than conflicts with it, as staff can use their existing smartphones for authentication input. This convenience of integrating the "something you have" of 2FA with something employees are already used to carrying is a benefit to users, while also avoiding the need for capital expenditure by the organization.

Also, by using devices staff are already familiar with, 2FA reduces potential training time. In summary, businesses empower employees with an easy-to-use solution that provides a consistent experience, drastically reducing login time and human error.

While 2FA empowers users, CIOs and IT decision makers also benefit from a flexible solution that can be hosted how, where and when they prefer. 2FA is built to suit any business, as it supports both on premise and cloud hosting and management, making it a strong contender for any CIO changing their security systems.

By using existing infrastructure, on premise deployment is often convenient, swift and straightforward, while cloud services are appropriately supported by the 2FA provider. This gives decision makers full control and flexibility over the solution, which can be rolled out to departments and employees at their discretion.

The Solution Is in Our Pockets

We are constantly told that users are the weakest link in corporate security. Yet with 2FA becoming as ubiquitous as taking a selfie is for the modern masses, the information security technology being seen by many as the holy grail of authentication could be the one that is literally already at the users’ fingertips.

And with the number of mobile phones now exceeding the number of people on the planet, according to GSMA Intelligence, the input mechanism is, quite literally, in all our pockets.

Steve Watts, co-founder of SecurEnvoy.

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Photo Credit: wk1003mike/Shutterstock

-

Combating insider threats: The pillars of an effective program

Published: November 27, 2015, 12:18pm CET by Steven Grossman

Insider threats can be the most dangerous threats to an organization -- and they’re difficult to detect through standard information security methods. That’s partially because the majority of employees unknowingly pose a risk while performing their regular business activities.

According to data we collected from analyzing the behaviors of more than a million insiders across organizations, in approximately 90 percent of data loss prevention incidents, the employees are legitimate users who innocently send out data for business purposes. They are exhibiting normal behavior to their peers and department, even though it might be in violation of the established business policy and a significant risk to their employer.

Adding to the challenge, IT and security teams are getting killed trying to make sense of the mountains of alerts, most of which do not identify the real problem because the insider is often not tripping a specific switch. They spot check millions of alerts, hoping to find the most pertinent threats, but more often than not end up overlooking the individual creating the actual risk. For example, a large enterprise we worked with had 35 responders spot-checking millions of data loss prevention incidents, and even with such heavy manpower, they would most often focus on the wrong employees. Their investigation and remediation efforts were not prioritized, and in turn, they couldn’t make sense of the abundance of alerts because they were looking at them one by one.

To build an effective insider threat program, companies need to start with a solid foundation. It’s critical they identify the most important assets and the insiders who have the highest level of access to those assets. Then, they should practice good cybersecurity hygiene: ensure data loss prevention and endpoint agents are in place and working; check that access controls are configured so that insiders can only access information they need; establish easily actionable security policies, such as making sure insiders use strong and unique passwords for their corporate and personal accounts; and encourage a company-wide culture that focuses on data protection through targeted security awareness training and corporate communication surrounding security.

Once the foundation is in place, monitor users’ behaviors and respond accordingly. By understanding their behavioral patterns, companies can identify when employees are acting unusually, typically an indicator that the user is up to no good -- or is being impersonated by a criminal. For example, when you go through a security checkpoint at the airport, the officers checking your identification ask you questions. They do not care about your responses; they are mainly looking at how you respond. Do you seem nervous? Are you sweating? They watch your behavior to determine if you could be a potential safety risk -- this same principle applies to insider threat programs.

When creating insider threat programs, oftentimes security teams focus on rules: they define what’s considered abnormal or risky behavior and then the team flags insiders whose actions fall into those definitions. However, this method can leave many organizations vulnerable -- chasing the latest attack, rather than preventing it. Rules are created based on something risky someone did in the past, which led to a compromise. The criminals can easily familiarize themselves with the rules and get past them. Rules do not help detect the “slow and low” breaches where insiders take out a small amount of information during a lengthy period of time so that the behavior goes undetected by security tools. And they do not combine activities across channels, such as someone accessing unusual websites and trying to exfiltrate data.
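To make the "slow and low" gap concrete, the following Python sketch compares a static per-event rule with a cumulative check over a sliding window. The thresholds, the event format `(user, day, megabytes)` and the sample data are all hypothetical; this is an illustration of the idea, not a description of any particular security tool.

```python
from collections import defaultdict

PER_EVENT_LIMIT_MB = 100  # hypothetical static rule: flag any single large transfer
WINDOW_DAYS = 30          # hypothetical look-back window
WINDOW_LIMIT_MB = 500     # hypothetical cumulative threshold inside the window


def rule_flags(events):
    """Users flagged by the per-event size rule."""
    return {user for user, day, mb in events if mb > PER_EVENT_LIMIT_MB}


def cumulative_flags(events):
    """Users whose total transfer within any WINDOW_DAYS-day window exceeds the limit."""
    by_user = defaultdict(list)
    for user, day, mb in events:
        by_user[user].append((day, mb))

    flagged = set()
    for user, rows in by_user.items():
        rows.sort()
        start, total = 0, 0.0
        for day, mb in rows:
            total += mb
            # Drop events that have fallen out of the sliding window.
            while rows[start][0] <= day - WINDOW_DAYS:
                total -= rows[start][1]
                start += 1
            if total > WINDOW_LIMIT_MB:
                flagged.add(user)
                break
    return flagged


# mallory sends 40 MB a day for 20 days; bob sends one 150 MB file.
events = [("mallory", d, 40) for d in range(1, 21)] + [("bob", 5, 150)]
print("rule:", rule_flags(events))
print("cumulative:", cumulative_flags(events))
```

The per-event rule only sees bob's single large transfer, while the windowed total picks up mallory's many small ones, which is the behavior the paragraph above says fixed rules miss.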

Enterprises need to understand what’s normal versus abnormal and then further analyze that behavior to determine if it’s malicious or non-malicious. By focusing on a subset of insiders -- those who access a company’s most critical data -- and how they normally behave, they can create a targeted list of individuals who need investigating. In a large enterprise, the list can be long, and organizations need to optimize how they respond. For those employees who are non-maliciously endangering the company, companies should provide targeted security awareness training that specifies exactly what each person did to put the company at risk and how they can minimize their risk. Most employees acting in good faith will be more careful once they understand the risk they pose to their employer. For third party vendor users, share information with the main vendor contact about who specifically is putting the organization at risk and what they are doing. Then, the vendor can handle the situation accordingly, reducing everybody’s risk.

An insider threat program should also focus on monitoring performance and communicating progress and challenges to C-level executives and the Board of Directors. Enterprises should show them what they are doing and the impact of their investment in security tools and programs, as well as explain any challenges they need to overcome. An effective insider threat program requires support from the highest level of individuals in an organization. With everyone on the same page, organizations can constantly reassess their program to truly understand their security alerts and reduce the likelihood of setting off false red flags -- ensuring they’re catching and predicting the real threats, and removing them before they do any long-term damage.

Image Credit: Andrea Danti/Shutterstock

Steven Grossman is Vice President of Program Management, Bay Dynamics. He has over 20 years of management consulting experience working on the right solutions with security and business executives. At Bay Dynamics, Steven is responsible for ensuring our clients are successful in achieving their security and risk management goals. Prior to Bay Dynamics, Steven held senior positions at top consultancies such as PWC and EMC. Steven holds a BA in Economics and Computer Science from Queens College.

-

What you need to know about cyber insurance

Published: September 7, 2015, 7:58pm CEST by Steve Watts

Cyber insurance is an important element for companies as it covers the damage and liability caused by a hack, which are usually excluded from traditional liability coverage.

Stricter data privacy notification laws, government incentives, cloud adoption and the increase in high-profile hacks and data breaches have all contributed to the significant increase in the number of companies offering and buying cyber insurance.

All companies face varying levels of risk, which warrants the need for a cyber insurance policy. You can look at two candidates for such a policy: first, companies that store data from external sources like retailers, healthcare companies and financial services firms; and secondly, any company that stores employee data.

Customer information, such as payment details and addresses, is gold to hackers. Obviously, companies that store internal and external data should seriously consider a policy as they have the most to lose. However, according to PWC’s June 2014 Managing cyber risks with insurance report, risks can often come from within -- which puts both external and internal data at huge risk.

According to the report, "a systemic cyber risk can stem from internal enterprise vulnerabilities and lack of controls, but it can also emanate from upstream infrastructure, disruptive technology, supply-chain providers, trusted partners, outsourcing contractors, and external sources such as hacktivist attacks or geopolitical actors".

In 2014, cyber-attacks and cybercrime against large companies rose 40 percent globally, according to Symantec’s annual Internet Security Threat report. Unfortunately, for many organizations across the US and UK, the complexity of finding a suitable cyber insurance policy, coupled with the underwriting process, can be daunting and considered too much hassle.

What executives are not aware of is that purchasing cyber insurance is affordable and ultimately a good exercise that provides the opportunity for them to take a closer look at their internal technology and security policies -- ensuring they are up to snuff for underwriters. This is why strong cybersecurity measures such as two-factor authentication need to be considered for all businesses.

Companies need to make sure they have the best technology in place to protect their information before taking out a cyber insurance policy. Without the right protection in place, companies will find it incredibly difficult to procure an affordable insurance policy and could potentially lose millions if they suffer a data breach. This is significant to any business when you consider that the average total cost of a breach is now $3.79 million (£2.47 million), up 23 percent since 2013, according to Ponemon’s 2015 Cost of a Data Breach study.

Selecting the right policy is not as hard, nor as expensive, as some may think. Yet, when it comes to cyber insurance, not having a strong security system in place is the equivalent of admitting that you left the front door open when your house was robbed.

The right systems need to be in place before CIOs, CFOs and risk managers can make such an important purchase. Security acts as the vaccination, while insurance is a cure should the worst happen.

Steve Watts is co-founder and sales director of SecurEnvoy

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Image Credit: FuzzBones/Shutterstock

-

How to protect your business from ransomware

Published: June 17, 2015, 5:07pm CEST by Steve Harcourt

You may have heard about ransomware attacks in the last few months. These are attacks that seize control of your machine or your data and demand a ransom to remove the virus. Back in the 1990s, these attacks were less common but demanded large quantities of money and would target large organizations, governments and critical infrastructure suppliers.

More recently, the criminals involved in ransomware attacks have realized that demanding small payments and targeting individual users can be more fruitful, and arguably is less likely to raise enough interest to warrant a law-enforcement counter-attack.

Back in September 2013, CryptoLocker emerged, propagated via infected email attachments and links. It is particularly difficult to counteract: files and folders on an infected machine are encrypted using RSA-2048 public-key encryption, whilst a countdown to deletion of this data is initiated should you decide not to pay up. Payment of a few hundred pounds or dollars, or the equivalent in bitcoins, is demanded.

At Information Security Europe 2015 this week, Steve Harcourt (Redstor’s Information Security subject matter expert) found that ransomware attacks were discussed in great technical detail. Organizations who specialize in detection and removal of these infections talked through what they call the "Cyber Security Lifecycle" and how businesses should consider Cyber Security as a core business process.

A common theme from the conference was the understanding that prevention is no longer enough to protect yourself from ransomware attacks. It is certainly important to do everything possible to reduce the chances of being attacked; however, these days it is necessary to take the attitude that "I will get attacked at some point and need to consider how I react when it happens".

The experts suggested that the number one action that all companies should take to protect themselves is to schedule regular point-in-time backups. Merely replicating data and services to another location for the purposes of resilience may just result in a quick replication of an infection.
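The difference between replication and point-in-time backups can be shown with a small Python simulation. The `Replica` and `SnapshotStore` classes and the file contents are hypothetical stand-ins for real storage systems; the sketch only illustrates why a mirror copies an infection while a dated snapshot allows rollback.

```python
import copy


class Replica:
    """Mirrors the primary on every sync and keeps no history."""

    def __init__(self):
        self.data = {}

    def sync(self, primary):
        self.data = copy.deepcopy(primary)


class SnapshotStore:
    """Keeps a separate point-in-time copy for each backup run."""

    def __init__(self):
        self.snapshots = {}

    def backup(self, day, primary):
        self.snapshots[day] = copy.deepcopy(primary)

    def restore(self, day):
        return copy.deepcopy(self.snapshots[day])


primary = {"report.docx": "quarterly numbers"}
replica, store = Replica(), SnapshotStore()

# Day 1: the data is clean; both mechanisms run.
replica.sync(primary)
store.backup(1, primary)

# Day 2: ransomware encrypts the file; both mechanisms run again.
primary["report.docx"] = "<encrypted by ransomware>"
replica.sync(primary)
store.backup(2, primary)

# The replica now holds the encrypted file; the day-1 snapshot does not.
recovered = store.restore(1)
print("replica:", replica.data["report.docx"])
print("restored from day 1:", recovered["report.docx"])
```

The snapshot store costs more space because it retains history, but that history is exactly what makes recovery possible after the infection has spread to the mirror.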

So, the message to take away is that in addition to your extensive spend on network security, your in-house patch policy to keep all servers up-to-date and your mobile device management policy, you also need to consider using cloud backup as a way to recover and rollback, should the inevitable happen.

Steve Harcourt is Senior Information Security Consultant at Redstor.

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Image Credit: Carlos Amarillo / Shutterstock

-

NFC can kill passwords for good

Published: May 4, 2015, 11:54pm CEST by Steve Watts

Since the dawn of the digital age, we’ve signed up to the password, trusting in its ability to keep our digital lives safe from thieves and those who would mean us harm.

Moore’s law tells us that every two years computing power doubles -- meaning every two years the amount of time it takes to crack a password using a brute force attack decreases considerably. It’s now reached the point where a password can be cracked in minutes, sometimes in as little as just six seconds. Six seconds to potentially lose your entire digital life.

In an attempt to protect ourselves many of us have turned to increasingly long and complex passwords made up of numbers, symbols and differing cases. There are two things wrong with this. First, all it does is slow the hacker down, not stop them.

Second, with no hope of ever remembering these complicated passwords we’ve resorted to writing them down, with many of us admitting to the unsafe practice of password vaulting, storing them all in one insecure place!
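The arithmetic behind these claims can be sketched as follows. The guess rate is a made-up figure chosen for illustration, not a measurement, and the model assumes an attacker who simply tries every combination; real attacks and real defences (rate limiting, slow hashing) change the numbers considerably.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passwords of exactly this length."""
    return alphabet_size ** length


def seconds_to_exhaust(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Worst-case time for an exhaustive brute-force search."""
    return keyspace(alphabet_size, length) / guesses_per_second


RATE = 1e9  # hypothetical attacker speed, guesses per second

examples = [
    ("6 lowercase letters", 26, 6),
    ("8 lowercase letters", 26, 8),
    ("12 printable ASCII characters", 94, 12),
]
for label, alphabet, length in examples:
    secs = seconds_to_exhaust(alphabet, length, RATE)
    print(f"{label}: {secs:.3g} seconds at {RATE:.0e} guesses/s")

# Doubling the attacker's speed halves the search time for a fixed password.
print(seconds_to_exhaust(26, 8, 2 * RATE) / seconds_to_exhaust(26, 8, RATE))
```

Under this model, each doubling of computing power halves the time to crack a given password, while each extra character multiplies the search space by the alphabet size. Longer passwords therefore slow an attacker down, as the text says, but never stop one outright.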

The antidote to password hacking is two-factor authentication (2FA), which draws on three kinds of factor: something you know, such as a password or PIN; something you are, such as a fingerprint or retinal scan; and something you own, which can be either a physical token or a soft token on a device you use every day, such as a mobile phone. The idea behind 2FA is to bring two of these separate methods together, so that a much stronger level of security remains should one of the methods become compromised.

In the past, increasing the security of user authentication has always meant additional time and complication to the end-user logging in. Many organizations have therefore refrained from making it compulsory as they felt the end user experience was more important than the need for better security. Lacking simplicity, these solutions have not been able to replace the password and because of this our information continues to be at risk.

The media is awash with headlines of yet another celebrity that’s had their social media profiles or iCloud breached, with hackers stealing images and sensitive correspondence, as well as sending out embarrassing messages from their Twitter or Instagram. Social media platforms and the Apple iCloud all offer two-factor authentication but many clearly choose not to initiate it, despite having a lot to lose.

There is a solution, however -- Near Field Communication (NFC). The technology enables smartphones and other devices to establish radio communication with each other to, for instance, wirelessly transfer data by bringing them into proximity. NFC differs from other technologies such as Bluetooth, as it doesn’t require devices to be paired before use.

Mobile applications can utilize NFC to securely transfer all the information required to enable a browser to start up, connect to the required URL, and then automatically enter the user id, password and second factor passcode in one seamless logon.

This technology can be used for any back end solution that needs to verify a user, whether it be at initial logon or at the point of verifying a transaction. Effectively, any time an application needs to positively prove the end user is who they say they are, this technology can be invoked.

This effectively removes the need for a password and creates a solution which is quicker, easier and more secure -- all the ingredients needed to signal the death of the password. Windows 10, which is set to launch this summer, incorporates NFC technology into the operating system which means a Windows smartphone can be used to interact with Windows 10 tablets, laptops and PCs.

This technology isn’t just limited to mobile phones either. Wearable technology, highly personal in nature, can also be utilized, enabling you to authenticate using your smartwatch by simply tapping your wrist against the corresponding device.

NFC is already supported on most leading Android and Windows smartphones. Apple has yet to open up the NFC chips in the Apple Watch and iPhone for third-party application use, but it is expected to do so in the near future, which would make NFC authentication possible for Apple devices as well.

From an end user perspective, the process is straightforward: users choose the account they want to activate, enter a four-digit PIN or scan a fingerprint, and tap their phone or smartwatch against the corresponding device. A PIN, a tap, and you’re in. It’s that simple.

Steve Watts is co-founder of SecurEnvoy.

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Photo Credit: Roger Wissmann/Shutterstock

-

10 music releases that brought down entire websites

Published: October 22, 2014, 6:42pm CEST by Steve Rawlinson

The Internet and digital downloads have moved us past the times when the shelves of record stores were often cleared of every last copy of the latest hit single. But that doesn't mean that the mad onslaught of devoted fans can't still cause havoc as they scramble to get the newest songs.

The businesses behind online music stores and artists' sites often fail to predict the incredible and unusual demand for their services and, rather than queues at record shops, the shift to downloads now means that website crashes are the new normal for merchants caught by surprise. Here are 10 music releases that have caused web crashes -- it really can happen to anyone.

Chris Webby, Webster's Laboratory

Whilst Chris Webby probably hoped his sixth mixtape, Webster's Laboratory, would go down a storm, he probably didn't expect Datpiff, the site hosting the mixtape, to go down with it! The mixtape gained big exposure among British hip hop fans, and its popularity was boosted by the guest appearances from Gorilla Zoe, Freeway, Apathy and Kinetics.

Boards of Canada, Tomorrow's Harvest

Boards of Canada fans had to wait eight years before the group made their comeback in June 2013, with the album Tomorrow's Harvest. Rubbing salt into the wound, the long wait was extended for many when the live album playback crashed the band's official website under the massive demand.

Whilst everyone was excited about the record launch, Warp Records got a little bit carried away when it saw that Twitter went down for several minutes not long into the transmission. "Did @boctransmission break twitter? #tomorrowsharvest" it asked. I'm going to hazard a guess that it didn't...

Ellie Goulding and Tinie Tempah, Hanging On

When two of the biggest British stars collaborate on a single, online mayhem should be expected. Ellie Goulding's and Tinie Tempah's single, Hanging On, was no exception. In July 2012, Ellie announced the unveiling of the new song on Twitter. Fans swarmed onto Soundcloud, bringing the site down with them.

Ellie's urge to "Enjoy and share responsibly" no longer seemed the best advice and she later had to apologize on Twitter, saying "I'm sorry sound cloud d/l link is down it will be back up soon yous crashed it!!!"

My Bloody Valentine, Loveless

Communication is key to My Bloody Valentine's position on this list. Choosing to go live with the follow-up to the band's first album since 1991 solely on their website wasn't the best idea. The site quickly crashed as fans clamored to download a copy. However, the real mistake was not giving their fans any updates. With only a server error for information, fans quickly turned to social media to vent their frustration.

Kylie Minogue, All The Lovers

The hidden mystery of the "extra special" news on her website brought a mad rush of fans that caused Kylie Minogue's site to crash in April 2010. Thousands of devoted fans logged on, desperate for a glimpse of the video clip for her upcoming track, All The Lovers.

Kylie turned to Twitter, exclaiming "Aaahhhhhhhhh ... you've overloaded the system!!! Arrgggghhhhhhhhh!!!!!... That is the strength of your combined power!!!!!! OMG (Oh my God)!!!!"

Coldplay, Violet Hill

Coldplay seemed to underestimate how many of their fans love a bargain when they released their single, Violet Hill, as a free download on their website. Thousands immediately logged on, quickly crashing the site. By the end of the evening, only 900 fans had successfully managed to download the single for free, in many cases turning to fansites to taunt those who hadn't.

5 Seconds of Summer

5 Seconds of Summer demonstrated the immense power of "tweenagers" everywhere (and why we should probably harness that power as the only effective form of renewable energy) without even having to sing a note. Announcing that they'd hidden something in the maze of their Pacman-inspired video game was enough to provoke an onslaught of fans, crashing the website. Only a few fans managed to play the game before the site went down, and these winners were lucky enough to see the new album... artwork.

Radiohead, In Rainbows

You'd expect one of the most popular bands in the world to anticipate a fair amount of interest in an album launch, especially one where the fans could choose the price they want to pay. But Radiohead's website collapsed after waves of fans -- first from Britain, followed by east coast USA, and then its west coast -- all logged on upon hearing the announcement of the release of In Rainbows.

Lady Gaga, Born This Way

If you think it's just fan sites that fall short of user demand, then Lady Gaga will make an impression on you (and in a different, less 'creative' way than usual). Amazon.com decided to release Lady Gaga's album, Born This Way, for download at $0.99 for one day only. Sadly it wasn't long before the site buckled under the demand as fans flocked to download it at a full $11 discount.

Beyonce, Beyonce

Queen Bey tops the list with the release of her latest album, Beyonce, which resulted in an impressive 80,000 downloads in just three hours. However, iTunes' performance was less impressive, as the site crashed under the strain of millions of hysterical fans who logged on after the album was placed on the website without any prior warning.

Even if a business doesn't have an avid following of millions of fans, like some of the artists mentioned above, website surges still happen and website owners need to be aware and ready to act. Predicting when a website might experience surges in traffic is the best way to ensure that consumers and stakeholders don't end up frustrated and unable to access the content they need or want. We saw this just recently when the UK DVLA website crashed as thousands of motorists logged on during the launch day of the new digitized tax disc service.

But not all traffic surges are predictable; Chris Webby never could have anticipated the demand for his sixth mixtape. Yet sadly customers are no more sympathetic when the surge is unexpected than when it is expected. The results for a business are always the same: lost revenues and angry customers.

Website owners need to know that when their website has a massive increase in demand they are ready to scale up and withstand the traffic. Scalability must be a priority to avoid lost revenue and damage to the brand, because without the right resources, a website will buckle when the most eyes are upon it.

Image Credit: Serhiy Kobyakov / Shutterstock

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

-

Is it time for your business to implement an artificial intelligence strategy?

Published: September 11, 2014, 11:26pm CEST by Steve Mason

For decades the prospect of artificial intelligence (AI) has loomed over the business world. Often warped and distorted by its depiction in fiction, AI has carried a certain stigma around its use and potential impact. From Skynet enslaving the world to psychotic computers threatening astronauts in 2001: A Space Odyssey, the concept of AI has been taken a long way from the fundamental point of having software that can independently carry out rudimentary tasks. But there are real benefits it can bring that can make life easier and more enjoyable for workers and citizens alike.

You will often hear business leaders talking about maximizing productivity and driving efficiency in their organizations. Yet, when you look at some of the typical wastage that goes on at companies, a lot of it comes from the standard admin and menial tasks none of us like doing. We recently asked workers, who are often targeted in these productivity drives, if they thought a little automation could help them in their day to day work. Over half said they believe predictive software will be capable of doing 10 per cent of daily admin work in the very near future.

It would seem strange, given the depiction in fiction, that people would be so willing to allow AI into their working lives. Yet when we take a closer look at the results, there is very much a generational difference in willingness to embrace this kind of technology. Millennials -- those who have grown up with Siri and smartphones -- are in general the most in favor of incorporating AI into their future work lives, whilst the over 55s need a bit more convincing. The millennial generation, which might have played games like Halo, from which Microsoft has taken the name of its upcoming rival to Siri, Cortana, has been weaned on the benefits rather than the threats of what AI could bring.

Will AI ever be able to replace workers in all their roles? Highly doubtful. We could be over 100 years away from the day when a cognitive AI service could be totally independent, and even that is a generous prediction. So the idea that people's jobs would be on the line if what is available now were to be brought into our workplace is not really credible.

Even when a genius like Stephen Hawking has his doubts about AI, saying, "Creating artificial intelligence will be the biggest event in human history.... it might also be the last", we are clearly a long way from this happening. In the meantime, we can take advantage of what is and what will be soon available to handle some of the more mundane tasks, allowing us to focus on more important things.

Given this groundswell of opinion and expectation of AI's incorporation into our working lives, it's another consideration CIOs and IT managers should be contemplating as part of their IT strategies in the years to come. When you look at businesses that could benefit most from this, certain industries stand out. Take a big service company which already utilizes automation to share information with its various departments. What if you took the level of automation even further? How much better would it be for all concerned if a customer's request for an engineer to come and fix something were automatically built into the engineer's schedule, with the parts that are needed instantly ordered and ready to go?

At the moment, you can ring a call center and lodge your query. That is then passed onto the service team. That is then passed onto the resource manager. That is then passed onto the engineer. It's quite a prosaic approach when, with a little bit more automation, the whole process could be made far slicker. This can, of course, be accomplished by human intelligence, but when it is something as precise and repetitive as a good chain of command, why not delegate a piece of central AI to better plan the course of events that need to unfold?

The key to success for this will be identifying the areas within a business or industry that could most benefit from automation. For example, if you run a field service team, a lot of data is analyzed and examined before travel routes and staff rosters are created. There are millions of calculations and considerations that need to go into this process, including factors such as equipment, skill sets and staff holidays, as well as day to day occurrences like traffic jams and sick leave. Wouldn't it be better if those calculations were automated and the optimum result was worked out by an AI function -- saving time, reducing cost and eliminating the risk of human error?
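To make the idea concrete, here is a deliberately tiny sketch of that kind of automated decision: filter engineers by skill and availability, then pick the one with the shortest estimated travel time. It is a greedy toy under stated assumptions, not how any real scheduling product works; the `Engineer` and `Job` types, their fields and the `pick_engineer` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Engineer:
    name: str
    skills: set
    days_off: set = field(default_factory=set)          # holidays, sick leave
    travel_minutes: dict = field(default_factory=dict)  # job site -> estimated minutes


@dataclass
class Job:
    site: str
    day: str
    required_skill: str


def pick_engineer(job, engineers):
    """Return the qualified, available engineer with the shortest trip, or None."""
    candidates = [
        e for e in engineers
        if job.required_skill in e.skills      # has the right skill set
        and job.day not in e.days_off          # is actually working that day
        and job.site in e.travel_minutes       # we have a travel estimate
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda e: e.travel_minutes[job.site])
```

A real roster would have to weigh many jobs against each other at once (parts, traffic, overtime), which is where the millions of calculations come in; this sketch only shows the shape of a single decision.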

Working back from the problem, you can see how one day we can expect more AI products on the market which solve problems that businesses have faced for years. Staff can benefit too, when menial or time-consuming tasks can be taken off their hands. Companies don't tend to employ staff for the accuracy of their admin skills; instead they want them to focus on their primary functions. AI gives businesses and employees the possibility to finally optimize the roles they are doing and potentially take on new skills and functions that will ultimately be to the benefit of the business they are working for.

Steve Mason is the vice president of mobility for EMEA at ClickSoftware

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

-

Celebrities and identity theft

Published: August 14, 2014, 8:29pm CEST by Steve Weisman

America loves celebrities. Scam artists, the only criminals we refer to as artists, are well aware of our fascination with the lives and sometimes untimely deaths of celebrities, and exploit this interest through a number of schemes aimed at turning the public's fascination into the identity thief’s treasure.

The sad and tragic death by suicide of Robin Williams has become the latest opportunity for identity thieves to exploit a celebrity death for financial gain. In one Robin Williams related scam, a post appears on your Facebook page -- it often can appear to come from someone you know, when, in fact, it is really from an identity thief who has hacked into the Facebook account of your real friend. The post provides a link to photos or videos that appeal in some instances to an interest in Robin Williams related movie or standup performances. However, in other instances, the link will appeal to the lowest common denominator and purport to provide police photos or videos of the suicide site. If you fall for this bait by clicking on the link, one of two things can happen, both of which are bad.

In one version of this scam, you are led to a survey that you need to complete before you can view the video. In fact, there is no such video and by providing information, you have enabled the scammer to get paid by advertisers for collecting completed surveys. The problem gets worse, because by completing the survey you may have turned over valuable information to a scammer who can use it to target you for spear phishing and further identity theft threats. Worst of all, in another variation of this scam, clicking on the link unwittingly downloads keystroke logging malware that will steal all of your personal information from your computer, laptop, smartphone or other device, including credit card numbers, passwords and bank account information, and use that information to make you a victim of identity theft.

The scams following the death of Robin Williams are just the latest manifestation of celebrity death related ploys that we saw in recent years following the deaths of Whitney Houston, Amy Winehouse and Paul Walker, among others.

Scammers and identity thieves do not need a celebrity death to turn the public’s interest in a celebrity into identity theft. Not long ago many people found on their Facebook page a photograph of a stabbed person’s back along with a message that stated "Rapper Eminem left nearly DEAD after being stabbed 4 times in NYC! Warning, 18+ It was all caught on surveillance video! Click the pic to play the video!" The truth is that Eminem was not stabbed. In fact, the same photograph was used in 2011 as a part of a scam in which the photograph was purported to be a photograph of the back of Justin Bieber following a stabbing attack. Once again, by clicking on the link, the unwary victim downloaded keystroke logging malware that turned him or her into a victim of identity theft.

Remember my mantra, "trust me, you can’t trust anyone". Merely because a post on your Facebook page, a text message or an email appears to come from someone that you trust is no reason to consider it reliable. The communication may come from an identity thief who has hacked your friend’s account or it may actually come from your friend who is unwittingly passing on tainted links that they received. For news matters including celebrity news, stick with the websites of legitimate news sources such as CNN or TMZ.

In addition, it is important to note that even if you think you are protected from such threats because you have anti-malware software installed on all of your electronic devices, it generally takes the security software companies at least a month to come up with security patches and updates to protect you from the latest malware and virus threats.

Image Credit: dashingstock /Shutterstock

Steve Weisman is a lawyer, college professor and one of the nation’s leading experts on identity theft and scams. His latest book is "Identity Theft Alert". He also writes the blog www.scamicide.com where he provides daily updated information on the latest scams and identity theft threats.

-

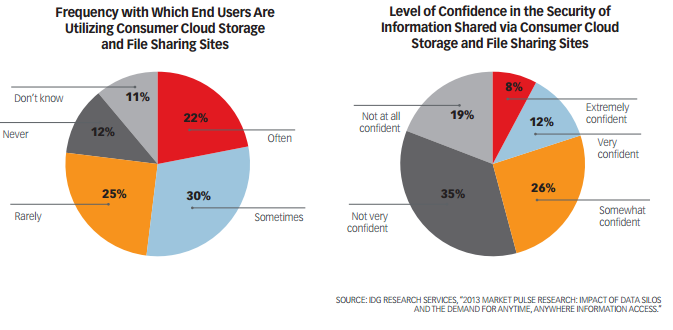

Cloud sprawl: What is it, and how can you beat it?

Published: July 2, 2014, 8:23pm CEST by Steve Browell

Today's workplace plays host to employees using a variety of cloud services side-by-side with corporate-sanctioned IT. This often results in incongruent information sourcing and storage, typically known as cloud sprawl. Whilst software as a service (SaaS) can boost smarter working and innovation in businesses, information disparity issues need to be addressed to sustain efficient working environments.

As businesses' adoption and management of cloud services mature, some are still suffering organizational inefficiencies due to cloud sprawl. At the moment, different software is being selected for different solutions by different departments or even individual members of staff. There is a knowledge gap where businesses aren't fully informed about how cloud technology can respond to business challenges in a different way to on-premise solutions, and so the potential for better information management is not being realized.

From the mid-market to larger enterprise, businesses need to enable company-wide education about the benefits of cloud.

To avoid cloud sprawl, there are a host of things that need to be considered; things that IT managers don't often think about when moving to cloud. People are still figuring out what cloud means to their business, and this often leads to information sprawl as new and different clouds are being deployed. Eventually cloud computing will mature and become standardized and interoperable, but until then there are certain sprawling issues to be addressed.

Cloud sprawl is also partly down to CIOs losing control of their IT strategies and procurement processes. Software as a Service has enabled more business departments (whether HR, marketing or finance) to make purchasing decisions on cloud solutions. For the CIO, this can lead to chaos as there are lots of different systems running in silos. This can lead to challenges meeting overall goals, with CIOs effectively taking on a supply management role as they fight to manage disparate systems and ensure interoperability and business agility.

With 'Shadow IT' (systems businesses depend upon not deployed or managed by the IT team) becoming increasingly commonplace in the industry, teams are now making decisions without the CIO. As a result, we are seeing fragmentation of ultimate decision makers; not the desired effect of cloud computing.

There needs to be clear direction from the CIO to the business in terms of where it is necessary for information systems to share data; they must be clear on the need to interrelate information.

Individual departments may work perfectly well alone, but they still need the CIO to provide mechanisms to connect with each other and ensure compatibility. Many SaaS solutions have overlapping and duplicate functions, such as social or messaging plug-ins, which is another feature of cloud sprawl.

The key lesson to be learnt from the cloud sprawl issue is about the interconnection of business systems. This highlights particular problems for SaaS since linking systems together is critical to achieving maximum return. Organizations need to learn that systems might potentially overlap, and that solutions being too siloed or having too many overlaps may cause sprawl.

Steve Browell is CTO at Intrinsic

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

-

I think we’ve seen this before... Why 'incident intelligence' is imperative

Published: April 12, 2014, 10:14am CEST by Steven Weinstein

Lately, I’ve had a lot of conversations about how threat intelligence can enrich organizations’ incident response processes and how the right intelligence can make them more effective. As a note, I’m a former full time lead incident responder for a massive organization and now a researcher.

I can confidently say that when you’re dealing with literally hundreds of malware incidents per day, the minute differences in identified indicators can all start to blur together. Being able to very quickly and efficiently answer the question of whether or not a particular indicator of compromise has been seen before (and in what context) is crucial. Let’s call this "incident intelligence". Incident responders always need to have a clear picture of what they are dealing with and how it may relate to something already encountered during previous incidents, but unfortunately for most teams, this is easier said than done.

An equally inhibiting issue is the fact that incident responders or security operations analysts typically require up to ten different Web pages or portals open at any given time in order to fully understand the elements of the current incident. The number of details involved in a single security incident is staggering, and when responders need to gather those details from numerous sources, it only adds to the confusion of trying to remember if a single indicator has been seen in a previous incident or not.

The solution to this (lack of) incident intelligence problem is surprisingly simple: Put proper processes in place! While many organizations implement complex incident handling and tracking processes, they may not be addressing the issue in the most effective way. Processes for ongoing incidents must be updated to include searching back through previous incidents to determine if there is any overlap. Hopefully organizations have a powerful search capability built into their incident tracking systems (assuming an organization even has a tracking system), which they can leverage in these situations.

It’s important to have your processes updated to include this historical search capability because it’s absolutely imperative to be able to know what you’ve seen before. If you rely solely on that instinct of "I think we’ve seen this before," you will make mistakes, you will miss crucial details, and you won’t be as effective as you need to be. On this point, I speak from experience.

Having this visibility into whether or not a specific indicator of compromise (including registry keys, IP addresses, domains, etc) has been seen before really makes an impact. Those Dynamic DNS FQDNs all looked the same after dealing with the onslaught of the Blackhole and Neutrino exploit kits’ prolific usage of DynDNS domains, right? Being able to determine overlaps in incidents can help show how specific threats relate to each other -- maybe how a specific exploit kit tends to continuously drop the same type of malware, or how Cryptolocker is typically spread via ZeuS Gameover (which often comes from malspam). You could also find overlaps and uncover extended undetected access after you thought a previous incident had been remediated -- dare I say "persistent?"
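A bare-bones version of that "have we seen this before?" lookup might look like the sketch below. It assumes indicators are plain strings and that an in-memory index is enough; a real team would sit this on top of its incident tracking system. The `IncidentIndex` class, its methods and the incident ids are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


class IncidentIndex:
    """Maps each indicator of compromise to the past incidents it appeared in."""

    def __init__(self):
        self._seen = defaultdict(set)  # normalized indicator -> incident ids

    @staticmethod
    def _normalize(indicator: str) -> str:
        # Lower-case and drop a trailing dot so "Bad.Example.com." and
        # "bad.example.com" count as the same indicator.
        return indicator.strip().lower().rstrip(".")

    def record(self, incident_id: str, indicators):
        """Store the indicators observed during a closed or ongoing incident."""
        for ioc in indicators:
            self._seen[self._normalize(ioc)].add(incident_id)

    def overlaps(self, indicators):
        """Return {indicator: [incident ids]} for indicators seen before."""
        hits = {}
        for ioc in indicators:
            key = self._normalize(ioc)
            if key in self._seen:
                hits[key] = sorted(self._seen[key])
        return hits
```

With something like this in place, an analyst handling a new alert can call `overlaps()` on its domains, IP addresses and registry keys and get back the earlier incident numbers to read up on, rather than relying on memory.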

What's one of the biggest benefits of having incident intelligence? It substantially reduces the security operations team’s reliance on your subject matter experts (dedicated malware teams, incident response teams, CERTs, etc). The ability for a SOC analyst to be able to say "yes, we’ve seen this before in incident #99999, it was related to this threat, and this is how we handled it" is incredibly powerful and saves precious time and resources all around.

It is time for incident response to evolve. Companies need processes that give them the ability to recognize if an ongoing incident overlaps with a previous incident. This way, they can immediately reference the past incident to understand the details and the outcome -- all of this information is extremely important in determining the incident response action items.

Armed with this insight into what has been encountered before by leveraging knowledge of past incidents, incident response and security operations teams can completely change their decision making processes and respond to incidents more effectively than ever.

Now that’s incident intelligence.

Image Credit: Sergey Nivens/Shutterstock

As a Malware Researcher at Lookingglass, Steven Weinstein combines a deep understanding of malware analysis and incident response to research the latest threats, their impact on organizations and strategies to defend against them. He is also responsible for enrichment of current data sets by producing relevant and actionable malware indicators of compromise at mass scale. Prior to joining Lookingglass, Steven was the lead malware incident responder for Deutsche Bank. He was responsible for day-to-day malware incident handling and investigating high severity incidents involving APT attacks. Steven also created the bank's malware incident handling procedures and trained dozens of security analysts on incident response best practices. Additionally, he aided in the creation of a world-class automated malware triage tool. Steven is a subject matter expert in the fields of incident response and malware analysis. He graduated with honors from Towson University with a degree in Computer Science with a focus in Security.

-

Are hosted cloud storage providers heading down a slippery security slope?

Published: April 3, 2014, 11:26am CEST by Steven Luong

The Bring Your Own Device (BYOD) trend shows no sign of slowing; in fact, 38 percent of companies expect to stop providing devices to workers by 2016 according to research from Gartner. As such, some hosted cloud storage providers, such as Dropbox, are making it possible for users to manage both work and personal accounts from a single mobile device using their software. Products like these, which focus heavily on the user experience, are indeed commendable. However, they often ignore the entire IT side of the equation for data management and risk management, something that could cause serious security issues down the road.

There are security and control issues inherent in allowing "rogue users" -- users that find ways around network security policies -- to use consumer accounts at work without IT oversight, as this greatly increases corporate risk. IT must be able to centrally manage and back up all corporate information regardless of whether it’s synced or shared via a personal or business account.

Assuring litigation readiness and information governance is critical for most organizations. Where eDiscovery is concerned, IT must be able to quickly search all corporate data to ensure regulatory compliance, including documents and files stored and shared via consumer-oriented file sharing services. Enterprises that wish to allow the use of these services should consider enabling a software solution that can protect endpoint data, provide data access and sync across multiple devices, make the data searchable for eDiscovery and deliver fast data recovery if and when disasters strike.

While the risks associated with the use of consumer-oriented file sharing services are applicable to all industries, liabilities increase tenfold in data-sensitive industries such as legal or healthcare where additional requirements, such as HIPAA compliance, must be met.

To fully enable enterprise-class mobility and mitigate potentially catastrophic security issues, organizations should consider the following:

- Give IT control over ALL data. Understand that anything less is a compromise. Taking data out of IT’s hands makes organizations ripe for a major security issue. If companies are going to accept the risk of allowing users to use file sync and share solutions, they should consider enterprise software that ensures the business data is visible to IT and backed up.

- Mitigate corporate risk. For IT to do its job effectively, it needs to know what's out there regardless of whether data is stored within the enterprise or on consumer-oriented file-sharing services. All data must be searchable and discoverable if required by their compliance and legal teams.

As file sharing and data backup services continue to converge, mobility solutions must balance: a) self-service access and data portability for end users with b) simplified management and control for IT departments. Force fitting a consumer-focused cloud solution to an enterprise environment is a very risky scenario and quite frankly, won’t provide the data protection and security required for a sustainable successful business.

Photo Credit: Marynchenko Oleksandr /

Steven Luong is Senior Manager, Product Marketing, at CommVault. Steven has over 11 years of experience in the technology industry delivering server, storage, and information management software solutions. Steven received his bachelor’s degree in Chemical Engineering and MBA from the University of Texas at Austin.

Secrets2moteurs

-

How Justin Bieber saves your Netlinking

Published: January 11, 2013, 8:17am CET by Steve

You know as well as I do that, for a small business, committing to a netlinking strategy to popularize your content is not within everyone's reach. It takes time, money and resources. So today, let's look at a way to set up an effective strategy that leans on the popularity of an influencer. Why do I mention Justin Bieber? The answer is in the full article on the e-mondeos agency blog.

-

Your Adwords content matters too

Published: November 27, 2012, 7:54am CET by Steve

Every day you hear your colleagues sing the praises of content marketing, but after all, nothing beats a good little Adwords campaign to make your hours of research and optimization pay off. Yet even on Adwords, your content must be optimized. Here is a short review of the essential factors for setting up your Adwords campaign properly when it comes to optimized content.

Article: Adwords and your optimized content

-

Measuring the returns of your Content Marketing

Published: November 16, 2012, 1:49pm CET by Steve

As you all know, content marketing (a marketing strategy of optimization through content) is a reliable weapon when it comes to attracting qualified traffic. But how do you measure your results? Which KPIs should you take into account?

But be careful not to slip into a "produce more to earn more" mindset, at the risk of losing relevance!

Breakdown: Measuring your Content Marketing

BetaNews.Com

-

Security lessons Zappos' 24 million customer breach should teach us

Published: January 17, 2012, 10:13pm CET by Steven Sprague

Another major breach is in the headlines. Zappos, an online shoe and apparel retailer owned by Amazon, disclosed Sunday night that more than 24 million of its customer accounts had been compromised. Hackers accessed customer names, email addresses, phone numbers, the last four digits of credit card numbers and cryptographically scrambled passwords.

To its credit, Zappos moved quickly, resetting the passwords for all the affected accounts. But it was cold comfort for those who may still be in danger of having their data exposed if they used the same or similar credentials on other websites. This concern prompted Zappos CEO Tony Hsieh to warn customers of possible phishing scam exposures in an email to affected customers. It’s another reminder of the sad state of security today.

Allegedly, the attack originated on one of the company’s servers based in Kentucky. While details of how the server was hacked are scant, perhaps the best advice to all the would-be Zappos of the world would be to take one simple step: restrict server access to only known PCs.

Establishing the identity of a platform isn’t difficult at all. In fact, the corporate PCs that would need access to an enterprise server already have the technology embedded on the motherboard. The Trusted Platform Module (TPM) can attest to the identity of the device with nearly absolute certainty.

And a second, powerful safeguard is encryption. With that much sensitive information housed on the network, encryption at the server level should be considered a "must" to prevent a Zappos-like breach in the future.

And the last lesson from the Zappos breach? Isn’t it time we armed the consumer with stronger authentication than the fragile username and password? Here, too, the TPM has proven its ability to play a role, providing an excellent mechanism to protect individual identity with an extremely secure, integrated capacity to store a PC’s security keys.

Because the TPM can store multiple keys, it enables the end-user to access secure services from multiple independent providers – each with a unique, secure key. Whoever owns the PC controls the keys stored in the TPM—giving power to the end user, where it belongs.

Photo Credit: Andrea Danti/Shutterstock

Steven Sprague is CEO of Wave Systems. His expertise lies in leveraging advancements in hardware security for strong authentication, data protection, advanced password management, enterprise-wide trust management services and more. Mr. Sprague earned a BS from Cornell University in 1987.

-

US Chamber of Commerce hack shows need for vigilance

Published: December 23, 2011, 5:14pm CET by Steven Sprague

This week’s high-profile hack of the US Chamber of Commerce underscores the inadequacy of today’s security policies and technologies. With the holidays quickly approaching and IT staffs stepping away from offices to spend time with family and friends, we face increased vulnerabilities and security threats. We should be more vigilant than ever, reflecting on national security policies and how we can better protect our sensitive data.

Stories like this continue to point to the fact that we need a broad, across-the-board approach. We need to collaborate and inform when breaches take place. We need diplomatic support to reduce the desire or economic benefit to steal. It is time to have a Y2K approach to cyber protection. That means investment and support from the top down.

The first step should be whitelisting all of the devices that could have accessed the Chamber’s servers. Access should have been restricted to known and trusted devices. The technology to establish trust in the endpoint is already there, with more than half a billion Trusted Platform Module (TPM) security chips built into PCs. This simple step has worked for many industries to protect the viability of their networks and business models -- from Comcast to Verizon to Apple.
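To make the whitelisting idea concrete, here is a minimal sketch in Python. It is not TPM attestation: the device identifiers, the `ALLOWED_DEVICES` set and the function names are all hypothetical, and a plain hashed string stands in for the hardware-backed identity a TPM would provide. It only illustrates the policy that access requires a recognized device as well as an authenticated user.

```python
import hashlib
import hmac


def fingerprint(device_id: str) -> str:
    """Hash a device identifier (assumption: a string stands in for a TPM-backed identity)."""
    return hashlib.sha256(device_id.encode("utf-8")).hexdigest()


# Hypothetical allowlist of devices that IT has enrolled.
ALLOWED_DEVICES = {fingerprint("laptop-0017"), fingerprint("desktop-0042")}


def is_known_device(device_id: str, allowed=ALLOWED_DEVICES) -> bool:
    """Return True if the device's fingerprint is on the allowlist."""
    candidate = fingerprint(device_id)
    # compare_digest performs a constant-time comparison of the two digests.
    return any(hmac.compare_digest(candidate, known) for known in allowed)


def authorize(device_id: str, user_authenticated: bool) -> bool:
    """Grant access only when both the user and the device are recognized."""
    return user_authenticated and is_known_device(device_id)
```

With this policy, a valid user on an unenrolled machine is refused, which is the point of restricting access to known devices.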

Waiting for IT to do the right thing is killing us. Perhaps they need regulation to prompt them into action. Perhaps they just need leadership. What’s very clear is that the economic growth of the United States in the future can’t wait for us to take steps to protect ourselves.

We are more vulnerable now than we have ever been, and the faster we dispel the illusion that cyber-attacks happen to someone else, the better off we’ll be. It’s nice to remember the way things used to be, but times have changed. Just because our parents never locked the car or the front door of the house does not mean that we can’t learn to lock the car and the front door. RSA, the leading provider of "door locks," lost the master key, and still not everyone changed their locks.

This year’s resolution: "NO more passwords for remote access". Let’s make 2012 the year that every user logs into his device and the device logs the user into the network (the device can be a smartphone or a desktop). Auditors need to be our ground troops. There should be no access to manage servers or databases where the application does not verify that a known machine is being used. Then we need to check on the list of known machines.

We have the technology. The economics make sense. But do we have the will to enact such a sweeping, yet simple, measure? Historians will label 2011 as the year when our IT security infrastructure failed us. The RSA and Sony breaches, attacks by Anonymous and LulzSec, even Wikileaks drove home to the broad marketplace that when it comes to data security, cyber-attackers can take down systems and steal data at will. We must be vigilant in the next ten days and beyond. Let’s learn from the mistakes of the past year and look forward to a safer, more secure 2012.

Photo Credit: Kheng Guan Toh/Shutterstock

Steven Sprague is CEO of Wave Systems. Since taking the company's helm in 2000, Sprague has played an integral role driving the industry transition to embed stronger, hardware-based security into the PC. Sprague has guided Wave to a position of market leadership in enterprise management of self-encrypting hard drives and Trusted Platform Module security chips. His expertise lies in leveraging advancements in hardware security for strong authentication, data protection, advanced password management, enterprise-wide trust management services and more. Mr. Sprague earned a BS from Cornell University in 1987.

-

Data can be saved from your water-soaked computer

Published: September 2, 2011, 6:37pm CEST by Steven Brier

Irene's assault on the Eastern Seaboard earlier this week is just the beginning of what is expected to be another heavy season of hurricanes and tropical storms. What happens to your data if raging rains or flooding waters damage your computer? The electronics may be gone but your precious files are likely recoverable.

It’s tempting to turn that water-logged computer back on and see if anything can be saved. Don’t. The disk drives contain contaminants that can destroy the drive and all the data on it. You pose the greatest risk to your valuable data. Here's what you should or should not do instead:

Hard Disk Drives

* Never assume that data is unrecoverable.

* Do not shake or disassemble any hard drive that has been damaged.

* Do not attempt to clean or dry water-logged drives or tapes.

* Before storing or shipping wet media, place it in a Ziploc bag or other container that will keep it damp and protect shipping material from getting wet. Wet boxes can break apart during transit, causing further damage to a drive.

* Do not use software utility programs on broken or water-damaged devices.

* When shipping hard drives, tapes or other removable media to a data recovery service, package them in a box that has enough room for both the media and some type of packing material that allows for no movement. The box should also have sufficient barrier room around the inside edges to absorb impact during shipping.

* If you have multiple drives, tapes or other removable media that need recovery, ship them in separate boxes or make sure they are separated enough with packing material so there will be no contact.

Floppy Disks / Tapes

* Water-damaged tapes and floppy disks are fragile. If a tape is wet, store it in a bag so it remains wet. If a tape is dry, do not rewet it. Many data recovery services can unspool tapes and recover data.

* Floppy disks have so little capacity that they typically aren’t worth the expense of trying to recover.

CDs/DVDs

* CDs and DVDs are reasonably sturdy. Unless they have been sitting in a corrosive mix or are visibly damaged, they can be cleaned and the data recovered.

* If they were on a spindle or stacked, and are now stuck together, do not try to peel them apart.

* Mix a solution of 90 percent clean (potable) water and 10 percent isopropyl alcohol. Soak the stack of CDs until they become unstuck. Wipe dry with a clean, lint-free cloth if possible.

* To clean CDs or DVDs, rinse them in the water/isopropyl alcohol solution. If necessary to remove grime, wipe them again with cotton swabs or cloth soaked in isopropyl alcohol. Remember, scratches will cause loss of data, so be gentle.

* After cleaning, place the CD in a functioning computer and copy any recovered data.
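For the 90/10 cleaning solution mentioned above, the quantities for any batch size are simple arithmetic. The short Python helper below is only an illustration; the function name `cleaning_mix` and the choice of milliliters are assumptions, not part of the original advice.

```python
def cleaning_mix(total_ml: float, alcohol_percent: int = 10) -> tuple:
    """Return (water_ml, alcohol_ml) for a batch of the given total volume."""
    if total_ml <= 0:
        raise ValueError("total_ml must be positive")
    if not 0 <= alcohol_percent <= 100:
        raise ValueError("alcohol_percent must be between 0 and 100")
    # Alcohol share of the batch; the remainder is clean (potable) water.
    alcohol_ml = total_ml * alcohol_percent / 100
    water_ml = total_ml - alcohol_ml
    return water_ml, alcohol_ml


# Example: a 500 ml batch needs 450 ml of water and 50 ml of isopropyl alcohol.
water, alcohol = cleaning_mix(500)
```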

USB Flash Drives

* USB flash drives contain no moving parts. Unless physically damaged or corroded, the drives usually work. Before using a water-soaked flash drive, make sure it is dry and clean (use a Q-tip soaked in the alcohol/water solution to wipe the USB contacts).

* If a drive has been physically damaged, some data recovery services will remove the flash chips and connect them to a new device in an effort to recover the data.

What Will Professional Services Cost You?