Virtualization has widely been seen as one of the most cost-saving server technologies to emerge in the last decade. Virtual machines make it possible to start up whole servers as and when they are needed, then shut them down when they are not. In theory, this flexibility means that general-purpose server hardware can be readily re-allocated from one task to another as necessary.

In principle, no resources sit idle and waste money because a particular area has been over-specified. But the theory doesn’t always work this way in practice, as there can be hidden costs that the concept obscures. In this feature, we uncover some of these hidden costs, and discuss the steps a network administrator can take to address their impact.

One of the key hidden costs of virtualization comes from the very same feature that makes it so useful. To achieve its much-feted flexibility, virtualization has to rely on being a "jack of all trades but master of none", so that optimizations for one task don’t mean a significant slow-down in another.

As a result, hardware will be designed to give the best possible performance across a broad range of the most frequently used applications. But this will mean that it won’t be able to deliver best-in-class performance for any given application compared to hardware that was designed specifically with that task in mind. So if you do know which applications you will be running, and how much performance will be required, there is a good chance that dedicated hardware will be more cost-effective than full virtualization.

Linked to this, multi-core scaling is another area where virtualization can create serious performance bottlenecks. Even when a virtual machine has dedicated use of the underlying hardware and isn’t using emulation, it can still run a few times slower than the non-virtualized equivalent.

The main reason for this is the intervention of the virtual machine manager whenever synchronization causes idling in the application itself, the host operating system or supporting libraries. It is possible to implement software that reduces the detrimental effect of these idling times via a process of idleness consolidation.

This ensures that each active virtual core is fully utilized by interleaving workloads, which allows unused cores to power down and saves energy costs. However, many virtualization systems do not do this, so their virtual cores spend a fair amount of time idle while they wait for the next block of work.
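As a rough illustration of the consolidation idea, the toy sketch below packs the busy fractions of several virtual cores onto as few cores as possible, so the remainder could in principle power down. The function name `consolidate`, the first-fit-decreasing strategy and the load figures are all assumptions made for illustration; this is not the mechanism used by any particular virtual machine manager.

```python
def consolidate(loads, capacity=1.0):
    """Pack per-virtual-core busy fractions onto as few cores as possible.

    Uses a simple first-fit-decreasing heuristic (an illustrative choice).
    Returns a list of cores, each a list of the loads placed on it.
    """
    cores = []
    for load in sorted(loads, reverse=True):
        for core in cores:
            # Small tolerance guards against floating-point rounding.
            if sum(core) + load <= capacity + 1e-9:
                core.append(load)
                break
        else:
            # No existing core has room, so bring another core into use.
            cores.append([load])
    return cores


# Hypothetical busy fractions for five virtual cores (1.0 = fully busy).
vcpu_loads = [0.6, 0.3, 0.5, 0.4, 0.2]
packed = consolidate(vcpu_loads)
print(len(vcpu_loads), "virtual cores ->", len(packed), "busy cores")
```

With these made-up figures, five partly idle virtual cores fit onto two fully used ones. A real scheduler would also have to respect synchronization dependencies between threads, which this sketch ignores.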

Another hidden cost is more directly financial. Software licenses may be priced on a per-core basis for the host server, even if only some of those cores will actually be tasked with running virtual environments that use that particular piece of software. There may be no legal right to ask for fewer licenses than the server capacity, although some vendors will allow a Sub-Capacity License Agreement.

Even in this case, it will probably be necessary to set up a complex dynamic license auditing system that checks how many licenses are in use, and ensures that the number purchased is never exceeded. Either way, an extra cost is involved.

A company will either have to buy more licenses than it really needs, or at least purchase and implement an additional sophisticated system so that the company can prove it is complying with licensing levels. In the worst-case scenario, where a virtualization server is considerably over-specified for a particular application, for failover reasons or because it runs a number of other virtual tasks, a company may end up paying for many more licenses than it ever actually uses.
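The trade-off can be made concrete with some simple arithmetic. The sketch below compares licensing every core on a host with licensing only the cores in use plus the cost of an auditing system. Every figure (core counts, price per core, audit tooling cost) is a hypothetical placeholder rather than real vendor pricing.

```python
def license_cost(cores_licensed, price_per_core):
    """Total cost of licensing the given number of cores."""
    return cores_licensed * price_per_core


# Hypothetical figures, chosen only to illustrate the comparison.
host_cores = 32          # cores in the virtualization host
cores_used = 8           # cores that actually run the licensed software
price_per_core = 1500.0  # license price per core
audit_tooling = 10000.0  # cost of a license auditing system

# Option 1: license the full capacity of the host.
full_capacity = license_cost(host_cores, price_per_core)

# Option 2: sub-capacity licensing, plus the auditing system needed to prove compliance.
sub_capacity = license_cost(cores_used, price_per_core) + audit_tooling

# Money spent under option 1 on cores that never run the software.
unused_spend = full_capacity - license_cost(cores_used, price_per_core)

print(f"Full capacity: {full_capacity:,.0f}")
print(f"Sub-capacity plus auditing: {sub_capacity:,.0f}")
print(f"Spent on unused cores: {unused_spend:,.0f}")
```

Under these assumptions sub-capacity licensing is cheaper, but the gap narrows as the auditing system becomes more expensive or as more of the host’s cores are actually used.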

Tracking Costs

On a related note, tracking costs in general becomes considerably more complicated when IT services are virtualized. In a traditional IT environment, a department or application will have specific hardware, software, and infrastructure allocated to it, and the costs for this will be clearly ascribed.

But in a virtualized environment, the hardware and infrastructure are shared across all departments and applications that use them, and allocated dynamically as required. Usage levels will be constantly in flux, so keeping track of how much each department or application is using will not be straightforward. This makes it hard to build solid, data-driven business cases showing when rising utilization in a particular scenario will require new capacity.

Some of the most sophisticated integrated cloud-based virtual server systems make it possible to allocate costs to various different types of deployment and their utilization levels, or to the underlying server resources used.

This allows a company to keep track of how much different usage scenarios cost relative to one another, and to compare those costs with the revenue the activities generate, so development budget can be allocated accordingly. But this will require extensive work modelling the implications of infrastructure, hardware and software licensing costs for different types of virtual machine, which in itself will be a cost.
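At its simplest, this kind of cost allocation splits the bill for a shared platform in proportion to measured usage. The following is a minimal sketch of that idea; the department names, the usage metric (vCPU-hours) and the monthly cost are invented for illustration, and a real model would also need to weight memory, storage and licensing.

```python
def allocate_costs(total_cost, usage_by_dept):
    """Split a shared cost across departments in proportion to their usage."""
    total_usage = sum(usage_by_dept.values())
    if total_usage == 0:
        raise ValueError("no usage recorded, so the cost cannot be apportioned")
    return {
        dept: total_cost * usage / total_usage
        for dept, usage in usage_by_dept.items()
    }


# Hypothetical monthly usage in vCPU-hours, per department.
usage = {"web": 1200, "analytics": 600, "desktop": 200}

# Hypothetical monthly cost of the shared virtualization platform.
shares = allocate_costs(10000.0, usage)

for dept, cost in shares.items():
    print(f"{dept}: {cost:,.2f}")
```

Because usage is constantly in flux, the figures fed into a function like this would have to be sampled continuously, which is where much of the tracking overhead arises.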

Where all these hidden costs are becoming a problem, a solution like HP’s Moonshot could be the answer. The cartridge-based approach it takes to provisioning, and the relatively low cost of these cartridges, mean that a single system can serve multiple different applications while allowing each one to scale as required.

So some of the advantages of virtualization are preserved, although it’s not possible to dynamically re-allocate resources from one task to a radically different one. This is because the Moonshot cartridges are tailored to a selection of frequently required server types at the hardware level, and a company will purchase the type of cartridge it needs for a particular task.

But the advantages of this can be great, as HP’s Moonshot avoids a significant hidden cost of virtualization by supplying servers that really are optimized for specific tasks. Only the truly shared resources, such as power and networking infrastructure, are kept common. The quantity and type of cores, memory, and storage in each cartridge are all balanced for best provisioning of service types such as Web servers, Web caching, DSP-based calculations or remote virtual desktops.

The costs for each service are kept transparent by a direct relation to the cost of the cartridges used for that service, and licensing can similarly be kept down to only the servers that actually run that particular application.

Although virtualization will continue to have a huge amount to offer the future of computing, in circumstances where its hidden costs could potentially outweigh the benefits, HP’s Moonshot can supply an alternative where costs are very clear, so the gains can be clear as well.

Photo credit: Nomad_Soul/Shutterstock

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

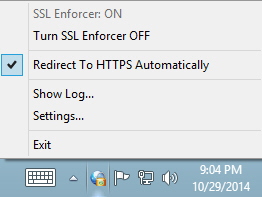
