Seventeenth in a series. Love triangles were commonplace during the early days of the PC. Adobe, Apple and Microsoft engaged in such a relationship during the 1980s, and allegiances shifted -- oh did they. This installment of Robert X. Cringely's 1991 classic Accidental Empires shows how important it is to control a standard and get others to adopt it.

Of the 5 billion people in the world, there are only four who I’m pretty sure have stayed consistently on the good side of Steve Jobs. Three of them -- Bill Atkinson, Rich Page, and Bud Tribble -- all worked with Jobs at Apple Computer. Atkinson and Tribble are code gods, and Page is a hardware god. Page and Tribble left Apple with Jobs in 1985 to found NeXT Inc., their follow-on computer company, where they remain in charge of hardware and software development, respectively.

So how did Atkinson, Page, and Tribble get off so easily when the rest of us have to suffer through the rhythmic pattern of being ignored, then seduced, then scourged by Jobs? Simple; among the three, they have the total brainpower of a typical Third World country, which is more than enough to make even Steve Jobs realize that he is, in comparison, a single-celled, carbon-based life form. Atkinson, Page, and Tribble have answers to questions that Jobs doesn’t even know he should ask.

The fourth person who has remained a Steve Jobs favorite is John Warnock, founder of Adobe Systems. Warnock is the father that Steve Jobs always wished for. He’s also the man who made possible the Apple LaserWriter printer and desktop publishing. He’s the man who saved the Macintosh.

Warnock, one of the world’s great programmers, has the technical ability that Jobs lacks. He has the tweedy, professorial style of a Robert Young, clearly contrasting with the blue-collar vibes of Paul Jobs, Steve’s adoptive father. Warnock has a passion, too, about just the sort of style issues that are so important to Jobs. Warnock is passionate about the way words and pictures look on a computer screen or on a printed page, and Jobs respects that passion.

Both men are similar, too, in their unwillingness to compromise. They share a disdain for customers based on their conviction that the customer can’t even imagine what they (Steve and John) know. The customer is so primitive that he or she is not even qualified to say what they need.

Welcome to the Adobe Zone.

John Warnock’s rise to programming stardom is the computer science equivalent of Lana Turner’s being discovered sitting in Schwab’s Drugstore in Hollywood. He was a star overnight.

A programmer’s life is spent implementing algorithms, which are just specific ways of getting things done in a computer program. Like chess, where you may have a Finkelstein opening or a Blumberg entrapment, most of what a programmer does is fitting other people’s algorithms to the local situation. But every good programmer has an algorithm or two that is all his or hers, and most programmers dream of that moment when they’ll see more clearly than they ever have before the answer to some incredibly complex programming problem, and their particular solution will be added to the algorithmic lore of programming. During their fifteen minutes of techno-fame, everyone who is anyone in the programming world will talk about the Clingenpeel shuffle or the Malcolm X sort.

Most programmers don’t ever get that kind of instant glory, of course, but John Warnock did. Warnock’s chance came when he was a graduate student in mathematics, working at the University of Utah computer center, writing a mainframe program to automate class registration. It was a big, dumb program, and Warnock, who like every other man in Utah had a wife and kids to support, was doing it strictly for the money.

Then Warnock’s mindless toil at the computer center was interrupted by a student who was working on a much more challenging problem. He was trying to write a graphics program to present on a video monitor an image of New York harbor as seen from the bridge of a ship. The program was supposed to run in real time, which meant that the video ship would be moving in the harbor, with the view slowly shifting as the ship changed position.

The student was stumped by the problem of how to handle the view when one object moved in front of another. Say the video ship was sailing past the Statue of Liberty, and behind the statue was the New York skyline. As the ship moved forward, the buildings on the skyline should appear to shift behind the statue, and the program would have to decide which parts of the buildings were blocked by the statue and find a way to turn off just those parts of the image, shaping the region of turned-off image to fit along the irregular profile of the statue. Put together dozens of objects at varying distances, all shifting in front of or behind each other, and just the calculation of what could and couldn’t be visible was bringing the computer to its knees.

“Why not do it this way?” Warnock asked, looking up from his class registration code and describing a way of solving the problem that had never been thought of before, a way so simple that it should have been obvious but had somehow gone unthought of by the brightest programming minds at the university. No big deal.

Except that it was a big deal. Dumbfounded by Warnock’s casual brilliance, the student told his professor, who told the department chairman, who told the university president, who must have told God (this is Utah, remember), because the next thing he knew, Warnock was giving talks all over the country, describing how he solved the hidden surface problem. The class registration program was forever forgotten.
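The story doesn't say what Warnock actually proposed, so treat the following as a rough sketch and not as his method. It is loosely modeled on the recursive area-subdivision idea that graphics textbooks associate with his name: if a patch of screen is simple enough to decide at a glance, paint it; otherwise cut it in four and try again. The flat rectangles, the `Rect` type, the depths, and the single-character labels are all assumptions made to keep the example small.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """A flat, screen-aligned object (hypothetical stand-in for a statue or a building)."""
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive
    depth: float  # smaller means closer to the viewer
    label: str


def overlaps(r, x0, y0, x1, y1):
    """True if rectangle r touches any part of the region."""
    return r.x0 < x1 and x0 < r.x1 and r.y0 < y1 and y0 < r.y1


def covers(r, x0, y0, x1, y1):
    """True if rectangle r blankets the whole region."""
    return r.x0 <= x0 and r.y0 <= y0 and r.x1 >= x1 and r.y1 >= y1


def render(rects, size, background="."):
    grid = [[background] * size for _ in range(size)]

    def visit(x0, y0, x1, y1, candidates):
        if x0 >= x1 or y0 >= y1:
            return  # empty region, nothing to paint
        live = [r for r in candidates if overlaps(r, x0, y0, x1, y1)]
        if not live:
            return  # only background is visible here
        nearest = min(live, key=lambda r: r.depth)
        if covers(nearest, x0, y0, x1, y1):
            # The closest object hides everything else in this region.
            for y in range(y0, y1):
                for x in range(x0, x1):
                    grid[y][x] = nearest.label
            return
        # Too complicated to decide: split into four quadrants and recurse.
        mx, my = (x0 + x1) // 2, (y0 + y1) // 2
        visit(x0, y0, mx, my, live)
        visit(mx, y0, x1, my, live)
        visit(x0, my, mx, y1, live)
        visit(mx, my, x1, y1, live)

    visit(0, 0, size, size, rects)
    return ["".join(row) for row in grid]


skyline = Rect(0, 2, 8, 6, depth=10.0, label="B")  # buildings, far away
statue = Rect(3, 1, 5, 7, depth=5.0, label="S")    # statue, in front
picture = render([skyline, statue], size=8)
print("\n".join(picture))
```

The appeal of this style of approach is that large, simple areas of the screen are settled in one step, and the expensive per-pixel work is spent only along the edges where one object passes in front of another.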

Warnock switched his Ph.D. studies from mathematics to computer science, where the action was, and was soon one of the world’s experts on computer graphics.

Computer graphics, the drawing of pictures on-screen and on-page, is very difficult stuff. It’s no accident that more than 80 percent of each human brain is devoted to processing visual data. Looking at a picture and deciding what it portrays is a major effort for humans, and often an impossible one for computers.

Jump back to that image of New York harbor, which was to be part of a ship’s pilot training simulator ordered by the U.S. Maritime Academy. How do you store a three-dimensional picture of New York harbor inside a computer? One way would be to put a video camera in each window of a real ship and then sail that ship everywhere in the harbor to capture a video record of every vista. This would take months, of course, and it wouldn’t take into account changing weather or other ships moving around the harbor, but it would be a start. All the video images could then be digitized and stored in the computer. Deciding what view to display through each video window on the simulator would be just a matter of determining where the ship was supposed to be in the harbor and what direction it was facing, and then finding the appropriate video scene and displaying it. Easy, eh? But how much data storage would it require?

Taking the low-buck route, we’ll require that the view only be in typical PC resolution of 640-by-400 picture elements (pixels), which means that each stored screen will hold 256,000 pixels.

Since this is 8-bit color (8 bits per pixel), that means we’ll need 256,000 bytes of storage (8 bits make 1 byte) for each screen image. Accepting a certain jerkiness of apparent motion, we’ll need to capture images for the video database every ten feet, and at each of those points we’ll have to take a picture in at least eight different directions. That means that for every point in the harbor, we’ll need 2,048,000 bytes of storage. Still not too bad, but how many such picture points are there in New York harbor if we space them every ten feet? The harbor covers about 100 square miles, which works out to 27,878,400 points. So we’ll need just over 57 trillion bytes of storage to represent New York harbor in this manner. Twenty years ago, when this exercise was going on in Utah, there was no computer storage system that could hold 57 trillion bytes of data or even 57 billion bytes. It was impossible. And the system would have been terrifically limited in other ways, too. What would the view be like from the top of the Statue of Liberty? Don’t know. With all the data gathered at sea level, there is no way of knowing how the view would look from a higher altitude.
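For anyone who wants to check the arithmetic, here is a small sketch that multiplies out the figures given above: screen size, color depth, eight views per point, a ten-foot grid, and a harbor of about 100 square miles. The variable names are mine; the only outside fact assumed is 5,280 feet to the mile. Run as written, the product comes to roughly 5.7 × 10^13 bytes, or about 57 trillion.

```python
# Figures taken from the text; names are illustrative only.
WIDTH, HEIGHT = 640, 400      # pixels per stored screen
BYTES_PER_PIXEL = 1           # 8-bit color
DIRECTIONS = 8                # views captured at each point
SPACING_FT = 10               # one capture point every ten feet
HARBOR_SQ_MILES = 100
FEET_PER_MILE = 5280          # assumption: statute miles

bytes_per_screen = WIDTH * HEIGHT * BYTES_PER_PIXEL
bytes_per_point = bytes_per_screen * DIRECTIONS

# One capture point per SPACING_FT x SPACING_FT patch of water.
harbor_sq_ft = HARBOR_SQ_MILES * FEET_PER_MILE ** 2
points = harbor_sq_ft // SPACING_FT ** 2

total_bytes = bytes_per_point * points

print(f"{bytes_per_screen:,} bytes per screen")
print(f"{bytes_per_point:,} bytes per point")
print(f"{points:,} points")
print(f"{total_bytes:,} bytes in all")
```

Whichever way the zeros are counted, the conclusion is the same: storing every view is hopeless, and describing the scene is the only way out.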

The problem with this type of computer graphics system is that all we are doing is storing and calling up bits of data rather than twiddling them, as we should do. Computers are best used for processing data, not just retrieving them. That’s how Warnock and his buddies in Utah solved the data storage problem in their model of New York harbor. Rather than take pictures of the whole harbor, they described it to the computer.

Most of New York harbor is empty water. Water is generally flat with a few small waves, it’s blue, and it lives its life at sea level. There, I just described most of New York harbor in eighteen words, saving us at least 50 billion bytes of storage. What we’re building here is an imaging model, and it assumes that the default appearance of New York harbor is wet. Where it’s not wet -- where there are piers or buildings or islands -- I can describe those, too, by telling the computer what the object looks like and where it is positioned in space. What I’m actually doing is telling the computer how to draw a picture of the object, specifying characteristics like size, shape, and color. And if I’ve already described a tugboat, for example, and there are dozens of tugboats in the harbor that look alike, the next time I need to describe one I can just refer back to the earlier description, saying to draw another tugboat and another and another, with no additional storage required.
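The describe-it-once idea can be sketched in a few lines. This is only an illustration of the principle, not the Utah system: the `Shape` and `Placement` types, the tugboat's dimensions, and the positions are all invented for the example. The harbor defaults to water, one tugboat description is stored, and every tugboat in the scene simply points back to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    """How to draw one kind of object (all fields hypothetical)."""
    name: str
    length_ft: float
    width_ft: float
    color: str


@dataclass(frozen=True)
class Placement:
    """Where one copy of a shape sits in the harbor."""
    shape: Shape
    x_ft: float
    y_ft: float


DEFAULT_SURFACE = "water"  # the harbor is wet unless told otherwise

# Described once...
tugboat = Shape("tugboat", length_ft=90.0, width_ft=30.0, color="red")

# ...and referred to two dozen times, with no new description stored.
harbor = [Placement(tugboat, x_ft=500.0 * i, y_ft=1200.0) for i in range(24)]


def what_is_at(x_ft, y_ft, placements):
    """Return the name of the object at a point, or the default surface."""
    for p in placements:
        if (abs(x_ft - p.x_ft) <= p.shape.length_ft / 2
                and abs(y_ft - p.y_ft) <= p.shape.width_ft / 2):
            return p.shape.name
    return DEFAULT_SURFACE


print(what_is_at(0.0, 1200.0, harbor))    # a point on the first tugboat
print(what_is_at(250.0, 1200.0, harbor))  # open water between two tugboats
```

Each extra tugboat costs only a position, which is the whole point of describing the scene instead of photographing it.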

This is the stuff that John Warnock thought about in Utah and later at Xerox PARC, where he and Martin Newell wrote a language they called JaM, for John and Martin. JaM provided a vocabulary for describing objects and positioning them in a three-dimensional database. JaM evolved into another language called Interpress, which was used to describe words and pictures to Xerox laser printers. When Warnock was on his own, after leaving Xerox, Interpress evolved into a language called PostScript. JaM, Interpress, and PostScript are really the same language, in fact, but for reasons having to do with copyrights and millions of dollars, we pretend that they are different.

In PostScript, the language we’ll be talking about from now on, there is no difference between a tugboat and the letter E. That is, PostScript can be used to draw pictures of tugboats and pictures of the letter E, and to the PostScript language each is just a picture. There is no cultural or linguistic symbolism attached to the letter, which is, after all, just a group of straight and curved lines filled in with color.

PostScript describes letters and numbers as mathematical formulas rather than as bit maps, which are just patterns of tiny dots on a page or screen. PostScript popularized the outline font, where a description of each letter is stored as a formula for lines and Bézier curves and recipes for which parts of the character are to be filled with color and which parts are not. Outline fonts, because they are based on mathematical descriptions of each letter, are resolution independent; they can be scaled up or down in size and printed in as fine detail as the printer or typesetter is capable of producing. And like the image of a tugboat, which increases in detail as it sails closer, PostScript outline fonts contain “hints” that control how much detail is given up as type sizes get smaller, making smaller type sizes more readable than they otherwise would be.
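To make "a formula for curves" concrete, here is a minimal sketch of evaluating a cubic Bézier curve by repeated interpolation (de Casteljau's construction). It is not Adobe's code, and the four control points in `ARCH` are made up. The same four points produce the curve at any size: scale them, then sample as finely as the output device deserves.

```python
def lerp(p, q, t):
    """Point a fraction t of the way from p to q."""
    return (p[0] + (q[0] - p[0]) * t, p[1] + (q[1] - p[1]) * t)


def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bezier curve at t in [0, 1] by de Casteljau's construction."""
    a, b, c = lerp(p0, p1, t), lerp(p1, p2, t), lerp(p2, p3, t)
    d, e = lerp(a, b, t), lerp(b, c, t)
    return lerp(d, e, t)


def flatten(p0, p1, p2, p3, steps):
    """Approximate the curve with steps straight segments (steps + 1 points)."""
    return [cubic_bezier(p0, p1, p2, p3, i / steps) for i in range(steps + 1)]


def scale(points, factor):
    """Scale control points; the curve scales with them."""
    return [(x * factor, y * factor) for x, y in points]


# A hypothetical arch, described once in a 1-by-1 design square.
ARCH = ((0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0))

small = flatten(*scale(ARCH, 10.0), steps=4)    # coarse device, few segments
large = flatten(*scale(ARCH, 72.0), steps=16)   # finer device, more segments

print(small)
print(large[8])  # the top of the arch at the larger size
```

Nothing about the stored description changes between the two sizes; only the scale factor and the number of samples do.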

Before outline fonts can be printed, they have to be rasterized, which means that a description of which bits to print where on the page has to be generated. Before there were outline fonts, bit-mapped fonts were all there were, and they were generated in a few specific sizes by people called fontographers, not computers. But with PostScript and outline fonts, it’s as easy to generate a 10.5-point letter as the usual 10-, 12-, or 14-point versions.
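Rasterizing can be sketched the same way. The example below is an assumption-laden toy, not how a PostScript interpreter works: it uses a straight-edged, blocky "L" I made up instead of a real glyph, ignores hinting entirely, and decides each dot by testing whether the pixel's center falls inside the outline (an even-odd crossing test). What it does show is that one outline yields a bit map at any point size, 10.5 included.

```python
# A hypothetical blocky "L" in a 1-by-1 design square, y increasing downward.
L_OUTLINE = [(0.2, 0.1), (0.4, 0.1), (0.4, 0.7), (0.8, 0.7), (0.8, 0.9), (0.2, 0.9)]


def inside(outline, x, y):
    """Even-odd test: count outline edges crossed by a ray running left from (x, y)."""
    hit = False
    n = len(outline)
    for i in range(n):
        x0, y0 = outline[i]
        x1, y1 = outline[(i + 1) % n]
        if (y0 > y) != (y1 > y):  # edge straddles the ray's height
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                hit = not hit
    return hit


def rasterize(outline, point_size, dpi=72):
    """Return rows of '#' and '.' for the outline at the given size and resolution."""
    pixels = max(1, round(point_size * dpi / 72))  # 72 points to the inch
    rows = []
    for row in range(pixels):
        y = (row + 0.5) / pixels  # sample at the pixel center
        rows.append("".join(
            "#" if inside(outline, (col + 0.5) / pixels, y) else "."
            for col in range(pixels)
        ))
    return rows


screen = rasterize(L_OUTLINE, 10)              # 10-point at 72 dots per inch
printer = rasterize(L_OUTLINE, 10.5, dpi=300)  # 10.5-point at 300 dots per inch

print("\n".join(screen))
print(len(printer), "rows at printer resolution")
```

The odd size costs nothing extra; the outline is simply sampled on a different grid.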

Warnock and his boss at Xerox, Chuck Geschke, tried for two years to get Xerox to turn Interpress into a commercial product. Then they decided to start their own company with the idea of building the most powerful printer in history, to which people would bring their work to be beautifully printed. Just as Big Blue imagined there was a market for only fifty IBM 650 mainframes, the two ex-Xerox guys thought the world needed only a few PostScript printers.

Warnock and Geschke soon learned that venture capitalists don’t like to fund service businesses, so they next looked into creating a computer workstation with custom document preparation software that could be hooked into laser printers and typesetters, to be sold to typesetting firms and the printing departments of major corporations. Three months into that business, they discovered at least four competitors were already underway with similar plans and more money. They changed course yet again and became sellers of graphics systems software to computer companies, designers of printer controllers featuring their PostScript language, and the first seller of PostScript fonts.

Adobe Systems was named after the creek that ran past Warnock’s garden in Los Altos, California. The new company defined the PostScript language and then began designing printer controllers that could interpret PostScript commands, rasterize the image, and direct a laser engine to print it on the page. That’s about the time that Steve Jobs came along.

The usual rule is that hardware has to exist before programmers will write software to run on it. There are a few exceptions to this rule, and one of these is PostScript, which is very advanced, very complex software that still doesn’t run very fast on today’s personal computers. PostScript was an order of magnitude more complex than most personal computer software of the mid-1980s. Tim Paterson’s Quick and Dirty Operating System was written in less than six months. Jonathan Sachs did 1-2-3 in a year. Paul Allen and Bill Gates pulled together Microsoft BASIC in six weeks. Even Andy Hertzfeld put less than two years into writing the system software for Macintosh. But PostScript took twenty man-years to perfect. It was the most advanced software ever to run on a personal computer, and few microcomputers were up to the task.

The mainframe world, with its greater computing horsepower, might logically have embraced PostScript printers, so the fact that the personal computer was where PostScript made its mark is amazing, and is yet another testament to Steve Jobs’s will.

The 128K Macintosh was a failure. It was an amazing design exercise that sat on a desk and did next to nothing, so not many people bought early Macs. The mood in Cupertino back in 1984 was gloomy. The Apple III, the Lisa, and now the Macintosh were all failures. The Apple II division was being ignored, the Lisa division was deliberately destroyed in a fit of Jobsian pique, and the Macintosh division was exhausted and depressed.

Apple had $250 million sunk in the ground before it started making money on the Macintosh. Not even the enthusiasm of Steve Jobs could make the world see a 128K Mac with a floppy disk drive, two applications, and a dot-matrix printer as a viable business computer system.

Apple employees may drink poisoned Kool-Aid, but Apple customers don’t.

It was soon evident, even to Jobs, that the Macintosh needed a memory boost and a compelling application if it was going to succeed. The memory boost was easy, since Apple engineers had secretly included the ability to expand memory from 128K to 512K, in direct defiance of orders from Jobs. Coming up with the compelling application was harder; it demanded patience, which was never seen as a virtue at Apple.

The application so useful that it compels people to buy a specific computer doesn’t have to be a spreadsheet, though that’s what it turned out to be for the Apple II and the IBM PC. Jobs and Sculley thought it would be a spreadsheet, too, that would spur sales of the Mac. They had high hopes for Lotus Jazz, which turned up too late and too slow to be a major factor in the market. There was, as always, a version of Microsoft’s Multiplan for the Mac, but that didn’t take off in the market either, primarily because the Mac, with its small screen and relatively high price, didn’t offer a superior environment for spreadsheet users. For running spreadsheets, at least, PCs were cheaper and had bigger screens, which was all that really mattered.

For the Lisa, Apple had developed its own applications, figuring that the public would latch onto one of the seven as the compelling application. But while the Macintosh came with two bundled applications of its own -- MacWrite and MacPaint -- Jobs wanted to do things in as un-Lisa-like a manner as possible, which meant that the compelling application would have to come from outside Apple.

Mike Boich was put in charge of what became Apple’s Macintosh evangelism program. Evangelists like Alain Rossmann and Guy Kawasaki were sent out to bring the word of Macintosh to independent software developers, giving them free computers and technical support. They hoped that these efforts would produce the critical mass of applications needed for the Mac to survive and at least one compelling application that was needed for the Mac to succeed.

There are lots of different personal computers in the world, and they all need software. But little software companies, which describes about 90 percent of the personal computer software companies around, can’t afford to make too many mistakes by developing applications for computers that fail in the marketplace. At Electronic Arts, Trip Hawkins claims to have been approached to develop software for sixty different computer types over six or seven years. Hawkins took a chance on eighteen of those systems, while most companies pick only one or two.

When considering whether to develop for a different computer platform, software companies are swayed by an installed base -- the number of computers of a given type that are already working in the world -- by money, and by fear of being left behind technically. Boich, Rossmann, and Kawasaki had no installed base of Macintoshes to point to. They couldn’t claim that there were a million or 10 million Macintoshes in the world, with owners eager to buy new and innovative applications. And they didn’t have money to pay developers to do Mac applications -- something that Hewlett-Packard and IBM had done in the past.

The pitch that worked for the Apple evangelists was to cultivate the developers’ fear of falling behind technically. “Graphical user interfaces are the future of computing,” they’d say, “and this is the best graphical user interface on the market right now. If you aren’t developing for the Macintosh, five years from now your company won’t be in business, no matter what graphical platform is dominant then.”

The argument worked, and 350 Macintosh applications were soon under development. But Apple still needed new technology that would set the Mac apart from its graphical competitors. The Lisa and the Xerox Star had not been ignored by Apple’s competitors, and a number of other graphical computing environments were announced in 1983, even before the Macintosh shipped.

VisiCorp was betting (and losing) its corporate existence on a proprietary graphical user interface and software for IBM PCs and clones called VisiOn. VisiOn appeared in November 1983, more than a year after it was announced. With VisiOn, you got a mouse, a special circuit card that was installed inside the PC, and software including three applications -- word processing, spreadsheet, and graphics. VisiOn offered no color, no icons, and it was slow -- all for a list price of $1,795. The shipping version was supposed to have been twelve times faster than the demo; it wasn’t. Developers hated VisiOn because they had to pay a big up-front fee to get the information needed to write programs (literally anti-evangelism) and then had to buy time on a Prime minicomputer, the only computer environment in which applications could be developed. VisiOn was a dud, but until it was actually out, failing in the world, it had a lot of people scared.

One person who was definitely scared by VisiOn was Bill Gates of Microsoft, who stood transfixed through three complete VisiOn demonstrations at the Comdex computer trade show in 1982. Gates had Charles Simonyi fly down from Seattle just to see the VisiOn demo, then Gates immediately went back to Bellevue and started his own project to throw a graphical user interface on top of DOS. This was the Interface Manager, later called Microsoft Windows, which was announced in 1983 and shipped in 1985. Windows was slow, too, and there weren’t very many applications that supported the environment, but it fulfilled Gates’ goal, which was not to be the best graphical environment around, but simply to defend the DOS franchise. If the world wanted a graphical user interface, Gates would add one to DOS. If they want a pen-based interface, he’ll add one to DOS (it’s called Windows for Pen Computing). If the world wants voice recognition, or multimedia, or fingerpainting input, Gates will add it to DOS, because DOS, and the regular income it provides, year after year, funds everything else at Microsoft. DOS is Microsoft.

Gates did Windows as a preemptive strike against VisiOn, and he developed Microsoft applications for the Macintosh, because it was clear that Windows would not be good enough to stop the Mac from becoming a success. Since he couldn’t beat the Macintosh, Gates supported it, and in turn gained knowledge of graphical environments. He also made an agreement with Apple allowing him to use certain Macintosh features in Windows, an agreement that later landed both companies in court.

Finally, there was GEM, another graphical environment for the IBM PC, which appeared from Gary Kildall’s Digital Research, also in 1983. GEM is still out there, in fact, but the only GEM application of note is Ventura Publisher, a popular desktop publishing package for the IBM world, ironically sold by Xerox. Most Ventura users don’t even know they are using GEM.

Apple needed an edge against all these would-be competitors, and that edge was the laser printer. Hewlett-Packard introduced its LaserJet printer in 1984, setting a new standard for PC printing, but Steve Jobs wanted something much, much better, and when he saw the work that Warnock and Geschke were doing at Adobe, he knew they could give him the sort of printer he wanted. HP’s LaserJet output looked as if it came from a typewriter, while Jobs was determined that his LaserWriter output would look like it came from a typesetter.

Jobs used $2.5 million to buy 15 percent of Adobe, an extravagant move that was wildly unpopular among Apple’s top management, who generally gave up the money for lost and moved to keep Jobs from making other such investments in the future. Apple’s investment in Adobe was far from lost though. It eventually generated more than $10 billion in sales for Apple, and the stock was sold six years later for $89 million. Still, in 1984, conventional wisdom said the Adobe investment looked like a bad move.

The Apple LaserWriter used the same laser print mechanism that HP’s LaserJet did. It also used a special controller card that placed inside the printer what was then Apple’s most powerful computer; the printer itself was a computer. Adobe designed a printer controller for the LaserWriter, and Apple designed one too. Jobs arrogantly claimed that nobody -- not even Adobe -- could engineer as well as Apple, so he chose to use the Apple-designed controller. For many years, this was the only non-Adobe-designed PostScript controller on the market. The first generation of competitive PostScript printers from other companies all used the rejected Adobe controller and were substantially faster as a result.

The LaserWriter cost $7,000, too much for a printer that would be available to only a single microcomputer. Jobs, who still didn’t think that workers needed umbilical cords to their companies, saw the logic in at least having an umbilical cord to the LaserWriter, and so AppleTalk was born. AppleTalk was clever software that worked with the Zilog chip that controlled the Macintosh serial port, turning it into a medium-speed network connection. AppleTalk allowed up to thirty-two Macs to share a single LaserWriter.

At the same time that he was ordering AppleTalk, Jobs still didn’t understand the need to link computers together to share information. This antinetwork bias, which was based on his concept of the lone computist -- a digital Clint Eastwood character who, like Jobs, thought he needed nobody else -- persisted even years later when the NeXT computer system was introduced in 1988. Though the NeXT had built-in Ethernet networking, Jobs was still insisting that the proper use of his computer was to transfer data on a removable disk. He felt so strongly about this that for the first year, he refused orders for NeXT computers that were specifically configured to store data for other computers on the network. That would have been an impure use of his machine.

Adobe Systems rode fonts and printer software to more than $100 million in annual sales. By the time they reach that sales level, most software companies are being run by marketers rather than by programmers. The only two exceptions to this rule that I know of are Microsoft and Adobe -- companies that are more alike than their founders would like to believe.

Both Microsoft and Adobe think they are following the organizational model devised by Bob Taylor at Xerox PARC. But where Microsoft has a balkanized version of the Taylor model, got second-hand through Charles Simonyi, Warnock and Geschke got their inspiration directly from the master himself. Adobe is the closest a commercial software company can come to following Taylor’s organizational model and still make a profit.

The problem, of course, is that Bob Taylor’s model isn’t a very good one for making products or profits -- it was never intended to be -- and Adobe has been able to do both only through extraordinary acts of will.

As it was at PARC, what matters at Adobe is technology, not marketing. The people who matter are programmers, not marketers. Ideologically correct technology is more important than making money -- a philosophy that clearly differentiates Adobe from Microsoft, where making money is the prime directive.

John Warnock looks at Microsoft and sees only shoddy technology. Bill Gates looks at Adobe and sees PostScript monks who are ignoring the real world -- the world controlled by Bill Gates. And it’s true; the people of Adobe see PostScript as a religion and hate Gates because he doesn’t buy into that religion.

There is a part of John Warnock that would like to have the same fatherly relationship with Bill Gates that he already has with Steve Jobs. But their values are too far apart, and, unlike Steve, Bill already has a father.

Being technologically correct is more important to Adobe than pleasing customers. In fact, pleasing customers is relatively unimportant. Early in 1985, for example, representatives from Apple came to ask Adobe’s help in making the Macintosh’s bitmapped fonts print faster. These were programmers from Adobe’s largest customer who had swallowed their pride to ask for help. Adobe said, “No.”

“They wanted to dump screens [to the printer] faster, and they wanted Apple-specific features added to the printer,” Warnock explained to me years later. “Apple came to me and said, ‘We want you to extend PostScript in a way that is proprietary to Apple.’ I had to say no. What they asked would have destroyed the value of the PostScript standard in the long term.”

If a customer that represented 75 percent of my income asked me to walk his dog, wash her car, teach their kids to read, or to help find a faster way to print bit-mapped fonts, I’d do it, even if it meant adding a couple proprietary features to PostScript, which already had lots of proprietary features -- proprietary to Adobe.

The scene with Apple was quickly forgotten, because putting bad experiences out of mind is the Adobe way. Adobe is like a family that pretends grandpa isn’t an alcoholic. Unlike Microsoft, with its screaming and willingness to occasionally ship schlock code, all that matters at Adobe is great technology and the appearance of calm.

A Stanford M.B.A. was hired to work as Adobe’s first evangelist, trying to get independent software developers to write PostScript applications. Technical evangelism usually means going on the road -- making contacts, distributing information, pushing the product. Adobe’s evangelist went more than a year without leaving the building on business. He spent his days up in the lab, playing with the programmers. His definition of evangelism was waiting for potential developers to call him, if they knew he existed at all. What’s amazing about this story is that this nonevangelist came under no criticism for his behavior. Nobody said a thing.

Nobody said anything, either, when a technical support worker occasionally appeared at work wearing a skirt. Nobody said, “Interesting skirt, Glenn.” Nobody said anything.

Some folks from Adobe came to visit InfoWorld one afternoon, and I asked about Display PostScript, a product that had been developed to bring PostScript fonts and graphics to Macintosh screens. Display PostScript had been licensed to Aldus for a new version of its PageMaker desktop publishing program called PageMaker Pro. But at the last minute, after the product was finished and the deal with Aldus was signed, Adobe decided that it didn’t want to do Display PostScript for the Macintosh after all. They took the product back, and scrambled hard to get Aldus to cancel PageMaker Pro, too. I wanted to know why they withdrew the product.

The product marketing manager for PostScript, the person whose sole function was to think about how to get people to buy more PostScript, claimed to have never heard of Display PostScript for the Mac or of PageMaker Pro. He looked bewildered.

“That was before you joined the company,” explained Steve MacDonald, an Adobe vice-president who was leading the group.

“You don’t tell new marketing people the history of their own products?” I asked, incredulous. “Or is it just the mistakes you don’t tell them about?”

MacDonald shrugged.

For all its apparent disdain for money, Adobe has an incredible ability to wring the stuff out of customers. In 1989, for example, every Adobe programmer, marketing executive, receptionist, and shipping clerk represented $357,000 in sales and $142,000 in profit. Adobe has the highest profit margins and the greatest sales per employee of any major computer hardware or software company, but such performance comes at a cost. Under the continual prodding of the company’s first chairman, a venture capitalist named Q. T. Wiles, Adobe worked hard to maximize earnings per share, which boosted the stock price. Warnock and Geschke, who didn’t know any better, did as Q. T. told them to.

Q. T. is gone now, his Adobe shares sold, but the company is trapped by its own profitability. Earnings per share are supposed to only rise at successful companies. If you earned a dollar per share last year, you had better earn $1.20 per share this year. But Adobe, where 400 people are responsible for more than $150 million in sales, was stretched thin from the start. The only way that the company could keep its earnings going ever upward was to get more work out of the same employees, which means that the couple of dozen programmers who work most of the technical miracles are under terrific pressure to produce.

This pressure to produce first became a problem when Warnock decided to do Adobe Illustrator, a PostScript drawing program for the Macintosh. Adobe’s customers to that point were companies like Apple and IBM, but Illustrator was meant to be sold to you and me, which meant that Adobe suddenly needed distributors, dealers, printers for manuals, duplicators for floppy disks—things that weren’t at all necessary when serving customers meant sending a reel of computer tape over to Cupertino in exchange for a few million dollars, thank you. But John Warnock wanted the world to have a PostScript drawing tool, and so the world would have a PostScript drawing tool. A brilliant programmer named Mike Schuster was pulled away from the company’s system software business to write the application as Warnock envisioned it.

In the retail software business, you introduce a product and then immediately start doing revisions to stay current with technology and fix bugs. John Warnock didn’t know this. Adobe Illustrator appeared in 1986, and Schuster was sent to work on other things. They should have kept someone working on Illustrator, improving it and fixing bugs, but there just wasn’t enough spare programmer power to allow that. A version of Illustrator for the IBM PC followed that was so bad it came to be called the “landfill version” inside the company. PC Illustrator should have been revised instantly, but wasn’t.

When Adobe finally got around to sprucing up the Macintosh version of Illustrator, they cleverly called the new version Illustrator 88, because it appeared in 1988. You could still buy Illustrator 88 in 1989, though. And in 1990. And even into 1991, when it was finally replaced by Illustrator 3.0. Adobe is not a marketing company.

In 1988, Bill Gates asked John Warnock for PostScript code and fonts to be included with the next version of Windows. With Adobe’s help, users would be able to see on-screen the same beautiful type that they could print on a PostScript printer. Gates, who never pays for anything if he can avoid it, wanted the code for free. He argued that giving PostScript code to Microsoft would lead to a dramatic increase in Adobe’s business selling fonts, and Adobe would benefit overall. Warnock said, “No.”

In September 1989, Apple Computer and Microsoft announced a strategic alliance against Adobe. As far as both companies were concerned, John Warnock had said “No” twice too often. Apple was giving Microsoft its software for building fonts in exchange for use of a PostScript clone that Microsoft had bought from a developer named Cal Bauer.

Forty million Apple dollars were going to Adobe each year, and clever Apple programmers, who still remembered being rejected by Adobe in 1985, were arguing that it would be cheaper to roll their own printing technology than to continue buying Adobe’s.

In mid-April, news had reached Adobe that Apple would soon announce the phasing out of PostScript in favor of its own code, to be included in the upcoming release of new Macintosh control software called System 7.0. A way had to be found fast to counter Apple’s strategy or change it.

Only a few weeks after learning of Apple’s decision, and before anything had been announced by Apple or Microsoft, Adobe announced Adobe Type Manager, or ATM: software that would bring Adobe fonts directly to Macintosh screens without the assistance of Apple, since it would be sold directly to users. ATM, which would work only with fonts (with words rather than pictures), was replacing Display PostScript, which Adobe had already tried (and failed) to sell to Apple. ATM had the advantage over Apple’s System 7.0 software that it would work with older Macintoshes. Adobe’s underlying hope was that quick market acceptance of ATM would dissuade Apple from even setting out on its separate course.

But Apple made its announcement anyway, sold all its Adobe shares, and joined forces with Microsoft to destroy its former ally. Adobe’s threat to both Apple and Microsoft was so great that the two companies conveniently ignored their own yearlong court battle over the vestiges of an earlier agreement allowing Microsoft to use the look and feel of Apple’s Macintosh computer in Microsoft Windows.

Apple-Microsoft and Apple-Adobe are examples of strategic alliances as they are conducted in the personal computer industry. Like bears mating or teenage romances, strategic alliances are important but fleeting.

Apple chose to be associated with Adobe only as long as the relationship worked to Apple’s advantage. No sticking with old friends through thick and thin here.

For Microsoft, fonts and printing technology had been of little interest, since Gates saw as important what happened inside the box, not inside the printer. Then IBM decided it wanted the same fonts in both its computers and printers, only to discover that Microsoft, its traditional software development partner, had no font technology to offer. So IBM began working with Adobe and listening to the ideas of John Warnock.

If IBM is God in the PC universe then Bill Gates is the pope. Warnock, now talking directly with IBM, was both a heretic and a threat to Gates. Warnock claimed that Gates was not a good servant of God, that Microsoft’s technology was inferior. Worse, Warnock was correct, and Gates knew it. Control of the universe in the box was at stake.

Warnock and Adobe had to die, Gates decided, and if it took an unholy alliance with Apple and a temporary putting aside of legal conflicts between Microsoft and Apple to kill Adobe, then so be it.

This passion play of Adobe, Apple, and Microsoft could have taken place between companies in many industries, but what sets the personal computer industry apart is that the products in question -- Adobe Type Manager and Apple’s System 7.0 -- did not even exist.

Battles of midsized cars or two-ply toilet tissue take place on showroom floors and supermarket shelves, but in the personal computer industry, deals are cut and share prices fluctuate on the supposed attributes of products that have yet to be written or even fully designed. Apple’s offensive against Adobe was based on revealing the ongoing development of software that users could not expect to purchase for at least a year (two years, it turned out); Adobe’s response was a program that would take months to develop.

ATM was announced, then developed, essentially by a single programmer who used to joke with the Adobe marketing manager about whether the product or its introduction would be done first.

Both companies were dueling with intentions, backed up by the conviction of some computer hacker that given enough time and junk food, he could eventually write software that looked pretty much like what had just been announced with such fanfare.

As I said, computer graphics software is very hard to do well. By the middle of 1991, Apple and Adobe had made friends again, in part because Microsoft had not been able to fulfill its part of the deal with Apple. “Our entry into the printer software business has not succeeded,” Bill Gates wrote in a memo to his top managers. “Offering a cheap PostScript clone turned out to not only be very hard but completely irrelevant to helping our other problems. We overestimated the threat of Adobe as a competitor and ended up making them an ‘enemy,’ while we hurt our relationship with Hewlett-Packard …”

Overestimated the threat of Adobe as a competitor? In a way it’s true, because the computer world is moving on to other issues, leaving Adobe behind. Adobe makes more money than ever in its PostScript backwater, but is not wresting the operating system business from Microsoft, as both companies had expected.

With its reliance on only a few very good programmers, Adobe was forced to defend its existing businesses at the cost of its future. John Warnock is still a better programmer than Bill Gates, but he’ll never be as savvy.

Reprinted with permission

Photo Credit: NinaMalyna/Shutterstock

Back in January I had the opportunity to test out BitTorrent Sync. I did not find the product to be completely ready for prime time, but I also did not find it to be terrible. I couldn't call BitTorrent Sync ready to replace my dearly departed Live Mesh, but I saw some promise, just lacking a bit of polish around the edges.

Back in January I had the opportunity to test out BitTorrent Sync. I did not find the product to be completely ready for prime time, but I also did not find it to be terrible. I couldn't call BitTorrent Sync ready to replace my dearly departed Live Mesh, but I saw some promise, just lacking a bit of polish around the edges.

Starting April 5, AT&T will carry one model of Amazon's tablet in stores, with $150 discount for those customers making a two-year contractual commitment. Just as

Starting April 5, AT&T will carry one model of Amazon's tablet in stores, with $150 discount for those customers making a two-year contractual commitment. Just as

BetaNews has learned that Facebook's "new home on Android" is not a phone, as widely rumored, but -- get this -- a smartwatch. A source with knowledge of the

BetaNews has learned that Facebook's "new home on Android" is not a phone, as widely rumored, but -- get this -- a smartwatch. A source with knowledge of the

In place of the usual Street View, there’s an amusing sepia "Telescope View" to peer through.

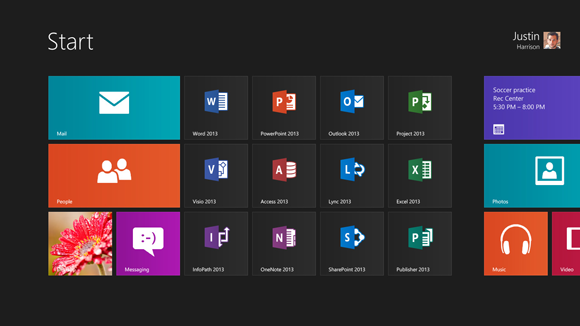

In place of the usual Street View, there’s an amusing sepia "Telescope View" to peer through. Seventeenth in a series. Love triangles were commonplace during the early days of the PC. Adobe, Apple and Microsoft engaged in such a relationship during the 1980s, and allegiances shifted -- oh did they. This installment of Robert X. Cringely's 1991 classic Accidental Empires shows how important is controlling a standard and getting others to adopt it.

Seventeenth in a series. Love triangles were commonplace during the early days of the PC. Adobe, Apple and Microsoft engaged in such a relationship during the 1980s, and allegiances shifted -- oh did they. This installment of Robert X. Cringely's 1991 classic Accidental Empires shows how important is controlling a standard and getting others to adopt it. Donald Trump is in negotiations with Fox and NBC to bring a new reality show to television, featuring corporate CEOs swapping roles. The concept is in advanced planning stages, with a mock-up pilot already shot and the two networks vying to add the series to their 2013-14 season. Normally, we don't bother with entertainment news at BetaNews, but two of the confirmed chief executives will interest our readership -- Apple's Tim Cook and Steve Ballmer of Microsoft.

Donald Trump is in negotiations with Fox and NBC to bring a new reality show to television, featuring corporate CEOs swapping roles. The concept is in advanced planning stages, with a mock-up pilot already shot and the two networks vying to add the series to their 2013-14 season. Normally, we don't bother with entertainment news at BetaNews, but two of the confirmed chief executives will interest our readership -- Apple's Tim Cook and Steve Ballmer of Microsoft.