By Angela Gunn, Betanews

Our continuing Linux-vs.-Windows series turns now to the absolute basics -- the most universal, and occasionally most important, task you will undertake with any computer. Whatever software and OS you use, whatever you do with the machine, sooner or later you're going to install, update or upgrade something. How does the process compare on the two platforms?

(Again, Mac OS folk, you're not the topic of discussion here. If you want to comment on the .dmg experience or other aspects of tending your Apple orchards, please do so in comments, civilly.)

There are two classes of people who shudder at the prospect of maintaining their machines: Civilian users like your mama (or mine, who happily ran Windows Me for years rather than go through the XP install process), and sysadmins who have to handle matters for multiple users, whose machines may or may not be physically present for it. We'll try to address both in our comparison.

Breaking it down task by task:

Installing applications

Windows applications these days, whether downloaded or installed from optical disc, tend to include installation wizards; at the very least, there's likely to be a setup.exe program in there. Click and go (or just "go" if it's an autorun).

Linux packages come in a few different wrappings, depending on your preferred flavor. The installation process for *nix packages used to be rather tedious. Many experienced users are familiar with tarballs: .tar archives, usually compressed with gzip, which serve much the same purpose as .zip files under Windows. The .tar, .gz, and .tgz extensions all indicated that you had an archive before you, which had to be unpacked before you could hunt down the README or INSTALL file inside.
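For readers who never had the pleasure, the old routine can be sketched with Python's standard `tarfile` module. The sketch opens a possibly compressed archive, walks its contents, and picks out the README or INSTALL files you were expected to read before building anything. The archive name in the usage note is hypothetical.

```python
import tarfile

# Base-name prefixes that old-style source tarballs conventionally used
# for their instructions (matched case-insensitively below).
INSTRUCTION_PREFIXES = ("README", "INSTALL")


def find_instructions(archive_path: str) -> list[str]:
    """Return the archive members that look like README/INSTALL files."""
    # Mode "r:*" lets tarfile detect gzip or bzip2 compression itself,
    # so .tar, .tar.gz and .tgz archives are all opened the same way.
    with tarfile.open(archive_path, "r:*") as archive:
        hits = []
        for member in archive.getmembers():
            if not member.isfile():
                continue  # skip directories, links and other entries
            base_name = member.name.rsplit("/", 1)[-1]
            if base_name.upper().startswith(INSTRUCTION_PREFIXES):
                hits.append(member.name)
        return sorted(hits)
```

Calling `find_instructions("openyahtzee-1.0.tar.gz")` would list the files to read. After that, the user was still on their own with whatever build steps those files described.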

Fortunately, we're past all that now, thanks to package management -- a development that brought Linux installation up to par with other operating systems on ease of use by making the install process part of the operating system, not part of the individual package. The first iteration of the genre was given the unfortunate name "pms" (package management system). Perhaps in deference to the greater needs of humanity, it was followed quickly by RPP (Red Hat Software Program Packages), Red Hat's first essay in the field. Red Hat later turned to RPM (RPM Package Manager, thank you, Unix recursivity fiends), still a going concern for the Red Hat/Fedora/RHEL contingent. Yum (Yellowdog Updater, Modified) is one of the most popular package managers for that crowd.

The Ubuntu project (which is based on Debian -- the full family name is actually Debian GNU/Linux, so you know) focused especially on making the install and upgrade process pain-free -- and in doing so actually provides a model that closed-source OS vendors would do well to follow. Like its progenitor Debian, Ubuntu uses APT (Advanced Package Tool), with Synaptic as its graphical front end, to handle installations (and, as we'll see, updates and upgrades). APT, which is one of the centerpieces of the Debian/Ubuntu usability philosophy, is the interface to the wide world of DEB packages; instead of every individual package toting its own installer, the smarts for the process lie in the OS itself. Specifically, APT manages dependencies and resolves problems with them -- a ticket out of the dreaded "dependency hell," in fact. APT sits atop dpkg, Debian's low-level package manager.

To APT, a repository looks like a collection of files, plus an index. The index tells APT (and, therefore, Synaptic) about a desired program's dependencies, the additional files required to make the thing run. (Windows users, think "dynamic link library" here.) Synaptic checks the local machine to see if any of the listed dependencies need to be retrieved along with the program itself, and it tells you before installation if it will retrieve those for you.
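The lookup Synaptic performs can be sketched as a graph walk. Start from the requested package, follow the dependency lists in the index, and collect everything that is not already installed. In this sketch a plain dict stands in for APT's real index files. The package names are hypothetical, and version constraints and alternative dependencies are ignored.

```python
from collections import deque


def packages_to_fetch(wanted: str, index: dict[str, list[str]],
                      installed: set[str]) -> list[str]:
    """Return the packages that must be downloaded to install `wanted`.

    `index` maps a package name to the names it depends on (a simplified,
    hypothetical stand-in for a repository index). The walk is breadth-first,
    so the requested package comes first, followed by its missing dependencies.
    """
    to_fetch: list[str] = []
    seen = {wanted}
    queue = deque([wanted])
    while queue:
        name = queue.popleft()
        if name in installed:
            continue  # already present; assume its own dependencies are too
        if name not in index:
            raise KeyError(f"dependency {name!r} is not available from any repository")
        to_fetch.append(name)
        for dep in index[name]:
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return to_fetch
```

In the usage below, with `libc6` and `libgtk2` already on the machine, only the game and one library would be retrieved. That is the list Synaptic shows you before it asks for confirmation.

```python
index = {"openyahtzee": ["libwxgtk", "libc6"], "libwxgtk": ["libgtk2", "libc6"],
         "libgtk2": ["libc6"], "libc6": []}
packages_to_fetch("openyahtzee", index, {"libc6", "libgtk2"})  # ['openyahtzee', 'libwxgtk']
```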

More importantly, APT handles problems in which a package's dependencies conflict with each other, are circular to each other, or are otherwise out of control. Windows users will easily recognize that mess: It's DLL Hell by another name -- and if Windows' Add/Remove Programs function (Programs and Features in Vista) behaved nicely, it would actually do this sort of dependency tracking rather than simply enquiring of the setup.exe files it finds on the computer.
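Ordering is the other half of the job. Dependencies have to be unpacked before the packages that need them. Circular dependencies must be recognized instead of being looped on forever, and declared conflicts have to stop the transaction.

The toy resolver below shows the idea with Kahn's topological sort. APT's real resolver also weighs versions, alternatives and priorities, which this sketch ignores. The `conflicts` mapping is a hypothetical simplification of the Conflicts field in package metadata.

```python
from collections import deque
from typing import Optional


class ConflictError(Exception):
    """Raised when two packages in one transaction declare a conflict."""


def install_order(deps: dict[str, list[str]],
                  conflicts: Optional[dict[str, set[str]]] = None):
    """Return (ordered, cyclic) for the packages in `deps`.

    `ordered` lists packages so that every dependency precedes its dependents.
    `cyclic` holds packages that could not be ordered because they sit on, or
    depend on, a dependency cycle and would have to be configured as a group.
    """
    conflicts = conflicts or {}
    names = set(deps)
    for pkg in names:
        clash = conflicts.get(pkg, set()) & names
        if clash:
            raise ConflictError(f"{pkg} conflicts with {sorted(clash)}")

    # For each package, count dependencies inside this transaction not yet placed.
    pending = {pkg: len(set(deps[pkg]) & names) for pkg in names}
    dependents: dict[str, list[str]] = {pkg: [] for pkg in names}
    for pkg in names:
        for dep in set(deps[pkg]) & names:
            dependents[dep].append(pkg)

    ready = deque(sorted(pkg for pkg, count in pending.items() if count == 0))
    ordered = []
    while ready:
        pkg = ready.popleft()
        ordered.append(pkg)
        for user in sorted(dependents[pkg]):
            pending[user] -= 1
            if pending[user] == 0:
                ready.append(user)
    cyclic = sorted(names - set(ordered))
    return ordered, cyclic
```

For example, `install_order({"app": ["libfoo", "libbar"], "libfoo": ["libc"], "libbar": ["libc"], "libc": []})` places `libc` first and `app` last. Two mail servers that declare a conflict, such as `postfix` and `sendmail`, would raise `ConflictError` instead of being installed side by side.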

Jeremy Garcia, founder and proprietor of LinuxQuestions.org, notes that "the newer repository-based Linux distributions have gone to great lengths to mitigate the dependency hell issue... it's something I rarely hear complaints of anymore."

For our purposes, let's look at how the process works in Ubuntu. When installing a new app, the easiest method is to fire up Synaptic Package Manager and type in the name, or even just a few descriptive terms for what you want: "yahtzee game," for instance. Synaptic knows of several software repositories -- collections of software that are carefully maintained and checked for malware and such -- and users can add third-party repositories for it to check if they choose. By default, the official repositories are signed, providing a level of quality assurance.
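Each repository Synaptic knows about corresponds to a line in APT's `/etc/apt/sources.list` (or a file under `/etc/apt/sources.list.d/`). That line takes the one-line form `deb URI suite component...`, and adding a third-party repository amounts to adding such a line.

The parser below is a simplified sketch, not APT's own parser. It handles only that basic form, drops comments, and skips an optional bracketed `[key=value ...]` options block without interpreting it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceEntry:
    kind: str              # "deb" for binary packages, "deb-src" for source
    uri: str               # base URL of the repository
    suite: str             # release codename or pocket, e.g. "karmic-updates"
    components: list[str]  # e.g. ["main", "restricted", "universe", "multiverse"]


def parse_sources_line(line: str) -> Optional[SourceEntry]:
    """Parse a one-line sources.list entry; return None for blanks/comments."""
    line = line.split("#", 1)[0].strip()  # assumes no '#' inside the URI
    if not line:
        return None
    kind, *rest = line.split()
    if kind not in ("deb", "deb-src"):
        raise ValueError(f"unknown entry type {kind!r}")
    # Skip an optional "[key=value ...]" block, which may span several tokens.
    if rest and rest[0].startswith("["):
        while rest and not rest[0].endswith("]"):
            rest = rest[1:]
        rest = rest[1:]
    if len(rest) < 2:
        raise ValueError("expected at least a URI and a suite")
    uri, suite, *components = rest
    return SourceEntry(kind, uri, suite, components)
```

The component names at the end of each line are the repository categories Ubuntu uses to sort software by support status and licensing.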

Some people find the Linux package management tools and repositories confusing, and some of that is due to the creative (and sometimes silly) naming of the tools. When the rubber hits the road, though, it's not that complicated. RPM, YUM, RHN, and several others all relate to management of packages in RPM format. APT, Synaptic, Ubuntu Update Manager, Canonical's commercial package manager -- all of these relate to management of packages in the Debian DEB format. And if you happen to want a package that's only available in RPM format for your Debian system (or vice versa) you can use a utility called Alien to translate between the two package formats, and keep everything on your system under the watchful eye of your chosen package manager.

There are four types of repositories in the Ubuntu universe: main, restricted, universe and multiverse. Main repositories hold officially supported software. Restricted software is for whatever reason (local laws, patent issues) not available under a completely free license, and you will want to know why before you install it. ("Free" in this case doesn't mean free-like-beer but free-like-speech; if a package may not be examined, modified, and improved by the community, it's not free.) Ubuntu has sorted matters out in this fashion, but once again it's a wide Linux world out there, and you're apt to encounter other terminology if you choose other flavors of the OS.

Software in the "universe" repository isn't official, but is maintained by the community; sometimes particularly popular and well-supported packages are promoted from universe to main. And many "multiverse" wares (e.g., closed-source drivers required to play DVDs on an open-source system) are not free-like-speech; you'll need to be in touch with the copyright holder to find out your responsibilities there. Many repositories of any stripe are signed with GPG keys to authenticate identity; APT looks for that authentication and warns users if it's not available.
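On Debian-style repositories the authentication works as a chain of three links:

- The repository's `Release` file is GPG-signed.
- That file lists checksums of the package indexes.
- Each index entry lists a checksum for its `.deb`.

The sketch below covers only the two checksum links, using Python's `hashlib`. Verifying the GPG signature on the `Release` file is deliberately not shown and is assumed to have been done already by a real OpenPGP tool. The paths and contents in the test are hypothetical.

```python
import hashlib


class VerificationError(Exception):
    """Raised when a file is unlisted or its checksum does not match."""


def check_sha256(name: str, data: bytes, expected: dict[str, str]) -> None:
    """Raise unless `data` hashes to the SHA-256 value recorded for `name`."""
    if name not in expected:
        raise VerificationError(f"{name} is not listed; refusing to trust it")
    if hashlib.sha256(data).hexdigest() != expected[name]:
        raise VerificationError(f"{name}: checksum mismatch")


def parse_packages_index(text: str) -> dict[str, str]:
    """Map each Filename to its SHA256 field in a Packages-style index.

    Simplified: stanzas are separated by blank lines, and continuation
    lines (which start with whitespace) are ignored.
    """
    hashes = {}
    for stanza in text.strip().split("\n\n"):
        fields = {}
        for line in stanza.splitlines():
            if line.startswith((" ", "\t")):
                continue
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "Filename" in fields and "SHA256" in fields:
            hashes[fields["Filename"]] = fields["SHA256"]
    return hashes


def verify_download(release_hashes: dict[str, str], index_path: str, index_data: bytes,
                    deb_path: str, deb_data: bytes) -> None:
    """Check a .deb against an index whose hash appears in the Release file.

    `release_hashes` is ASSUMED to come from a Release file whose GPG
    signature was already verified; this sketch does not perform that step.
    """
    check_sha256(index_path, index_data, release_hashes)   # Release -> index
    package_hashes = parse_packages_index(index_data.decode("utf-8"))
    check_sha256(deb_path, deb_data, package_hashes)       # index -> .deb
```

Because each link vouches for the next, a single signature on a small `Release` file is enough to detect tampering with any package it covers.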

To the end user, all this looks like: Open Synaptic. Type in search term. Select stuff that looks cool. Click "Apply." Done. Because the default repositories are actively curated, there's very little danger of malware; because the packages themselves must conform to Debian's install rules, the end user needs to do little to nothing to complete the process; because APT manages every file and configuration component completely, every package can be updated or removed completely without breaking the rest of the system. The applications are even sorted appropriately; my new Open Yahtzee game (I really do knock myself out for you people, don't I) appeared under Games with no prompting from me at all.
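The clean-removal claim rests on bookkeeping. dpkg records which files each package installed (on Debian systems, in per-package `.list` files under `/var/lib/dpkg/info/`). Removing a package can therefore delete exactly those files and nothing another package needs.

The class below is a hypothetical in-memory model of that bookkeeping, not dpkg's actual code. Like dpkg in its default mode, it refuses to install a package whose files are already owned by a different package.

```python
class FileRegistry:
    """Toy model of per-package file ownership tracking."""

    def __init__(self) -> None:
        self.owner: dict[str, str] = {}       # path -> package that installed it
        self.files: dict[str, set[str]] = {}  # package -> paths it installed

    def install(self, package: str, paths: list[str]) -> None:
        # Check every path first so a failed install changes nothing.
        for path in paths:
            current = self.owner.get(path)
            if current is not None and current != package:
                raise FileExistsError(f"{path} is already owned by {current}")
        for path in paths:
            self.owner[path] = package
        self.files.setdefault(package, set()).update(paths)

    def remove(self, package: str) -> set[str]:
        """Forget `package` and return exactly the paths that should be deleted."""
        paths = self.files.pop(package, set())
        for path in paths:
            del self.owner[path]
        return paths
```

A game's menu entry is one of those tracked files: a freedesktop `.desktop` file whose `Categories` field tells the desktop which menu to place it under. That is why it disappears along with the game.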

If you're determined, you can still do old-style installs in Debian, circumventing APT. If you're compiling your own software or installing some truly paleolithic code, you can end up scattering files and such all around your system, none of it tracked by APT. But you've really got to try.

Updating applications

Microsoft some years ago combined its two main update services -- Windows Update and Office Update -- into one big Microsoft Update service. (For administrators, there's WSUS, which gives sysadmins greater control over updates.) And then the other programs folks use on Windows often have their own update processes of greater or lesser frequency and persistence.

Linux offers a few choices for managing your updates, but in Ubuntu, again, the method of choice is Synaptic. The system periodically checks online for updates to all the applications it sees on your system. Updates fall into four categories: critical security updates, recommended updates for serious problems not related to security, pre-released updates (mmmm, beta), and unsupported updates, which are mainly newer versions of software backported to older Ubuntu releases. Most users will automatically install only the updates in the first two categories.
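In Ubuntu these four categories correspond to separate archive "pockets": the release name plus a suffix. For Karmic they are `karmic-security`, `karmic-updates`, `karmic-proposed` and `karmic-backports`. Taking only the first two, as most users do, amounts to a filter like the one below. The `Update` records and version strings are hypothetical.

```python
from dataclasses import dataclass

# Pockets most users apply automatically: security fixes and recommended updates.
DEFAULT_POCKETS = ("security", "updates")


@dataclass(frozen=True)
class Update:
    package: str
    version: str
    suite: str  # e.g. "karmic-security"


def pocket_of(suite: str) -> str:
    """Return the pocket suffix of a suite name ('' for the bare release)."""
    _, sep, pocket = suite.partition("-")
    return pocket if sep else ""


def select_updates(pending: list[Update],
                   pockets: tuple[str, ...] = DEFAULT_POCKETS) -> list[Update]:
    """Keep only the pending updates that come from the chosen pockets."""
    return [u for u in pending if pocket_of(u.suite) in pockets]
```

Adding `"proposed"` to `pockets` would be the equivalent of opting in to the pre-released (beta) updates.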

The process is otherwise identical to installation -- click and go. It is recommended, by the way, that users always apply available updates before upgrading either individual programs or the OS.

Backing up applications

Windows users installing from optical disc are wise to keep those discs in case they're needed later (along with any required license keys, of course). Because of the way the repository model works, Linux users may choose to simply rely on those servers. However, the program APTonCD makes it quite simple to create discs with backup copies of the packages installed on your system. The Ubuntu system must, however, be told about the specific disc from which you wish to install. That's a three-step system-administration process, and you will need to have the actual disc in hand and ready to drop into the machine. (APTonCD can also help you burn discs of packages you don't have on your system, if you want to hand someone a nice clean install disc, for instance. That's useful because, as Jeremy Garcia points out, "There are many corporate environments where Internet access is not available, for a variety of reasons.")

Or, if you like, on the basic Synaptic menu, there's an option to export a list of every blessed item on your system that APT is tracking. Take that list to another machine, import it, and Synaptic will install everything on the list, including appropriate updates, from the repositories.
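The exported list is essentially package names paired with a desired state. From the command line, `dpkg --get-selections` prints a similar list, one `name<whitespace>state` pair per line, and Synaptic's saved markings use a comparable layout. The sketch below parses such a list and works out what the second machine still lacks. The package names are illustrative.

```python
def parse_selections(text: str) -> dict[str, str]:
    """Parse 'name state' lines, as printed by `dpkg --get-selections`."""
    selections = {}
    for line in text.splitlines():
        parts = line.split()  # tolerates tabs or runs of spaces
        if len(parts) == 2:
            name, state = parts
            selections[name] = state
    return selections


def missing_packages(exported: str, installed_here: set[str]) -> list[str]:
    """Packages marked 'install' on the source machine but absent here."""
    wanted = {name for name, state in parse_selections(exported).items()
              if state == "install"}
    return sorted(wanted - installed_here)
```

On the target machine, the usual command-line route is to feed the list to `dpkg --set-selections` and then run `apt-get dselect-upgrade`. That fetches the missing packages from the repositories.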

Rolling back applications

Though the Internet often provides little recourse when one seeks an earlier version of a Windows program one didn't have the sense to back up before an ill-advised install, Linux offers varying degrees of rollback ease, depending on the distribution and the applications you're dealing with.

Debian / Ubuntu leaders made a decision that new packages would not automatically uninstall older versions. This may or may not present tidying-up challenges for tiny-disked systems -- in my own experience I find that the Computer Janitor utility does an adequate job of keeping things in check -- but it certainly makes it easier to revert to an earlier version of a particular program.

Updating the OS

Minor updates to Windows are pushed out about once a month, or more often when Microsoft chooses to release an out-of-cycle patch. Major updates -- the Service Packs -- are less frequent. Desktop systems are often configured to automatically install updates when they become available, while Windows servers are typically configured to notify administrators of updates without installing them, so that proper testing can occur.

In Linux, the operating system and the kernel are constantly being updated. That doesn't mean you need to update every time something changes, and, as with Windows, there are perfectly good reasons to wait: an upgrade can occasionally cause confusion with dependencies and break third-party software (especially, Murphy's Law being what it is, on production machines). On the other hand, tiny performance improvements, support for newer gadgets, and assorted bug fixes may mean you find the prospect of frequently freshened kernels appealing, especially if you're not doing the installation for any machine but your own. And many sysadmins would in any case like to automate the update process as much as possible for civilian users.

One good reason to update your kernel is to prepare for a larger upgrade; while a major version upgrade can't easily be rolled back, the kernel, modules or specific applications all can. A cautious or curious sysadmin could get a preview of how a newer version of the OS treats an older application by upgrading the kernel, checking its behavior, then testing individual applications to see how they behave, rather than upgrading the whole shebang and hoping for the best.

In related thinking, smart Linux users put /home/ -- the directory for data and documents -- on a separate partition from the OS installation. That way, changes to the OS -- up to and including switching to an all-new Linux distribution -- don't necessarily require you to reconfigure all applications and reload all your documents, photos, and other user data.
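Whether `/home` really lives on its own partition can be checked quickly by comparing device IDs. Two paths on the same filesystem report the same `st_dev` from `os.stat`. This minimal sketch is a quick check rather than a full partition audit. Bind mounts and Btrfs subvolumes, for example, can make the device ID a less reliable signal, and `os.path.ismount` is a related check for whether a path is a mount point.

```python
import os


def on_separate_filesystem(path: str, root: str = "/") -> bool:
    """True if `path` lives on a different filesystem (device) than `root`."""
    return os.stat(path).st_dev != os.stat(root).st_dev
```

On a machine set up as described, `on_separate_filesystem("/home")` would be expected to return `True`.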

Upgrading the OS

Late October is going to be a big time for you no matter which OS you use; Windows 7 is due for release on the 22nd, while Ubuntu is expected to level up to Karmic Koala (did we mention the amusement factor in Ubuntu's naming system?) on the 29th.

Whether you think Windows or Linux has the edge here perhaps depends on what you expect from a large install process. With Windows, the process goes relatively well if you remember to do your BIOS upgrades before you start (and are sure your current version can be upgraded to the new one). Linux upgrades must generally be done in lockstep: if you're more than one version behind, you'll have to install all the intervening versions until you're up to date. (Ubuntu's long-term-support releases are the exception; one LTS release can upgrade directly to the next.) On the other hand, Linux upgrades can be done over the Net if you like, whereas when upgrading Windows you're wise to get offline completely.
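The lockstep rule for Ubuntu can be sketched as a walk along the release list. The sketch also models Ubuntu's long-term-support policy, under which one LTS release upgrades directly to the next. The list covers Dapper (6.06 LTS) through Karmic (9.10) only, and the function is an illustration rather than the logic of Ubuntu's actual upgrade tool.

```python
# Ubuntu releases from Dapper (6.06) to Karmic (9.10), oldest first.
# The boolean marks long-term-support (LTS) releases.
RELEASES = [
    ("dapper", True), ("edgy", False), ("feisty", False), ("gutsy", False),
    ("hardy", True), ("intrepid", False), ("jaunty", False), ("karmic", False),
]
NAMES = [name for name, _ in RELEASES]
LTS = {name for name, is_lts in RELEASES if is_lts}


def upgrade_path(current: str, target: str) -> list[str]:
    """Return the releases to install, in order, ending with `target`."""
    i, goal = NAMES.index(current), NAMES.index(target)
    if goal < i:
        raise ValueError("downgrades are not supported")
    path = []
    while i < goal:
        next_lts = next((j for j in range(i + 1, goal + 1) if NAMES[j] in LTS), None)
        if NAMES[i] in LTS and next_lts is not None:
            i = next_lts  # LTS-to-LTS upgrades skip the releases in between
        else:
            i += 1        # otherwise, one release at a time
        path.append(NAMES[i])
    return path
```

For example, `upgrade_path("intrepid", "karmic")` gives `["jaunty", "karmic"]`. A Dapper user heading for Karmic would go `["hardy", "intrepid", "jaunty", "karmic"]`.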

Backing up the OS

Windows users who purchase their machines with the OS pre-loaded used to be supplied with rescue disks in case of disaster; these days, it's on a partition on the hard drive itself (and heaven help you if the drive fails). Various good options exist for backing up one's Registry and system files in case of trouble. But things can get a little awkward (or expensive) when it's time to start absolutely fresh with a clean install. (And every machine needs to do that now and then.) Did you save your license key? If the Debian / Ubuntu effort made nothing else simpler, the "free" part ensures a lot less drama when chaos strikes.

Rolling back the OS

It happens: You need to be where you were, not where you are. In Windows, you're hosed; format and start again. In Linux... you're still hosed. You can, however, roll back the kernel as mentioned. (In fact, you're not really rolling back the kernel itself; the upgrade process leaves the old kernel in there, available from the boot loader just in case. They're only about 10-15 MB, after all; you have room.) That's rather helpful for testing purposes, and can save you some unpleasant surprises with individual applications; careful use of the testing technique described above may well spare you the need to roll back at all. (Also, this is an excellent time to have done that /home/ partition we mentioned.)
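Each kernel is installed as its own versioned package (for example, `linux-image-2.6.31-14-generic`), so old ones accumulate next to the new. A cleanup tool in the spirit of Computer Janitor has to decide which of them to keep.

The sketch below assumes one retention policy; it is not any tool's documented rule. It keeps the running kernel, anything newer, and the newest older kernel as a boot-loader fallback, and flags everything else as removable.

```python
import re

# Ubuntu-style kernel versions such as "2.6.31-14-generic" or "2.6.28-11-server".
_KERNEL = re.compile(r"^(\d+)\.(\d+)\.(\d+)-(\d+)-([\w-]+)$")


def kernel_key(version: str) -> tuple[int, ...]:
    """Numeric sort key (major, minor, patch, ABI number) for a kernel version."""
    match = _KERNEL.match(version)
    if match is None:
        raise ValueError(f"unrecognised kernel version {version!r}")
    return tuple(int(g) for g in match.groups()[:4])


def removable_kernels(installed: list[str], running: str) -> list[str]:
    """Return installed kernels that could be removed, oldest first."""
    ordered = sorted(installed, key=kernel_key)
    pos = ordered.index(running)
    keep = set(ordered[pos:])       # the running kernel and anything newer
    if pos > 0:
        keep.add(ordered[pos - 1])  # newest older kernel as a fallback boot entry
    return [k for k in ordered if k not in keep]
```

Sorting numerically rather than as text matters here. As strings, `2.6.31-10` would sort after `2.6.31-9`, but only by accident of the first character, and the comparison breaks for other version pairs.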

Some versions of RPM support transaction rollback, although the feature is disabled by default; dpkg has no built-in equivalent. In many cases, though, it's possible to force-install an older version of the package you're having issues with.
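Picking "an older version" depends on how Debian orders version strings, which is less obvious than it looks. An optional `epoch:` prefix outranks everything else, and a tilde sorts before anything, even the end of the string, so `1.0~rc1` is older than `1.0`.

The following is a Python port of the comparison algorithm dpkg uses, as described in Debian Policy. It is a sketch for illustration; on a real system, `dpkg --compare-versions` is the authoritative check. Once a version is chosen, APT accepts it explicitly in the form `apt-get install package=version`.

```python
from functools import cmp_to_key
from typing import Optional

_DIGITS = "0123456789"


def _order(c: str) -> int:
    """Rank one character as dpkg does ('' stands for end of string)."""
    if c == "" or c in _DIGITS:
        return 0
    if c.isascii() and c.isalpha():
        return ord(c)          # letters sort before other symbols
    if c == "~":
        return -1              # tilde sorts before everything, even the end
    return ord(c) + 256


def _verrevcmp(a: str, b: str) -> int:
    """Compare upstream or revision strings; the sign of the result matters."""
    i = j = 0
    while i < len(a) or j < len(b):
        # Non-digit run: compare character by character using _order.
        while (i < len(a) and a[i] not in _DIGITS) or (j < len(b) and b[j] not in _DIGITS):
            ac = _order(a[i] if i < len(a) else "")
            bc = _order(b[j] if j < len(b) else "")
            if ac != bc:
                return ac - bc
            i += 1
            j += 1
        # Digit run: compare numerically, ignoring leading zeros.
        while i < len(a) and a[i] == "0":
            i += 1
        while j < len(b) and b[j] == "0":
            j += 1
        first_diff = 0
        while i < len(a) and a[i] in _DIGITS and j < len(b) and b[j] in _DIGITS:
            if not first_diff:
                first_diff = ord(a[i]) - ord(b[j])
            i += 1
            j += 1
        if i < len(a) and a[i] in _DIGITS:
            return 1           # a's number has more digits, so it is larger
        if j < len(b) and b[j] in _DIGITS:
            return -1
        if first_diff:
            return first_diff
    return 0


def _split(version: str) -> tuple[int, str, str]:
    """Split '[epoch:]upstream[-revision]' into its three parts."""
    epoch_text, colon, rest = version.partition(":")
    epoch = int(epoch_text) if colon else 0
    if not colon:
        rest = version
    upstream, dash, revision = rest.rpartition("-")
    if not dash:
        upstream, revision = rest, ""
    return epoch, upstream, revision


def compare_versions(a: str, b: str) -> int:
    """Return -1, 0 or 1 as Debian version `a` is older, equal or newer than `b`."""
    ea, ua, ra = _split(a)
    eb, ub, rb = _split(b)
    if ea != eb:
        return -1 if ea < eb else 1
    result = _verrevcmp(ua, ub) or _verrevcmp(ra, rb)
    return (result > 0) - (result < 0)


def newest_older_version(installed: str, available: list[str]) -> Optional[str]:
    """Pick the newest version in `available` that is older than `installed`."""
    older = [v for v in available if compare_versions(v, installed) < 0]
    return max(older, key=cmp_to_key(compare_versions)) if older else None
```

For instance, with `2.0-1` installed and `1.0-1`, `1.5-2` and `2.1-1` also available, `newest_older_version` returns `1.5-2` as the rollback candidate.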

So what's the verdict? For maintenance of applications and the operating system with minimum pain and maximum control, the answer to this Can Linux Do This question is YES, and well enough that Microsoft and other closed-source shops ought to be taking notes.

Copyright Betanews, Inc. 2009

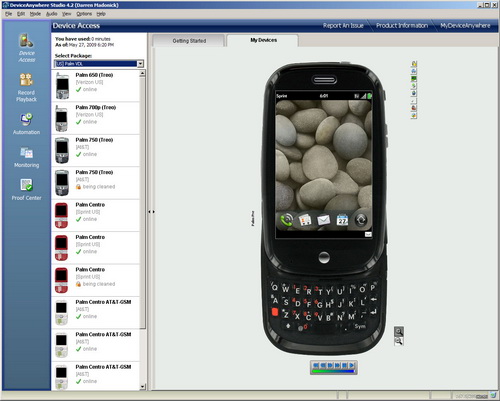

So it comes as no surprise that barely a month after issuing a support note containing a thinly veiled warning against the practice, Apple has officially and concretely responded to Palm's opening move by releasing an update to iTunes. Known as version 8.2.1, the new code specifically kills the ability of earlier versions of iTunes to sync content on non-Apple devices. Palm Pre users with a particular addiction to iTunes now face a short list of inelegant alternatives: They can either elect to never upgrade iTunes beyond 8.2, or revert to dragging and dropping their music files onto their device. If they're feeling really adventurous, they can always pull out that scuffed up first-generation Shuffle from the junk drawer and go to town. Either way, the Pre's days as a wannabe-iPod are over.

So it comes as no surprise that barely a month after issuing a support note containing a thinly veiled warning against the practice, Apple has officially and concretely responded to Palm's opening move by releasing an update to iTunes. Known as version 8.2.1, the new code specifically kills the ability of earlier versions of iTunes to sync content on non-Apple devices. Palm Pre users with a particular addiction to iTunes now face a short list of inelegant alternatives: They can either elect to never upgrade iTunes beyond 8.2, or revert to dragging and dropping their music files onto their device. If they're feeling really adventurous, they can always pull out that scuffed up first-generation Shuffle from the junk drawer and go to town. Either way, the Pre's days as a wannabe-iPod are over. Our continuing Linux-vs.-Windows series turns now to the absolute basics -- the most universal, and occasionally most important, task you will undertake with any computer. Whatever software and OS you use, whatever you do with the machine, sooner or later you're going to install, update or upgrade something. How does the process compare on the two platforms?

Our continuing Linux-vs.-Windows series turns now to the absolute basics -- the most universal, and occasionally most important, task you will undertake with any computer. Whatever software and OS you use, whatever you do with the machine, sooner or later you're going to install, update or upgrade something. How does the process compare on the two platforms? Backing up applications

Backing up applications

July 15, 2009, 9:08 am PDT • It was inevitable that Facebook would break the quarter-billion barrier, and it took only three months to get that last 50 million, according to

July 15, 2009, 9:08 am PDT • It was inevitable that Facebook would break the quarter-billion barrier, and it took only three months to get that last 50 million, according to

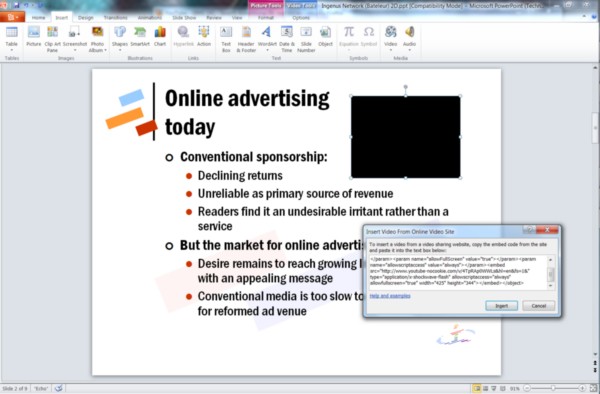

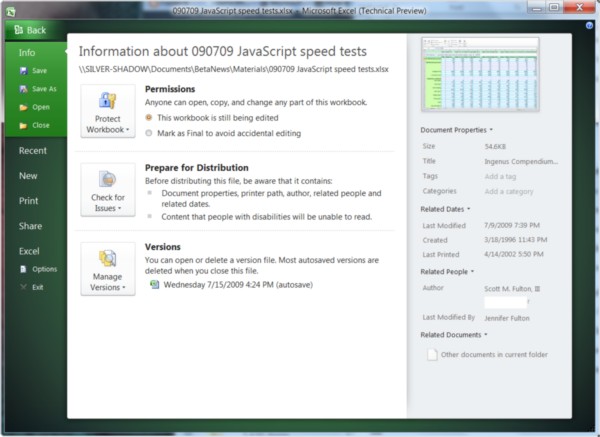

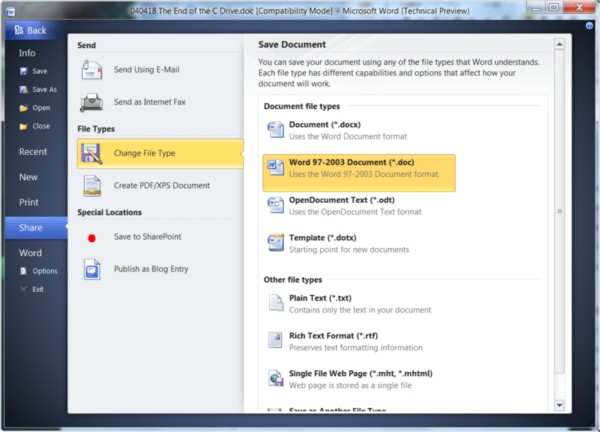

The question has been asked, who really needs to use Microsoft Office these days? The answer is, anyone who is in the business of professionally generating content for a paying customer. Word 2010 may not be the optimum tool for the everyday blogger, and Excel 2010 maybe not the best summer trip planner, just as a John Deere is not the optimum vehicle for a trip to the grocery store. But in recent years, Microsoft is the only software producer that has come close to understanding what professional content creators require in their daily toolset.

The question has been asked, who really needs to use Microsoft Office these days? The answer is, anyone who is in the business of professionally generating content for a paying customer. Word 2010 may not be the optimum tool for the everyday blogger, and Excel 2010 maybe not the best summer trip planner, just as a John Deere is not the optimum vehicle for a trip to the grocery store. But in recent years, Microsoft is the only software producer that has come close to understanding what professional content creators require in their daily toolset.

4. Restrict Editing command in Word 2010. Many publishing organizations use Word as their principal tool for editing textual content, which means collaborators shuttle multiple documents between authors, editors, and proofreaders. Microsoft's collaboration tools are supposed to enable only certain parties to make changes. But in the publishing business, formatting codes are the keys to the final formatting of a production document, and if someone who has access rights can change those paragraph formats, even accidentally (which is easier to accomplish than you might imagine, thanks to customizable document templates belonging to each user), the entire production process can be held up, sometimes for days, while formatters work out the kinks.

4. Restrict Editing command in Word 2010. Many publishing organizations use Word as their principal tool for editing textual content, which means collaborators shuttle multiple documents between authors, editors, and proofreaders. Microsoft's collaboration tools are supposed to enable only certain parties to make changes. But in the publishing business, formatting codes are the keys to the final formatting of a production document, and if someone who has access rights can change those paragraph formats, even accidentally (which is easier to accomplish than you might imagine, thanks to customizable document templates belonging to each user), the entire production process can be held up, sometimes for days, while formatters work out the kinks.

But since this is a security column, let us view the proposal through that lens. Because honestly, someone should have before this "just a concept, an idea" piece was ever released to a nation that needs serious thought about fixing its schools, rather than dreamy-eyed drooling over a gadget.

But since this is a security column, let us view the proposal through that lens. Because honestly, someone should have before this "just a concept, an idea" piece was ever released to a nation that needs serious thought about fixing its schools, rather than dreamy-eyed drooling over a gadget.