Items by Vivek Vahie

BetaNews.Com

-

What you need to consider before adopting Microsoft Office 365

Published: November 15, 2016, 8:39pm CET by Vivek Vahie

The cloud-based office productivity software market is expected to reach $17 billion in 2016. That’s more than a 400 percent increase from 2009 when the market was valued at $3.3 billion. With the success of cloud-based applications and their pay-as-you-go model, it shouldn’t be surprising that the cloud version of one of the most widely used desktop application packages would grow to become a popular choice for businesses. I’m of course referring to Office 365, Microsoft’s leading productivity and work software package delivered via the cloud.

What’s the Fuss?

Mobile devices and cloud technologies at large have drastically changed the…

-

Moving legacy apps to the cloud: Why and how you should do it

Published: November 10, 2015, 4:33pm CET by Vivek Vahie

With shrinking IT budgets it is crucial that businesses ensure they are spending their IT budget wisely. As a result, organizations are already taking advantage of the economies of scale that cloud computing offers.

However, despite the benefits being clear, businesses still have applications running on legacy environments. Moving legacy applications to the cloud is one way to reduce operating costs and relieve staff from managing tasks that are better served in the cloud, freeing them to focus on tasks that contribute to business growth.

The Key Drivers for Moving Legacy Apps to the Cloud:

- The high cost -- maintaining legacy applications is eating into a huge chunk of IT budgets

- Accessibility and availability -- businesses need applications to be accessible from anywhere and at any time, which many legacy systems do not support

- Internal support -- it is becoming increasingly difficult for staff to support applications using outdated technology

- Flexibility and scalability -- businesses need to be able to adapt quickly to keep up with customer demands, which ultimately affects their growth

Moving legacy applications to the cloud is a great alternative for many organizations due to the flexible nature of the cloud and its potential to reduce operational costs. Migration isn’t painless and can be complicated, costly and lengthy depending on the applications and the approach taken. However, most find that this investment of time and resources is worth it when the long term gains are considered.

Key Considerations When Planning

- Understand the complexities involved in the current legacy system and identify the features that are required or that need to be enhanced

- Understand the cost, time and resource constraints -- this can help you to decide the number of components of the existing application that will be transferred to the cloud

- Plan for testing the application -- this is a critical part of the process, because moving the application to the cloud involves multiple parameters that need to be tested before it can go live

- Evaluate and ensure that the upgrade doesn’t interfere with existing SLAs or compliance and security needs

- Identify the applications that would make the best fit to be transferred to the cloud. Although some applications can seem to be a good fit they still might require tweaking and multiple testing cycles

Ways of Moving to the Cloud

There are multiple ways to move to the cloud and a number of other considerations to think about before embarking on a big project like cloud migration. Here are a few of the available options:

- Completely re-engineering the identified application making it cloud enabled and in the process enhancing the application features to better meet the needs of the business

- Hybrid model -- making subtle changes to the identified application architecture and porting elements of the application onto the cloud

- Moving to a SaaS model -- replacing the identified application with something that is already available on the cloud; sometimes it is prudent to replace in-house apps as opposed to re-engineering them

You will also have to decide whether to choose a Private, Public or Hybrid cloud model for hosting your applications. This decision should be based upon your security requirements and the level of control the application requires.

One of the most critical parts of the process when moving the applications to the cloud is choosing the right vendor to work with, as the migration can have a high overhead from a cost, time and skill perspective.

Migration requires engineering skills, application expertise, technology know-how and the ability to stay within budget. The right vendor partner should help you make well-informed decisions about the approach to migration and assist with some of the complexities involved.

It is also important to ensure that the vendor you choose has the capabilities to manage and host your cloud enabled applications whilst helping you to control the levels of cost, SLAs and security that your business needs.

Once you have evaluated, planned and deployed applications in the cloud, you will soon be able to realize the benefits of the migration, with IT budgets freed up and staff available to be deployed on value-add projects.

Vivek Vahie is senior director at NaviSite India.

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Image Credit: Khakimullin Aleksandr/Shutterstock

-

Why businesses should embrace the hybrid cloud

Published: May 22, 2015, 9:48am CEST by Vivek Vahie

When cloud technology started to gain traction with businesses the main concerns expressed were over data security and control. Customers questioned what compromises they would have to make with their on-premise infrastructure to reap the benefits of cloud computing.

However, the cloud has developed significantly over the past few years, and the emergence of hybrid cloud has allowed businesses to reap the benefits of lower cost public cloud offerings whilst keeping control of their most prized and sensitive data on-premise. Hybrid cloud is any combination of public and private computing combined with existing on-premise infrastructure which is tailored to fit each individual business’ needs. With hybrid cloud, organizations are able to invest in both public and private cloud offerings from different vendors, giving them more flexibility and control.

These platforms operate independently whilst cross communicating with one another, allowing data to be shared. Hybrid cloud allows businesses to find the balance between their in-house IT infrastructure and public cloud services.

Due to the flexibility and potential cost savings that the hybrid cloud model offers, we are seeing businesses of all sizes and from all sectors shifting towards the adoption of these solutions. In a recent NaviSite survey, a staggering 89 percent of respondents signified that deploying some sort of private cloud and hybrid infrastructure is a key priority for them in 2015.

There has been a particular interest from vertical markets which are looking to outsource some of their IT infrastructure in order to focus on other business demands. The survey also revealed that 31 percent of UK respondents said that a service provider’s ability to unlock tangible cost savings on existing IT spend was one of the top reasons why they are considering the investment.

This is where the hybrid cloud model can really benefit businesses which are looking to reduce IT spending without losing the top focus of reliability, continuity, security, compliance and agility.

Although hybrid cloud has a clear set of benefits it can be difficult to implement. We wish that deploying a Hybrid Cloud model was as simple as creating a VPN tunnel between the two solutions and making it functional, but unfortunately it is not as simple and straightforward as that. There are challenges to ensure compatibility of the chosen cloud solutions and existing in-house infrastructure -- both at a networking layer as well as the application layer.

The design needs to be well crafted to ensure that the differing infrastructure models can be fully integrated. This represents a paradigm shift from the traditional cloud computing model which positions all of IT operating from the cloud to a whole new realm of possibilities that never existed before and can now be explored.

The hybrid cloud model opens up a lot of opportunities for business, from scalability to agility, while also delivering cost effectiveness. It can be a highly rewarding experience if it is successfully deployed; however, it can be frustrating to set up, which can keep the business from reaping the benefits of the hybrid model.

There is a clear appetite for businesses who want to take cloud offerings to the next level -- and that is exactly what a hybrid cloud solution provides. The model of Infrastructure-as-a-Service is now commoditising with vendors offering services to quickly migrate legacy infrastructure to an existing cloud option -- whether private or public.

There are customers in specific verticals who have been reluctant to move to the cloud primarily because of control and security concerns. With the development of hybrid cloud, businesses are now able to move a considerable amount of their infrastructure to the cloud whilst still remaining in control of their most sensitive data. This also allows cautious customers to follow a staggered approach, evaluating all the possibilities on offer to them.

The hybrid cloud model provides a lot of opportunities for businesses -- from scalability to agility -- at the same time as offering a cost effective solution.

The key to deploying a successful hybrid cloud model is to fully understand which datasets and applications are best suited to physical hosting and which to a cloud environment, and to work with trusted providers to ensure that you can capitalize on the best platform for your business needs in a fully integrated manner.

Vivek Vahie is senior director at NaviSite India.

Photo Credit: Oleksiy Mark/Shutterstock

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

-

Why Virtual Desktop Infrastructure is not the future

Published: December 2, 2014, 5:45pm CET by Vivek Vahie

One of the current challenges for a business IT team is supporting a variety of desktops, laptops, tablets and mobile end user devices for employees. This task can be a considerable overhead in terms of time, resource and cost. Physical desktops are not only expensive, insecure, and maintenance heavy, they are also not necessarily a good fit for an increasingly mobile and demanding workforce. The groundswell of Windows 7 migration plans, an expanding virtual workforce, the growing popularity of mobile devices, and tighter IT budgets each point to the need to re-evaluate desktop strategies.

While virtual desktop infrastructure (VDI) seems like a promising alternative to managing physical desktops and mobiles, in reality, it’s too costly and complex for most companies to implement successfully. Cloud services, however, are helping to mitigate many of the challenges of traditional VDI implementations. Cloud based virtual desktops deliver benefits around centralized management and simplified deployment without the high costs, limitations, or difficulties of VDI.

Let’s (not) Get Physical, Physical

Let us try to understand why businesses are currently investigating options beyond physical desktops and how VDI and Cloud based Desktop-as-a-Service (DaaS) are evolving as viable alternatives and understand their respective advantages and limitations.

While desktop computing has historically been an essential mechanism for delivering business applications and services to end users, IT managers are finding that an ever increasing amount of their time and IT budget is focused on managing and securing these physical PCs, driving the need for a less complex and more cost effective solution.

Some of the obvious challenges around physical desktops (or laptops) are: hardware limitations leading to an inability to absorb the requirements of newer versions of operating systems, together with an increasing demand of end users to be able to access information without delay from any place, at any time. Physical desktops consume 10 percent of IT budgets and yet fail to provide any significant competitive advantages over cloud based desktops.

So it is clear that there is a dire need for organizations to move away from physical desktops to a solution that is scalable, cost effective and allows access to information from any place, at any time and from a range of end user devices. Once a company has decided to move forward with desktop virtualization, the next step is to determine which type of desktop virtualization will best meet the needs of both the end user and the IT team. The two alternatives to the provision of physical desktops that have evolved over the past few years are VDI and Cloud based Desktop-as-a-Service (DaaS).

Swap Your Head in the Clouds, For a Desktop in the Cloud

VDI is primarily an individual virtual machine that is running a desktop operating system and as the data is normally located locally, on premise, security is totally under the control of the company. This has both advantages and disadvantages in that the infrastructure for the VDI implementation needs to be hosted locally. For companies that don’t have the power, space, CAPEX or required IT knowledge, this can be a disadvantage, whilst for companies that do have these facilities and skills in-house, it can be relatively easy and cost effective to use existing internal resources.

An added requirement for hosting VDI internally would be to ensure that the relevant security and controls were in place to keep company data safe. Again, if this is an internal VDI implementation, it would be incumbent on the business to ensure that this was in place and maintained by their in-house IT team. This contrasts to the implementation model of DaaS, where the managed service provider would be maintaining all of the above at their data centre, leveraging their own skills and resources.

DaaS, or virtual desktops in the cloud, is not markedly different from VDI, but it is worth examining how the two compare. When implementing a DaaS solution, the virtual desktops are outsourced to a cloud service provider, so the internal IT team is relieved of the task of managing the desktop software and hardware. Like any other cloud offering, this approach offers cost savings, flexibility and scalability.

IT Infrastructure Showdown: VDI vs. DaaS

In many ways the advantages and disadvantages of private and public cloud mirror the advantages and disadvantages of VDI versus DaaS. For exactly the same reasons that public cloud is gaining more success over private cloud, DaaS is gaining more success over VDI for desktop management.

Cloud computing has become a hot topic in today’s world, largely due to the flexibility and cost savings it can deliver. In the same manner as with virtualization, cloud computing implementations started with server infrastructure and have moved to the desktop; the cloud is now ripe for desktop infrastructure.

By moving desktops to the cloud rather than an internally deployed and managed VDI solution in a data centre, businesses can realize all of the promised benefits of virtual desktops -- through centralized management, improved data security, and simplified deployment -- without VDI’s costs, challenges, limitations or difficulties.

Vivek Vahie is the senior director of service delivery at NaviSite.

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

Photo Credit: alphaspirit/Shutterstock

-

Does your company need an Intrusion Detection System?

Published: December 1, 2014, 7:59pm CET by Vivek Vahie

Unauthorized access to networks is currently one of the most serious threats to the hosting business. Intruders and viruses present the two biggest security threats to the industry. Let us examine three key definitions: intruders (or hackers); intrusion, which is a formal term for describing the act of compromising a network or system; and Intrusion Detection Systems, which help businesses detect when they are vulnerable to an attack.

Intruders can be external or internal and their intents may vary from benign to serious. Statistically 80 percent of security breaches are committed by internal users and these are by far the most difficult to detect and prevent. These intruders create a significant issue for network systems and IT equipment. Intruders come in a variety of classes with a varying level of competence -- an external user without authorized access to the system will want to penetrate the system to exploit legitimate user accounts to access data, programs or resources with a purpose of misuse. Intruders may even use compromised systems to launch attacks.

Examples of intrusions that may vary from serious to benign:

1. Installing and making unauthorized use of remote administration tools

2. Performing a remote root compromise on the Exchange server

3. Defacing a Web Server

4. Using sniffers to capture passwords

5. Guessing or cracking the passwords

6. Noting the credit card details from a Database

7. Impersonating a user to reset or gain access to the password

8. Using unattended and logged on workstations

9. Accessing the network during off hours

10. Email access of former employees

Defining an Intrusion Detection System

An intrusion detection system (IDS) is a device or application that monitors all inbound and outbound network activity and identifies suspicious patterns that may indicate a network or system attack from someone attempting to compromise the system. It is an intelligent system which is deployed on the physical layer in a network, where it monitors any unauthorized intervention in the environment. An IDS is based on the assumption that an intruder behaves differently from authorized users and that these differences can be quantified. The main approaches to intrusion detection are as follows:

Statistical anomaly threshold detection

In this approach, data is collected on the behavior of legitimate users, and thresholds are defined for the frequency of occurrence of various events. Activity that exceeds a threshold is treated as suspicious.
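As a rough illustration of threshold detection, the sketch below counts events per user within one observation window and reports any count above a fixed limit. The event names, the threshold values and the `threshold_alerts` helper are all assumptions made for the example, not part of any particular IDS product.

```python
from collections import Counter

# Hypothetical thresholds: maximum occurrences of each event type per user
# within one observation window. The numbers are illustrative only.
THRESHOLDS = {"failed_login": 5, "file_delete": 20}


def threshold_alerts(events, thresholds=THRESHOLDS):
    """Return (user, event_type, count) for every count above its threshold.

    `events` is an iterable of (user, event_type) pairs seen in one window.
    """
    counts = Counter(events)
    return sorted(
        (user, event_type, count)
        for (user, event_type), count in counts.items()
        if event_type in thresholds and count > thresholds[event_type]
    )


window = [("alice", "failed_login")] * 7 + [("bob", "failed_login")] * 2
print(threshold_alerts(window))
```

The same limit applies to every user here, which keeps the check simple but ignores how individual users normally behave.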

Statistical anomaly detection -- Profile based

In this approach, data is collected on the behavior of each legitimate user. A profile of activity is created for each user and is then used to detect any significant deviation in behavior that would point to a suspicious act.
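A minimal sketch of the profile based idea, assuming a single numeric measure per user (for example, megabytes downloaded per day) and a simple "number of standard deviations from the mean" test. The function names and the cut-off of three deviations are hypothetical choices for illustration; a real IDS would typically track many measures with more robust statistics.

```python
from statistics import mean, stdev


def build_profile(history):
    """Summarise a user's past values as a mean and a standard deviation.

    `history` needs at least two values for the standard deviation.
    """
    return {"mean": mean(history), "stdev": stdev(history)}


def is_anomalous(profile, observed, max_deviations=3.0):
    """Flag a value more than `max_deviations` standard deviations from the mean."""
    if profile["stdev"] == 0:
        return observed != profile["mean"]
    z = abs(observed - profile["mean"]) / profile["stdev"]
    return z > max_deviations


history = [100, 120, 110, 90, 105, 115, 95]  # made-up daily values for one user
profile = build_profile(history)
print(is_anomalous(profile, 112), is_anomalous(profile, 900))
```

Unlike a fixed threshold, the limit adapts to each user: the same value can be normal for one profile and suspicious for another.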

Rule based anomaly detection

This detection method defines the set of rules that can be used to define the type of behavior of an intruder. These rules may represent past behavior patterns (of users, devices, timelines, etc.) which are matched to the current patterns to determine any intrusion.

Rule based penetration detection

This is an expert system that defines a class of rules to identify suspicious behavior even when the behavior is confined to the patterns already established. These rules are more specific to the devices or the operating system.

The prime difference is that the statistical approach attempts to define normal or expected behavior, while the rule-based approach focuses on the way the user navigates around the system.
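The rule based approaches can be sketched as a list of named predicates that are evaluated against each event. Everything in the sketch is an assumption for illustration: the event fields (`action`, `hour`, `path`), the office hours, and the two rules themselves.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]


# Hypothetical rules; real rule sets are specific to the devices and
# operating systems being monitored.
RULES = [
    Rule(
        "off-hours login",
        lambda e: e["action"] == "login" and not 8 <= e["hour"] < 18,
    ),
    Rule(
        "password file read",
        lambda e: e["action"] == "read" and e.get("path") == "/etc/shadow",
    ),
]


def matched_rules(event, rules=RULES):
    """Return the names of all rules that the event triggers."""
    return [rule.name for rule in rules if rule.matches(event)]


print(matched_rules({"action": "login", "hour": 3}))
```

Keeping the rules as data rather than hard-coded branches makes it easier to add device-specific or operating-system-specific rules later.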

Below are some measures that are used for intrusion detection:

1. By analyzing the login frequency of the user by day and time, the system can detect whether an intruder is likely to log in during out-of-office hours

2. By measuring how often the user logs in from each location, an IDS can detect logins from a location that the user rarely or never uses

3. By measuring the time between each login, an IDS can detect whether a dormant ("dead") account is being used for an intrusion

4. The time elapsed per session, including failures to login, might lead an IDS to an intruder

5. The quantity of output to a location can inform an IDS of significant leakage of sensitive data

6. Unusual resource utilization can signal an intrusion

7. Abnormalities for read, write and delete for a user may signify illegitimate browsing by an intruder

8. Failure count for read, write, create, delete may detect users who persistently attempt to access unauthorized files.
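Two of the measures above, the time between logins and the frequency of login by location, can be sketched as small checks. The 90-day dormancy period, the 5 percent share and the function names are assumptions chosen for the example.

```python
from collections import Counter
from datetime import datetime, timedelta


def dormant_account_login(last_login, current_login, dormant_after=timedelta(days=90)):
    """True if the account had been unused for longer than `dormant_after`."""
    return current_login - last_login > dormant_after


def unusual_location(past_locations, location, min_share=0.05):
    """True if `location` accounts for less than `min_share` of past logins."""
    if not past_locations:
        return True
    share = Counter(past_locations)[location] / len(past_locations)
    return share < min_share


past = ["london"] * 19 + ["delhi"]
print(dormant_account_login(datetime(2014, 1, 1), datetime(2014, 12, 1)))
print(unusual_location(past, "paris"))
```

Each check on its own only raises suspicion; in practice several measures would be combined before an alert is raised.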

Here’s how it works with an IT network

How can I protect myself?

Intrusion detection systems use a variety of methods, each of which aims to identify suspicious activity in a different way. There are network based IDS (NIDS) and host based IDS (HIDS). As the name suggests, a network based IDS is strategically placed at various points in a network so that the data traversing to or from the different devices on that network is monitored. It checks for attacks or irregular behavior by inspecting the content and the header information of all the packets moving across the network. Host based IDS refers to intrusion detection that takes place on a single host system. Its components, also referred to as sensors, collect data about events taking place on the host being monitored. Both types of IDS have unique strengths, and while the goal of the two types is the same, the approach differs:

Host based IDS:

- Monitors specific activities

- Detects attacks that are missed by network IDS

- Provides near real time detection and response

- Requires no additional hardware

Network IDS:

- Is independent of the operating system

- Provides real time detection and response

- Makes it difficult for the intruder to remove evidence

- Detects attacks that are missed by host based IDS
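To illustrate how a network based IDS inspects both header and content, the sketch below checks a packet's destination port and scans its payload for known byte patterns. The packet is modelled as a plain dictionary, and the blocked port, the signatures and the `inspect_packet` helper are hypothetical; a real NIDS works on captured traffic and far larger signature sets.

```python
# Hypothetical signatures: byte patterns treated as suspicious in payloads.
PAYLOAD_SIGNATURES = [b"/etc/passwd", b"' OR '1'='1"]
# Hypothetical policy: destination ports that should never see traffic.
BLOCKED_PORTS = {23}  # telnet


def inspect_packet(packet):
    """Return a list of reasons why the packet looks suspicious (empty if none)."""
    reasons = []
    # Header check: is the packet addressed to a port that policy forbids?
    if packet["dst_port"] in BLOCKED_PORTS:
        reasons.append(f"blocked port {packet['dst_port']}")
    # Content check: does the payload contain a known suspicious pattern?
    for signature in PAYLOAD_SIGNATURES:
        if signature in packet["payload"]:
            reasons.append(f"payload signature {signature!r}")
    return reasons


print(inspect_packet({"dst_port": 23, "payload": b"login"}))
```

Because the check sees only packets, it is independent of the operating systems on the hosts, which matches the first network IDS strength listed above.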

Vivek Vahie is senior director of service delivery at NaviSite.

Picture credit: alphaspirit/Shutterstock

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.

-

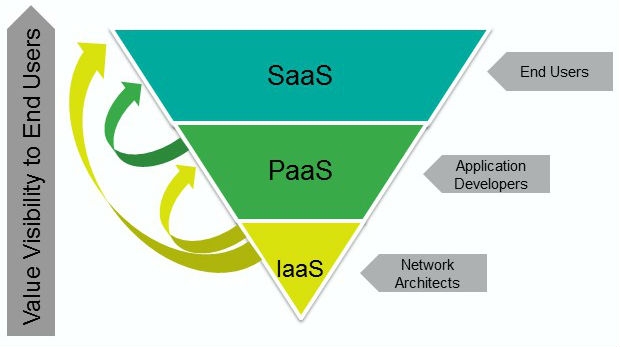

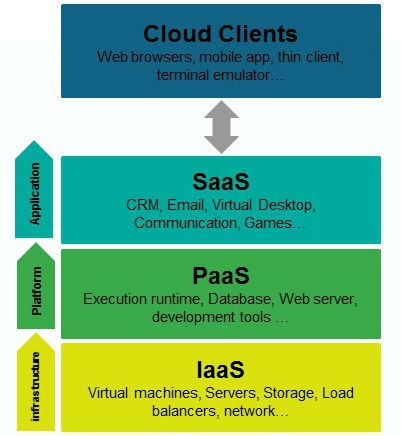

Making sense of the cloud: SaaS, PaaS and IaaS explained

Published: August 22, 2014, 7:12pm CEST by Vivek Vahie

Cloud computing is one of the most important technologies in the world right now, but it can be extremely confusing at times.

What, for example, are SaaS, PaaS and IaaS? Read on as we take the jargon out of the cloud and explain things in a much more brain-friendly way.

SaaS

Cloud application services -- or software-as-a-service (SaaS) -- represent the largest cloud market and are still growing quickly. SaaS uses the web to deliver applications that are managed by a third party vendor and whose interface is accessed on the clients' side. Most SaaS applications can be run directly from a web browser without the need for any downloads or installations, although some require small plugins.

Because of the web delivery model, SaaS eliminates the need to install and run applications on individual computers. With SaaS, it's easy for enterprises to streamline their maintenance and support, since everything -- applications, runtime, data, middleware, operating systems, virtualization, servers, storage, networking, etc. -- can be managed by vendors.

Popular SaaS offering types include email and collaboration, customer relationship management and healthcare-related applications. Some large enterprises that are not traditionally considered software vendors have started building SaaS as an additional source of revenue in order to gain a competitive advantage.

SaaS examples: Gmail, Microsoft 365, Salesforce, Citrix GoToMeeting, Cisco WebEx

Common SaaS use-case: Replacing traditional on-device software

PaaS

Cloud platform services -- or platform-as-a-service (PaaS) -- are used for application and other development, while providing cloud components to software. What developers gain with PaaS is a framework they can build upon to develop or customize applications. PaaS makes the development, testing, and deployment of applications quick, simple, and cost-effective. With this technology, enterprise operations, or third party providers, can manage operating systems, virtualization, servers, storage, networking, and the PaaS software itself. Developers, however, manage the applications.

Enterprise PaaS provides line-of-business software developers a self-service portal for managing computing infrastructure from centralized IT operations and the platforms that are installed on top of the hardware. The enterprise PaaS can be delivered through a hybrid model that uses both public IaaS and on-premise infrastructure or as a pure private PaaS that only uses the latter.

Similar to the way in which you might create macros in Excel, PaaS allows you to create applications using software components that are built into the PaaS (middleware). Applications using PaaS inherit cloud characteristics such as scalability, high availability, multi-tenancy, SaaS enablement, and more. Enterprises benefit from PaaS because it reduces the amount of coding necessary, automates business policy and helps migrate apps to a hybrid model.

Enterprise PaaS example: Apprenda

Common PaaS use-case: Increase developer productivity and utilization rates and decrease an application's time-to-market

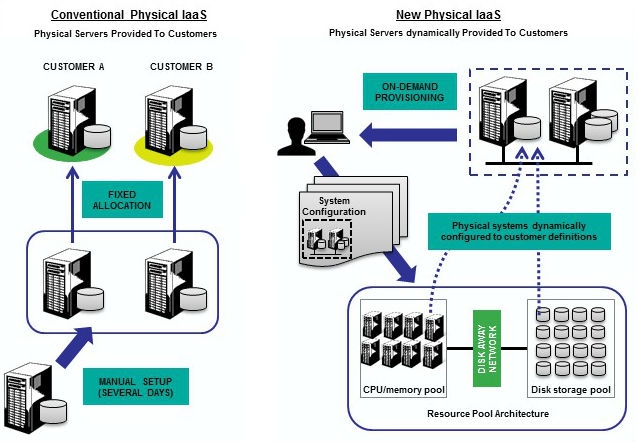

IaaS

Cloud infrastructure services -- known as infrastructure-as-a-service (IaaS) -- are self-service models for accessing, monitoring, and managing remote data center infrastructures, such as compute (virtualized or bare metal), storage, networking, and networking services (e.g. firewalls). Instead of having to buy hardware outright, users can purchase IaaS based on consumption, similar to payment for electricity or other utilities.

Compared to SaaS and PaaS, IaaS users are responsible for managing applications, data, runtime, middleware and operating systems. Providers still manage virtualization, servers, hard drives, storage, and networking. Many IaaS providers now offer databases, messaging queues, and other services above the virtualization layer as well. Some tech analysts draw a distinction here and use the IaaS+ moniker for these other options. What users gain with IaaS is infrastructure on top of which they can install any required platform. Users are responsible for updating these if new versions are released.
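The split of responsibilities across the three models can be summarised as a small lookup table. The sketch below follows the text where it is explicit (SaaS: the vendor manages everything; IaaS: users manage applications, data, runtime, middleware and operating systems). For PaaS the article says only that developers manage the applications, so treating every other layer as provider-managed is an assumption of this example. The names `LAYERS`, `CUSTOMER_MANAGED` and `managed_by` are hypothetical.

```python
LAYERS = [
    "applications", "data", "runtime", "middleware", "operating systems",
    "virtualization", "servers", "storage", "networking",
]

# Layers the customer manages under each model; the provider manages the rest.
CUSTOMER_MANAGED = {
    "SaaS": set(),
    # Assumption: only applications are stated as developer-managed for PaaS.
    "PaaS": {"applications"},
    "IaaS": {"applications", "data", "runtime", "middleware", "operating systems"},
}


def managed_by(model, layer):
    """Return "customer" or "provider" for a layer under a service model."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer}")
    return "customer" if layer in CUSTOMER_MANAGED[model] else "provider"


for model in CUSTOMER_MANAGED:
    customer = [layer for layer in LAYERS if managed_by(model, layer) == "customer"]
    print(model, "customer manages:", customer or "nothing")
```

Reading the table from SaaS to IaaS shows the customer taking on more layers in exchange for more control over the platform.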

IaaS examples: NaviSite NaviCloud, Amazon Web Services (AWS), Microsoft Azure, Google Compute Engine (GCE)

Common IaaS use-case: Extending current data center infrastructure for temporary workloads (e.g. increased Christmas holiday site traffic)

Vivek Vahie is the senior director of service delivery at NaviSite

Published under license from ITProPortal.com, a Net Communities Ltd Publication. All rights reserved.