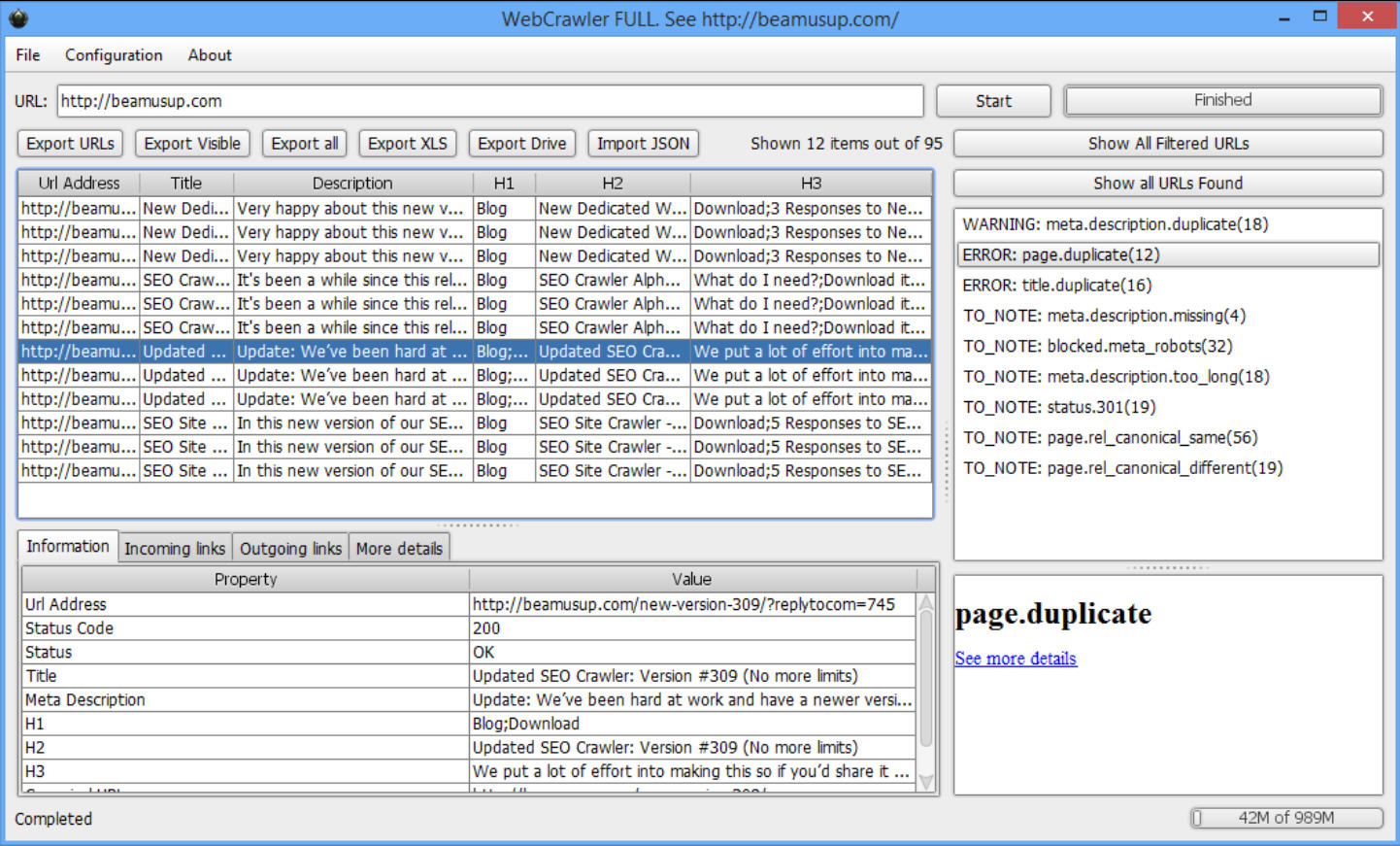

BeamUsUp is a free Java-based crawler that quickly scans a website and reports on common SEO issues: HTTP error status codes, descriptions or titles that are too long or missing, missing headings, duplicate content and more.

Free? We were skeptical, but it’s true. It’s free for all uses, no adware, no flashing "Donate" button, and no annoying restrictions to persuade you to upgrade (can’t scan more than xxx links, can’t produce reports, can’t use it on Tuesdays -- you know the kind of thing).

Usage is just as straightforward and there’s nothing to install (as long as you’ve got Java, anyway). We just downloaded the program, ran it, typed our starting domain and pressed Enter. That’s it.

After parsing robots.txt and sitemap.xml, BeamUsUp starts scanning the initial URL and any subfolders. By default it doesn’t scan subdomains or external URLs, but you can change that from the settings.
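To make that default scope concrete, here is a rough Python sketch of the kind of rule a crawler might apply. It isn't BeamUsUp's actual code: the function name `in_scope` and its two flags are our own inventions, standing in for the subdomain and external URL settings.

```python
from urllib.parse import urlsplit


def in_scope(start_url, candidate_url, include_subdomains=False, include_external=False):
    """Decide whether a discovered link should be crawled (hypothetical rule)."""
    start = urlsplit(start_url)
    cand = urlsplit(candidate_url)
    start_host = (start.hostname or "").lower()
    cand_host = (cand.hostname or "").lower()

    if cand_host == start_host:
        # Same host: keep the starting folder and anything beneath it.
        base = start.path if start.path.endswith("/") else start.path + "/"
        path = cand.path if cand.path.endswith("/") else cand.path + "/"
        return path.startswith(base)

    if cand_host.endswith("." + start_host):
        # A subdomain of the starting host: skipped unless switched on.
        return include_subdomains

    # Anything else is an external URL: also skipped unless switched on.
    return include_external
```

With the defaults, a start URL of `https://example.com/blog/` would accept `https://example.com/blog/post.html` but reject both `https://example.com/about` and `https://shop.example.com/`.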

The program uses 10 crawler threads by default, so performance is reasonable. Results begin to appear in real time, too, with a sidebar listing individual problems and the number of pages where they’ve been found ("WARNING: title.too_long(43)"). Clicking any of these problem types displays their associated links, and you can view more details about them in a click or two.
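If you’re curious how a tally like that might be produced, the following Python sketch runs a title check over a handful of pages using a pool of 10 workers, then prints counts in the same style as the sidebar. It’s only an illustration under our own assumptions: the 60-character limit, the `title.missing` label and the function names are ours, not BeamUsUp’s, and the pages are in-memory strings rather than live downloads.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser

MAX_TITLE_LENGTH = 60  # assumed threshold; the program's real limit isn't documented here
WORKERS = 10           # matches the default thread count mentioned above


class TitleParser(HTMLParser):
    """Collects the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True
            if self.title is None:
                self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def check_page(html):
    """Return a list of issue names for one page (hypothetical names)."""
    parser = TitleParser()
    parser.feed(html)
    title = (parser.title or "").strip()
    if not title:
        return ["title.missing"]
    if len(title) > MAX_TITLE_LENGTH:
        return ["title.too_long"]
    return []


def scan(pages):
    """Check every page in a thread pool and summarise issues by type."""
    counts = Counter()
    with ThreadPoolExecutor(max_workers=WORKERS) as pool:
        for issues in pool.map(check_page, pages.values()):
            counts.update(issues)
    return [f"WARNING: {name}({n})" for name, n in sorted(counts.items())]
```

Calling `scan()` with a dictionary that maps URLs to HTML strings returns one summary line per problem type, which is roughly what the sidebar shows as the scan progresses.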

When you’ve finished, the results of the scan may be saved as a local CSV file, an XLS file, or directly to a Google Drive spreadsheet.
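A CSV export is the easiest of the three to picture. As a minimal sketch, assuming a made-up three-column layout (`url`, `status`, `issue`) that won’t match the program’s real export exactly, writing scan results out with Python’s standard `csv` module might look like this:

```python
import csv
import io


def results_to_csv(rows):
    """Render scan results as CSV text; the column names are hypothetical."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["url", "status", "issue"])
    writer.writerows(rows)
    return buffer.getvalue()
```

Each row is a simple `(url, status, issue)` tuple, so the output opens cleanly in any spreadsheet for sorting and filtering.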

We had one or two issues with the program. When we turned on external link checking, it sometimes incorrectly reported 404s. And, presumably because it’s using 10 crawler threads, the interface can become very unresponsive during scans. You can try reducing the number of threads if that’s a problem, but it might slow down scanning.

For the most part, though, BeamUsUp worked very well, and as the program is also entirely hassle-free and catch-free, we’d say it’s well worth a try.

The program is available now for Windows, Linux and Mac.