By Scott M. Fulton, III, Betanews

The Betanews test suite for Windows-based Web browsers is a set of tools for measuring the performance, compliance, and scalability of the processing component of browsers, particularly their JavaScript engines and CSS renderers. Our suite does not test the act of loading pages over the Internet, or anything else that is directly dependent on the speed of the network.

But what is it measuring, really? The suite is measuring the browser's capability to perform instructions and produce results. In the early days of microcomputing, computers (before we called them PCs) came with interpreters that processed instructions and produced results. Today, browsers are the virtual equivalent of Apple IIs and TRS-80s -- they process instructions, and produce results. Many folks think they're just using browsers to view blog pages and check the scores. And then I catch them watching Hulu or playing a game on Facebook or doing something silly on Miniclip, and surprise, they're not just reading the paper online anymore. More and more, a browser is a virtual computer.

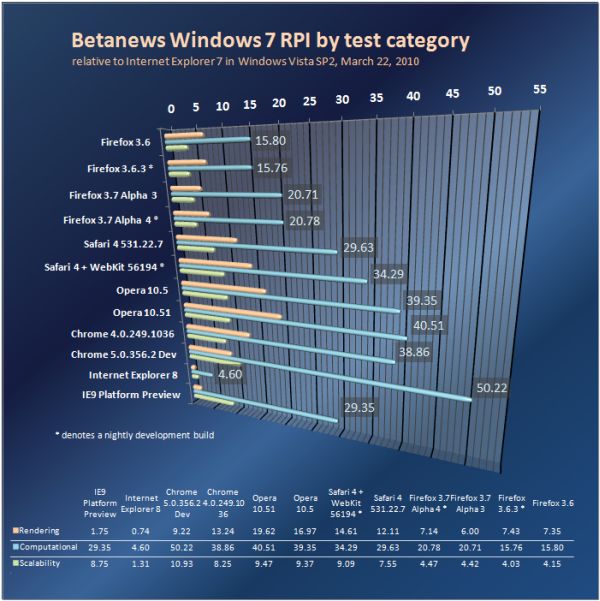

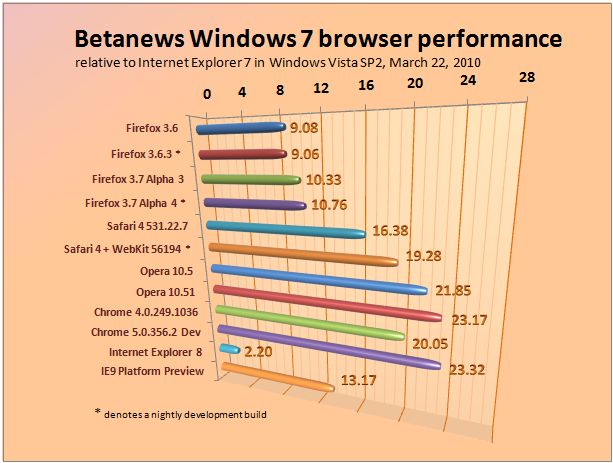

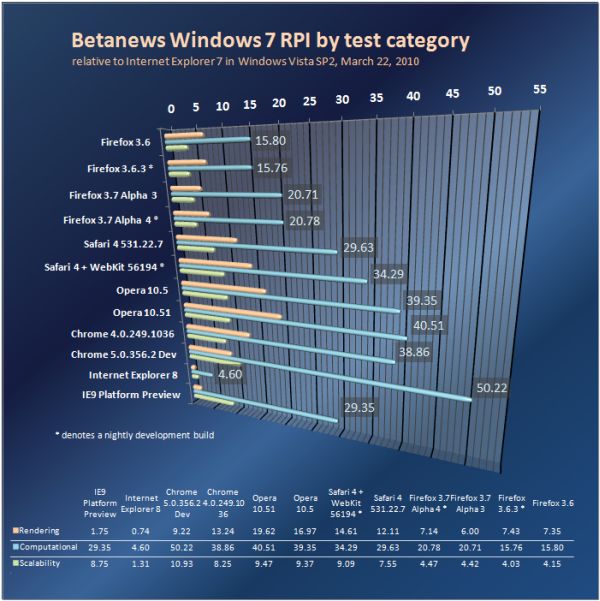

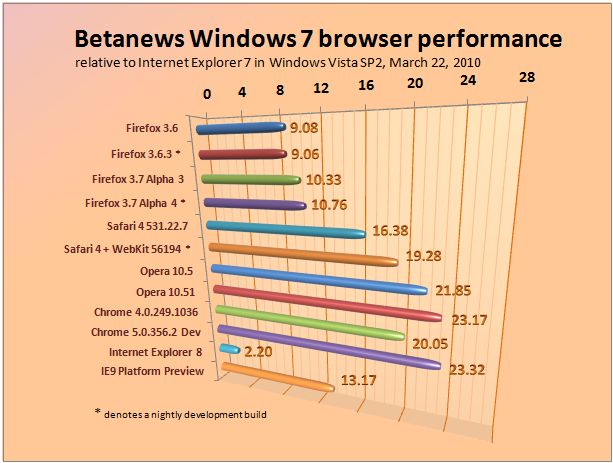

So I test it like a computer, not like a football scoreboard. The final result is a raw number that, for the first time on Betanews, can be segmented into three categories: computational performance (raw number crunching), rendering performance, and scalability (defined momentarily). These categories are like three aspects of a computer; they are not all the aspects of a computer, but they are three important ones. They're useful because they help you to appreciate the different browsers for the quality of the work they are capable of providing.

Many folks tell me they don't do anything with their browsers that requires any significant number crunching. The most direct response I have to this is: Wrong. Web pages are sophisticated pieces of software, especially where cross-domain sharing is involved. They're not pre-printed and reproduced like something from a fax machine; more and more, they're adaptive composites of both textual and graphic components from a multitude of sources that come together dynamically. The degree of math required to make everything flow and align just right is severely under-appreciated.

Why is everything relative?

By showing relative performance, the RPI index aims to give you a simple-to-understand gauge of how two or more browsers compare to one another on any machine you install them on. That's why we don't render results in milliseconds. Instead, all of our results are multiples of the performance of a relatively slow Web browser that is not a recent edition: Microsoft Internet Explorer 7, running on Windows Vista SP2 (the slowest of the three most recent Windows versions), on the same machine (more about the machine itself later).

I'm often asked, why don't I show results relative to IE8, a more recent version? The answer is simple: It wouldn't be fair. Many users prefer IE8 and deserve a real measurement of its performance. When Internet Explorer 9 debuts, I will switch the index to reflect a multiple of IE8 performance. In years to come, however, I predict it will be more difficult to find a consistently slow browser on which to base our index. I don't think it's fair for anyone, including us, to presume IE will always be the slowest brand in the pack.

Browsers will continue to evolve; and as they do, certain elements of our test suite may become antiquated, or unnecessary. But that time hasn't come yet. JavaScript interpreters are, for now, inherently single-threaded. So the notion that there may be some interpreters better suited to multi-core processors has not been borne out by our investigation, including by the information we've been given by browser manufacturers. Also, although basic Web standards compliance continues to be important (our tests impose a rendering penalty for not following standards, where applicable), HTML 5 compliance is not a reality for any single browser brand. Everyone, including Microsoft, wants to be the quintessential HTML 5 browser, but the final working draft of the standard was only published by W3C weeks ago.

There will be a time when HTML 5 compliance should be mandatory, and we'll adapt when the time comes.

What's this "scalability" thing?

In speaking recently with browser makers -- especially with the leader of the IE team at Microsoft, Dean Hachamovitch -- I came to agree with a very fair argument about our existing test suite: It failed to account for performance under stress, specifically with regard to each browser's capability to crunch harder tasks and process them faster when necessary.

A simple machine slows down proportionately as its workload increases linearly. A well-programmed interpreter is capable of literally pre-digesting the tasks in front of it, so that at the very least it doesn't slow down as much -- if planned well, it can accelerate. Typical benchmarks have the browser perform a number of tasks repetitively. But because JavaScript interpreters are (for now) single-threaded, when scripts are written to interrupt a sequence of reiterative instructions every second or so -- for instance, to update the elapsed time reading, or to spin some icon to remind the user the script is running -- that very act works against the interpreter's efficiency. Specifically, it inserts events into the stream that disrupt the flow of the test, and work against the ability of the interpreter to break it down further. As I discovered when bringing together some tried and true algorithms for our new test suite, including one-second or even ten-second timeouts messes with the results.

Up to now, the third-party test suites we've been using (SunSpider, SlickSpeed, JSBenchmark) perform short bursts of instructions and measure the throughput within given intervals. These are fair tests, but only under narrow circumstances. With the tracing abilities of JavaScript interpreters used in Firefox, Opera, and the WebKit-based browsers, the way huge sequences of reiterative processes are digested is different from the way short bursts are digested. I discovered this, with the help of engineers from Opera, when debugging the old test battery I've since thrown out of our suite: A smart JIT compiler can effectively dissolve a thousand or a million or ten million instructions that effectively do nothing, into a few void instructions that accomplish the same nothing. So if a test pops up a "0 ms" result, and you increase the workload by a factor of, say, a million, and it still pops up "0 ms"...that's not an error. That's the sign of a smart JIT compiler.

The proof of this premise also shows up when you vary the workload of a test that does a little something more than nothing: Browsers like Chrome accelerate the throughput when the workload is greater. So if their interpreters "knew" the workload was great to begin with, rather than just short bursts or, instead, infinite loops broken by timeouts (whose depth perhaps cannot be traced in advance), they might actually present better results.

That's why I've decided to make scalability count for one-third of our overall score: Perhaps an interpreter that's not the fastest to begin with, is still capable of finding that extra gear when the workload gets tougher; and perhaps we could appreciate that fact if we only took the time to do so.

The newest member of the suite

Decades ago, back when I tested the efficiency of BASIC and C compilers, I published the results under my old pseudonym, "D. F. Scott." In honor of my former "me," I named our latest benchmark test battery "DFScale."

It has ten components, two of which are broken into two sub-components, for a total of 12 tests. Each component renders results on a grid which is divided into numbers of total iterations: 20, 22, 24, 30, 100, 250, 500, 1000, 10000, 25000, 50000, 100000, 250000, 500000, 1000000, and 10000000. Not every component can go that high. By that, I mean that at high levels, the browser can crash, or hang, or even generate stack overflow errors. Until I am satisfied that these errors are not exploitable for malicious purposes, I will not release the DFScale suite to the public.

On the low end of the scale, I scale some components up where the iteration numbers are too low to make meaningful judgments. So for a test whose difficulty scales exponentially -- and rapidly -- like the Fibonacci sequence, for instance, I stick to the low end of the scale.

Some tests involve the use of arrays of random integers, whose size and scale equally correspond to the grid numbers. So an array of 10,000 units, for example, would contain random values from 1 to 10,000. Yes, we use the fairer random number generator and value shuffler used by Microsoft to correct its browser choice screen, in an incident brought to the public's attention in early March by IBM's Rob Weir. In fact, the shuffler is one of our tests; and in honor of Weir, we named the test the "Rob Weir Shuffle."

To ensure fairness, we randomize and shuffle the arrays that components will use (except for the separate test of the "Weir Shuffle") at the start of runtime. Then components will sort through copies of the same shuffled arrays, rather than regenerate new ones.
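The shuffle-once, copy-many setup described above might be sketched as follows. The suite itself is not public, so this is only an illustration: it assumes the "fairer" shuffler is the Fisher-Yates shuffle, and the names `make_shuffled_array`, `shared`, and `array_for_test`, along with the fixed seed, are hypothetical.

```python
import random

def make_shuffled_array(size, rng):
    """Fill an array with random values from 1 to size, then shuffle it."""
    values = [rng.randint(1, size) for _ in range(size)]
    # Fisher-Yates (assumed): walk from the last slot down, swapping each
    # slot with a uniformly chosen slot at or below it.
    for i in range(size - 1, 0, -1):
        j = rng.randint(0, i)  # randint is inclusive on both ends
        values[i], values[j] = values[j], values[i]
    return values

rng = random.Random(2010)   # fixed seed is an assumption, for repeatable runs
GRID = [100, 1000, 10000]   # a subset of the grid numbers, for illustration

# Generate each array once, at the start of the run.
shared = {n: make_shuffled_array(n, rng) for n in GRID}

def array_for_test(n):
    """Hand each sort component its own copy of the same shuffled array."""
    return list(shared[n])
```

Because every component receives a copy, a sort that rearranges its input in place cannot hand an already-sorted array to the next component.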

Reiterative loops run uninterrupted on each browser, which is something that some browsers don't like. Internet Explorer stops "runaway" loops to ask the user if she wishes to continue; to keep this from happening, we tweaked the System Registry. Chrome puts up a warning giving the user the option to stop the loop, but that warning is on a separate thread which apparently does not slow down the loop.

Each component of DFScale produces a pair of scores, for speed and scalability, which require Excel for us to tally. The speed score is the average of the iterations per second for each grid number. The scalability score is a product of two elements: First, the raw times for each grid number are plotted geometrically on the y-axis, with the numbers of iterations on the x-axis. We then estimate a best-fit line, and calculate the slope of that line. The lower the slope, the higher the score. Secondly, we estimate a best-fit exponential curve, representing the amount of acceleration a JIT compiler can provide over time. Again, the lower the curve's coefficient, the flatter the curve is, and the higher the score.

Rather than attribute arbitrary numbers to that score, we compare the slope and coefficient values to those generated from a test of IE7 in Vista SP2. Otherwise, saying that browser A scored a 3 and browser B scored a 12, would be meaningless -- twelve of what? So the final score on a component is 50% slope, 50% curve, relative to IE7.
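As a rough sketch of how the two scores could be tallied outside of Excel: the fitting method here (ordinary least squares for the line, and a least-squares fit on the logarithm of the times for the exponential curve) and the use of simple IE7-to-browser ratios are assumptions, since the text does not spell them out. The timings are made up for illustration.

```python
import math

def least_squares_slope(xs, ys):
    """Slope of the ordinary least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

def exponential_coefficient(xs, ys):
    """Coefficient b of y = a * exp(b * x), fitted on log(y) (assumed method)."""
    return least_squares_slope(xs, [math.log(y) for y in ys])

def speed_score(iterations, seconds):
    """Average of the iterations per second at each grid number."""
    rates = [n / t for n, t in zip(iterations, seconds)]
    return sum(rates) / len(rates)

def scalability_score(iterations, seconds, ie7_seconds):
    """50% slope, 50% curve, each taken as a ratio against IE7.

    A lower slope or coefficient than IE7's gives a ratio above 1.
    """
    slope_ratio = (least_squares_slope(iterations, ie7_seconds)
                   / least_squares_slope(iterations, seconds))
    curve_ratio = (exponential_coefficient(iterations, ie7_seconds)
                   / exponential_coefficient(iterations, seconds))
    return 0.5 * slope_ratio + 0.5 * curve_ratio

# Made-up timings in seconds, not measured results.
grid = [1000, 10000, 25000, 50000]
ie7 = [0.20, 2.10, 5.40, 11.00]
browser = [0.02, 0.15, 0.33, 0.60]

print(speed_score(grid, browser))
print(scalability_score(grid, browser, ie7))
```

By construction, IE7 scored against itself comes out at 1.0, which matches the way the index treats IE7 elsewhere.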

The ten tests in the (current) DFScale suite are as follows:

- The "Rob Weir Shuffle" - or rather, the fairer array shuffling algorithm made popular by Weir's disassembly of the standard random number generator in IE.

- The Sieve of Eratosthenes (which I used so often during the '80s that several readers suggested it be officially renamed "The D. F. Sieve"). It's the algorithm used to identify prime numbers in a long sequence; and here, the sequence lengths are determined by the grid numbers.

- The Fibonacci sequence, which is an algorithm that produces integers that are each the sum of the previous two. Of the many ways of expressing this algorithm, we picked two with somewhat different construction. The first compresses the entire comparison operation into a single instruction, using an alternative syntax first introduced in C. The second breaks down the operation into multiple instructions -- and because it's longer, you'd think it would be harder to run. It's not, because the alternative syntax ends up being more difficult for JIT compilers to digest, which is why the longer form has been dubbed the "Fast Fib."

- The native JavaScript sort() method, which isn't really a JavaScript algorithm at all, but a basic test of each browser's ability to sort an array using native code. In the real world, the sort() method is the typical way developers will handle large arrays; they don't include their own QuickSort or Heap Sort scripts. The reason we test these other sorting algorithms (to be explained further) is to examine the different ways JavaScript interpreters handle and break down reiterative tasks. The native method can conceivably act as a benchmark for relative efficiency; and amazingly, external scripts can be faster than the native method, especially in Google Chrome.

- Radix Sort is an algorithm that simulates the actions of an old IBM punch-card sorter, which sorted a stack of cards based on their 0 - 9 digits at various locations. No one would dare use this algorithm today for real sorting, but it does test each browser's ability to scale up a fixed and predictable set of rules, when the complexity of the problem scales exponentially. Nearly all browsers are somewhat slower with the Radix at larger values, with IE8 dramatically slower.

- QuickSort is the time-tested "divide-and-conquer" approach to sorting lists or arrays, and it often remains the algorithm of choice for large indexes. It picks a random array value, called a "pivot point," and then shuffles the others to one side or the other based on their relative value. The results are groups of lower and higher values that are separated from one another, and can in turn be sorted as smaller groups.

- Merge Sort is a more complex approach to divide-and-conquer that is often used for sorting objects with properties rather than just arrays. It efficiently divides a complex array into smaller arrays within a binary tree, sorts the smaller ones, and then integrates the results with bigger ones as it goes along. It's also very memory intensive, because it breaks up the problem all over the place. (If the video below doesn't explain it for you, then at least it will give you something to temper your nightmares.)

- Bubble Sort is the quintessential test of the simplest, if least efficient, methodology for attacking a problem: It compares two adjacent values in the array repetitively, and keeps moving the highest one further forward...until it can't do that anymore, at which point, it declares the array sorted. As a program, it should break down extremely simply, which should give an interpreter an excuse to find that extra gear...if it has one. If it doesn't, the sort times will grow roughly with the square of the problem size as that size scales linearly.

- Heap Sort has been said to be the most stable performer of all the sort algorithms. Naturally, this is the one that gives our browsers the most fits -- the stack overflow errors happen here. Safari handles the error condition by simply refusing to crash, but ending the loop -- which speaks well for its security. It cannot perform this test at ten million iterations. Other browsers...can force a reboot if the problem size is too high. Essentially, its task is to weed out high values from a shrinking array. The weeds are piled atop a heap, and the pile forms a sorted array.

- Euler's Problem #14 is a wonderful discovery I made while searching for a test that was similar in some ways to, but different in others from, the Fibonacci sequence. The page where I discovered it grabbed my attention for its headline, "Yet Another Meaningless JavaScript Benchmark," which somehow touched my soul. The challenge for this problem was to find a way of demonstrating the "unproven conjecture" that a particular chain of numbers, starting with any given integer value and following only two rules, would eventually pare down to 1. The rules are: 1) if the value is even, the next value in the chain is the current one divided by 2; 2) if the value is odd, the next one is equal to three times the current one, plus 1. Our test runs the conjecture with the first 1000, 10000, 25000 integers, and so on. The problem should scale almost linearly, giving interpreters the opportunity to push the envelope and accelerate. In our first test of the IE9 Tech Preview, it appeared to be accelerating very well, running the first 250,000 integer sequence in 0.794 seconds versus 10.9 seconds for IE8. Alas, IE9 is not yet stable enough to run the test with higher values.

Problem 14 is also interesting for us because we test it two ways, suggested by blogger and developer Motti Kanzkron: He realized that at some point, future sequences would always touch upon a value used in previous sequences, whose successive values in the chain would always be the same. So there's no need to complete the rest of the sequence if it's already been done once; his optimization borrows memory to tack old sequences onto new ones and move forward. We compare the brute-force "naïve" version to the smart "optimized" version, to judge the ability of a JIT compiler to optimize code compared to an optimization that would be more obvious to a programmer.
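To make the difference between the two versions concrete, here is a minimal sketch of both, following the two rules stated above. It is not the suite's own script; the function names are hypothetical, and counting the chain length for each starting integer is an assumption about what "running the conjecture" measures.

```python
def next_value(n):
    """Apply the two rules: halve an even value, else triple it and add 1."""
    return n // 2 if n % 2 == 0 else 3 * n + 1

def chain_lengths_naive(limit):
    """Brute force: walk every chain from its start all the way down to 1."""
    lengths = []
    for start in range(1, limit + 1):
        n, length = start, 1
        while n != 1:
            n = next_value(n)
            length += 1
        lengths.append(length)
    return lengths

def chain_lengths_cached(limit):
    """Optimized: stop as soon as a chain reaches a value seen before."""
    known = {1: 1}  # value -> length of the chain from that value to 1
    for start in range(1, limit + 1):
        n, path = start, []
        while n not in known:
            path.append(n)
            n = next_value(n)
        length = known[n]
        # Tack the known tail onto each new value, working backward.
        for value in reversed(path):
            length += 1
            known[value] = length
    return [known[start] for start in range(1, limit + 1)]
```

The cached version trades memory for time, which is the programmer-visible optimization the naïve version leaves for the JIT compiler to approximate on its own.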

The other third-party test batteries

Since we started testing browsers in early 2009, Betanews has maintained one very important methodology: We take a slow Web browser that you might not be using much anymore, and we pick on its sorry self as our test subject. We base our index on the assessed speed of Microsoft Internet Explorer 7 on Windows Vista SP2 -- the slowest browser still in common use. For every test in the suite, we give IE7 a 1.0 score. Then we combine the test scores to derive an RPI index number that, in our estimate, best represents the relative performance of each browser compared to IE7. So for example, if a browser gets a score of 6.5, we believe that once you take every important factor into account, that browser provides 650% the performance of IE7.

We believe that "performance" means doing the complete job of providing rendering and functionality the way you expect, and the way Web developers expect. So we combine computational efficiency, rendering efficiency (coupled with standards compliance tests), and scalability. This way, a browser with a 6.5 score can be thought of as doing the job more than five times faster and better.

Here now are the other third-party batteries we use for our Browser Test Suite 3.0, and how we've modified them where necessary to suit our purposes:

- Nontroppo CSS rendering test. Up until recently, we were using a modified version of a rendering test used by HowToCreate.co.uk, whose two purposes have been to time how long it takes to re-render the contents of multiple arrays of <DIV> elements and to time the loading of the page that includes those elements. We modified this page because the JavaScript onLoad event fires at different times for different browsers -- despite its documented purpose, it doesn't necessarily mean the page is "loaded." There's a real-world reason for these variations: In Apple Safari, for instance, some page contents can be styled the moment they're available, but before the complete page is rendered, so firing the event early enables the browser to do its job faster -- in other words, Apple doesn't just do this to cheat. But the actual creators of the test themselves, at nontroppo.org, did a better job of compensating for the variations than we did: Specifically, the new version now tests to see when the browser is capable of accessing that first <DIV> element, even if (and especially when) the page is still loading.

Here's how we developed our new score for this test battery: There are three loading events: one for Document Object Model (DOM) availability, one for first element access, and the third being the conventional onLoad event. We counted DOM load as one sixth, first access as two sixths, and onLoad as three sixths of the rendering score. Then we adjusted the re-rendering part of the test so that it iterates 50 times instead of just five. This is because some browsers do not count milliseconds properly on some platforms -- this is the reason why Opera mysteriously mis-reported its own speed in Windows XP as slower than it was. (Opera users everywhere...you were right, and we thank you for your persistence.) By running the test in five loops of 10 iterations each, we can get a more accurate estimate of the average time for each iteration because the millisecond timer will have updated correctly. The element loading and re-rendering scores are averaged together for a new and revised cumulative score -- one which readers will discover is much fairer to both Opera and Safari than our previous version.
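The weighting just described works out to simple arithmetic. The sketch below assumes each input has already been converted to an index relative to IE7 (IE7's time divided by the browser's time); that conversion, and all of the function names, are assumptions for illustration.

```python
def relative_index(ie7_ms, browser_ms):
    """How many times faster than IE7 the browser was (assumed conversion)."""
    return ie7_ms / browser_ms

def load_score(dom, first_access, onload):
    """Weight the three loading events as 1/6, 2/6, and 3/6."""
    return (1 * dom + 2 * first_access + 3 * onload) / 6

def per_iteration_ms(total_ms, iterations=50):
    """Average over many iterations so a coarse timer matters less."""
    return total_ms / iterations

def css_rendering_score(load, rerender):
    """Average the element loading and re-rendering scores."""
    return (load + rerender) / 2

# Made-up example: a browser 4x, 5x, and 6x faster than IE7 on the three
# loading events, and 7x faster at re-rendering.
load = load_score(4.0, 5.0, 6.0)
print(css_rendering_score(load, 7.0))
```

Timing all 50 iterations and dividing once means a timer that only ticks every several milliseconds contributes one rounding error to the total rather than one to each iteration.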

- Celtic Kane JSBenchmark. The new JSBenchmark from Sean P. Kane is a modern version of the classic math tests first made popular, if you can believe it, by folks like myself who tested compilers for computer magazines. QuickSort is covered here too, and in this case, JSBenchmark renders relative throughput during a given interval. There are other problems too, including one called the "Genetic Salesman," which finds the shortest route through a geometrically complex maze. It's good to see a modern take on my old favorites. Rather than run a fixed number of iterations and time the result, JSBenchmark runs an undetermined number of iterations within a fixed period of time, and produces indexes that represent the relative efficiency of each algorithm during that set period -- higher numbers are better.

- SunSpider JavaScript benchmark. Maybe the most respected general benchmark suite in the field, SunSpider focuses on computational JavaScript performance rather than rendering -- the raw ability of the browser's underlying JavaScript engine. It comes from the folks who produce the WebKit open source rendering engine that currently has closer ties with Safari, but we've found SunSpider's results to appear fair and realistic, and not weighted toward WebKit-based browsers. There are nine categories of real-world computational tests (3D geometry, memory access, bitwise operations, complex program control flow, cryptography, date objects, math objects, regular expressions, and string manipulation). Each test in this battery is much more complex, and more in-tune with real functions that Web browsers would perform every day, than the more generalized, classic approach now adopted by JSBenchmark. All nine categories are scored and averaged relative to IE7 in Vista SP2.

- Mozilla 3D cube by Simon Speich, also known as Testcube 3D, is an unusual discovery from an unusual source: an independent Swiss developer who devised a simple and quick test of DHTML 3D rendering while researching the origins of a bug in Firefox. That bug has been addressed already, but the test fulfills a useful function for us: It tests only graphical dynamic HTML rendering -- which is finally becoming more important thanks to more capable JavaScript engines. And it's not weighted toward Mozilla -- it's a fair test of anyone's DHTML capabilities.

There are two simple heats whose purpose is to draw an ordinary wireframe cube and rotate it in space, accounting for forward-facing surfaces. Each heat produces a set of five results: total elapsed time, the amount of that time spent actually rendering the cube, the average time each loop takes during rendering, and the elapsed time in milliseconds of the fastest and slowest loop. We average those last two into a single figure, which is compared, along with the other three times, against the IE7 scores to yield a comparative index score. We also now extrapolate a scalability score, which compares the results from the larger cube to the smaller one to see if the interpreter accelerated and by how much.

- SlickSpeed CSS selectors test suite. As JavaScript developers know, there are a multitude of third-party libraries in addition to the browser's native JS library, that enable browsers to access elements of a very detailed and intricate page (among other things). For our purposes, we've chosen a modified version of SlickSpeed by Llama Lab, which covers many more third-party libraries including Llama's own. This version tests no fewer than 56 shorthand methods that are supposed to be commonly supported by all JavaScript libraries, for accessing certain page elements. These methods are called CSS selectors (one of the tested libraries, called Spry, is supported by Adobe and documented here).

So Llama's version of the SlickSpeed battery tests 56 selectors from 10 libraries, including each browser's native JavaScript (which should follow prescribed Web standards). Multiple iterations of each selector are tested, and the final elapsed times are rendered. Here's the controversial part: Some have said the final times are meaningless because not every selector is supported by each browser; although SlickSpeed marks each selector that generates an error in bold black, the elapsed time for an error is usually only 1 ms, while a non-error is as high as 1000. We compensate for this by creating a scoring system that penalizes each error for 1/56 of the total, so only the good selectors are scored and the rest "get zeroes."

Here's where things get hairy: As some developers already know, IE7 got all zeroes for native JavaScript selectors. It's impossible to compare a good score against no score, so to fill the hole, we use the geometric mean of IE7's positive scores with all the other libraries, as the base number against which to compare the native JavaScript scores of the other browsers, including IE8. The times for each library are compared against IE7, with penalties assessed for each error (Firefox, for example, can generate 42 errors out of 560, for a penalty of 7.5%). Then we assess the geometric mean, not the arithmetic average, of each battery -- we do this because we're comparing the same functions for each library, not different categories of functions as with the other suites. Geometric means will account better for fluctuations and anomalies.
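The arithmetic behind the penalty and the geometric mean can be sketched briefly. How the penalty is folded into the final figure is not spelled out above, so applying it as a single multiplier is an assumption here, as are the function names and the sample ratios.

```python
import math

def geometric_mean(values):
    """The n-th root of the product, computed through logarithms."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

def error_penalty(errors, total_selectors):
    """Each failed selector costs an equal share of the total."""
    return errors / total_selectors

def slickspeed_score(library_ratios, errors, total_selectors=560):
    """Geometric mean of the per-library ratios against IE7, less the
    penalty (applying it as one multiplier is an assumption)."""
    penalty = error_penalty(errors, total_selectors)
    return geometric_mean(library_ratios) * (1 - penalty)

# The Firefox example from the text: 42 errors out of 560 selectors.
print(error_penalty(42, 560))

# Made-up per-library ratios: one anomalous library moves the geometric
# mean far less than it would move the arithmetic average.
ratios = [4.0, 5.0, 4.5, 40.0]
print(geometric_mean(ratios), sum(ratios) / len(ratios))
```

The last line shows why the geometric mean is the gentler choice when the same functions are compared across libraries: a single outlier library cannot dominate the result.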

Table rendering and standards compliance

- Nontroppo table rendering test. As has already been proven in the field, CSS is the better platform for rendering complex pages using magazine-style layout. Still, a great many of the world's Web pages continue to use HTML's old <TABLE> element (created to render data in formal tables) for dividing pages into grids. We heard from you that if IE7 is still important (it is our index browser after all), old-style table rendering should still be tested. And we concur.

The creator of our CSS rendering test has created a similar platform for testing not only how long it takes a browser to render a huge table, but how soon the individual cells (<TD> elements) of that table are available for manipulation. When the test starts, it times the duration until the browser starts rendering the table and then ends that rendering, from the same mark, for two index scores. It also times the loading of the page, for a third index score. Then we have it re-render the contents of the table five times, and average the time elapsed for each one, for a fourth score. The four items are then averaged together for a cumulative score.

- Nontroppo standard browser load test. (That Nontroppo gets around, eh?) This may very well be the most generally boring test of the suite: It's an extremely ordinary page with ordinary illustrations, followed by a block full of nested <DIV> elements. But it allows us to take away all the variable elements and concentrate on straight rendering and relative load times, especially when we launch the page locally. It produces document load time, document plus image load times, DOM load times, and first access times, all of which are compared to IE7 and averaged.

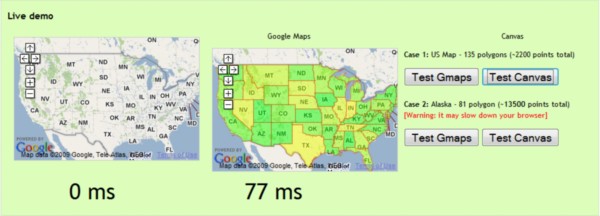

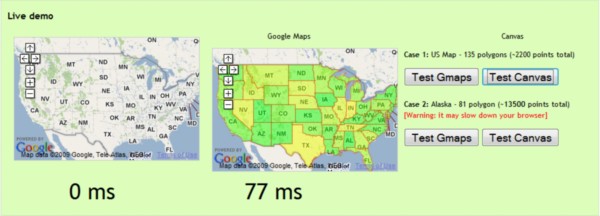

- Canvas rendering test. The canvas object in JavaScript is a local memory segment where client-side instructions can plot complex geometry or even render detailed, animated text, all without communicating with the server. The Web page contains all the instructions the object needs; the browser downloads them, and the contents are plotted locally. We discovered on the blog of Web developer Ernest Delgado a personal test originally meant to demonstrate how much faster the Canvas object was than using Vector Markup Language in Internet Explorer, or Scalable Vector Graphics in Firefox. We'd make use of the VML and SVG test ourselves if Apple's Safari -- in the interest of making things faster -- hadn't implemented a system that replaces them with Canvas by default. (To embrace HTML 5, newer browsers will have to incorporate SVG; and IE9 is finally taking big steps in that direction.)

We use Delgado's rendering test to grab two sets of plot points from Yahoo's database -- one outlining the 48 contiguous United States, and one set outlining Alaska complete with all its islands. Those plot points are rendered on top of Google Maps projections of the mainland US and Alaska at equal scale, and both renderings are timed separately. Those times are compared against IE7, and the two results are averaged with one another for a final score.

- Acid3 standards compliance test. To the extent to which browsers don't follow the most commonly accepted Web standards, we penalize them in the rendering category. Think of it like a Winter biathlon where, for every target the skier fails to shoot, he has to ski a mini-lap. The function of the Web Standards Project's Acid3 test has changed dramatically, especially as most of our browsers become fully compliant. IE7 only scored a 12% on the Acid3, and IE8 scored 20%; but today, most of the alternative browsers are at 100% compliance, with Firefox at 93% and with 3.7 Alpha 1 scoring 96%. So it means less now than it did in earlier months to have Acid3 yield an index score of 8.33, which is the score for any browser that scores 100% thanks to IE7. Now that cumulative index scores are closer to 20, having an eight-and-a-third in the mix has become a deadweight rather than a reward.

So now, for the other batteries that have to do with rendering (all three Nontroppos and TestCube 3D), plus the native JavaScript library portion of the SlickSpeed test, we're multiplying the index score by the Acid3 percentage. As a result, the amount of any non-compliance with the Web Standards Project's assessment is applied as a penalty against those rendering scores. Today, only Mozilla and Microsoft browsers are affected by this penalty, and Firefox only slightly -- all the others are unaffected.

- The CSS3.info compliance test. Microsoft asked us, as long as we're calling Acid3 a litmus test for standards, why don't we also pay attention to the CSS3.info test for CSS compliance. It was a fair question; we checked into it, and we couldn't find anything against it. There are 578 standard selectors in the CSS3 test; the fraction that each browser supports is expressed as a percentage. It's then combined with the Acid3 percentage to yield a total rendering penalty. (Yes, Microsoft argued its way out of a few points: IE8 scores 20% on the Acid3, but 60.38% on the CSS3.info, for a 40% allowable percentage instead of 20%.)
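The IE8 figures quoted above suggest that the two compliance percentages are simply averaged; that reading is an inference from the example rather than something the text states outright. Under that assumption, and with hypothetical function names, the penalty could be applied like this:

```python
def compliance_factor(acid3_pct, css3_pct):
    """Average the two compliance percentages (assumed from the IE8
    example) and express the result as a fraction of 1."""
    return (acid3_pct + css3_pct) / 2 / 100

def penalized_score(index_score, acid3_pct, css3_pct):
    """Scale a rendering index score by the allowable percentage."""
    return index_score * compliance_factor(acid3_pct, css3_pct)

# IE8's quoted figures: 20% on Acid3 and 60.38% on CSS3.info.
print(compliance_factor(20, 60.38))

# A made-up rendering index of 10.0 for a fully compliant browser and for
# one with IE8's compliance figures.
print(penalized_score(10.0, 100, 100))
print(penalized_score(10.0, 20, 60.38))
```

A browser at 100% on both tests keeps its rendering scores unchanged, so the penalty only ever subtracts.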

Our physical test platform, and why it doesn't matter

The physical test platform we've chosen for browser testing is a triple-boot system, which enables us to boot different Windows platforms from the same large hard drive. For the Comprehensive Browser Test (CRPI), our platforms are Windows XP Professional SP3, Windows Vista Ultimate SP2, and Windows 7 RTM. For the standard RPI test, we use just Windows 7.

All platforms are always brought up to date using the latest Windows updates from Microsoft, prior to testing. We realize, as some have told us, this could alter the speed of the results obtained. However, we expect real-world users to be making the same changes, rather than continuing to use unpatched and outdated software. Certainly the whole point of testing Web browsers on a continual basis is because folks want to know how Web browsers are evolving, and to what degree, on as close to real-time a scale as possible. When we update Vista, we re-test IE7 on that platform to ensure that all index scores are relative to the most recent available performance, even for that aging browser on that old platform.

Our physical test system is an Intel Core 2 Quad Q6600-based computer using a Gigabyte GA-965P-DS3 motherboard, an Nvidia 8600 GTS-series video card, 3 GB of DDR2 DRAM, and a 640 GB Seagate Barracuda 7200.11 hard drive (among others). All three partitions (Windows XP SP3, Vista SP2, and Windows 7 RC) are on this drive. Since May 2009, we've been using a physical platform for browser testing, replacing the virtual test platforms we had been using up to that time. Although there are a few more steps required to manage testing on a physical platform, you've told us you believe the results of physical tests will be more reliable and accurate.

But the fact that we perform all of our tests on one machine, and render their results as relative speeds, means that the physical platform is actually immaterial here. We could have chosen a faster or slower computer (or, frankly, a virtual machine) and you could run this entire battery of tests on whatever computer you happen to own. You'd get the same numbers because our indexes are all about how much faster x is than y, not how much actual time elapsed.
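To see why the hardware drops out, here is a minimal sketch. The timings are made up for illustration (they are not measured results), the `relative_index` function is a hypothetical stand-in for the index calculation, and the sketch assumes a slower machine stretches every timing by the same factor.

```python
def relative_index(baseline_seconds: float, browser_seconds: float) -> float:
    """How many times faster the browser is than the baseline on the same machine."""
    return baseline_seconds / browser_seconds


# Made-up timings for illustration only (not measured results).
baseline, browser = 12.0, 3.0

fast_machine = relative_index(baseline, browser)
# Assumption: a slower machine stretches every timing by the same factor.
slowdown = 2.5
slow_machine = relative_index(baseline * slowdown, browser * slowdown)

print(fast_machine, slow_machine)  # 4.0 4.0
```

The slowdown factor appears in both the numerator and the denominator, so it cancels. The cancellation is exact only under the uniform-slowdown assumption stated above.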

The speed of our underlying network is also not a factor here, since all of our tests contain code that is executed locally, even if it's delivered by way of a server. The download process is not timed, only the execution.

Why don't we care about download speeds, especially how long it takes to load certain pages? We do, but we're still in search of a scientifically reliable method to test download efficiency. Web pages change by the second, so any test that measures the time a handful of browsers takes to download content from a given set of URLs is almost pointless today. And the speed of the network can vary greatly, so a reliable final score would have to factor out the network speed at the time of each iteration. That's a cumbersome approach, and that's why we haven't embarked on it yet.

There are three major benchmark suites that we have evaluated and re-evaluated and, with all due respect to their authors, have chosen not to use. Dromaeo comes from John Resig, a Firefox contributor whom we respect greatly. We appreciate Resig's hard work, but we don't yet feel his result numbers correspond to the differences in performance that we see with our own eyes, or that we can time with a stopwatch. The browsers just aren't that close together. Meanwhile, we've currently rejected Google's V8 suite -- built to test its V8 JavaScript engine -- for the opposite reason: Yes, we know Chrome is more capable than IE. But 230 times more capable? No. That's overkill. There's a huge exponential curve there that's not being accounted for, and once it is, we'll reconsider it.

We've also been asked to evaluate Futuremark's Peacekeeper. I'm very familiar with Futuremark's tests from my days at Tom's Hardware. Though it's obvious to me that there's a lot going on in each of the batteries of the Peacekeeper suite, it doesn't help much that the final result is rendered only as a single tick-mark. And while that may sound hypocritical from a guy who's pushing a single performance index, the point is, for us to make sense of it, we need to be able to see into it -- how did that number get that high or that low? If Futuremark would just break down the results into components, we could compare each of those components against IE7 and the modern browsers, and we could determine where each browser's strengths and weaknesses lie. Then we could tally an index based on those strengths and weaknesses, rather than an artificial sum of all values that blurs all those distinctions.

This is as complete and as thorough an explanation of our testing procedure as you're ever going to get.

Copyright Betanews, Inc. 2010
