By Scott M. Fulton, III, Betanews

Perhaps you've read an article this week purporting to offer new details on "Windows 8," or whatever Microsoft's next client operating system will be called, only to be perturbed at discovering at the end that you were the one being asked to supply the details. The expectation among many observers is that Windows 8 will be a lot like Windows 7, maybe something less than the great leap forward that a "point-oh" release typically implies.

But that doesn't mean there aren't folks at Microsoft (or at least, folks being funded by Microsoft) who are aware that such a significant advance may be necessary within the next few years. Granted, the fact that a project is being undertaken at Microsoft Research is no guarantee that anything that comes out of it will ever be put to use (case in point: HTTP-NG). On the other hand, to paraphrase a slogan formerly used by PBS, if Microsoft doesn't do it, who will?

A team of nine researchers led by the Swiss university ETH Zurich and the Cambridge, UK offices of Microsoft Research has launched a Web site to promote its ongoing effort to create a completely new, experimental operating system for super-multicore systems. Called Barrelfish (PDF available here), it isn't Windows at all; in fact, it's intentionally designed not to be Windows, at this point. In the interest of removing the structural complications brought about by decades of layering upon layering of fixes, add-ons, and workarounds, Barrelfish attempts to exploit a phenomenon of computers with large numbers of cores (say, more than eight) that doesn't manifest itself in smaller systems.

Up to now, multicore design from both AMD and Intel has focused on giving each core on a die access to shared memory; in fact, AMD has recently claimed advances in architectural quality over Intel in the way its advanced HyperTransport directs shared memory to individual cores. But even AMD has acknowledged that certain types of programs can run slower on multicore systems than on single-core systems, and has offered techniques for encouraging better parallelism in programming, with more multithreading, as its solution.

"This upheaval in hardware has important consequences for a monolithic OS that shares data structures across cores," the Barrelfish research team writes. "These systems perform a delicate balancing act between processor cache size, the likely contention and latency to access main memory, and the complexity and overheads of the cache-coherence protocol. The irony is that hardware is now changing faster than software, and the effort required to evolve such operating systems to perform well on new hardware is becoming prohibitive."

For shared memory to work effectively as CPU producers envision it, the Barrelfish team argues, the structural changes that software engineers would need to make would be too complex. And keeping them from achieving their goals in due course is the fact that hardware engineers' and software engineers' evolutionary plans are being executed at two different speeds, with the latter being the slower, perhaps by orders of magnitude.

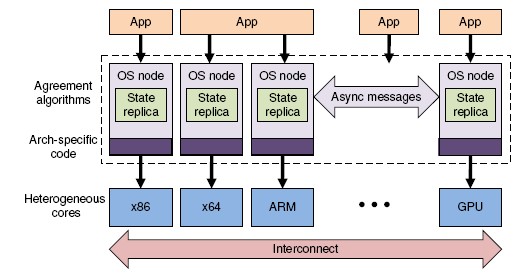

A state diagram of the configuration of the experimental Barrelfish OS, from the ETH Zurich / Microsoft Research white paper.

The Barrelfish solution, as proposed, is to replace the shared memory architecture of the present operating system with a new and simplified structure that would appear, at least on the surface, to be inefficient and non-conservative. It's a multikernel operating system structure where each core effectively has its own OS and its own exclusive memory. Everything each core needs to know about the state of the others is replicated, in a kind of broadcast-like system where all cores are getting the same picture. And everything each core needs to "tell" the others is exchanged by way of a messaging scheme.

As the team explains it, "Within a multikernel OS, all inter-core communication is performed using explicit messages. A corollary is that no memory is shared between the code running on each core, except for that used for messaging channels. As we have seen, using messages to access or update state rapidly becomes more efficient than shared memory access as the number of cache-lines involved increases. We can expect such effects to become more pronounced in the future. Note that this does not preclude applications sharing memory between cores, only that the OS design itself does not rely on it."
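The idea in that passage can be illustrated with a toy, single-threaded simulation. This is only a sketch under stated assumptions, not Barrelfish code: the `Core` class, its `inbox`, and the state keys are hypothetical names invented here, and the sketch ignores how conflicting updates from different cores would be ordered. Each simulated core keeps a private replica of the state and learns about changes only through messages.

```python
from collections import deque


class Core:
    """One simulated core: a private replica plus an inbox (hypothetical model)."""

    def __init__(self, core_id):
        self.core_id = core_id
        self.replica = {}      # private copy of OS state; never read by other cores
        self.inbox = deque()   # the only structure other cores may write to
        self.peers = []

    def update(self, key, value):
        """Apply a change locally, then notify every peer with an explicit message."""
        self.replica[key] = value
        for peer in self.peers:
            peer.inbox.append((key, value))

    def drain(self):
        """Apply every pending message to the local replica, in arrival order."""
        while self.inbox:
            key, value = self.inbox.popleft()
            self.replica[key] = value


def demo(num_cores=4):
    cores = [Core(i) for i in range(num_cores)]
    for core in cores:
        core.peers = [p for p in cores if p is not core]

    # Two different cores change two different pieces of state.
    cores[0].update("runnable_tasks", 3)
    cores[2].update("free_pages", 1024)

    # Each core processes its own inbox; no core touches another's replica.
    for core in cores:
        core.drain()
    return [core.replica for core in cores]


if __name__ == "__main__":
    for core_id, replica in enumerate(demo()):
        print(core_id, replica)
```

After every inbox is drained, all four replicas hold the same two entries, which is the "same picture" the article describes, reached without any core reading another core's memory directly.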

Single-core kernels, deployed en masse in a distributed scheme, the team argues, enable the architecture of the operating system to be more platform- and vendor-neutral than current models. An analogy might be all the different variants of billiards that have been created over the centuries, without there being a fundamental need to revise or re-architect billiard balls. If the kernel is small enough, then what makes a deployment unique would be the way it's multiplexed, thus making the deployment on a Sun SPARC processor-based system different from what it would be on an Intel Xeon-based system, without the kernel itself needing to be different at all.

Previous efforts at building experimental operating systems (including at Microsoft Research) have been stopped dead in their tracks, we've been told, by the fact that migration would be technically impossible -- moving an enterprise's applications onto the new platform would take more years of humanpower than the enterprise is willing to invest. But the onset of the age of virtualization may have already changed this state of affairs: Since more businesses are already becoming comfortable with running virtual Windows Servers, through Hyper-V or VMware or through Windows Server itself, there may not have to be any huge migration involved at all.

Conceptually, Barrelfish might not have to be the next Windows as much as "the next Hyper-V," enabling a new and more efficient platform for the execution of virtual Windows Server as its application. As the team acknowledges, virtual shared memory systems would be a key feature of Barrelfish, even though the operating system itself doesn't require them -- they're there for the sake of hypervisors, which could likely become such a system's principal application, were it ever to be released for sale.

"We view the OS as, first and foremost," the research team concludes, "a distributed system which may be amenable to local optimizations, rather than centralized system which must somehow be scaled to the network-like environment of a modern or future machine. By basing the OS design on replicated data, message-based communication between cores, and split-phase operations, we can apply a wealth of experience and knowledge from distributed systems and networking to the challenges posed by hardware trends."

Copyright Betanews, Inc. 2009

When you think of kids on the playground playing Star Trek make-believe, you see the guy who plays Scotty inevitably being shouted at to increase or decrease the power, and then the guy putting on his best (or worst) Scottish accent and complaining back to the captain about how it canna be done, she can't take this abuse much longer or we're all genna bloe! Powering up and powering down is the most common task that amateurs think of when they consider the role of an engineer running a big machine.

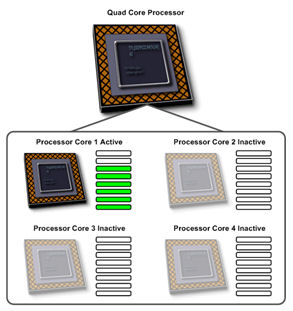

As an obscure, but obtainable, new piece of Microsoft documentation explains it, "The PPM engine chooses a minimum number of cores on which threads will be scheduled. Cores that are chosen to be 'parked' do not have any threads scheduled on them and they can drop into a lower power state. The remaining set of 'unparked' cores are responsible for the entirety of the workload (with the exception of affinitized work or directed interrupts). Core parking can increase power efficiency during lower usage periods on the server because parked cores can drop into a low-power state."
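To make the quoted description concrete, here is a minimal sketch of the parking decision. It is an illustration under loud assumptions, not Microsoft's PPM algorithm: the function names, the idea of expressing demand as a percentage of one core, and the 80 percent per-core target are all hypothetical. It simply picks the smallest number of cores that can carry the current load and marks the rest as parked.

```python
def cores_to_unpark(total_cores, demand_pct, target_pct=80):
    """Return how many cores stay unparked (hypothetical heuristic).

    demand_pct: total load as a percentage of ONE core (250 means 2.5 cores' worth).
    target_pct: assumed ceiling on how busy each unparked core should be.
    """
    if total_cores < 1 or target_pct < 1 or demand_pct < 0:
        raise ValueError("invalid arguments")
    needed = (demand_pct + target_pct - 1) // target_pct  # integer ceiling division
    return max(1, min(total_cores, needed))               # keep at least one core awake


def split_cores(total_cores, demand_pct, target_pct=80):
    """Return (unparked, parked) lists of core indices."""
    n = cores_to_unpark(total_cores, demand_pct, target_pct)
    return list(range(n)), list(range(n, total_cores))


if __name__ == "__main__":
    unparked, parked = split_cores(total_cores=8, demand_pct=250)
    print("unparked:", unparked)
    print("parked:  ", parked)
```

With eight cores and a load worth 2.5 cores, this sketch keeps four cores unparked and parks the other four. Affinitized work and directed interrupts, which the documentation notes as exceptions, are not modeled.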