By Scott M. Fulton, III, Betanews

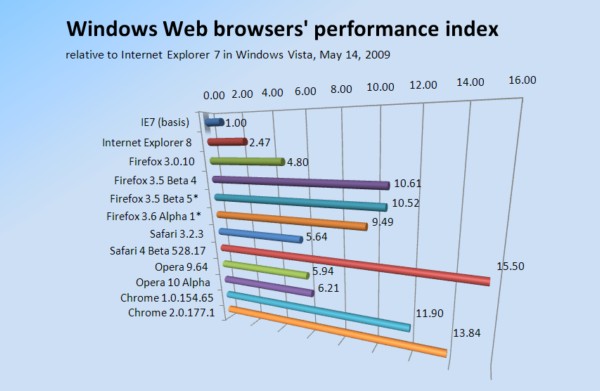

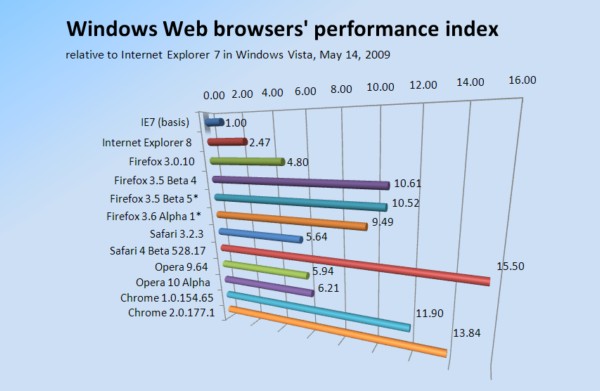

Earlier this week, Apple posted security updates for both the production and experimental versions of its Safari browser, on both Mac and Windows. But Betanews tests indicate that the company may have sneaked in a few performance improvements as well: the experimental browser posted its best index score yet, more than 15 times the performance of Internet Explorer 7 on the same system.

After some security updates to Windows Vista, Betanews performed a fresh round of browser performance tests on the latest production and experimental builds. The updates made our virtual test platform (see the notes on methodology below) a little faster overall. While many browsers appeared to benefit, including Firefox 3.5 Beta 4, the very latest Mozilla experimental builds in the post-3.5 Beta 4 tracks clearly did not. For the first time, we're including the latest production build of Apple Safari 3 in our tests (version 3.2.3, also patched this week), as well as Opera 9.64. Safari 4, however, posted better times than our test system's general acceleration alone would account for.

In our first tests last month of the Safari 4 public beta against the most recent edition of Google Chrome 2, we noted that Safari scored about 10% better than Chrome 2, Google's experimental build. Since then, there have been a few shakedowns of Chrome 2, and a few security updates to Vista.

Those Vista speed improvements, about 4% overall, were reflected in our latest IE8 and Firefox 3.5 Beta 4 test scores. After applying the latest updates, we saw IE8 performance improve immediately, by almost 13% over last month, to 2.47: in other words, about 2.47 times the performance of Internet Explorer 7 on the same platform. While Google Chrome posted better numbers this month than last, neither version may have benefited much from the Vista speed boost: Chrome 1 rose 2.4% over last month to 11.9, while Chrome 2 fared better, improving almost 6% to 13.84.
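The index arithmetic in this paragraph is easy to get backwards, so here is a minimal sketch of it. It assumes, as the article implies, that the index is a simple ratio in which Internet Explorer 7 on the same platform scores 1.0; the function names are hypothetical, and the real index combines four benchmarks in a way not detailed here.

```python
# A minimal sketch of reading Betanews-style index scores, assuming the index
# is a plain ratio in which Internet Explorer 7 on the same platform = 1.0.
# Function names are hypothetical, not part of any Betanews tool.


def percent_faster_than_ie7(index: float) -> float:
    """Percent improvement over IE7: an index of 2.0 means 100% faster."""
    return (index - 1.0) * 100.0


def percent_change(old: float, new: float) -> float:
    """Month-over-month change between two index scores, in percent."""
    return (new - old) / old * 100.0


def implied_previous(new: float, pct_gain: float) -> float:
    """Back out last month's score from this month's score and its reported gain."""
    return new / (1.0 + pct_gain / 100.0)


if __name__ == "__main__":
    # IE8's index of 2.47 is 2.47x IE7's performance, i.e. about 147% faster.
    print(f"IE8: {percent_faster_than_ie7(2.47):.0f}% faster than IE7")
    # Chrome 1's reported 2.4% gain to 11.9 implies roughly 11.62 last month
    # (approximate, since the reported gain is itself rounded).
    print(f"Chrome 1 last month: about {implied_previous(11.9, 2.4):.2f}")
```

The distinction matters because an index of 2.47 is 147% better than IE7, not 247% better; "247%" describes the score as a share of IE7's performance.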

Safari 4's speed gains were closer to 8% over last month, with a record index score of 15.5 in our latest test. Meanwhile, the latest developmental builds of Firefox, the not-yet-public 3.5 Beta 5 ("Shiretoko" track) and 3.6 Alpha 1 ("Minefield" track), were both noticeably slower than even 3.5 Beta 4. This was a head-scratcher, so we repeated the test four times, resetting the conditions each time (which is why the report I'd planned for yesterday ended up being posted today), and the results were confirmed each time.

We started fresh with Opera, testing both its production and preview builds for the first time. Opera 9.64 put in a score very comparable to Safari 3.2.3's, at 5.94 versus 5.64, respectively. But our latest download of the Opera 10 preview kicked performance up more than a notch, with a nice 15.4% improvement over last month, to 6.21.

A word on methodology

I've gotten a number of comments and concerns regarding the way our recent series of browser performance tests is conducted, many of them valid and even important. For the majority of these tests, I use a Virtual PC 2007 VM running Windows Vista Ultimate. Most notably, I've received questions about why I use virtual machines in timed tests at all, given their track record of variable performance in their own right.

The key reason I began using VMs was so that I could maintain a kind of white-box environment: an operating system running nothing but the applications being tested. In such an environment, there are no anti-malware, antivirus, or firewall apps to slow the system down or to add another variable to applications' performance. I can also keep a clean, almost blank environment as a backup, should I ever install something that compromises the Registry or makes relative performance harder to judge.

That said, even though using VMs gives me that convenience, there is a tradeoff: I have to make certain that any new tests are conducted in a host environment that is as unimpeded and functional as it was for previous tests. After our last article on this subject, I received a good comment from a reader who administers VMs and who said, from personal experience, that their performance is unreliable. With my rather simplified VM environment (nothing close to a virtual data center), I can report the following from my own observations: if the Windows XP SP3 host hasn't been running other VMs and isn't experiencing difficulties or overloaded apps, then Virtual PC 2007 will typically run VMs with astoundingly consistent performance. I make sure of this by rerunning a test I've already conducted on a prior day (for instance, with Firefox 3.5 Beta 4). If the final index score changes by only a few hundredths of a point, then I'm okay with going ahead and testing new browser builds.

I should also point out that running one browser, even after exiting it, often leaves other browsers slower, in both virtual and physical environments. This is especially noticeable with Apple Safari 3 and 4: even after exiting either one on any computer I've used, including physical ones, Firefox and IE8 are both very noticeably slower, as is everything else in Windows. My tests show Firefox 3 and 3.5 Beta 4 JavaScript performance can be slowed down by around 300%. For that reason, after conducting a Safari test, I must reboot the VM before trying any other browser.
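The reboot rule above amounts to a simple scheduling constraint on a test session. The sketch below is hypothetical: the function name and step strings are illustrative, and this is not Betanews' actual test harness.

```python
# A minimal sketch of the session rule described above: after any Safari run,
# the VM is rebooted before another browser is tested. Names and step strings
# are illustrative only, not a real Betanews tool.


def plan_session(browsers: list[str]) -> list[str]:
    """Return the ordered steps for one test session, inserting VM reboots."""
    steps: list[str] = []
    for position, browser in enumerate(browsers):
        steps.append(f"test {browser}")
        is_last = position == len(browsers) - 1
        # Safari 3 and 4 leave the rest of Windows slower even after exiting,
        # so the next browser must start from a freshly rebooted VM.
        if browser.startswith("Safari") and not is_last:
            steps.append("reboot VM")
    return steps


if __name__ == "__main__":
    for step in plan_session(["Firefox 3.5 Beta 4", "Safari 4", "IE8"]):
        print(step)
```

For that three-browser session, the plan is: test Firefox 3.5 Beta 4, test Safari 4, reboot the VM, then test IE8.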

When VM performance does change on my system, it changes either drastically or hardly at all. If the index score on a retrial isn't off by a few hundredths of a point, then it's off by as much as three points; it's never in between. In that case, I shut down the VM, reboot XP, and start over with another retrial. Every time I've done this, without exception (and we're well into the triple digits now), the retrial goes back to that few-hundredths-of-a-point shift.
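The retrial check described in the last two paragraphs reduces to a threshold test. The sketch below is illustrative: the 0.05 tolerance is an assumption standing in for "a few hundredths of a point," and the names `check_retrial` and `Verdict` are hypothetical.

```python
# A minimal sketch of the retrial check described above. TOLERANCE is an
# assumption: the article says only "a few hundredths of a point", so 0.05
# stands in for that; it is not a published Betanews threshold.
from enum import Enum

TOLERANCE = 0.05  # hypothetical cutoff for "a few hundredths of a point"


class Verdict(Enum):
    PROCEED = "go ahead with testing new browser builds"
    RESTART = "shut down the VM, reboot the XP host, and run another retrial"


def check_retrial(reference_score: float, retrial_score: float,
                  tolerance: float = TOLERANCE) -> Verdict:
    """Compare a rerun of an earlier test with the score it got originally."""
    drift = abs(retrial_score - reference_score)
    # Per the article, drift is either tiny or as large as about three points,
    # so any threshold between those two regimes separates them cleanly.
    return Verdict.PROCEED if drift <= tolerance else Verdict.RESTART


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    print(check_retrial(5.00, 5.02).value)
    print(check_retrial(5.00, 2.10).value)
```

Because the observed drift is bimodal, the exact tolerance matters little; anything comfortably above a few hundredths and well below three points gives the same verdicts.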

Therefore I can say with confidence that I stand behind the results I've reported here in Betanews, which after all concern only the browsers' performance relative to one another. If I were testing them on a faster system or on a bare-bones physical machine, I'd expect the relative index numbers to be the same. Think of it like geometry: scale a triangle up or down as much as you like, and its angles stay the same, still adding up to 180 degrees, while its sides keep the same proportions to one another.
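The triangle analogy can be checked numerically under one stated assumption: that a faster machine shortens every browser's times by the same factor. The sketch below also assumes a single timed test indexed as IE7's time divided by the browser's time; the timings are hypothetical placeholders, not Betanews measurements, and the function names are illustrative.

```python
# A minimal sketch of the "similar triangles" argument. It assumes a single
# timed test indexed as (IE7 time / browser time), and that moving to a faster
# machine multiplies every browser's time by the same factor. Timings are
# hypothetical placeholders, not Betanews measurements.


def relative_index(times: dict[str, float], baseline: str) -> dict[str, float]:
    """Index each browser against the baseline; higher means faster."""
    base = times[baseline]
    return {name: base / t for name, t in times.items()}


def scale_times(times: dict[str, float], factor: float) -> dict[str, float]:
    """Model a uniformly faster (factor < 1) or slower (factor > 1) machine."""
    return {name: t * factor for name, t in times.items()}


if __name__ == "__main__":
    vm = {"IE7": 10.0, "Browser A": 2.0, "Browser B": 0.8}  # hypothetical seconds
    physical = scale_times(vm, 0.5)  # every browser twice as fast
    print(relative_index(vm, "IE7"))
    print(relative_index(physical, "IE7"))  # same ratios as the VM run
```

The common factor cancels in each ratio, which is the whole argument. The caveat is the assumption itself: if a physical machine sped some browsers up more than others, the ratios would shift.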

Now, all that having been said, for the sake of reader confidence alone, there are benefits to be gained from testing on a physical machine. You need to trust the numbers I'm giving you, and if I can do more to facilitate that, I should. For that reason, I will very soon be moving our browser tests to a new physical platform (I've already ordered the parts for it). At that point, I plan to restart the indexes from scratch with fresh numbers.

Next, there's another important question to address, concerning one of the four tests in our suite: the HowToCreate.uk rendering benchmark, which also tests load times. On that benchmark, Safari and Chrome both put in amazing scores. But long-time Safari users have reported that there may be an unfair reason for that: Safari, they say, fires the onLoad JavaScript event at the wrong time. I've encountered such problems many times before, especially in the late 1980s and early '90s, when testing basic compilers whose form redraw events had the very same issues.

This time, the creators of the very test we chose called the issue into question, so we decided to take the matter seriously. HowToCreate.uk's engineers developed a small patch to their page which, they said, forces the onLoad event to fire at the proper point, more in line with other browsers. I applied that patch and noticed a big difference: while Safari 4 still appeared faster than its competition at loading pages, it wasn't ten times as fast, but more like three times. That's a significant difference, enough for me to adjust the test itself to reflect it. For fairness, I applied the adjusted test to all the other browsers, and noticed a smaller difference in Google Chrome 1 and 2, and a negligible difference in Firefox and IE. Our current round of index numbers reflects this adjustment.

Copyright Betanews, Inc. 2009
